# create api-key

Source: https://docs.vast.ai/api-reference/accounts/create-api-key

api-reference/openapi.json post /api/v0/auth/apikeys/

Creates a new API key with specified permissions for the authenticated user.

CLI Usage: `vastai create api-key --name <name> --permission_file <permissions_file> [--key_params <params>]`

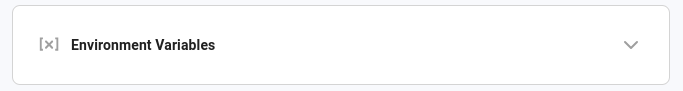

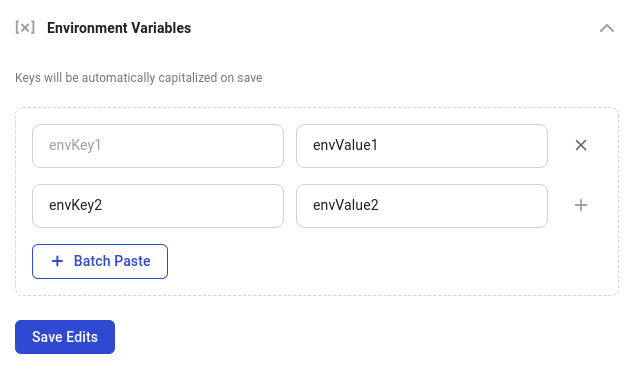

# create env-var

Source: https://docs.vast.ai/api-reference/accounts/create-env-var

api-reference/openapi.json post /api/v0/secrets/

Creates a new encrypted environment variable for the authenticated user.

Keys are automatically converted to uppercase. Values are encrypted before storage.

There is a limit on the total number of environment variables per user.

CLI Usage: `vastai create env-var <key> <value>`
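
As a rough illustration, the Python sketch below builds (but does not send) the corresponding request using only the standard library. The host `https://console.vast.ai`, Bearer-token authentication, and the JSON field names `key` and `value` are assumptions that this page does not state, so check them against `api-reference/openapi.json`. The key is uppercased locally only so that client-side records match what the server stores.

```python
import json
import urllib.request

# Assumptions (not stated on this page): API host and Bearer-token auth.
BASE_URL = "https://console.vast.ai"


def create_env_var_request(api_key: str, key: str, value: str) -> urllib.request.Request:
    """Build a POST /api/v0/secrets/ request without sending it."""
    if not key:
        raise ValueError("key must be non-empty")
    # The server uppercases keys itself; mirroring that here keeps local
    # bookkeeping consistent with what is actually stored.
    # The field names "key" and "value" are assumed, not confirmed.
    body = {"key": key.upper(), "value": value}
    req = urllib.request.Request(
        BASE_URL + "/api/v0/secrets/",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
    )
    req.add_header("Authorization", f"Bearer {api_key}")
    req.add_header("Content-Type", "application/json")
    return req


if __name__ == "__main__":
    req = create_env_var_request("YOUR_API_KEY", "hf_token", "secret-value")
    print(req.get_method(), req.full_url, json.loads(req.data))
    # To actually send it: urllib.request.urlopen(req)
```

Keeping request construction separate from sending makes the request easy to inspect or log before it touches your account.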


# create ssh-key

Source: https://docs.vast.ai/api-reference/accounts/create-ssh-key

api-reference/openapi.json post /api/v0/ssh/

Creates a new SSH key and associates it with your account.

The key will be automatically added to all your current instances.

CLI Usage: `vastai create ssh-key <ssh_key>`
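
Because the key is pushed to every current instance, it can be worth rejecting a malformed key before calling the endpoint. The sketch below checks the usual OpenSSH public-key line (`<type> <base64 blob> [comment]`), whose decoded blob begins with a length-prefixed copy of the key type, and then builds the request. The `ssh_key` body field, the host, and Bearer auth are assumptions.

```python
import base64
import binascii
import json
import struct
import urllib.request

BASE_URL = "https://console.vast.ai"  # assumed API host


def validate_public_key(line: str) -> str:
    """Return the stripped key line, or raise ValueError if it is malformed."""
    parts = line.strip().split()
    if len(parts) < 2:
        raise ValueError("expected '<type> <base64-blob> [comment]'")
    key_type, blob_b64 = parts[0], parts[1]
    try:
        blob = base64.b64decode(blob_b64, validate=True)
    except binascii.Error as exc:
        raise ValueError("key blob is not valid base64") from exc
    if len(blob) < 4:
        raise ValueError("key blob is too short")
    # The blob starts with a big-endian uint32 length followed by the type name.
    (name_len,) = struct.unpack(">I", blob[:4])
    embedded = blob[4:4 + name_len].decode("ascii", errors="replace")
    if embedded != key_type:
        raise ValueError(f"type mismatch: {key_type!r} vs embedded {embedded!r}")
    return line.strip()


def create_ssh_key_request(api_key: str, public_key: str) -> urllib.request.Request:
    """Build (not send) POST /api/v0/ssh/; the "ssh_key" field name is assumed."""
    body = {"ssh_key": validate_public_key(public_key)}
    req = urllib.request.Request(
        BASE_URL + "/api/v0/ssh/",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
    )
    req.add_header("Authorization", f"Bearer {api_key}")
    req.add_header("Content-Type", "application/json")
    return req
```

Typical use is to pass the contents of a `.pub` file, for example `~/.ssh/id_ed25519.pub`, straight into `create_ssh_key_request`.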


# create subaccount

Source: https://docs.vast.ai/api-reference/accounts/create-subaccount

api-reference/openapi.json post /api/v0/users/

Creates either a standalone user account or a subaccount under a parent account. Subaccounts can be restricted to host-only functionality.

CLI Usage: `vastai create subaccount --email <email> --username <username> --password <password> [--type host]`

# delete api key

Source: https://docs.vast.ai/api-reference/accounts/delete-api-key

api-reference/openapi.json delete /api/v0/auth/apikeys/{id}/

Deletes an existing API key belonging to the authenticated user.

The API key is soft-deleted by setting a `deleted_at` timestamp.

CLI Usage: `vastai delete api-key <id>`

# delete env var

Source: https://docs.vast.ai/api-reference/accounts/delete-env-var

api-reference/openapi.json delete /api/v0/secrets/

Deletes an environment variable associated with the authenticated user.

The variable must exist and belong to the requesting user.

CLI Usage: `vastai delete env-var <name>`

# delete ssh key

Source: https://docs.vast.ai/api-reference/accounts/delete-ssh-key

api-reference/openapi.json delete /api/v0/ssh/{id}/

Removes an SSH key from the authenticated user's account.

CLI Usage: `vastai delete ssh-key <id>`

# set user

Source: https://docs.vast.ai/api-reference/accounts/set-user

api-reference/openapi.json put /api/v0/users/

Updates the user data for the authenticated user.

CLI Usage: `vastai set user --file <file_path>`

# show api keys

Source: https://docs.vast.ai/api-reference/accounts/show-api-keys

api-reference/openapi.json get /api/v0/auth/apikeys/

Retrieves all API keys associated with the authenticated user.

CLI Usage: `vastai show api-keys`

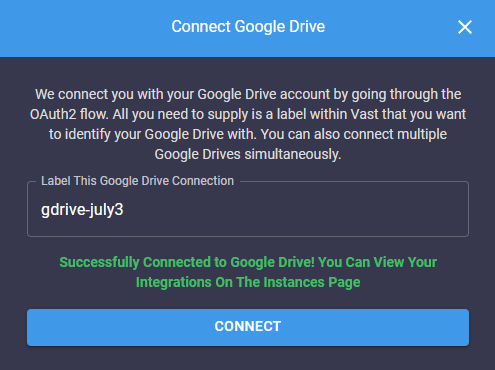

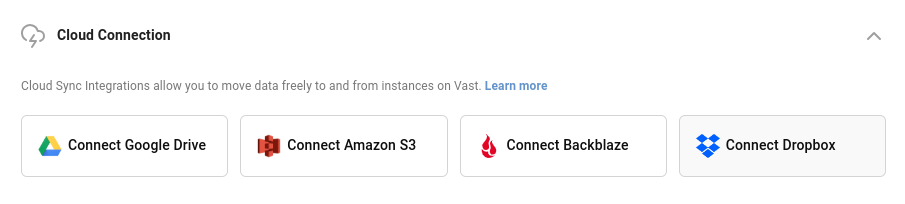

# show connections

Source: https://docs.vast.ai/api-reference/accounts/show-connections

api-reference/openapi.json get /api/v0/users/cloud_integrations/

Retrieves the list of cloud connections associated with the authenticated user.

CLI Usage: `vastai show connections`

# show env vars

Source: https://docs.vast.ai/api-reference/accounts/show-env-vars

api-reference/openapi.json get /api/v0/secrets/

Retrieve a list of environment variables (secrets) for the authenticated user.

CLI Usage: `vastai show env-vars [-s]`

# show ipaddrs

Source: https://docs.vast.ai/api-reference/accounts/show-ipaddrs

api-reference/openapi.json get /api/v0/users/{user_id}/ipaddrs/

This endpoint retrieves the history of IP address accesses for the authenticated user.

CLI Usage: `vastai show ipaddrs`

# show ssh keys

Source: https://docs.vast.ai/api-reference/accounts/show-ssh-keys

api-reference/openapi.json get /api/v0/ssh/

Retrieve a list of SSH keys associated with the authenticated user's account.

CLI Usage: `vastai show ssh-keys`

# show subaccounts

Source: https://docs.vast.ai/api-reference/accounts/show-subaccounts

api-reference/openapi.json get /api/v0/subaccounts/

Retrieve a list of subaccounts associated with the authenticated user's account.

CLI Usage: `vastai show subaccounts`

# show team role

Source: https://docs.vast.ai/api-reference/accounts/show-team-role

api-reference/openapi.json get /api/v0/team/roles/{id}/

Retrieve details of a specific team role by its name.

CLI Usage: `vastai show team-role <name>`

# show user

Source: https://docs.vast.ai/api-reference/accounts/show-user

api-reference/openapi.json get /api/v0/users/current/

Retrieve information about the current authenticated user, excluding the API key.

CLI Usage: `vastai show user`

# transfer credit

Source: https://docs.vast.ai/api-reference/accounts/transfer-credit

api-reference/openapi.json put /api/v0/commands/transfer_credit/

Transfers the specified amount of credit from the authenticated user's account to another user's account.

The recipient can be specified by either email address or user ID.

CLI Usage: `vastai transfer credit <recipient_email> <amount>`
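
Since the recipient may be either an email address or a user ID, a client can check which form it has before sending. The sketch below does that and builds the request; the `recipient` and `amount` body fields, the host, and Bearer auth are assumptions rather than documented facts.

```python
import json
import urllib.request
from typing import Union

BASE_URL = "https://console.vast.ai"  # assumed API host


def transfer_credit_request(
    api_key: str, recipient: Union[str, int], amount: float
) -> urllib.request.Request:
    """Build (not send) PUT /api/v0/commands/transfer_credit/.

    Body field names are illustrative assumptions.
    """
    if amount <= 0:
        raise ValueError("amount must be positive")
    # bool is a subclass of int, so reject it explicitly.
    if isinstance(recipient, bool) or not isinstance(recipient, (str, int)):
        raise TypeError("recipient must be an email address or an integer user ID")
    if isinstance(recipient, str) and "@" not in recipient:
        raise ValueError("string recipients are expected to be email addresses")
    body = {"recipient": recipient, "amount": amount}
    req = urllib.request.Request(
        BASE_URL + "/api/v0/commands/transfer_credit/",
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
    )
    req.add_header("Authorization", f"Bearer {api_key}")
    req.add_header("Content-Type", "application/json")
    return req
```

Credit transfers move money between accounts, so validating inputs locally is a cheap guard against typos before the request is sent.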


# update env var

Source: https://docs.vast.ai/api-reference/accounts/update-env-var

api-reference/openapi.json put /api/v0/secrets/

Updates the value of an existing environment variable for the authenticated user.

CLI Usage: `vastai update env-var <key> <value>`

# update ssh key

Source: https://docs.vast.ai/api-reference/accounts/update-ssh-key

api-reference/openapi.json put /api/v0/ssh/{id}/

Updates the specified SSH key with the provided value.

CLI Usage: `vastai update ssh-key <id> <ssh_key>`

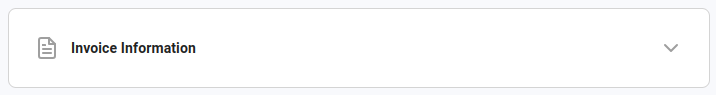

# search invoices

Source: https://docs.vast.ai/api-reference/billing/search-invoices

api-reference/openapi.json get /api/v0/invoices

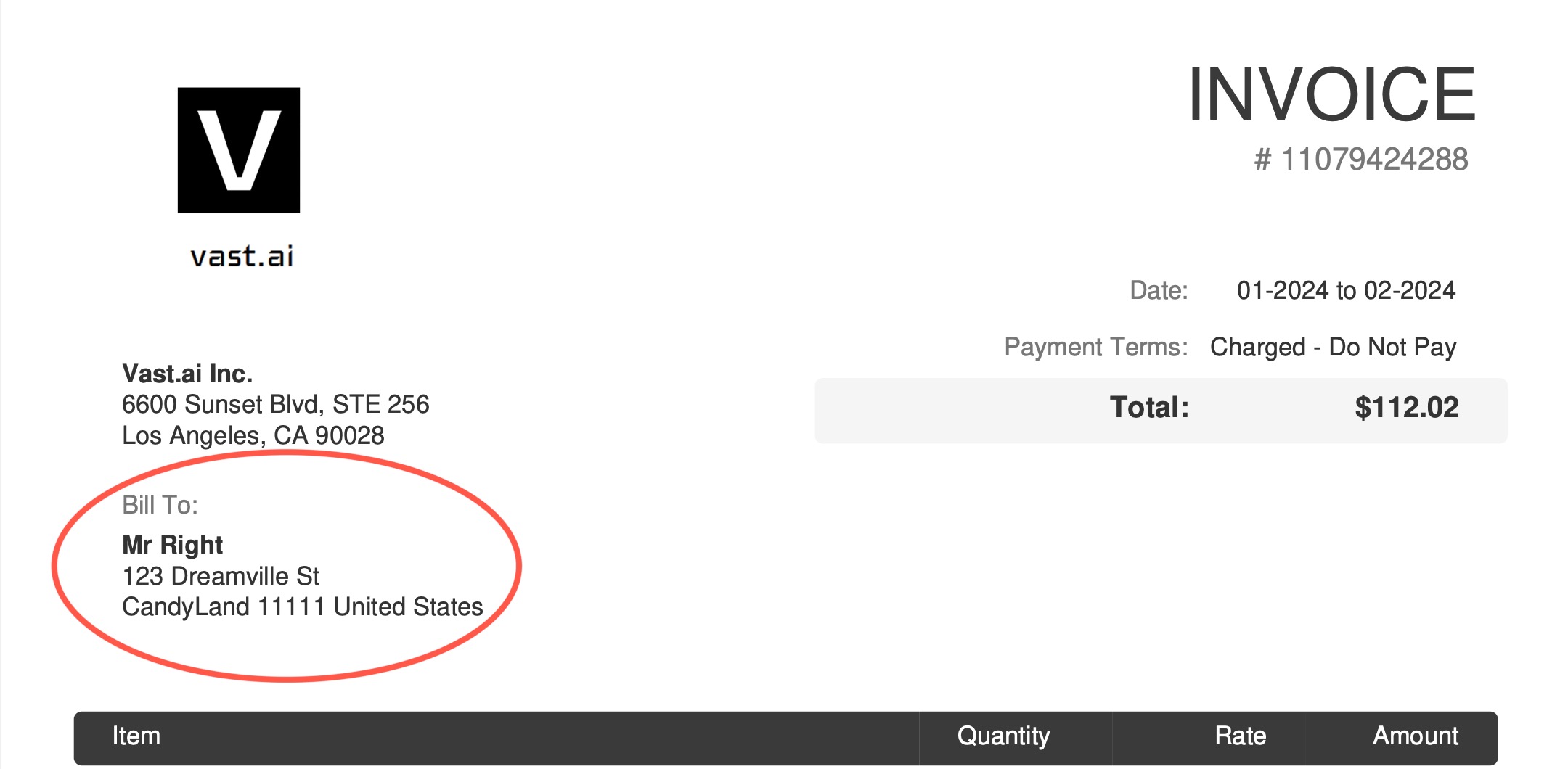

This endpoint allows users to search and retrieve invoices based on specified filters.

CLI Usage: `vastai search invoices`

# show deposit

Source: https://docs.vast.ai/api-reference/billing/show-deposit

api-reference/openapi.json get /api/v0/instances/balance/{id}/

Retrieves the deposit details for a specified instance.

CLI Usage: `vastai show deposit <id>`

# show earnings

Source: https://docs.vast.ai/api-reference/billing/show-earnings

api-reference/openapi.json get /api/v0/users/{user_id}/machine-earnings/

Retrieves the earnings history for a specified time range and optionally per machine.

CLI Usage: `vastai show earnings [options]`

# show invoices

Source: https://docs.vast.ai/api-reference/billing/show-invoices

api-reference/openapi.json get /api/v0/users/{user_id}/invoices/

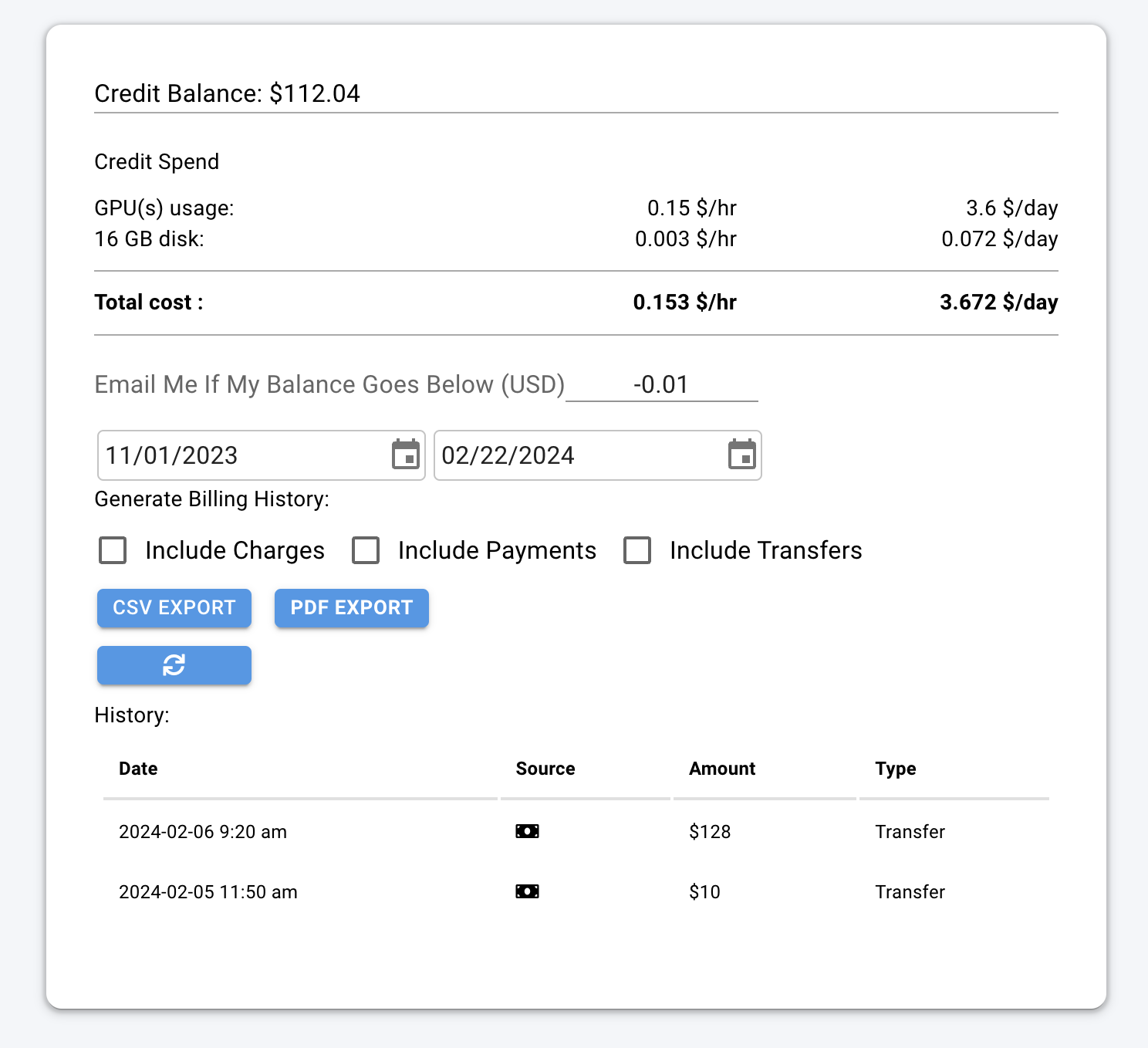

This endpoint retrieves billing history reports for the authenticated user, including charges and credits.

CLI Usage: `vastai show invoices`

# attach ssh-key

Source: https://docs.vast.ai/api-reference/instances/attach-ssh-key

api-reference/openapi.json post /api/v0/instances/{id}/ssh/

Attaches an SSH key to the specified instance, allowing SSH access using the provided key.

CLI Usage: `vastai attach ssh <instance_id> <ssh_key>`

# cancel copy

Source: https://docs.vast.ai/api-reference/instances/cancel-copy

api-reference/openapi.json delete /api/v0/commands/copy_direct/

Cancel a remote copy operation specified by the destination ID (`dst_id`).

CLI Usage: `vastai cancel copy --dst_id <destination_id>`

# cancel sync

Source: https://docs.vast.ai/api-reference/instances/cancel-sync

api-reference/openapi.json delete /api/v0/commands/rclone/

Cancels an in-progress remote sync operation identified by the destination instance ID.

This operation cannot be resumed once canceled and must be restarted if needed.

CLI Usage: `vastai cancel sync --dst_id <destination_id>`

# change bid

Source: https://docs.vast.ai/api-reference/instances/change-bid

api-reference/openapi.json put /api/v0/instances/bid_price/{id}/

Change the current bid price of an instance to a specified price.

CLI Usage: `vastai change bid <id> --price <price>`

# cloud copy

Source: https://docs.vast.ai/api-reference/instances/cloud-copy

api-reference/openapi.json post /api/v0/commands/rclone/

Starts a cloud copy operation by sending a command to the remote server. The operation can transfer data between an instance and a cloud service.

CLI Usage: `vastai cloud copy <instance_id> <src> <dst> [options]`

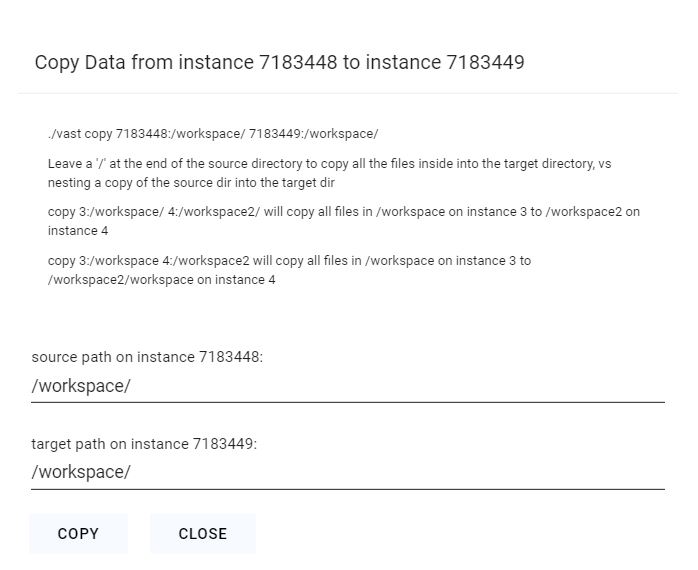

# copy

Source: https://docs.vast.ai/api-reference/instances/copy

api-reference/openapi.json put /api/v0/commands/copy_direct/

Initiate a remote copy operation to transfer data from one instance to another or between an instance and the local machine.

CLI Usage: `vastai copy <src_id> <dst_id> <src_path> <dst_path>`

# create instance

Source: https://docs.vast.ai/api-reference/instances/create-instance

api-reference/openapi.json put /api/v0/asks/{id}/

Creates a new instance by accepting an "ask" contract from a provider.

This is the main endpoint for launching new instances on Vast.ai.

CLI Usage: `vastai create instance <offer_id> [options]`
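
The `{id}` in the path is the offer ID, typically taken from an offer search. Below is a minimal sketch that assumes the host, Bearer auth, and the body fields `image`, `disk` (in GB), and `label`. These names are illustrative, and the OpenAPI schema is the authority on the real field list.

```python
import json
import urllib.request
from typing import Optional

BASE_URL = "https://console.vast.ai"  # assumed API host


def create_instance_request(
    api_key: str,
    offer_id: int,
    image: str,
    disk_gb: float,
    label: Optional[str] = None,
) -> urllib.request.Request:
    """Build (not send) PUT /api/v0/asks/{id}/ to accept an offer.

    Body field names here are illustrative assumptions.
    """
    if disk_gb <= 0:
        raise ValueError("disk_gb must be positive")
    body = {"image": image, "disk": disk_gb}
    if label is not None:
        body["label"] = label
    req = urllib.request.Request(
        f"{BASE_URL}/api/v0/asks/{offer_id}/",
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
    )
    req.add_header("Authorization", f"Bearer {api_key}")
    req.add_header("Content-Type", "application/json")
    return req
```

Optional fields are added only when set, so the server applies its own defaults for anything the caller omits.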


# destroy instance

Source: https://docs.vast.ai/api-reference/instances/destroy-instance

api-reference/openapi.json delete /api/v0/instances/{id}/

Permanently destroys (deletes) an instance. This is irreversible and deletes all of the instance's data.

CLI Usage: `vastai destroy instance <id>`

# detach ssh-key

Source: https://docs.vast.ai/api-reference/instances/detach-ssh-key

api-reference/openapi.json delete /api/v0/instances/{id}/ssh/{key}/

Detaches an SSH key from a specified instance, removing SSH access for that key.

CLI Usage: `vastai detach <instance_id> <ssh_key_id>`

# execute

Source: https://docs.vast.ai/api-reference/instances/execute

api-reference/openapi.json put /api/v0/instances/command/{id}/

Executes a constrained remote command on a specified instance.

The command output can be retrieved from the returned result URL.

CLI Usage: `vastai execute <instance_id> <command>`
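
Because the output arrives through a result URL rather than in the response itself, a client usually submits the command and then polls that URL. The sketch assumes the request body field `command`, a `result_url` key in the JSON response, and that the URL answers with a 4xx status until the output is ready. The fetch function is injectable so the polling logic can be tested without network access.

```python
import json
import time
import urllib.error
import urllib.request
from typing import Callable, Optional

BASE_URL = "https://console.vast.ai"  # assumed API host


def execute_request(api_key: str, instance_id: int, command: str) -> urllib.request.Request:
    """Build (not send) PUT /api/v0/instances/command/{id}/ ("command" field assumed)."""
    req = urllib.request.Request(
        f"{BASE_URL}/api/v0/instances/command/{instance_id}/",
        data=json.dumps({"command": command}).encode("utf-8"),
        method="PUT",
    )
    req.add_header("Authorization", f"Bearer {api_key}")
    req.add_header("Content-Type", "application/json")
    return req


def http_fetch(url: str) -> Optional[str]:
    """Return the body at url, or None while it is not available (assumed 4xx)."""
    try:
        with urllib.request.urlopen(url) as resp:
            return resp.read().decode("utf-8")
    except urllib.error.HTTPError as err:
        if 400 <= err.code < 500:
            return None
        raise


def wait_for_output(
    result_url: str,
    fetch: Callable[[str], Optional[str]] = http_fetch,
    attempts: int = 30,
    delay_s: float = 2.0,
) -> str:
    """Poll result_url until fetch returns text (possibly empty) or attempts run out."""
    for _ in range(attempts):
        text = fetch(result_url)
        if text is not None:
            return text
        time.sleep(delay_s)
    raise TimeoutError(f"no output at {result_url} after {attempts} attempts")
```

Usage, under the same assumptions: `resp = json.load(urllib.request.urlopen(execute_request(key, 123, "ls -l")))`, then `print(wait_for_output(resp["result_url"]))`.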


# manage instance

Source: https://docs.vast.ai/api-reference/instances/manage-instance

api-reference/openapi.json put /api/v0/instances/{id}/

Manage instance state and labels. The operation is determined by the request body parameters.

CLI Usage:

- To stop: `vastai stop instance <id>`
- To start: `vastai start instance <id>`
- To label: `vastai label instance <id> <label>`
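
Because one endpoint handles all three operations, the request body alone selects the action. The sketch assumes the bodies `{"state": "stopped"}`, `{"state": "running"}`, and `{"label": ...}`, plus the host and Bearer auth. These field names and values are assumptions inferred from the operations listed above, not confirmed by this page.

```python
import json
import urllib.request
from typing import Optional

BASE_URL = "https://console.vast.ai"  # assumed API host


def manage_body(action: str, label: Optional[str] = None) -> dict:
    """Map a CLI-style action to a request body (field names are assumptions)."""
    if action == "stop":
        return {"state": "stopped"}
    if action == "start":
        return {"state": "running"}
    if action == "label":
        if not label:
            raise ValueError("the label action needs a non-empty label")
        return {"label": label}
    raise ValueError(f"unknown action: {action!r}")


def manage_instance_request(api_key: str, instance_id: int, action: str,
                            label: Optional[str] = None) -> urllib.request.Request:
    """Build (not send) PUT /api/v0/instances/{id}/ for the chosen action."""
    req = urllib.request.Request(
        f"{BASE_URL}/api/v0/instances/{instance_id}/",
        data=json.dumps(manage_body(action, label)).encode("utf-8"),
        method="PUT",
    )
    req.add_header("Authorization", f"Bearer {api_key}")
    req.add_header("Content-Type", "application/json")
    return req
```

Keeping the action-to-body mapping in one function makes it the only place to adjust if the real field names differ.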


# prepay instance

Source: https://docs.vast.ai/api-reference/instances/prepay-instance

api-reference/openapi.json put /api/v0/instances/prepay/{id}/

Deposit credits into a reserved instance to receive usage discounts.

The discount rate is calculated based on how many months of usage the prepaid amount covers. The maximum discount is typically 40%.

CLI Usage: `vastai prepay instance <id> <amount>`
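
Because the discount depends on how many months of usage the deposit covers, it helps to estimate that number before prepaying. The sketch assumes an average month of 730 hours (24 * 365 / 12) and a constant hourly cost. This page does not give the mapping from months to a discount rate, so the code stops at the coverage estimate. The `amount` body field, host, and Bearer auth are also assumptions.

```python
import json
import urllib.request

BASE_URL = "https://console.vast.ai"  # assumed API host
HOURS_PER_MONTH = 24 * 365 / 12  # 730.0 hours; an averaging assumption


def months_covered(amount: float, dollars_per_hour: float) -> float:
    """Months of continuous use that `amount` pays for at a fixed hourly cost."""
    if dollars_per_hour <= 0:
        raise ValueError("dollars_per_hour must be positive")
    return amount / (dollars_per_hour * HOURS_PER_MONTH)


def prepay_request(api_key: str, instance_id: int, amount: float) -> urllib.request.Request:
    """Build (not send) PUT /api/v0/instances/prepay/{id}/ ("amount" field assumed)."""
    if amount <= 0:
        raise ValueError("amount must be positive")
    req = urllib.request.Request(
        f"{BASE_URL}/api/v0/instances/prepay/{instance_id}/",
        data=json.dumps({"amount": amount}).encode("utf-8"),
        method="PUT",
    )
    req.add_header("Authorization", f"Bearer {api_key}")
    req.add_header("Content-Type", "application/json")
    return req
```

For example, prepaying $730 on an instance that costs $0.50/hour covers 730 / (0.50 * 730) = 2.0 months of continuous use.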


# reboot instance

Source: https://docs.vast.ai/api-reference/instances/reboot-instance

api-reference/openapi.json put /api/v0/instances/reboot/{id}/

Stops and starts a container without losing GPU priority. Updates container status to 'rebooting' and executes docker stop/start commands on the host machine.

CLI Usage: `vastai reboot instance <id>`

# recycle instance

Source: https://docs.vast.ai/api-reference/instances/recycle-instance

api-reference/openapi.json put /api/v0/instances/recycle/{id}/

Destroys and recreates the container in place (from a newly pulled image) without losing GPU priority.

Updates the container status to 'recycling' and executes docker stop/remove commands on the host machine.

CLI Usage: `vastai recycle instance <id>`

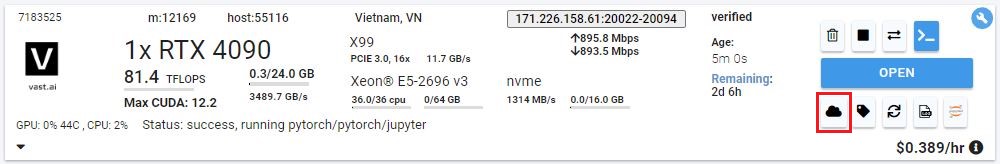

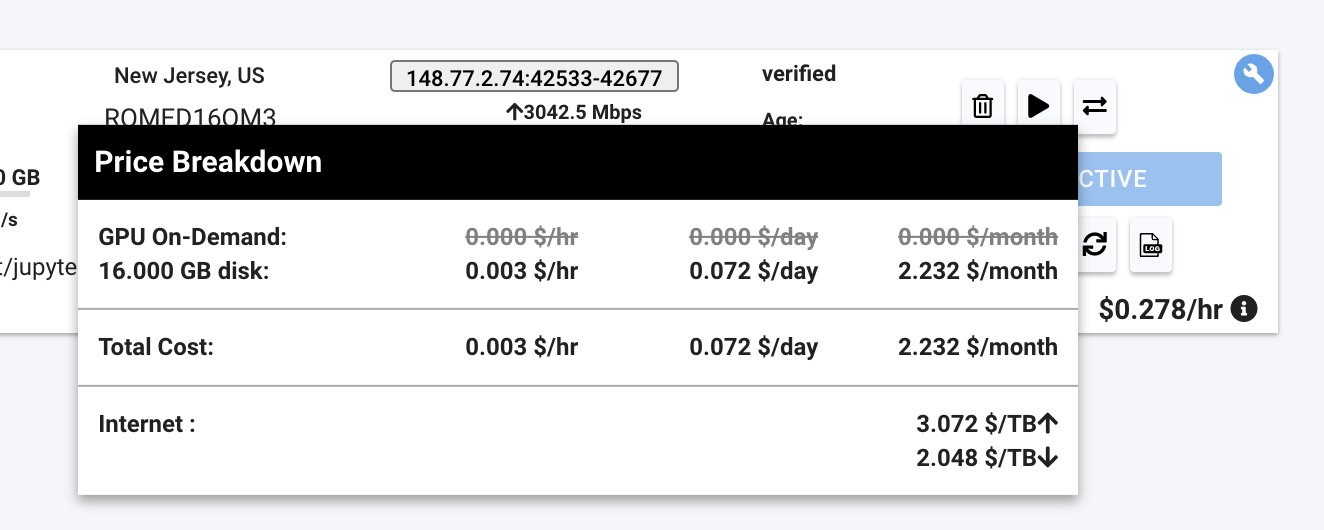

# show instance

Source: https://docs.vast.ai/api-reference/instances/show-instance

api-reference/openapi.json get /api/v0/instances/{id}/

Retrieves the details of a specific instance for the authenticated user.

This endpoint returns detailed information including SSH connection parameters, instance state, resource utilization, template data, and pricing details.

CLI Usage: `vastai show instance [--api-key <api_key>] [--raw]`

# show instances

Source: https://docs.vast.ai/api-reference/instances/show-instances

api-reference/openapi.json get /api/v0/instances/

Retrieve a list of instances for the authenticated user.

CLI Usage: `vastai show instances [options] [--api-key <api_key>] [--raw]`

# show logs

Source: https://docs.vast.ai/api-reference/instances/show-logs

api-reference/openapi.json put /api/v0/instances/request_logs/{id}

Request logs from a specific instance. The logs will be uploaded to S3 and can be retrieved from a generated URL. Supports both container logs and daemon system logs.

CLI Usage: `vastai show logs <instance_id> [--tail <lines>] [--filter <grep>] [--daemon-logs]`
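
The CLI options correspond to fields in the request body. The sketch below makes that mapping explicit under assumed field names (`tail`, `filter`, `daemon_logs`), an assumed host, and Bearer auth. The response is expected to point at the uploaded log file, and fetching it is an ordinary HTTP GET that is not shown here.

```python
import json
import urllib.request
from typing import Optional

BASE_URL = "https://console.vast.ai"  # assumed API host


def logs_body(tail: Optional[int] = None, grep: Optional[str] = None,
              daemon_logs: bool = False) -> dict:
    """Translate CLI-style options into a request body (field names assumed)."""
    body: dict = {}
    if tail is not None:
        if tail <= 0:
            raise ValueError("tail must be a positive line count")
        body["tail"] = tail
    if grep:
        body["filter"] = grep
    if daemon_logs:
        body["daemon_logs"] = True
    return body


def request_logs(api_key: str, instance_id: int, **options) -> urllib.request.Request:
    """Build (not send) PUT /api/v0/instances/request_logs/{id}.

    The documented path has no trailing slash, so none is added here.
    """
    req = urllib.request.Request(
        f"{BASE_URL}/api/v0/instances/request_logs/{instance_id}",
        data=json.dumps(logs_body(**options)).encode("utf-8"),
        method="PUT",
    )
    req.add_header("Authorization", f"Bearer {api_key}")
    req.add_header("Content-Type", "application/json")
    return req
```

Options left unset are omitted from the body, which mirrors how the CLI flags are all optional.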


# show ssh-keys

Source: https://docs.vast.ai/api-reference/instances/show-ssh-keys

api-reference/openapi.json get /api/v0/instances/{instance_id}/ssh/

Retrieves the SSH keys associated with a specific instance.

CLI Usage: `vastai show ssh-keys <instance_id>`

# API Introduction

Source: https://docs.vast.ai/api-reference/introduction

Welcome to Vast.ai's API documentation. Our API allows you to programmatically manage GPU instances, handle machine operations, and automate your AI/ML workflow. Whether you're running individual GPU instances or managing a fleet of machines, our API provides comprehensive control over all Vast.ai platform features.

<Card title="Here's a link to our API docs on Postman" href="https://www.postman.com/vast33/vast-ai-public-api-docs">
  View the Postman collection
</Card>

# cancel maint

Source: https://docs.vast.ai/api-reference/machines/cancel-maint

api-reference/openapi.json put /api/v0/machines/{machine_id}/cancel_maint/

Cancel a scheduled maintenance window for a specified machine.

CLI Usage: `vastai cancel maint <machine_id>`

# cleanup machine

Source: https://docs.vast.ai/api-reference/machines/cleanup-machine

api-reference/openapi.json put /api/v0/machines/{machine_id}/cleanup/

This endpoint removes expired contracts on a specified machine, freeing up space.

CLI Usage: `vastai cleanup machine <machine_id>`

# list machine

Source: https://docs.vast.ai/api-reference/machines/list-machine

api-reference/openapi.json put /api/v0/machines/create_asks/

Creates or updates ask contracts for a machine to list it for rent on the Vast.ai platform.

Allows setting pricing, minimum GPU requirements, end date, and discount rates.

CLI Usage: `vastai list machine <machine_id> [options]`

# remove defjob

Source: https://docs.vast.ai/api-reference/machines/remove-defjob

api-reference/openapi.json delete /api/v0/machines/{machine_id}/defjob/

Deletes the default job (background instances) for a specified machine.

CLI Usage: `vastai remove defjob <machine_id>`

# schedule maint

Source: https://docs.vast.ai/api-reference/machines/schedule-maint

api-reference/openapi.json put /api/v0/machines/{machine_id}/dnotify

Schedules a maintenance window for a specified machine and notifies clients.

CLI Usage: `vastai schedule maint <machine_id> --sdate <sdate> --duration <duration>`
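
The CLI takes a start date and a duration. The sketch assumes that `--sdate` is a Unix timestamp in UTC seconds, that `--duration` is in hours, and that the request body reuses those names (`sdate`, `duration`). It also assumes the host and Bearer auth. Verify the units against the OpenAPI schema before scheduling real maintenance.

```python
import json
import urllib.request
from datetime import datetime, timezone

BASE_URL = "https://console.vast.ai"  # assumed API host


def to_epoch_seconds(start: datetime) -> int:
    """Convert a timezone-aware datetime to whole Unix seconds (UTC)."""
    if start.tzinfo is None:
        raise ValueError("use a timezone-aware datetime to avoid local-time surprises")
    return int(start.astimezone(timezone.utc).timestamp())


def schedule_maint_request(api_key: str, machine_id: int, start: datetime,
                           duration_hours: float) -> urllib.request.Request:
    """Build (not send) PUT /api/v0/machines/{machine_id}/dnotify (fields assumed)."""
    if duration_hours <= 0:
        raise ValueError("duration_hours must be positive")
    body = {"sdate": to_epoch_seconds(start), "duration": duration_hours}
    req = urllib.request.Request(
        f"{BASE_URL}/api/v0/machines/{machine_id}/dnotify",
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
    )
    req.add_header("Authorization", f"Bearer {api_key}")
    req.add_header("Content-Type", "application/json")
    return req
```

Requiring an aware datetime avoids silently scheduling the window at the wrong hour when the caller's local timezone is not UTC.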


# set defjob

Source: https://docs.vast.ai/api-reference/machines/set-defjob

api-reference/openapi.json put /api/v0/machines/create_bids/

Creates default jobs (background instances) for a specified machine with the given parameters.

CLI Usage: `vastai set defjob <machine_id> --price_gpu <price> --price_inetu <price> --price_inetd <price> --image <image> [--args <args>]`

# set min-bid

Source: https://docs.vast.ai/api-reference/machines/set-min-bid

api-reference/openapi.json put /api/v0/machines/{machine_id}/minbid/

Sets the minimum bid price for a specified machine.

CLI Usage: `vastai set min-bid <machine_id> --price <price>`

# show machines

Source: https://docs.vast.ai/api-reference/machines/show-machines

api-reference/openapi.json get /api/v0/machines/

Fetches data for multiple machines associated with the authenticated user.

CLI Usage: `vastai show machines [--user_id <user_id>]`

# show reports

Source: https://docs.vast.ai/api-reference/machines/show-reports

api-reference/openapi.json get /api/v0/machines/{machine_id}/reports/

Retrieves a list of the most recent reports for a given machine. Each report includes details such as the problem identified, a message describing the issue, and the timestamp when the report was created.

CLI Usage: `vastai reports <machine_id>`

# unlist machine

Source: https://docs.vast.ai/api-reference/machines/unlist-machine

api-reference/openapi.json delete /api/v0/machines/{machine_id}/asks/

Removes all 'ask' type offer contracts for a specified machine, effectively unlisting it from being available for rent.

CLI Usage: `vastai unlist machine <id>`

# add network-disk

Source: https://docs.vast.ai/api-reference/network-volumes/add-network-disk

api-reference/openapi.json post /api/v0/network_disk/

Adds a network disk to be used to create network volume offers, or adds machines to an existing network disk.

CLI Usage: `vastai add network_disk <machine_id>... <mount_point> [options]`

# create network-volume

Source: https://docs.vast.ai/api-reference/network-volumes/create-network-volume

api-reference/openapi.json put /api/v0/network_volume/

Creates a network volume from an offer.

CLI Usage: `vastai create network-volume <offer_id> <size> [--name <name>]`

# list network-volume

Source: https://docs.vast.ai/api-reference/network-volumes/list-network-volume

api-reference/openapi.json post /api/v0/network_volume/

Lists a network disk for rent as network volumes, or updates an existing listing with a new price/size/end date/discount.

CLI Usage: `vastai list network-volume <disk_id> [options]`

# search network volumes

Source: https://docs.vast.ai/api-reference/network-volumes/search-network-volumes

api-reference/openapi.json post /api/v0/network_volumes/search/

Search for available network volume offers with advanced filtering and sorting.

CLI Usage: `vastai search network-volumes <query> [--order <field>]`

# unlist network-volume

Source: https://docs.vast.ai/api-reference/network-volumes/unlist-network-volume

api-reference/openapi.json post /api/v0/network_volumes/unlist/

Unlists a network volume so that it is no longer offered for rent.

CLI Usage: `vastai unlist volume <offer_id>`

# search benchmarks

Source: https://docs.vast.ai/api-reference/search/search-benchmarks

api-reference/openapi.json get /api/v0/benchmarks/

Retrieve benchmark data based on search parameters.

CLI Usage: `vastai search benchmarks`

# search offers

Source: https://docs.vast.ai/api-reference/search/search-offers

api-reference/openapi.json post /api/v0/bundles/

Search for available GPU machine offers with advanced filtering and sorting.

Each filter parameter (such as `verified`, `gpu_name`, `num_gpus`, etc.) should be an object specifying the operator and value you want to match.

**Filter operators:**

| Operator | Meaning | Example |
|:---------|:-----------------------|:-------------------------------|
| `eq` | Equal to | `{ "eq": true }` |
| `neq` | Not equal to | `{ "neq": false }` |
| `gt` | Greater than | `{ "gt": 0.99 }` |
| `lt` | Less than | `{ "lt": 10000 }` |
| `gte` | Greater than or equal to | `{ "gte": 4 }` |
| `lte` | Less than or equal to | `{ "lte": 8 }` |
| `in` | Value is in a list | `{ "in": ["RTX_3090", "RTX_4090"] }` |
| `nin` | Value is not in a list | `{ "nin": ["TW", "SE"] }` |

Default filters: `verified=true`, `rentable=true`, `rented=false` (unless `--no-default` is used).

CLI Usage: `vastai search offers 'reliability > 0.99 num_gpus>=4' --order=dph_total`
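
To see how a CLI-style query maps onto the operator objects in the table above, the sketch below translates simple `field op value` clauses into a filter dictionary and applies the default filters unless told not to. It is a hypothetical helper, not the CLI's actual parser. It only understands the comparison symbols shown, does not handle `in`/`nin` lists, and silently skips text it cannot match. Any ordering or limit fields for the request body are also left out.

```python
import json
import re
from typing import Any, Dict

# Comparison symbols accepted by this sketch, mapped to the API operators.
OPS = {">=": "gte", "<=": "lte", "==": "eq", "!=": "neq", ">": "gt", "<": "lt", "=": "eq"}
# The alternation lists two-character symbols first so ">=" is not read as ">".
TOKEN = re.compile(r"([A-Za-z_][A-Za-z0-9_]*)\s*(>=|<=|==|!=|>|<|=)\s*(\S+)")

DEFAULT_FILTERS = {
    "verified": {"eq": True},
    "rentable": {"eq": True},
    "rented": {"eq": False},
}


def parse_value(raw: str) -> Any:
    """Interpret booleans and numbers; leave anything else as a string."""
    lowered = raw.lower()
    if lowered in ("true", "false"):
        return lowered == "true"
    for cast in (int, float):
        try:
            return cast(raw)
        except ValueError:
            pass
    return raw


def query_to_filters(query: str, no_default: bool = False) -> Dict[str, Dict[str, Any]]:
    """Translate 'field op value' clauses into {field: {operator: value}}."""
    filters = {} if no_default else {k: dict(v) for k, v in DEFAULT_FILTERS.items()}
    touched = set()
    for match in TOKEN.finditer(query):
        field, symbol, raw = match.groups()
        if field not in touched:
            # An explicit clause replaces any default for the same field.
            filters[field] = {}
            touched.add(field)
        filters[field][OPS[symbol]] = parse_value(raw)
    return filters


if __name__ == "__main__":
    # Candidate filter fields for a POST /api/v0/bundles/ body.
    print(json.dumps(query_to_filters("reliability > 0.99 num_gpus>=4"), indent=2))
```

Several clauses on the same field combine into one object, so `num_gpus>=2 num_gpus<=8` becomes `{"num_gpus": {"gte": 2, "lte": 8}}`.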


# search templates

Source: https://docs.vast.ai/api-reference/search/search-templates

api-reference/openapi.json get /api/v0/template/

Searches for templates based on query parameters and retrieves matching templates.

CLI Usage: `vastai search templates`

# create endpoint

Source: https://docs.vast.ai/api-reference/serverless/create-endpoint

api-reference/openapi.json post /api/v0/endptjobs/

This endpoint creates a new job processing endpoint with specified parameters.

CLI Usage: `vastai create endpoint [options]`

# create workergroup

Source: https://docs.vast.ai/api-reference/serverless/create-workergroup

api-reference/openapi.json post /api/v0/workergroups/

Creates a new workergroup configuration that manages worker instances for a serverless endpoint.

CLI Usage: `vastai create workergroup --template_hash <hash> --endpoint_name <name> [options]`

# delete endpoint

Source: https://docs.vast.ai/api-reference/serverless/delete-endpoint

api-reference/openapi.json delete /api/v0/endptjobs/{id}/

Deletes an endpoint group by ID. Associated workergroups will also be deleted.

CLI Usage: `vastai delete endpoint <id>`

# delete workergroup

Source: https://docs.vast.ai/api-reference/serverless/delete-workergroup

api-reference/openapi.json delete /api/v0/workergroups/{id}/

Deletes an existing workergroup.

CLI Usage: `vastai delete workergroup <id>`

# get endpoint logs

Source: https://docs.vast.ai/api-reference/serverless/get-endpoint-logs

api-reference/openapi.json post /get_endpoint_logs/

Retrieves logs for a specific endpoint by name.

CLI Usage: `vastai get endpoint logs <endpoint_name> [--tail <num_lines>]`

# get endpoint workers

Source: https://docs.vast.ai/api-reference/serverless/get-endpoint-workers

api-reference/openapi.json post /get_endpoint_workers/

Retrieves the current list and status of workers for a specific endpoint.

Useful for monitoring, debugging connectivity issues, and understanding resource usage.

CLI Usage: `vastai get endpoint workers <id>`

# get workergroup logs

Source: https://docs.vast.ai/api-reference/serverless/get-workergroup-logs

api-reference/openapi.json post /get_workergroup_logs/

Retrieves logs for a specific workergroup by ID.

CLI Usage: `vastai get workergroup logs <id> [--tail <num_lines>]`

# get workergroup workers

Source: https://docs.vast.ai/api-reference/serverless/get-workergroup-workers

api-reference/openapi.json post /get_workergroup_workers/

Retrieves the current list and status of workers for a specific workergroup.

Useful for monitoring, debugging connectivity issues, and understanding resource usage within a workergroup.

CLI Usage: `vastai get workergroup workers <id>`

# route

Source: https://docs.vast.ai/api-reference/serverless/route

api-reference/openapi.json post /route/

Calls the serverless engine to retrieve the address of a GPU instance in your endpoint that can process a request.

The engine will return either a ready worker URL or status information if no workers are available.

CLI Usage: `vastai route <endpoint> <cost>`
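
Since the engine returns either a ready worker URL or status information, a client has to branch on which one came back. In the sketch below, the host `https://run.vast.ai`, the body fields `endpoint`, `api_key`, and `cost`, and the `url` key in the response are all assumptions. Only the path and the two possible outcomes come from this page.

```python
import json
import urllib.request
from typing import Any, Dict, Optional, Tuple

ROUTE_URL = "https://run.vast.ai/route/"  # assumed serverless host


def route_request(endpoint_name: str, endpoint_api_key: str,
                  cost: float) -> urllib.request.Request:
    """Build (not send) the POST /route/ call; body field names are assumptions."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    body = {"endpoint": endpoint_name, "api_key": endpoint_api_key, "cost": cost}
    req = urllib.request.Request(
        ROUTE_URL, data=json.dumps(body).encode("utf-8"), method="POST"
    )
    req.add_header("Content-Type", "application/json")
    return req


def pick_worker(response: Dict[str, Any]) -> Tuple[Optional[str], Dict[str, Any]]:
    """Return (worker_url, {}) when a worker is ready, else (None, status info)."""
    url = response.get("url")  # assumed response key
    if url:
        return url, {}
    return None, response
```

When no worker is returned, a caller would typically wait and retry rather than fail immediately, since workers may still be starting.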


# show endpoints

Source: https://docs.vast.ai/api-reference/serverless/show-endpoints

api-reference/openapi.json get /api/v0/endptjobs/

Retrieve a list of endpoint jobs for the authenticated user.

CLI Usage: `vastai show endpoints`

# show workergroup

Source: https://docs.vast.ai/api-reference/serverless/show-workergroup

api-reference/openapi.json get /api/v0/workergroups/

Retrieves the list of workergroups associated with the authenticated user.

CLI Usage: `vastai show workergroups`

# update workergroup

Source: https://docs.vast.ai/api-reference/serverless/update-workergroup

api-reference/openapi.json put /api/v0/workergroups/{id}/

Updates the properties of an existing workergroup based on the provided parameters.

CLI Usage: `vastai update workergroup <id> [options]`

# create team

Source: https://docs.vast.ai/api-reference/team/create-team

api-reference/openapi.json post /api/v0/team/

Creates a new [team](https://docs.vast.ai/documentation/teams/teams-overview) with the given name and the following default roles:

- **Owner**: Full access to all team resources, settings, and member management. The team owner is the user who creates the team.
- **Manager**: All permissions of the owner except team deletion.
- **Member**: Can view, create, and interact with instances, but cannot access billing, team management, autoscaler, or machines.

- The API key used to create the team becomes the team key and is used for all team operations (e.g., creating roles, deleting the team).
- You can optionally transfer credits from your personal account to the new team account using the `transfer_credit` field.

CLI Usage: `vastai create team --team_name <team_name> [--transfer_credit <amount>]`
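
Because the API key used here becomes the team key, send this request with a key you intend to keep for team operations. The sketch includes the optional `transfer_credit` field named above only when it is given. The `team_name` body key (assumed to mirror the CLI flag), the host, and Bearer auth are assumptions.

```python
import json
import urllib.request
from typing import Optional

BASE_URL = "https://console.vast.ai"  # assumed API host


def create_team_request(api_key: str, team_name: str,
                        transfer_credit: Optional[float] = None) -> urllib.request.Request:
    """Build (not send) POST /api/v0/team/.

    `transfer_credit` is the optional field named in this section; the
    `team_name` body key is assumed to mirror the CLI flag.
    """
    if not team_name.strip():
        raise ValueError("team_name must be non-empty")
    body = {"team_name": team_name}
    if transfer_credit is not None:
        if transfer_credit <= 0:
            raise ValueError("transfer_credit must be positive when given")
        body["transfer_credit"] = transfer_credit
    req = urllib.request.Request(
        BASE_URL + "/api/v0/team/",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
    )
    req.add_header("Authorization", f"Bearer {api_key}")
    req.add_header("Content-Type", "application/json")
    return req
```

Omitting `transfer_credit` entirely, rather than sending zero, leaves the personal balance untouched.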


# create team role

Source: https://docs.vast.ai/api-reference/team/create-team-role

api-reference/openapi.json post /api/v0/team/roles/

Creates a new role within a team. Only team owners or managers with the appropriate permissions can perform this operation.

CLI Usage: `vastai create team role --name <role_name> --permissions <permissions_json>`

# destroy team

Source: https://docs.vast.ai/api-reference/team/destroy-team

api-reference/openapi.json delete /api/v0/team/

Deletes a team and all associated data including API keys, rights, invitations, memberships and metadata. The team owner's master API key is converted to a normal client key.

CLI Usage: `vastai destroy team`

# invite team member

Source: https://docs.vast.ai/api-reference/team/invite-team-member

api-reference/openapi.json post /api/v0/team/invite/

Sends an invitation email to the specified user to join the team with the given role.

CLI Usage: `vastai invite team-member --email <email> --role <role>`

# remove team member

Source: https://docs.vast.ai/api-reference/team/remove-team-member

api-reference/openapi.json delete /api/v0/team/members/{id}

Removes a member from the team by revoking their team-related API keys and updating membership status. Cannot remove the team owner.

CLI Usage: `vastai remove team-member <id>`

# remove team role

Source: https://docs.vast.ai/api-reference/team/remove-team-role

api-reference/openapi.json delete /api/v0/team/roles/{id}

Removes a role from the team. Cannot remove the team owner role.

CLI Usage: `vastai remove team-role <name>`

# show team members

Source: https://docs.vast.ai/api-reference/team/show-team-members

api-reference/openapi.json get /api/v0/team/members/

Retrieve a list of team members associated with the authenticated user's team.

CLI Usage: `vastai show team-members`

# show team roles

Source: https://docs.vast.ai/api-reference/team/show-team-roles

api-reference/openapi.json get /api/v0/team/roles-full/

Retrieve a list of all roles for a team, excluding the owner role.

CLI Usage: `vastai show team-roles`

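This sketch fetches the roles from Python through the CLI's global `--raw` flag, which prints machine-readable JSON. `show_team_roles_argv`, `parse_roles`, and `show_team_roles` are hypothetical helpers. The JSON layout is not specified on this page, so `parse_roles` returns the decoded value unchanged.

```python
import json
import subprocess
from typing import Any, List


def show_team_roles_argv() -> List[str]:
    # --raw asks the CLI for JSON instead of a human-readable table.
    return ["vastai", "show", "team-roles", "--raw"]


def parse_roles(raw_stdout: str) -> Any:
    """Decode the JSON printed by --raw; the schema is not documented here."""
    return json.loads(raw_stdout)


def show_team_roles() -> Any:
    # Requires `vastai` on PATH and a configured API key.
    out = subprocess.run(show_team_roles_argv(), check=True, capture_output=True, text=True).stdout
    return parse_roles(out)
```
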
# update team role

Source: https://docs.vast.ai/api-reference/team/update-team-role

api-reference/openapi.json put /api/v0/team/roles/{id}/

Update an existing team role with a new name and permissions.

CLI Usage: `vastai update team-role <id> --name <new_name> --permissions <new_permissions_json>`

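The usage line does not say whether `--permissions` takes an inline JSON string or a path to a JSON file. This sketch *assumes* a file path; if your CLI version expects inline JSON, pass `json.dumps(permissions)` instead. The helper names are hypothetical, and `EXAMPLE_PERMISSIONS` is a placeholder, not the real Vast.ai permission schema.

```python
import json
from pathlib import Path
from typing import Any, Dict, List


def write_permissions(permissions: Dict[str, Any], path: str) -> str:
    """Serialize a permissions dict to a JSON file and return its path."""
    Path(path).write_text(json.dumps(permissions, indent=2))
    return path


def update_team_role_argv(role_id: int, new_name: str, permissions_path: str) -> List[str]:
    """Build argv for `vastai update team-role <id> --name ... --permissions ...`."""
    return [
        "vastai", "update", "team-role", str(role_id),
        "--name", new_name,
        "--permissions", permissions_path,  # assumed: path to a JSON file
    ]


# Hypothetical placeholder; consult the Vast.ai docs for the real schema.
EXAMPLE_PERMISSIONS = {"api": {"instance_read": {}}}
```

A typical flow is `update_team_role_argv(7, "viewer", write_permissions(EXAMPLE_PERMISSIONS, "perms.json"))`, then running the result with `subprocess.run(..., check=True)`.
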
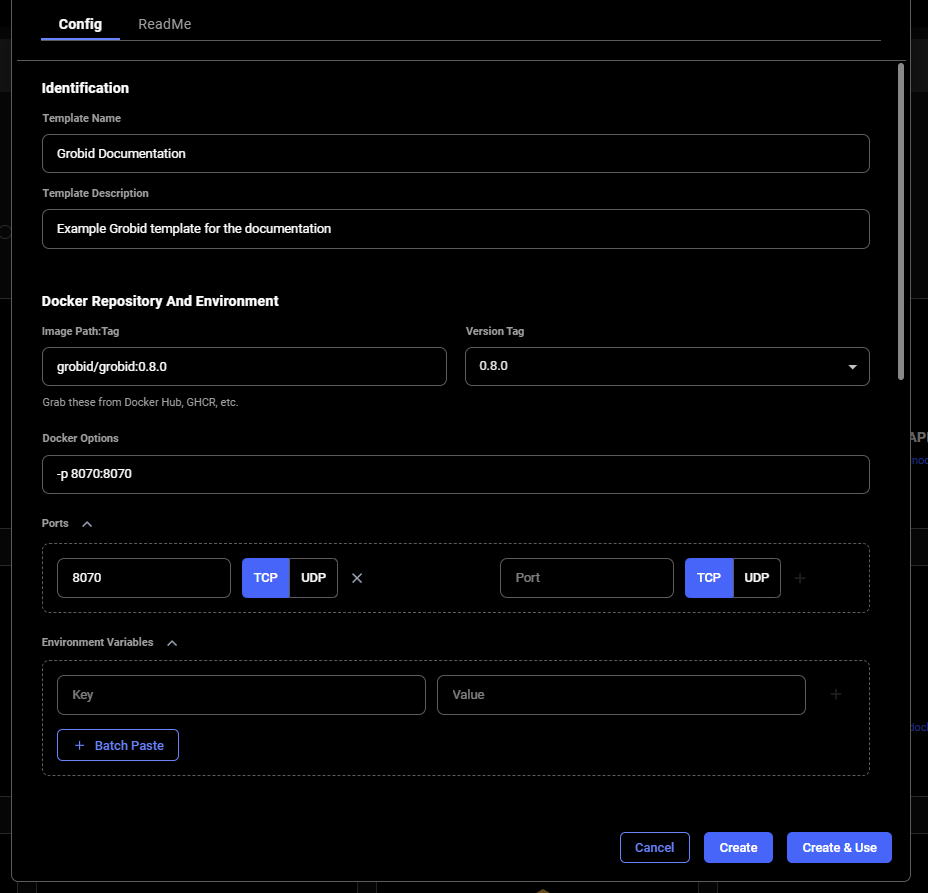

# create template

Source: https://docs.vast.ai/api-reference/templates/create-template

api-reference/openapi.json post /api/v0/template/

Creates a new template for launching instances. If an identical template already exists, returns the existing template instead of creating a duplicate.

CLI Usage: `vastai create template [options]`

# delete volume

Source: https://docs.vast.ai/api-reference/volumes/delete-volume

api-reference/openapi.json delete /api/v0/volumes/

Delete a volume by its ID.

CLI Usage: `vastai delete volume <volume_id>`

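A small sketch that scripts the documented CLI form, for example to clean up several volumes in one go. `delete_volume_argv` and `delete_volumes` are hypothetical helpers. With `check=True`, the loop stops at the first deletion that fails, so no later volume is deleted after an error.

```python
import subprocess
from typing import List


def delete_volume_argv(volume_id: int) -> List[str]:
    """Build argv for the documented `vastai delete volume <volume_id>` usage."""
    if volume_id <= 0:
        raise ValueError("volume_id must be a positive integer")
    return ["vastai", "delete", "volume", str(volume_id)]


def delete_volumes(volume_ids: List[int]) -> None:
    # Delete volumes one at a time; check=True raises on the first failure.
    for vid in volume_ids:
        subprocess.run(delete_volume_argv(vid), check=True)
```
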
# list volumes

Source: https://docs.vast.ai/api-reference/volumes/list-volumes

api-reference/openapi.json get /api/v0/volumes/

Retrieve information about all volumes rented by you.

CLI Usage: `vastai show volumes`

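A sketch of listing your volumes over HTTP. It assumes the `https://console.vast.ai` host and Bearer-token header used above, and `build_list_volumes_request` and `list_volumes` are hypothetical helpers. The JSON body is returned as-is because its schema is defined in the OpenAPI spec, not on this page.

```python
import json
import urllib.request
from typing import Any

BASE_URL = "https://console.vast.ai"  # assumed API host


def build_list_volumes_request(api_key: str) -> urllib.request.Request:
    """Build (but do not send) GET /api/v0/volumes/."""
    return urllib.request.Request(
        f"{BASE_URL}/api/v0/volumes/",
        headers={"Authorization": f"Bearer {api_key}"},  # assumed auth scheme
    )


def list_volumes(api_key: str, timeout: float = 30.0) -> Any:
    # Send the request and decode the JSON response body unchanged.
    with urllib.request.urlopen(build_list_volumes_request(api_key), timeout=timeout) as resp:
        return json.load(resp)
```
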
# rent volume

Source: https://docs.vast.ai/api-reference/volumes/rent-volume

api-reference/openapi.json put /api/v0/volumes/

Rent/create a new volume with specified parameters.

CLI Usage: `vastai create volume <id> --size <size_gb>`

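A sketch of the documented CLI form with basic input checks. We assume `<id>` is the ID of a volume offer returned by `vastai search volumes`; this page does not say so explicitly. `create_volume_argv` is a hypothetical helper.

```python
from typing import List


def create_volume_argv(offer_id: int, size_gb: int) -> List[str]:
    """Build argv for the documented `vastai create volume <id> --size <size_gb>` usage.

    offer_id is assumed to come from `vastai search volumes` results.
    """
    if size_gb <= 0:
        raise ValueError("size_gb must be positive")
    return ["vastai", "create", "volume", str(offer_id), "--size", str(size_gb)]
```
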
# search volumes

Source: https://docs.vast.ai/api-reference/volumes/search-volumes

api-reference/openapi.json post /api/v0/volumes/search/

Search for available volumes based on specified criteria.

CLI Usage: `vastai search volumes <query> [options]`

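A sketch of processing search results in Python. It assumes that `--raw` makes `vastai search volumes` print a JSON list of offer objects, which this page does not confirm. The query string and the price field name are left to the caller because neither is documented here. `search_volumes_argv` and `cheapest` are hypothetical helpers.

```python
from typing import Any, Dict, List


def search_volumes_argv(query: str) -> List[str]:
    # --raw requests JSON output so results can be processed programmatically.
    return ["vastai", "search", "volumes", query, "--raw"]


def cheapest(offers: List[Dict[str, Any]], price_key: str) -> Dict[str, Any]:
    """Return the offer with the lowest value under price_key.

    The real field names are not documented here, so the caller supplies the key.
    """
    if not offers:
        raise ValueError("no offers to choose from")
    return min(offers, key=lambda offer: offer[price_key])
```
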
# unlist volume

Source: https://docs.vast.ai/api-reference/volumes/unlist-volume

api-reference/openapi.json post /api/v0/volumes/unlist/

Remove a volume listing from the marketplace.

CLI Usage: `vastai unlist volume <volume_id>`

# Blender Batch Rendering

Source: https://docs.vast.ai/blender-batch-rendering

Blender is a free, open source 3D creation suite. It can be used to create animated films, visual effects, art, 3D-printed models, motion graphics, interactive 3D applications, virtual reality, and video games. It supports the entirety of the 3D pipeline: modeling, rigging, animation, simulation, rendering, compositing and motion tracking, even video editing and game creation. You can find more information about Blender at [blender.org](https://www.blender.org/).

Blender users include animators, game developers, 3D modelers, visual effects artists, architects, and product designers.

GPUs can speed up rendering in Blender.

You can save time by automating the rendering of animations for a batch of .blend files.

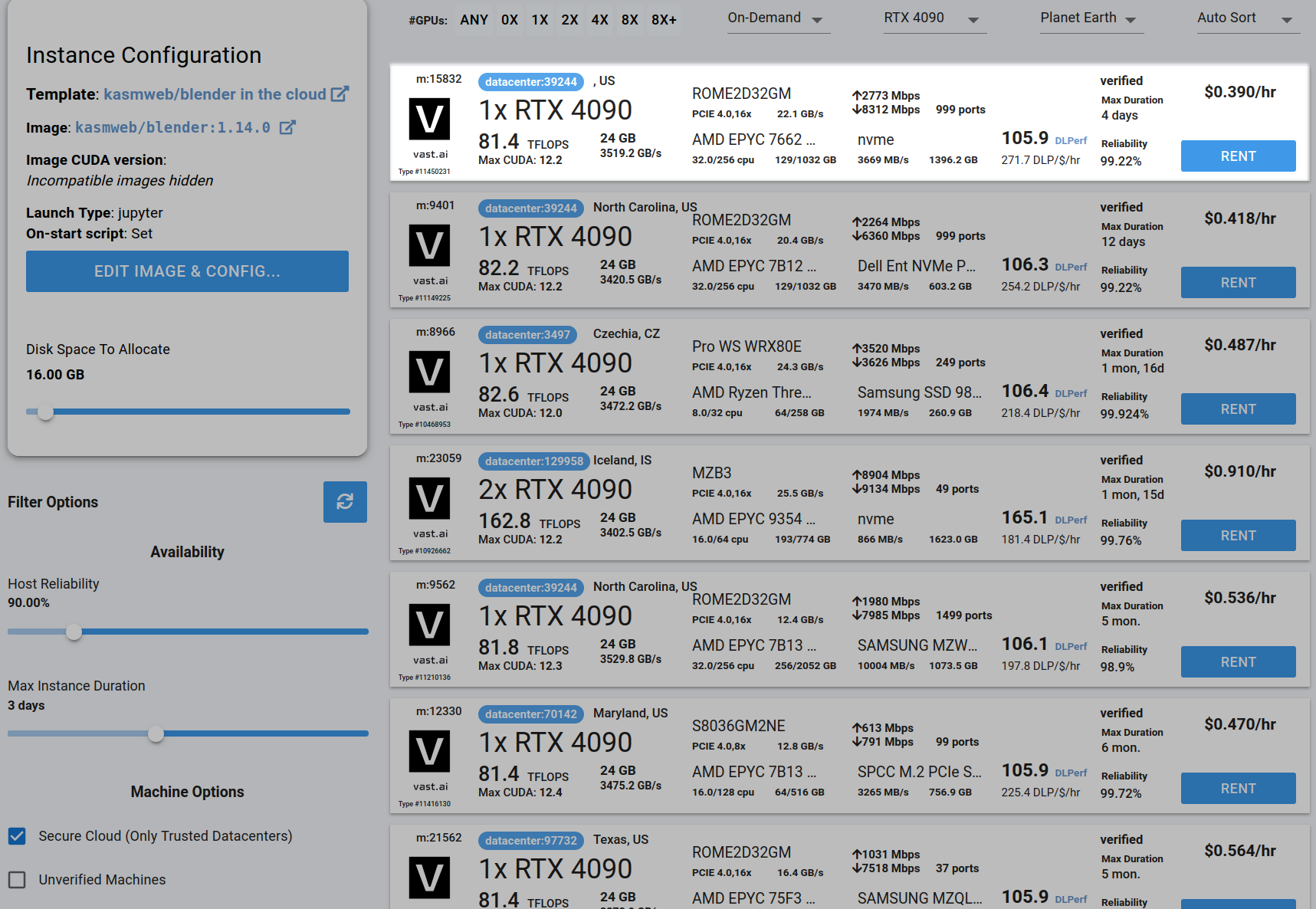

## Step 1 - Open Vast's Blender Batch Renderer Template

Click this link, [Blender Batch Renderer Template](https://cloud.vast.ai/?ref_id=142678\&template_id=7b570ea8454e5f2b4b026139709fa0e8), to select the vast/blender-batch-renderer template.

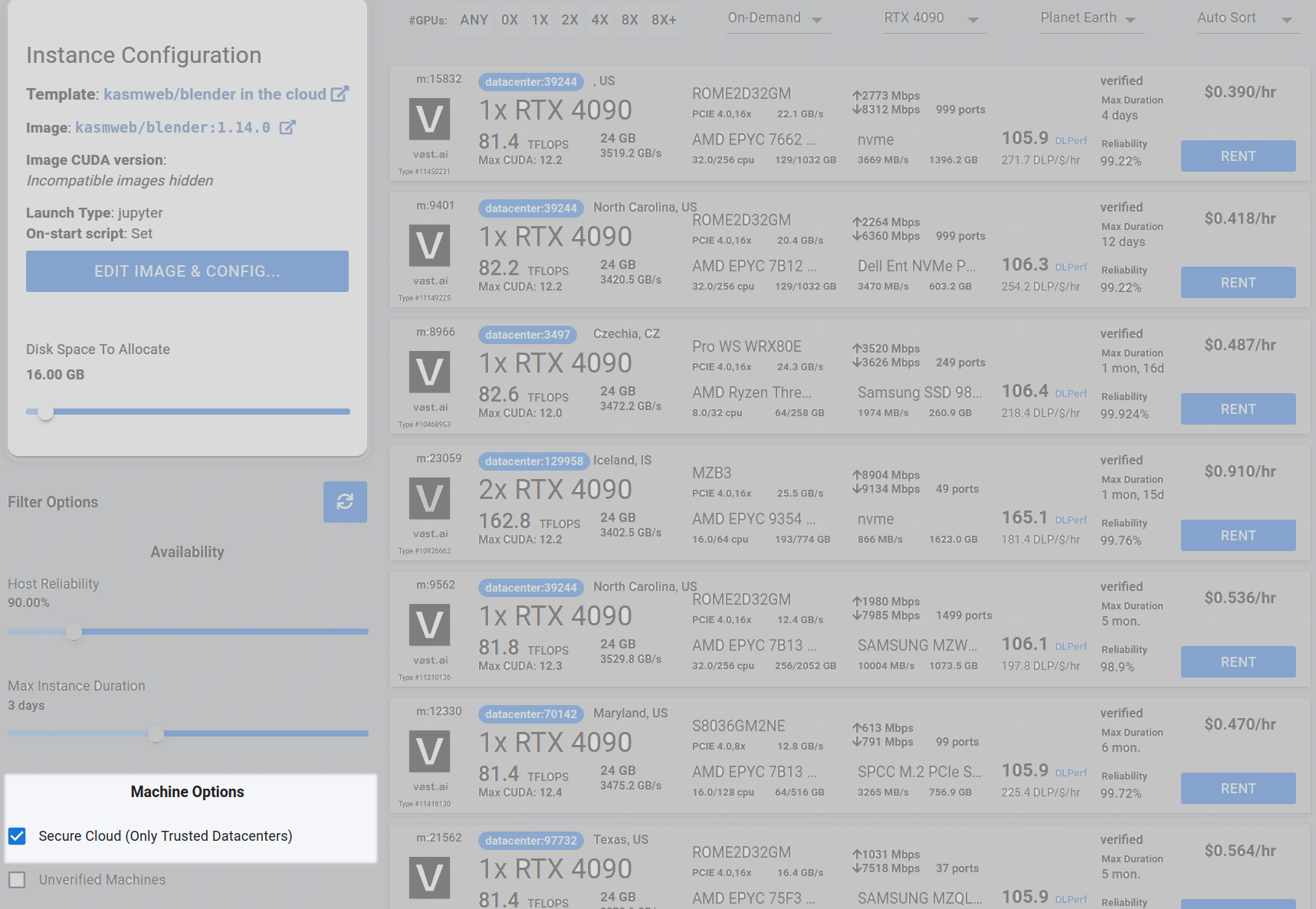

## Step 2 - Check the Secure Cloud box if you want a secure machine from trusted datacenters (Optional)

You can narrow your search results to datacenter machines only if you want the assured security standards of our trusted datacenters.

<Frame caption="Highlighted Secure Cloud">

</Frame>

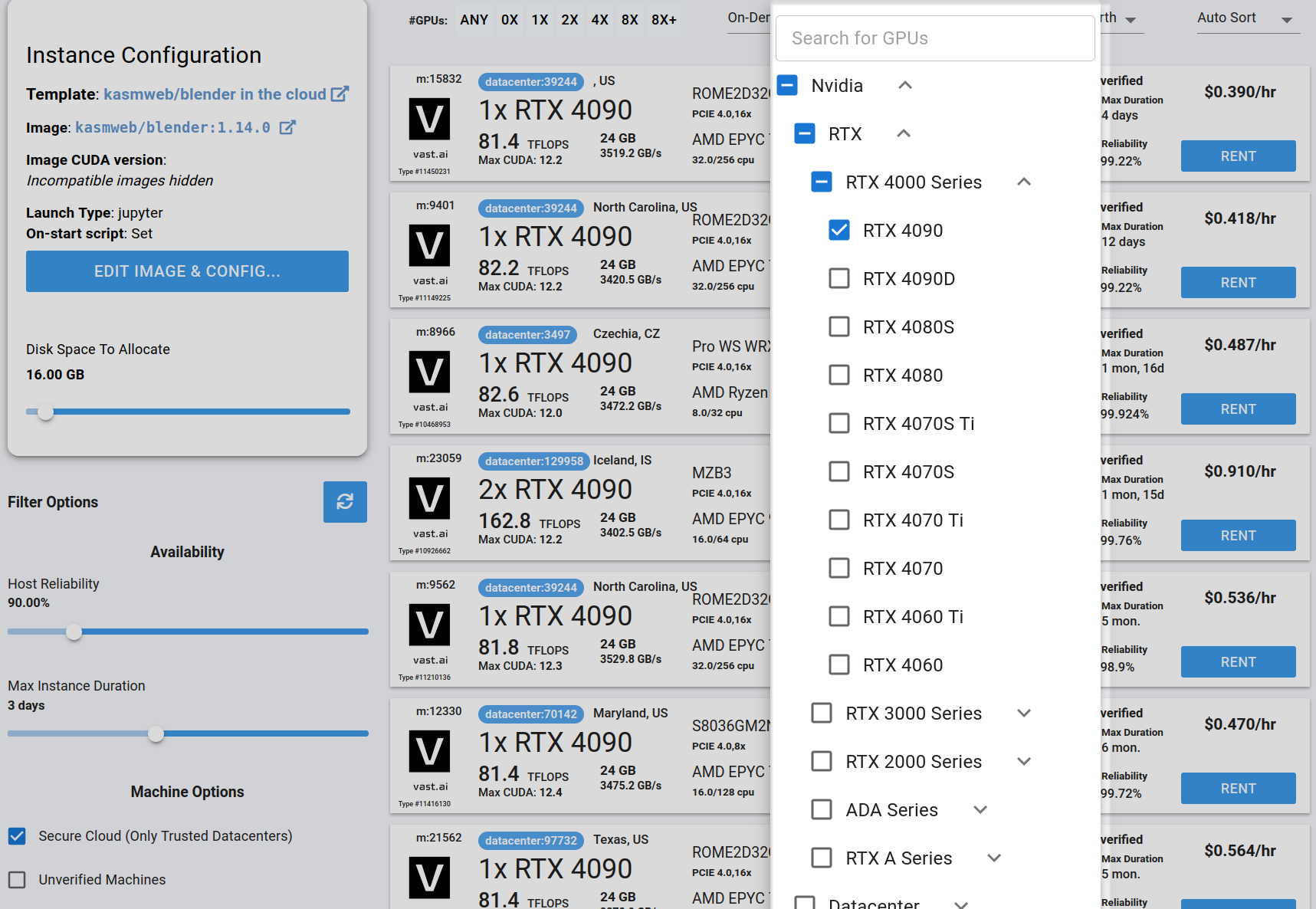

## Step 3 - Filter for a GPU that you feel best suits your needs

If you're unsure which GPU to choose, some benchmark data shows the NVIDIA GeForce RTX 4090 delivering the best render speed in Blender. You can compare other GPUs that work well with Blender in the [Blender GPU Benchmarks](https://opendata.blender.org/benchmarks/query/?group_by=device_name\&blender_version=3.6.0). You can also find other options by searching on Google or asking ChatGPT.

At the time of writing, the template linked above runs Blender 3.6.2.

Go to the GPUs filter and check the box for RTX 4090 or another GPU.

For example:

<Frame caption="Highlighted Rtx 4090 Filter Pic">

</Frame>

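You can apply the same filter from the command line with `vastai search offers`. This minimal sketch *assumes* two things you should confirm with `vastai search offers --help`: that the query accepts `gpu_name=RTX_4090`, with spaces in the GPU name written as underscores, and that `datacenter=True` matches the Secure Cloud checkbox from Step 2. `search_offers_argv` is a hypothetical helper.

```python
from typing import List


def search_offers_argv(gpu_name: str, datacenter_only: bool = False) -> List[str]:
    """Build argv for `vastai search offers` filtered to one GPU model.

    Assumes spaces in GPU names become underscores in the query (RTX_4090)
    and that datacenter=True corresponds to the Secure Cloud filter.
    """
    query = f"gpu_name={gpu_name.replace(' ', '_')}"
    if datacenter_only:
        query += " datacenter=True"
    return ["vastai", "search", "offers", query, "--raw"]  # --raw: JSON output
```
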
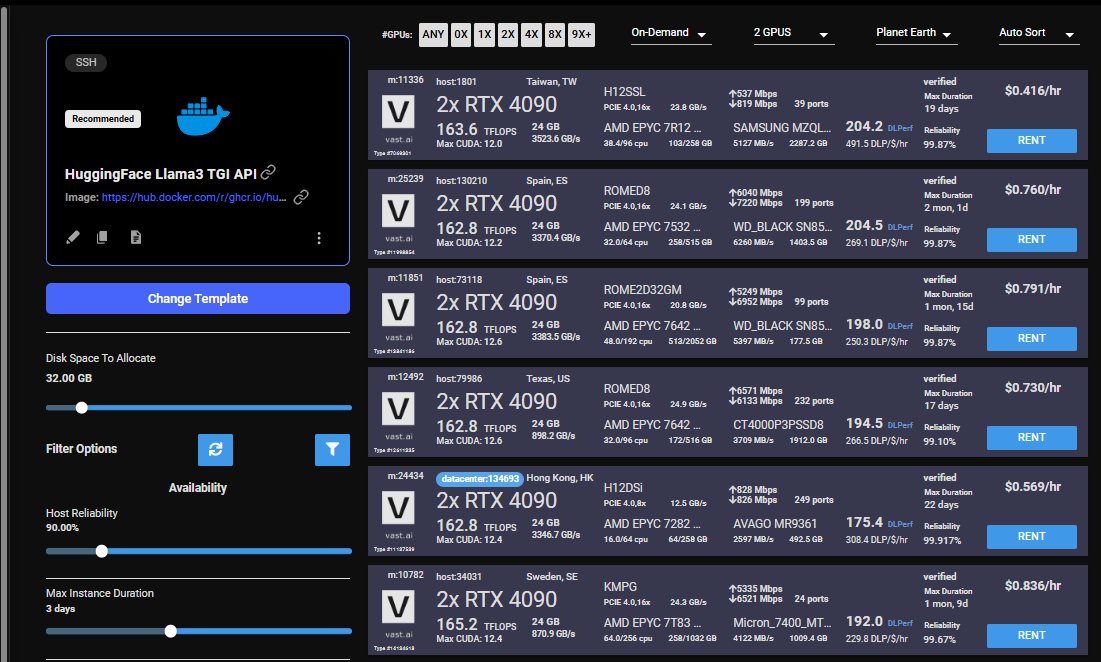

## Step 4 - Choose a GPU by Clicking "RENT"

|

||

|

||

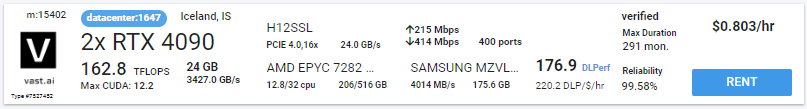

Choose a GPU that meets your budget, desired reliability %, and other constraints by clicking "RENT". GPUs are sorted by a complex proprietary algorithm that aims to give users the best machines for their value by default.

|

||

You can filter GPUs further per your requirements if desired.

|

||

|

||

<Frame caption="Highlighted Rent">

|

||

|

||

</Frame>

|

||

|

||

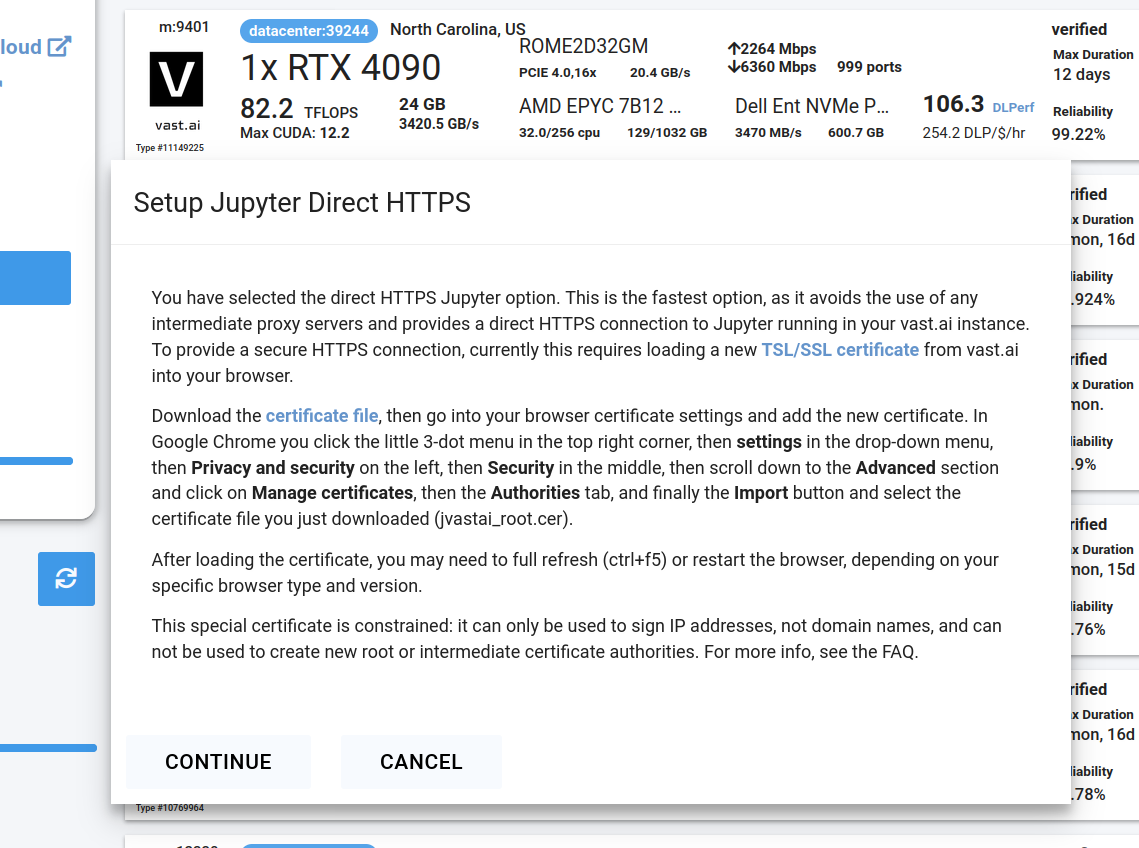

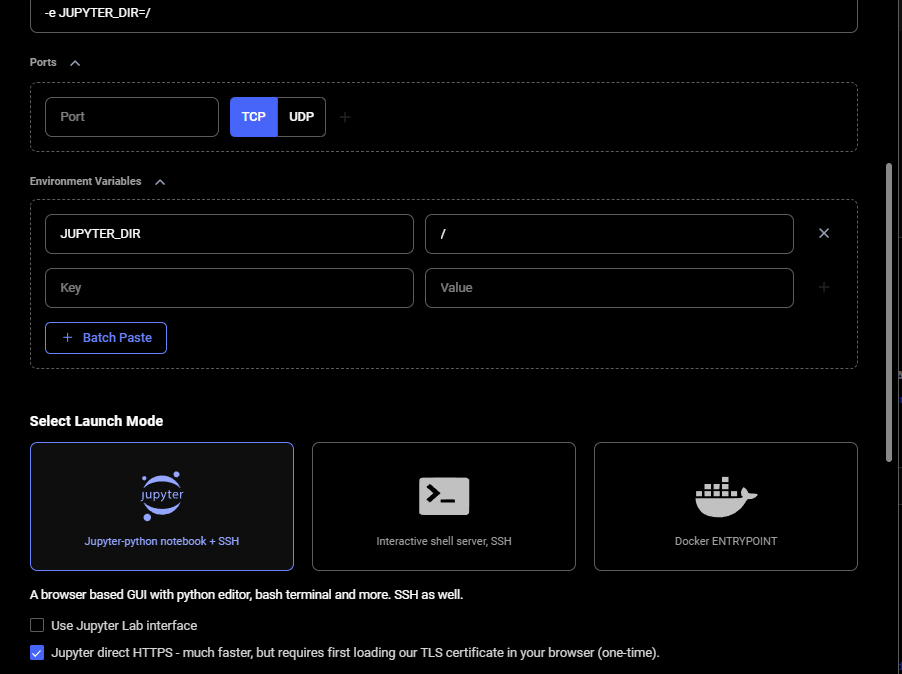

## Step 5 - Use Jupyter Direct HTTPS Launch Mode

|

||

|

||

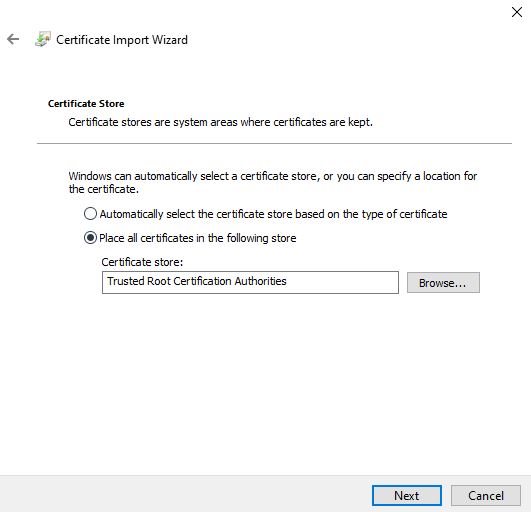

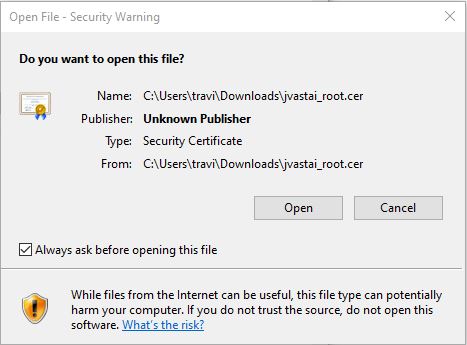

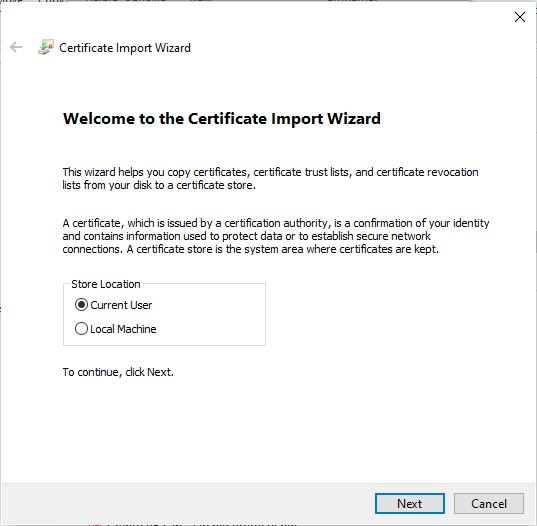

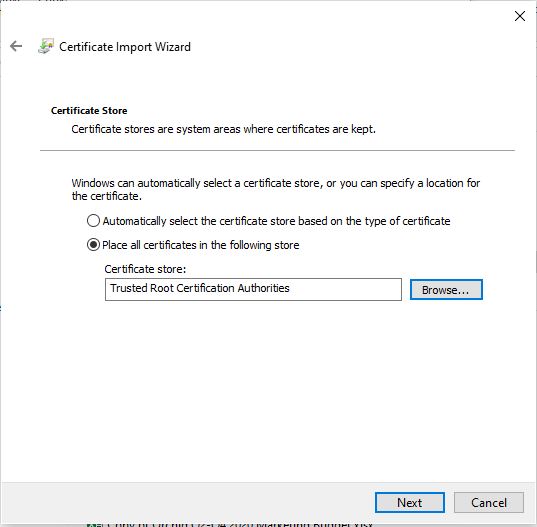

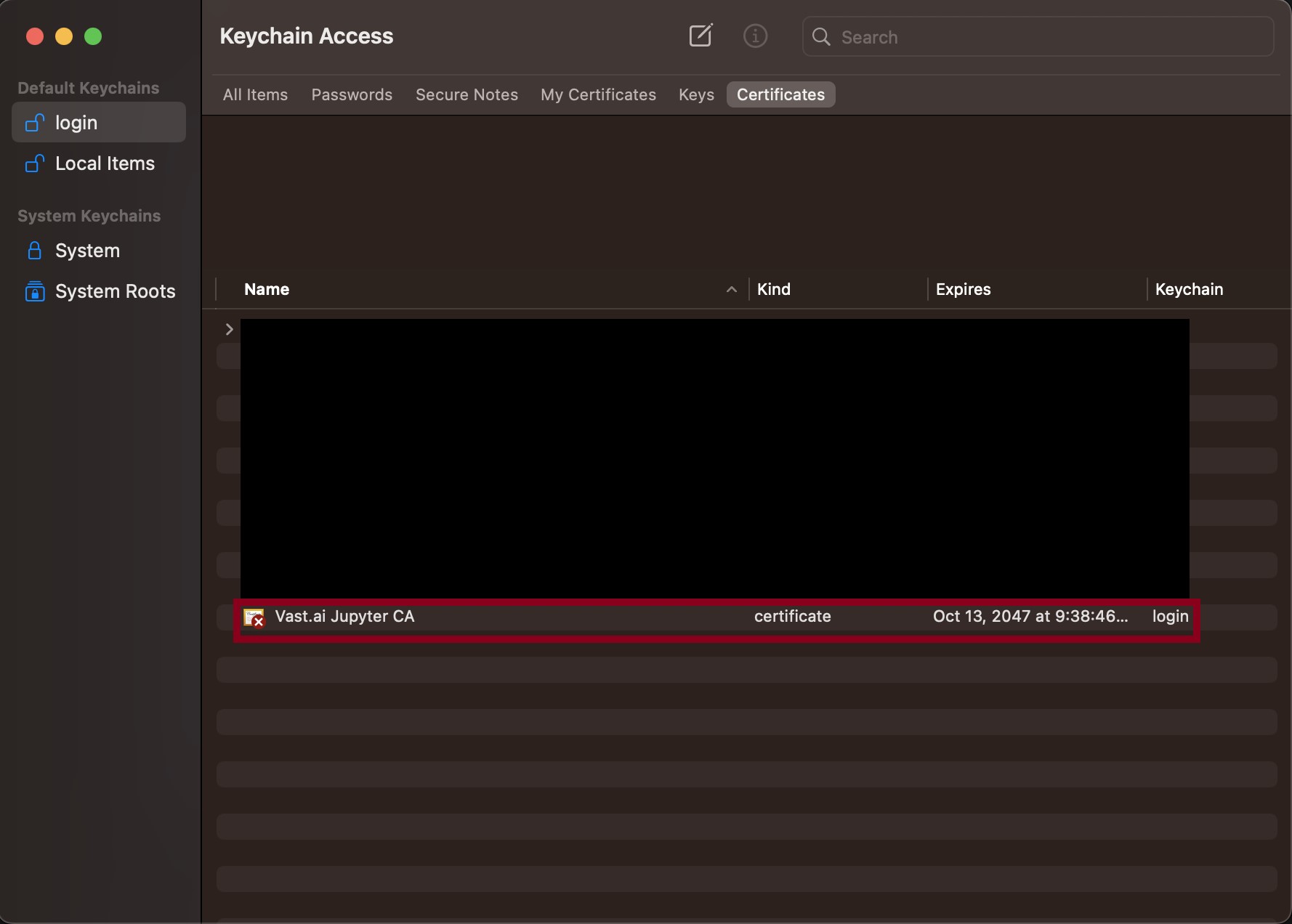

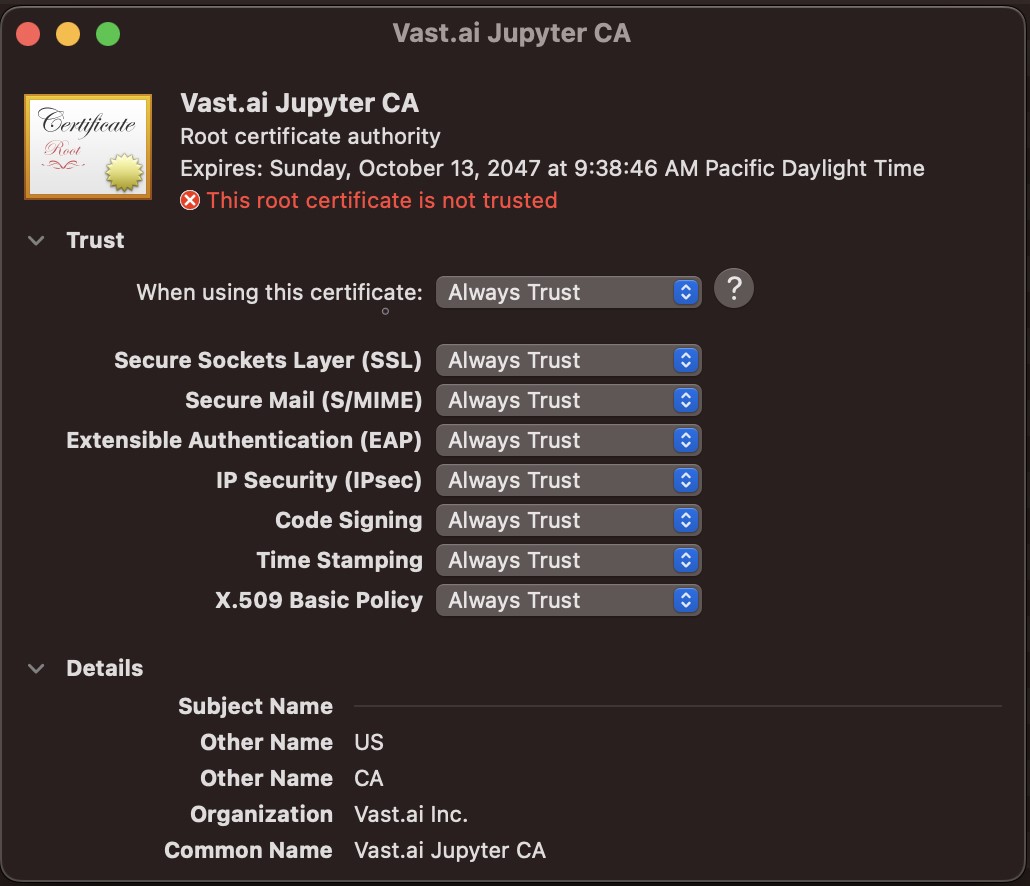

Follow the instructions related to adding a certificate to your browser if you need to when it asks you to "Setup Jupyter Direct HTTPS" and click "CONTINUE". Here's more information on the Jupyter direct HTTPS Launch Mode and Installing the TLS certificate: [Jupyter](/documentation/instances/jupyter).

|

||

|

||

<Frame caption="Updated Jupyter Direct Https Continue">

|

||

|

||

</Frame>

|

||

|

||

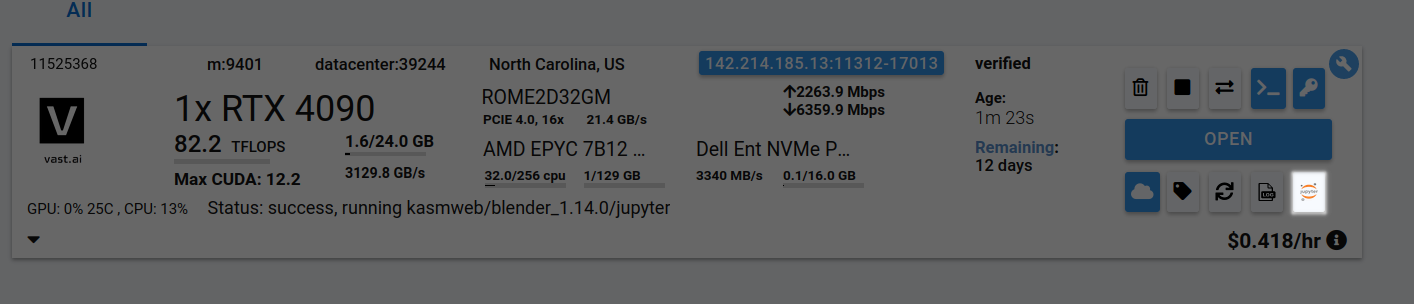

## Step 6 - Click the Open Button or Jupyter Notebook button to open Jupyter Notebook

<Frame caption="Jupyter Notebook Button">

</Frame>

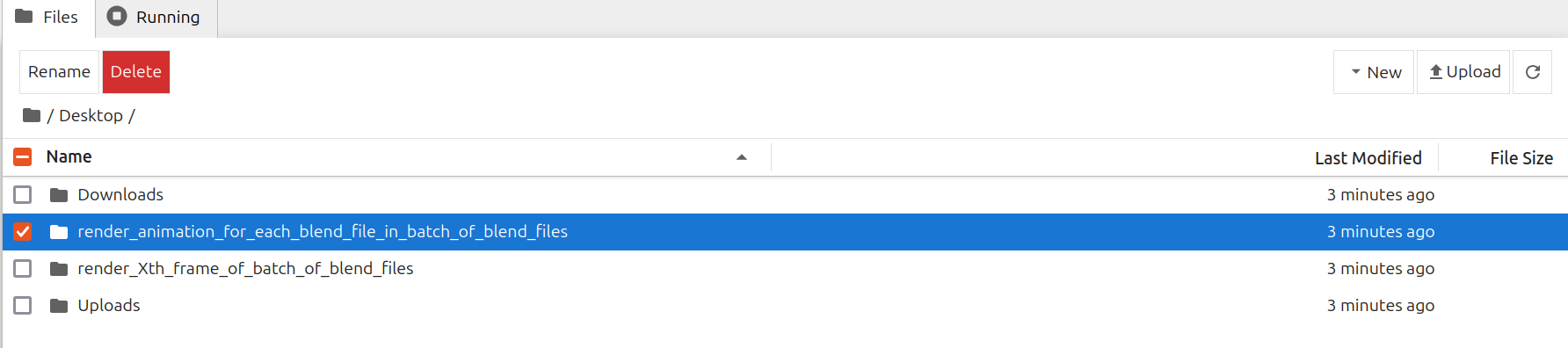

## Step 7 - To Render Animation For Each Blend File In Batch Of Blend Files

|

||

|

||

If you want to render a respective animation for each blend file in a batch of blend files, follow the following steps.

|

||

|

||

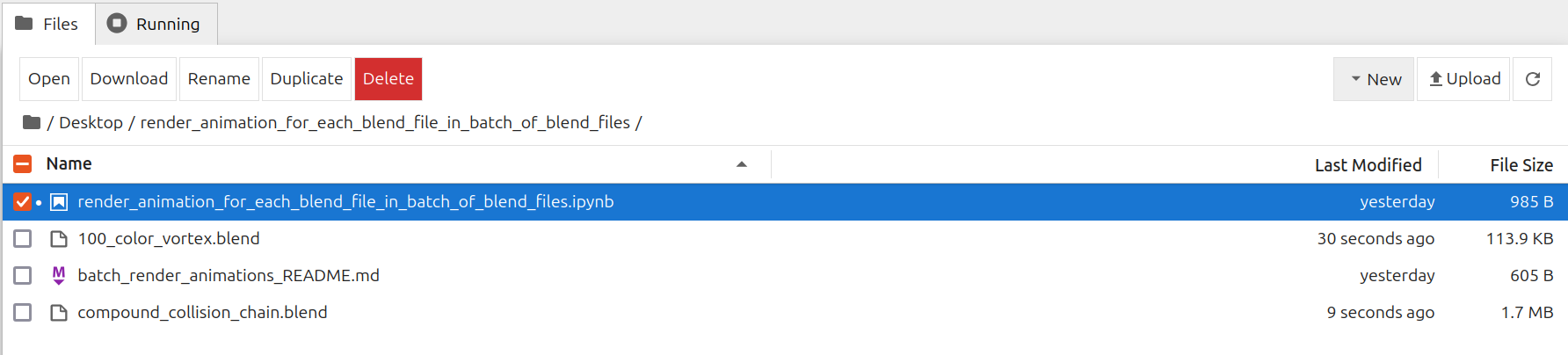

Go to /Desktop/render\_animation\_for\_each\_blend\_file\_in\_batch\_of\_blend\_files/ folder in Jupyter Notebook

|

||

|

||

<Frame caption="Go Render Animation For Batch Folder">

|

||

|

||

</Frame>

|

||

|

||

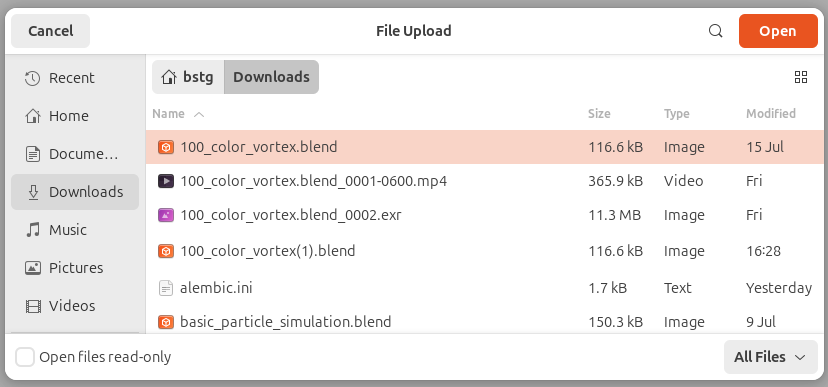

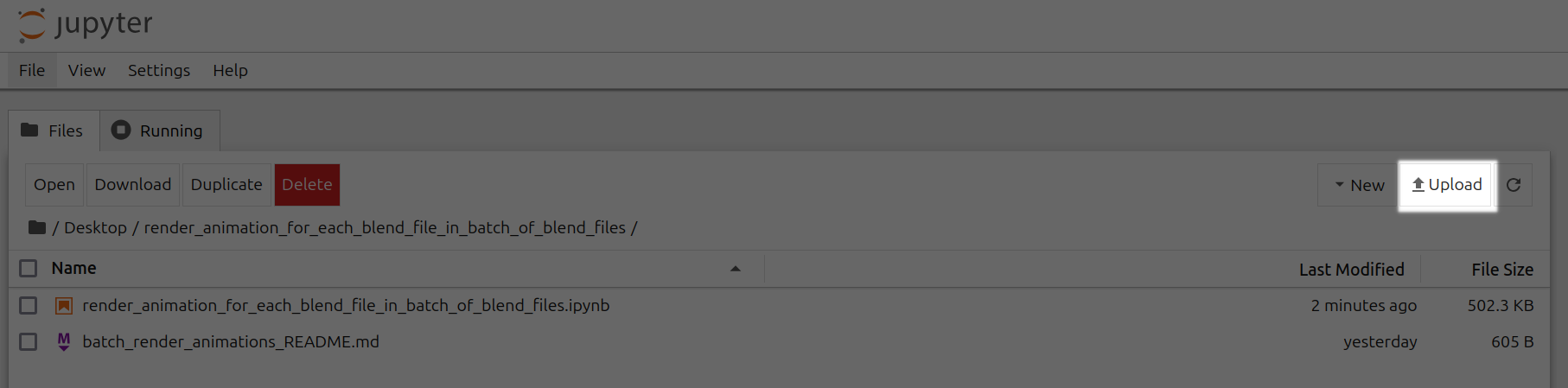

Upload .blend files to /Desktop/render\_animation\_for\_each\_blend\_file\_in\_batch\_of\_blend\_files/ folder

|

||

|

||

<Frame caption="Upload 100 Color Vortex">

|

||

|

||

</Frame>

|

||

|

||

<br />

|

||

|

||

<Frame caption="Upload Render Animation Batch Highlighted">

|

||

|

||

</Frame>

|

||

|

||

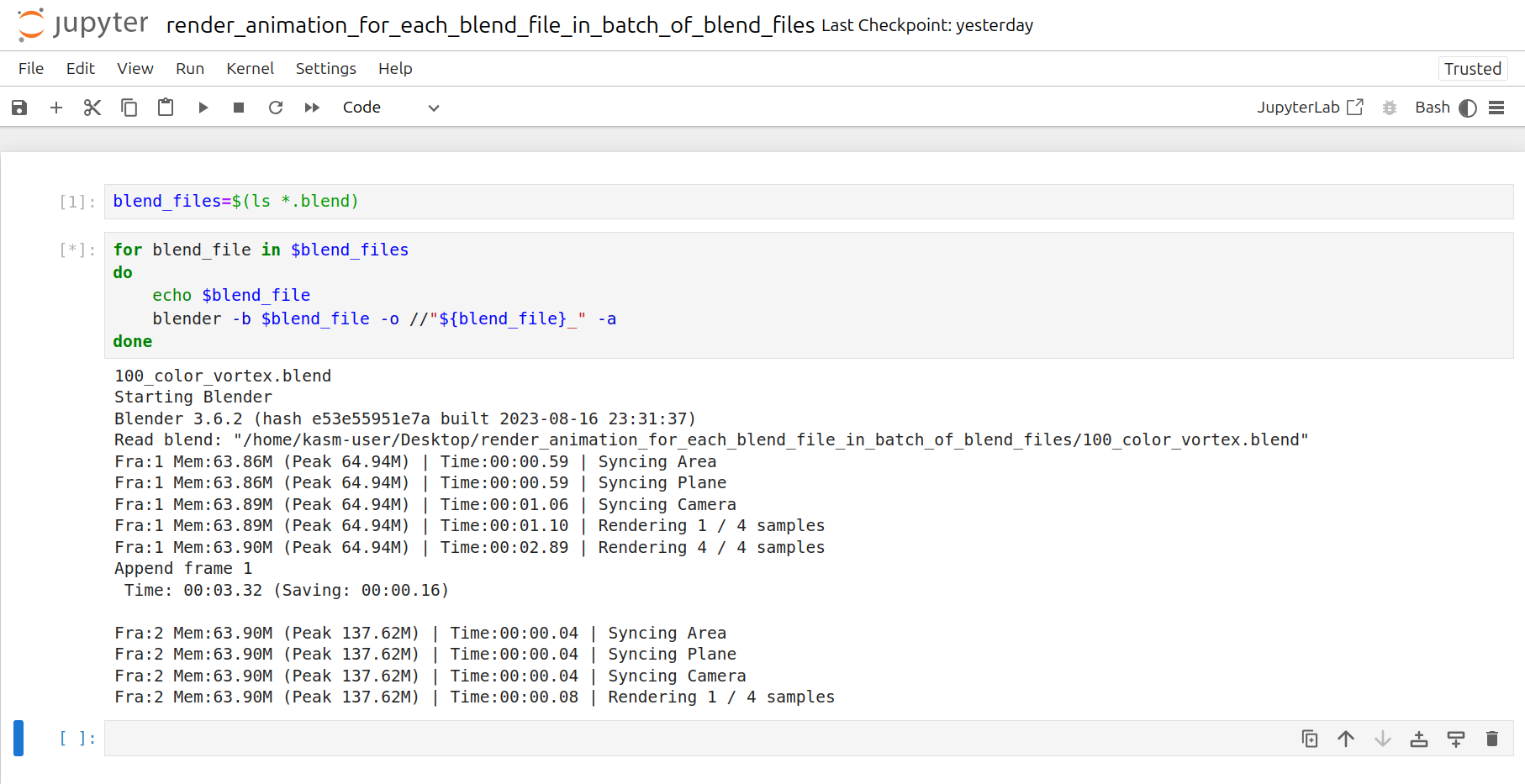

Open render\_animation\_for\_each\_blend\_file\_in\_batch\_of\_blend\_files.ipynb

|

||

|

||

<Frame caption="Open Render Animation For Batch Folder Notebook">

|

||

|

||

</Frame>

|

||

|

||

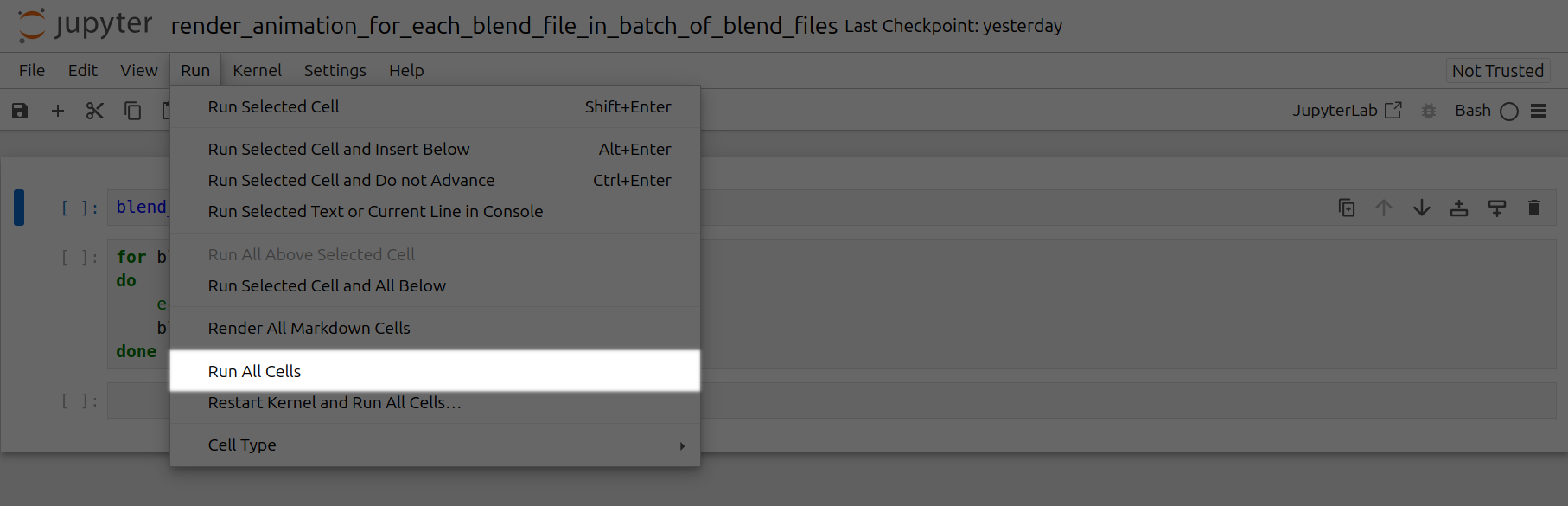

Click the Run tab and click Run All Cells

|

||

|

||

<Frame caption="Show Rendering Animations">

|

||

|

||

</Frame>

|

||

|

||

<br />

|

||

|

||

<Frame caption="Click Run All Cells Highlighted">

|

||

|

||

</Frame>

|

||

|

||

Now a corresponding animation will be rendered for each .blend file you have uploaded to this folder.

|

||

You can also close out your jupyter notebook tab in your browser and this notebook will keep running as long as your instance in Vast is running.

|

||

|

||

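The template's notebook code is not shown here, so this is an *assumed equivalent* built on Blender's standard command-line flags, not the notebook's actual implementation. `-b` runs Blender without a UI. `-o` sets the output path: `//` means relative to the .blend file, and `####` is replaced by the zero-padded frame number. `-a` renders the whole animation. Blender processes its arguments in order, so `-o` must come before `-a`. `animation_commands` is a hypothetical helper.

```python
from pathlib import Path
from typing import Iterable, List


def animation_commands(blend_files: Iterable[str], blender: str = "blender") -> List[List[str]]:
    """Return one Blender command line per .blend file that renders its full animation."""
    commands = []
    for path in sorted(blend_files):
        stem = Path(path).stem
        # -o before -a: Blender applies arguments in the order given.
        commands.append([blender, "-b", path, "-o", f"//{stem}_####", "-a"])
    return commands


# Usage sketch (not executed here): render every file in the batch folder in turn.
# import subprocess
# folder = Path("Desktop/render_animation_for_each_blend_file_in_batch_of_blend_files")
# for cmd in animation_commands(str(p) for p in folder.glob("*.blend")):
#     subprocess.run(cmd, check=True)
```

Running the files one after another keeps a single render on the GPU at a time, which avoids scenes competing for VRAM.
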
## Step 8 - To Render Animation For Xth Frame of Each Blend File In Batch Of Blend Files

|

||

|

||

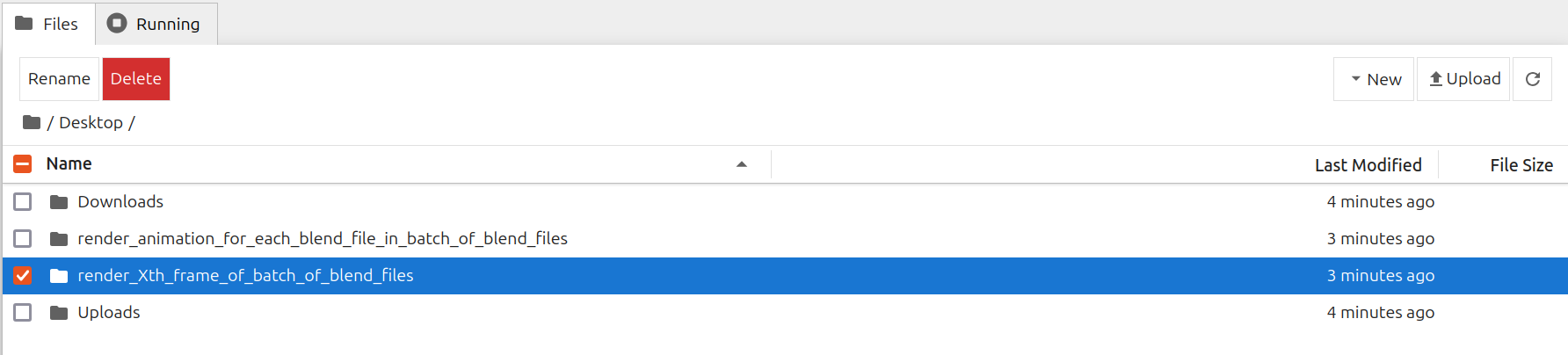

If you want to render a respective animation for the Xth frame of each blend file in a batch of blend files, follow the following steps.

|

||

|

||

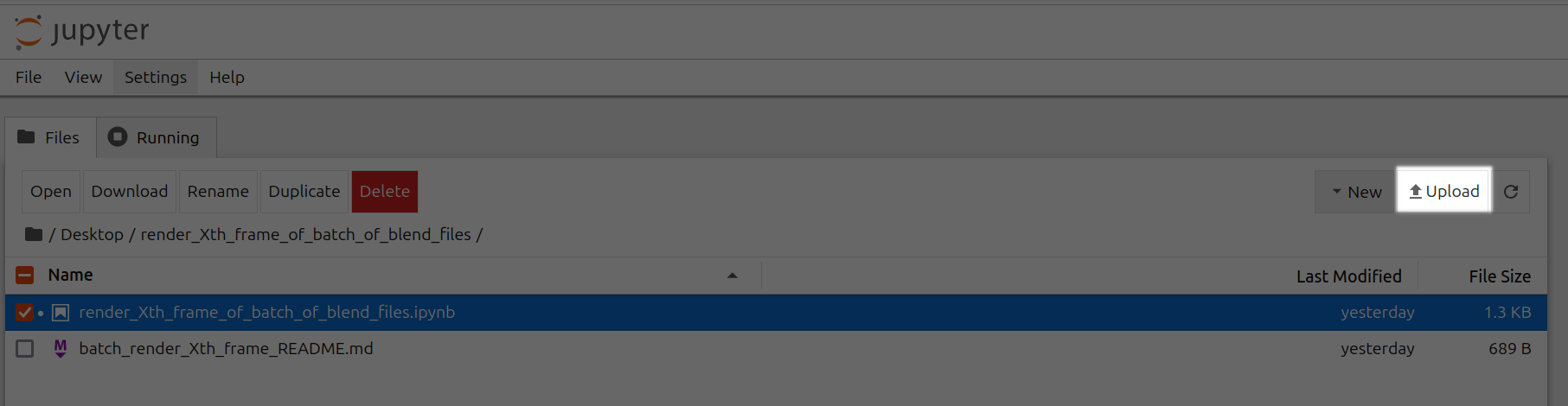

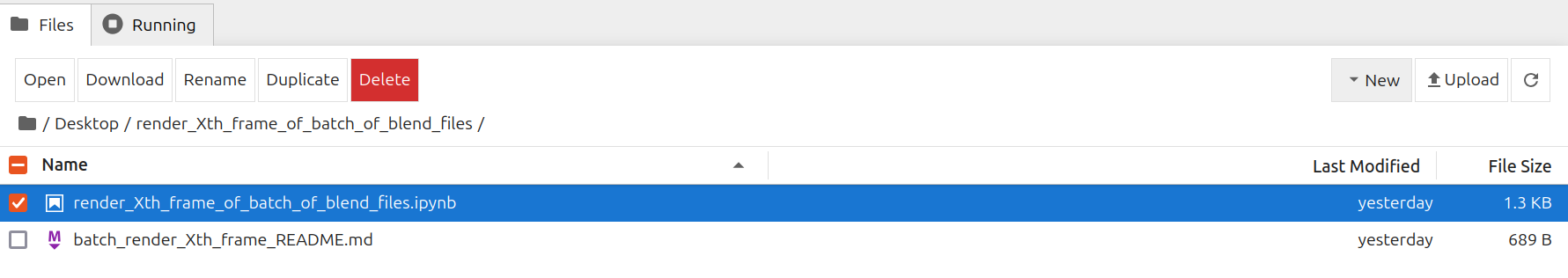

Go to /Desktop/render\_Xth\_frame\_of\_batch\_of\_blend\_files/ folder in Jupyter Notebook

|

||

|

||

<Frame caption="Go To Render Xth Frame For Batch Folder">

|

||

|

||

</Frame>

|

||

|

||

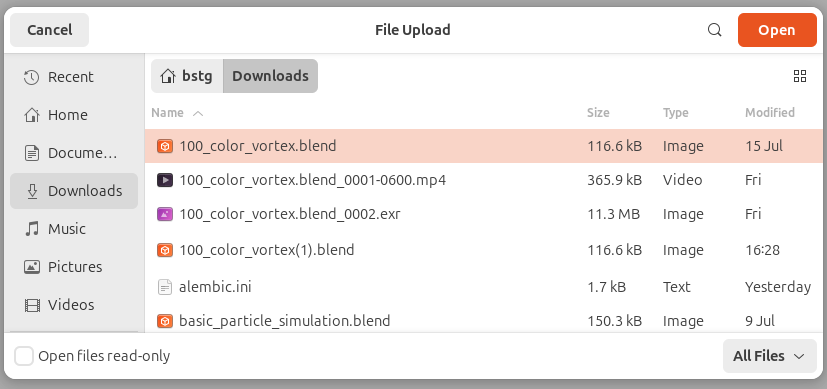

Upload .blend files to /Desktop/render\_Xth\_frame\_of\_batch\_of\_blend\_files/ folder

|

||

|

||

<Frame caption="Upload Blend Files For Xth Frame">

|

||

|

||

</Frame>

|

||

|

||

<br />

|

||

|

||

<Frame caption="Upload Xth Frame Highlighted">

|

||

|

||

</Frame>

|

||

|

||

Open render\_Xth\_frame\_of\_batch\_of\_blend\_files.ipynb

|

||

|

||

<Frame caption="Open Render Animation For Each Blend File In Batch Of Blend Files Ipynb">

|

||

|

||

</Frame>

|

||

|

||

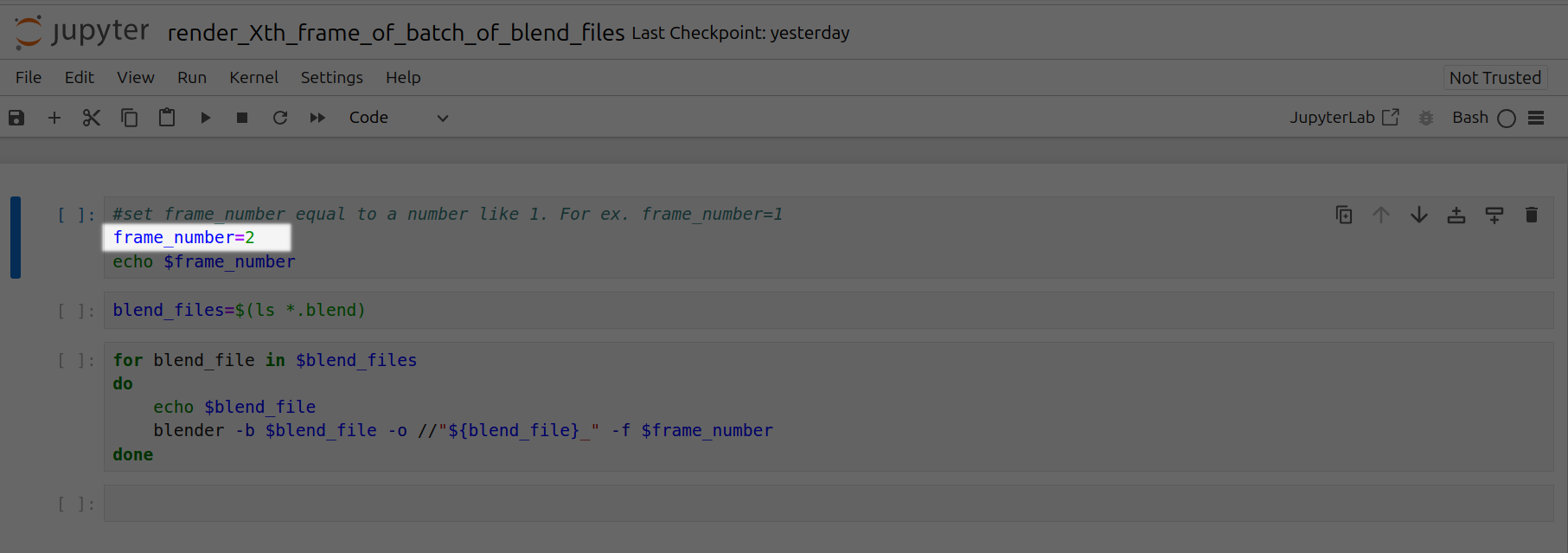

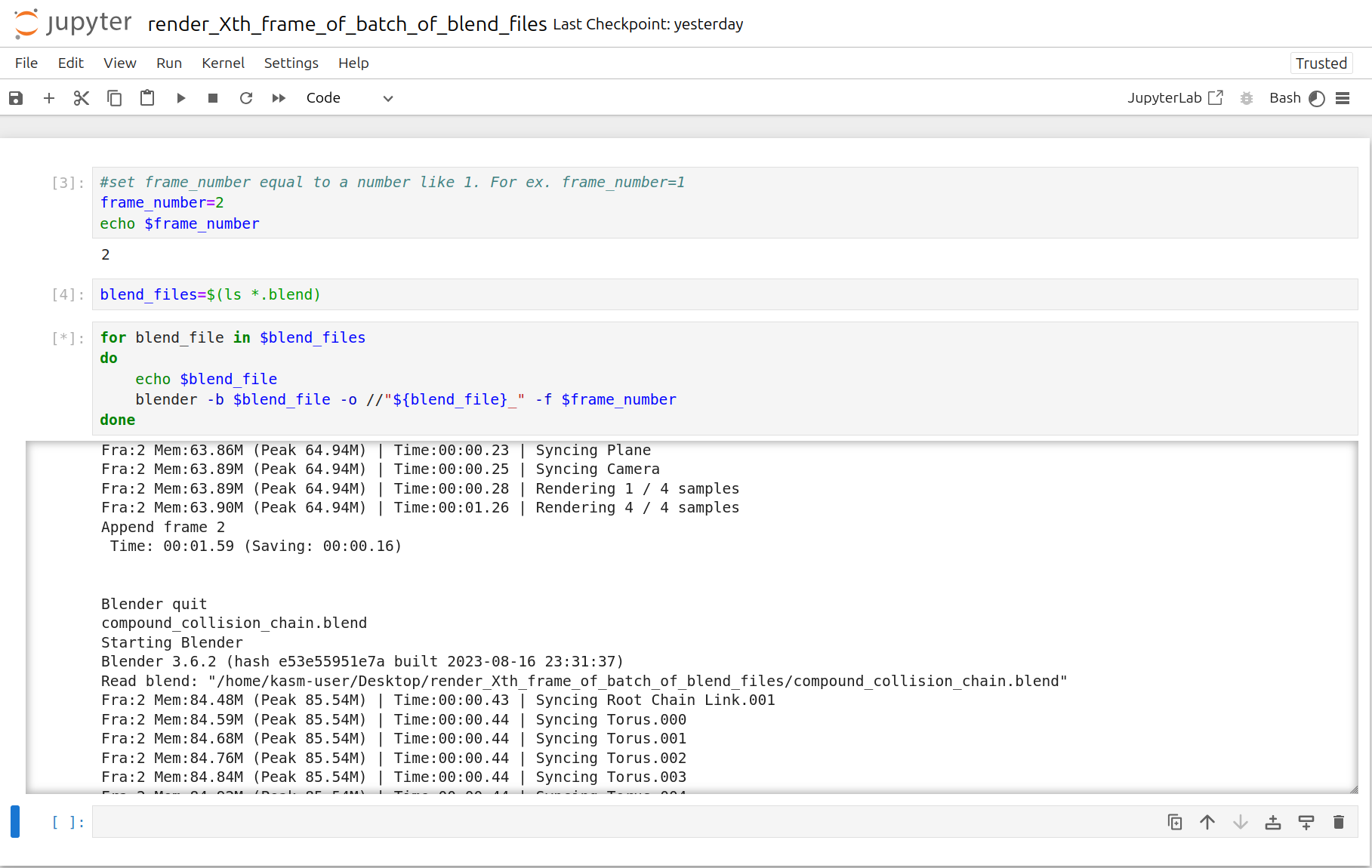

Set frame\_number equal to a particular frame number. For ex. frame\_number=2

|

||

|

||

<Frame caption="Set Frame Number 2">

|

||

|

||

</Frame>

|

||

|

||

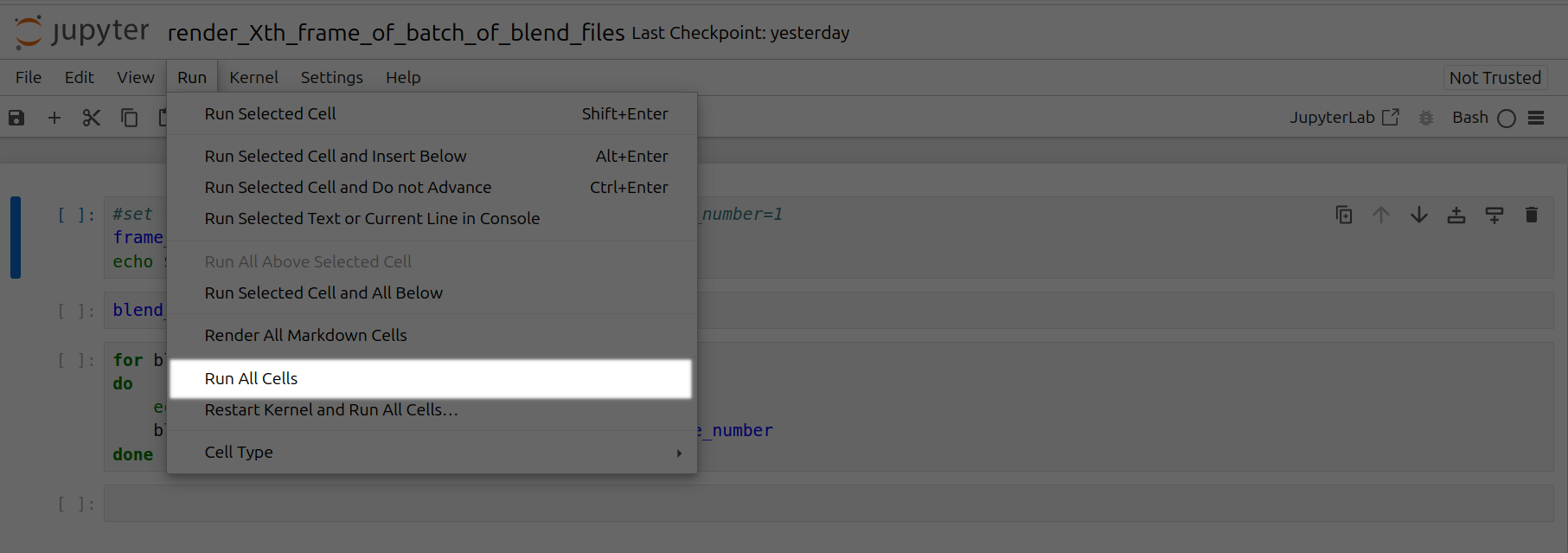

Click the Run tab and click Run All Cells

|

||

|

||

<Frame caption="Xth Frames Rendering">

|

||

|

||

</Frame>

|

||

|

||

<br />

|

||

|

||

<Frame caption="Run All Cells Xth Frame Highlighted">

|

||

|

||

</Frame>

|

||

|

||

Now a corresponding animation will be rendered for each Xth frame of each .blend file you have uploaded to this folder.

|

||

You can also close out your jupyter notebook tab in your browser and this notebook will keep running as long as your instance in Vast is running.

|

||

|

||

|

||

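As in Step 7, this is an *assumed equivalent* using Blender's command-line flags rather than the notebook's own code. `-f N` renders only frame `N`. Like `-a`, it must come after `-o`. `frame_commands` is a hypothetical helper, and its `frame_number` argument plays the role of the notebook's `frame_number` variable.

```python
from pathlib import Path
from typing import Iterable, List


def frame_commands(blend_files: Iterable[str], frame_number: int, blender: str = "blender") -> List[List[str]]:
    """Return one Blender command line per .blend file that renders a single frame."""
    return [
        # -b: no UI; -o: output path relative to the .blend file; -f: render one frame.
        [blender, "-b", path, "-o", f"//{Path(path).stem}_frame_####", "-f", str(frame_number)]
        for path in sorted(blend_files)
    ]
```
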
# Blender in the Cloud

Source: https://docs.vast.ai/blender-in-the-cloud

Blender is a free, open source 3D creation suite. It can be used to create animated films, visual effects, art, 3D-printed models, motion graphics, interactive 3D applications, virtual reality, and video games. It supports the entirety of the 3D pipeline: modeling, rigging, animation, simulation, rendering, compositing and motion tracking, even video editing and game creation. You can find more information about Blender at [blender.org](https://www.blender.org/).

Blender users include animators, game developers, 3D modelers, visual effects artists, architects, and product designers.

GPUs can speed up rendering in Blender.

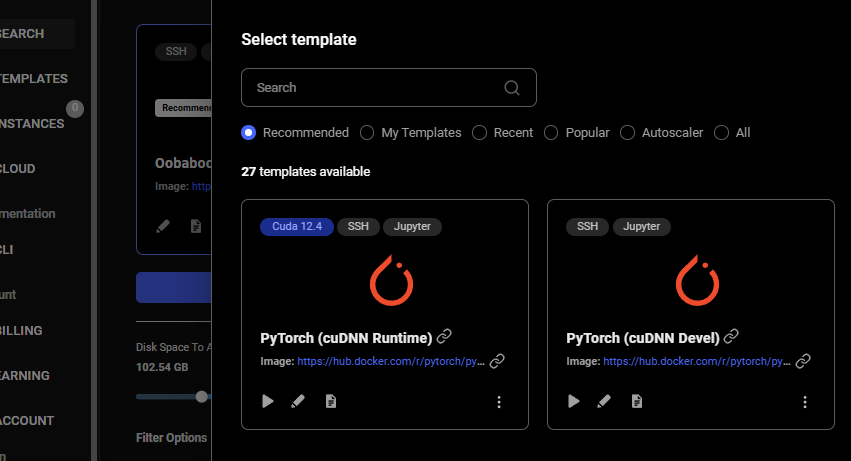

## Step 1 - Open Blender in the Cloud Template

Click this link, [Blender in the Cloud Template](https://cloud.vast.ai?ref_id=142678\&template_id=5846e4535b1ff5db56024c1c0711a0ce), to select the kasmweb/blender in the cloud template.

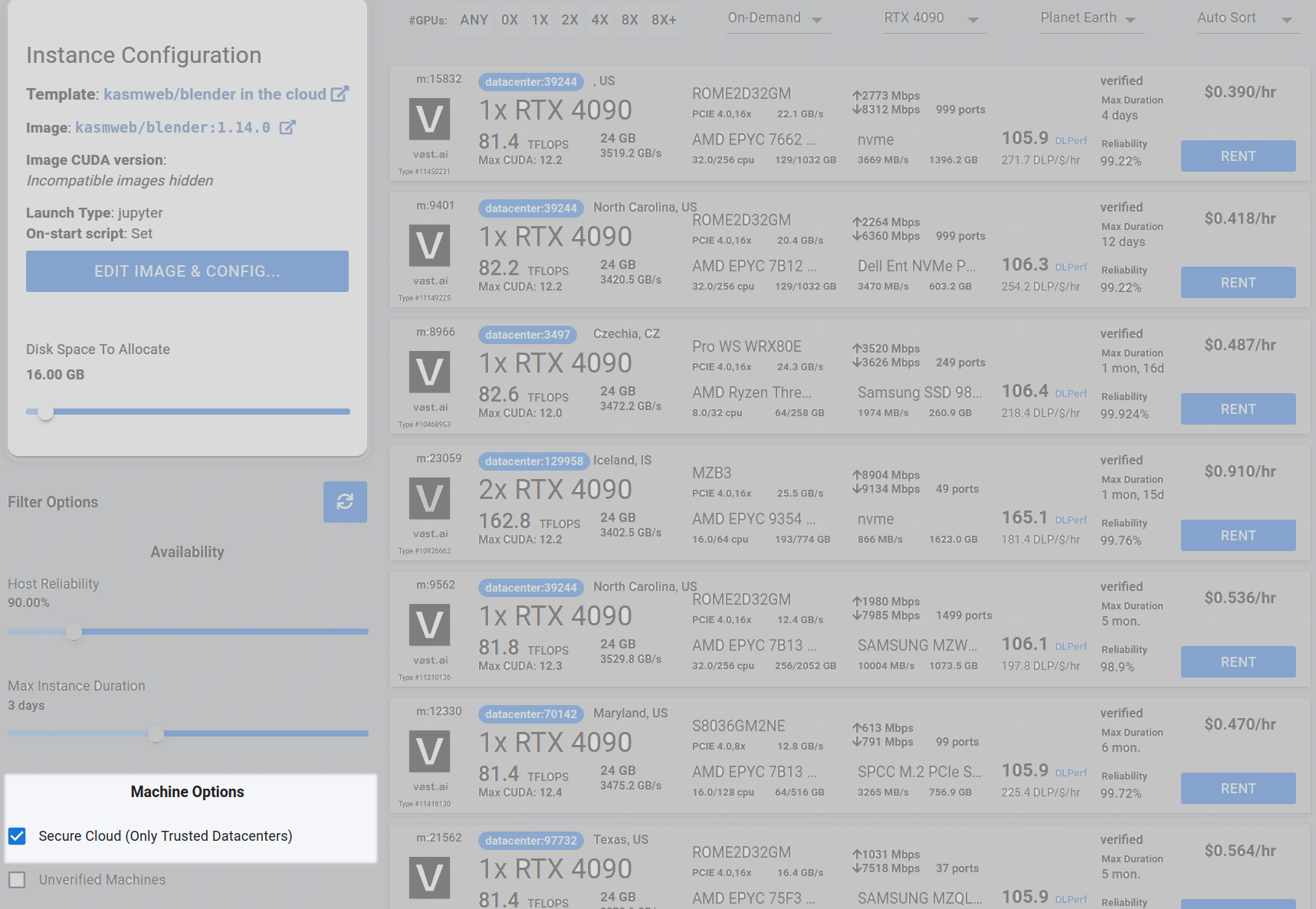

## Step 2 - \[Optional] Check the Secure Cloud box

You can narrow your search results to datacenter machines only if you want the assured security standards of our trusted datacenters.

<Frame caption="Highlighted Secure Cloud">

</Frame>

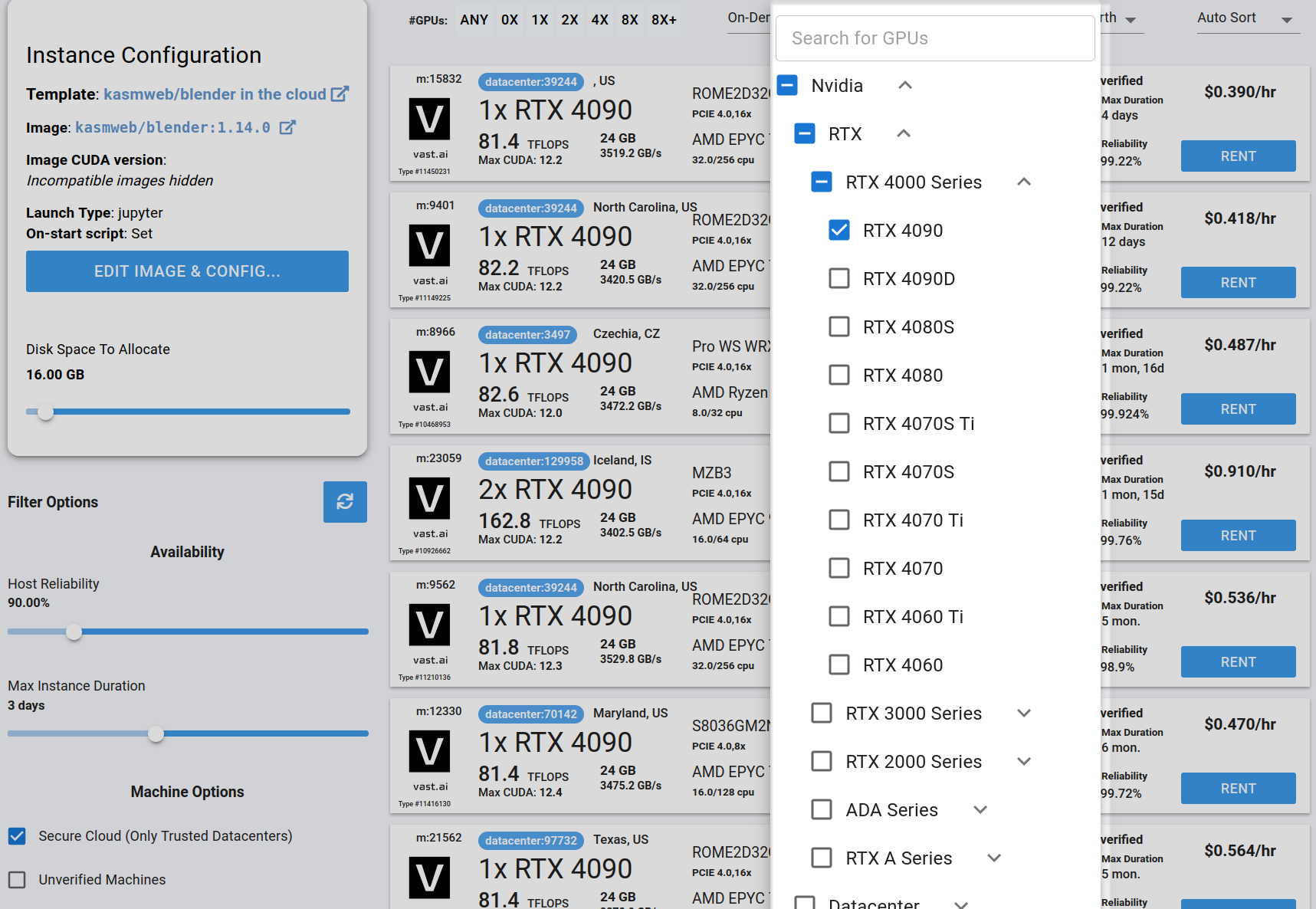

## Step 3 - Filter for a GPU that you feel best suits your needs

If you're unsure which GPU to choose, some benchmark data shows the NVIDIA GeForce RTX 4090 delivering the best render speed in Blender. You can compare other GPUs that work well with Blender in the [Blender GPU Benchmarks](https://opendata.blender.org/benchmarks/query/?group_by=device_name\&blender_version=3.6.0). You can also find other options by searching on Google or asking ChatGPT.

At the time of writing, the template linked above runs Blender 3.6.2.

Go to the GPUs filter and check the box for RTX 4090 or another GPU.

For example:

<Frame caption="Highlighted Rtx 4090 Filter Pic">

</Frame>

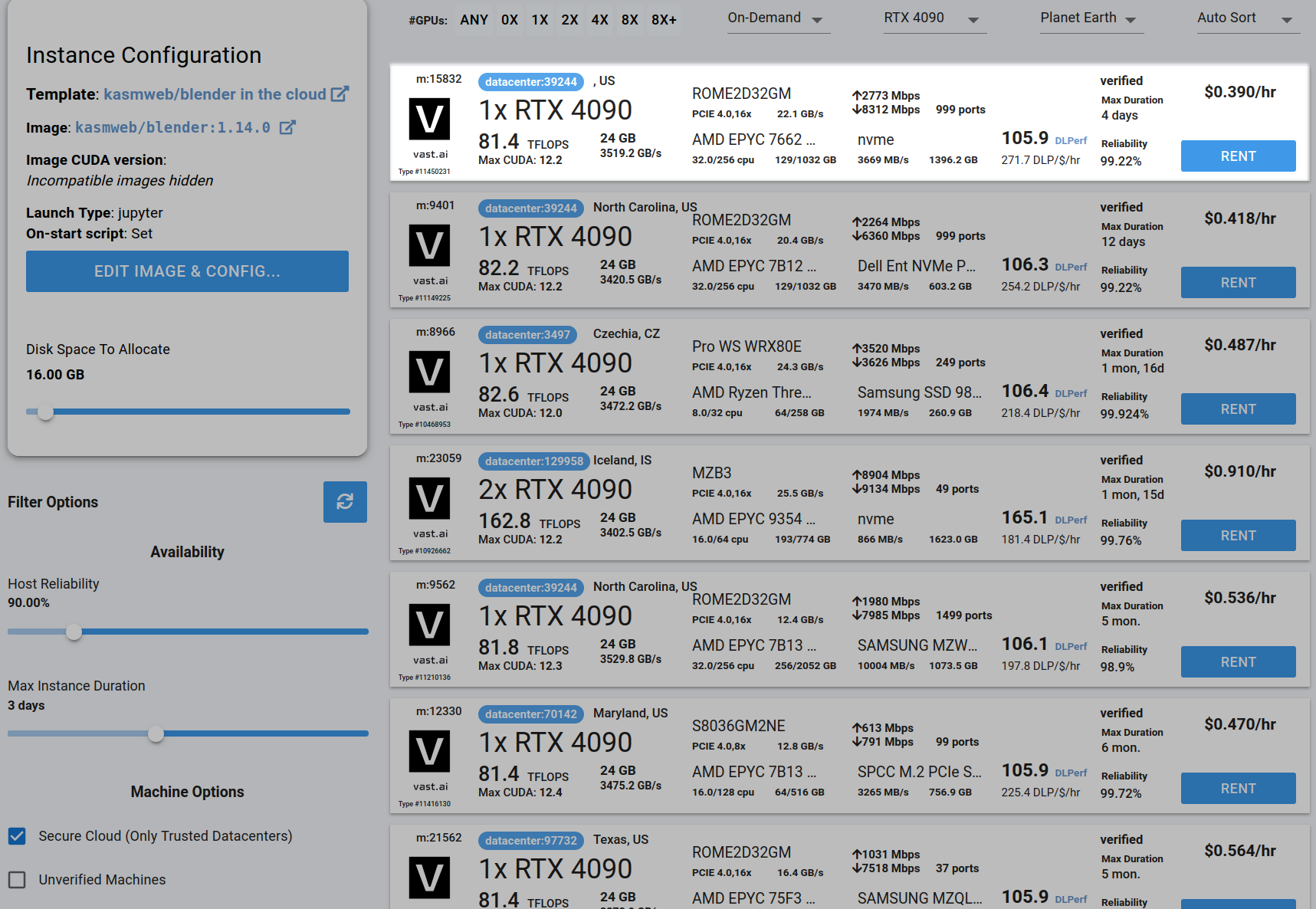

## Step 4 - Choose a GPU by Clicking "RENT"

Choose a GPU that meets your budget, desired reliability %, and other constraints by clicking "RENT". By default, GPUs are sorted by a proprietary algorithm that aims to show users the best-value machines first.

You can filter GPUs further to match your requirements.

<Frame caption="Highlighted Rent">

</Frame>

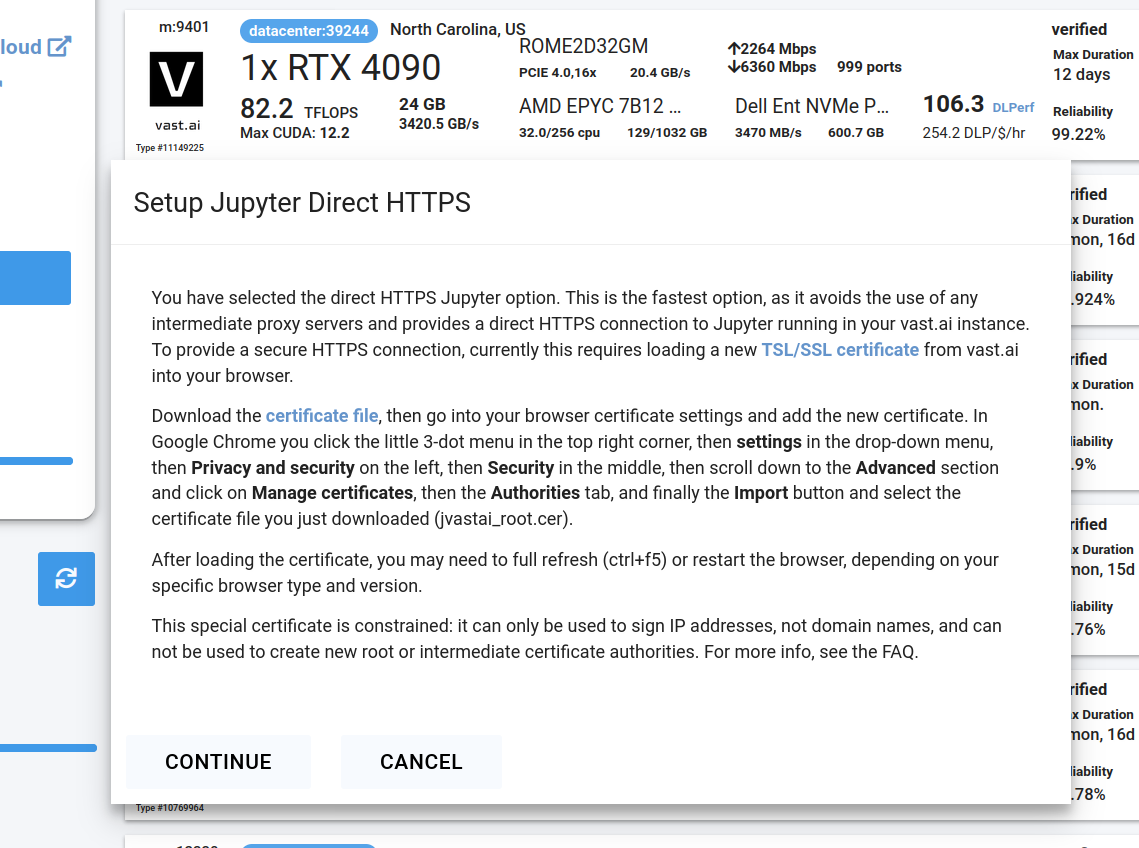

## Step 5 - Use Jupyter Direct HTTPS Launch Mode

When you are prompted to "Setup Jupyter Direct HTTPS", follow the instructions for adding a certificate to your browser if needed, then click "CONTINUE". For more information on the Jupyter Direct HTTPS launch mode and installing the TLS certificate, see [Jupyter](/documentation/instances/jupyter).

<Frame caption="Updated Jupyter Direct Https Continue">

</Frame>

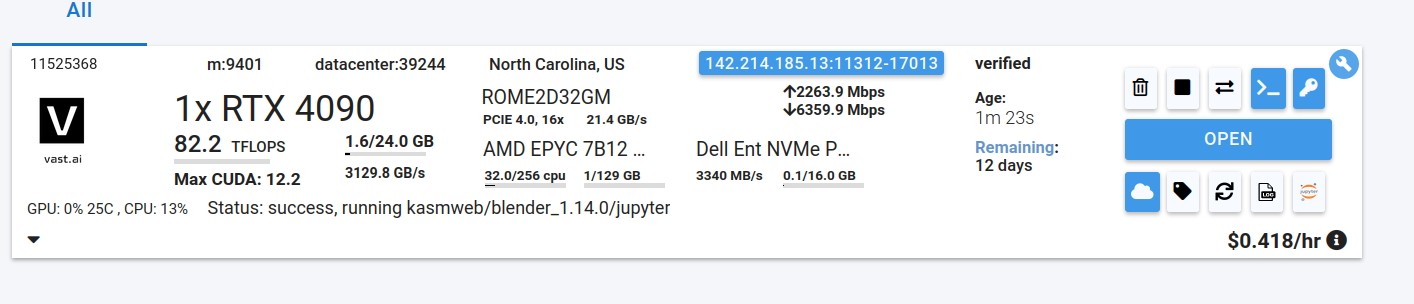

## Step 6 - Open Blender

|

||

|

||

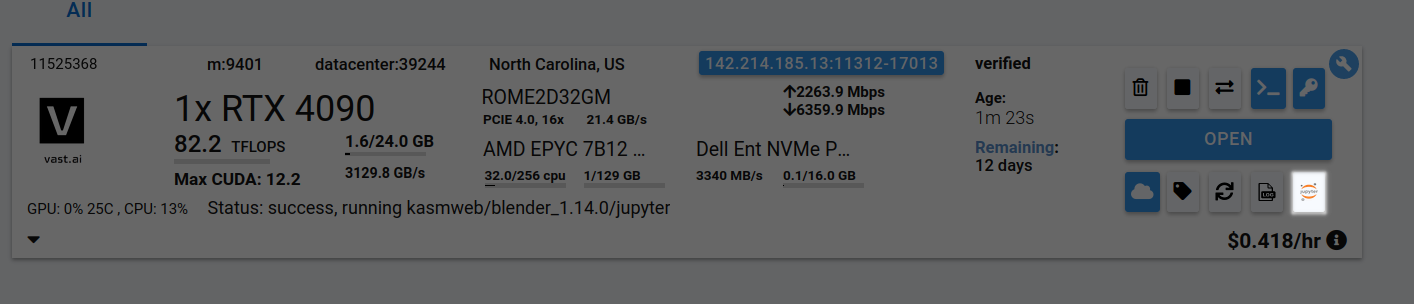

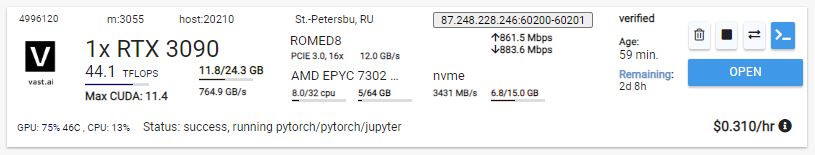

Go to the Instances tab to see your instance being created with it "Creating". When the message on the blue button changes to "Open", click on Open to open Blender.

|

||

|

||

<Frame caption="Original Open Jupyter Notebook">

|

||

|

||

</Frame>

|

||

|

||

Here's more info about instances at Vast if you need to reference it: [Instances Guide](/documentation/instances/managing-instances)

|

||

|

||

If you see an error that says something like "'clipboard-read' is not a valid value for enumeration PermissionName", please close that window.

|

||

|

||

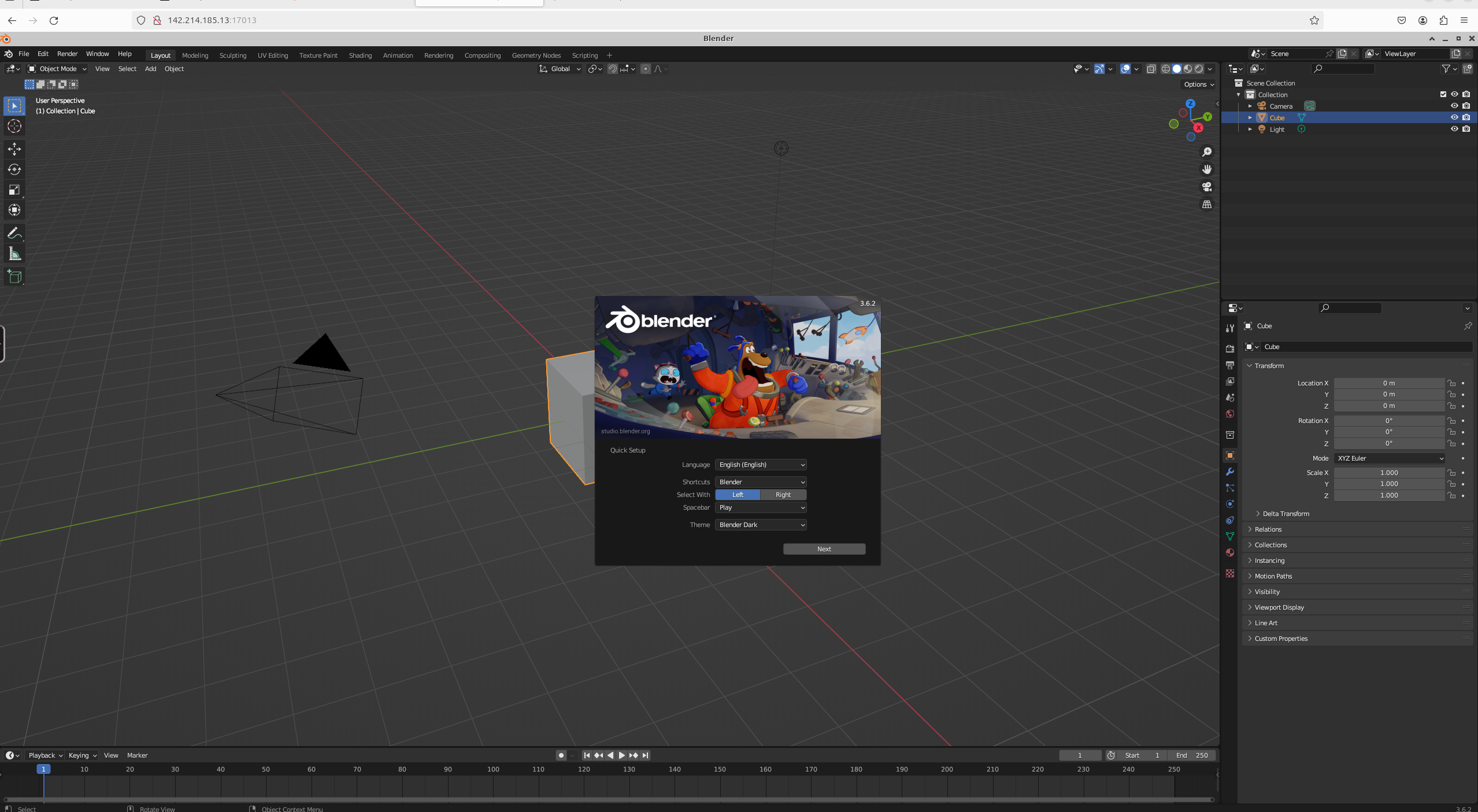

You should now see Blender!

|

||

|

||

<Frame caption="Blender In The Cloud">

|

||

|

||

</Frame>

|

||

|

||

## Step 7 - Upload .blend file(s) through Jupyter Notebook

|

||

|

||

Click the Jupyter Notebook button to open Jupyter Notebook.

|

||

|

||

<Frame caption="Jupyter Notebook Button">

|

||

|

||

</Frame>

|

||

|

||

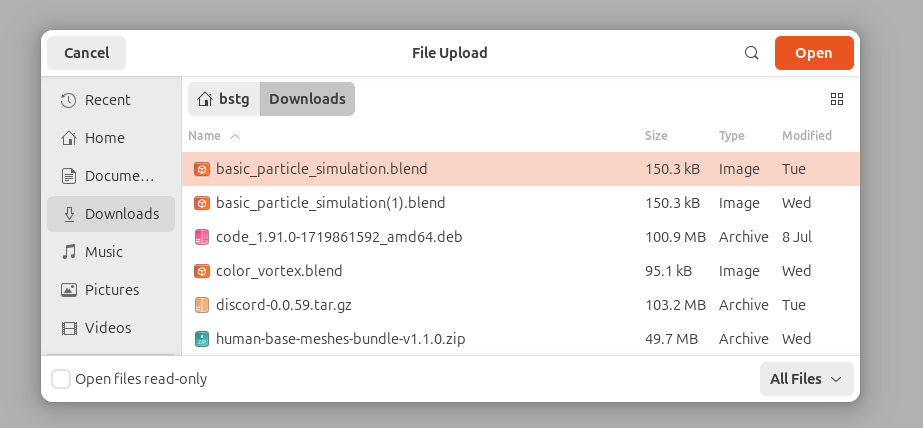

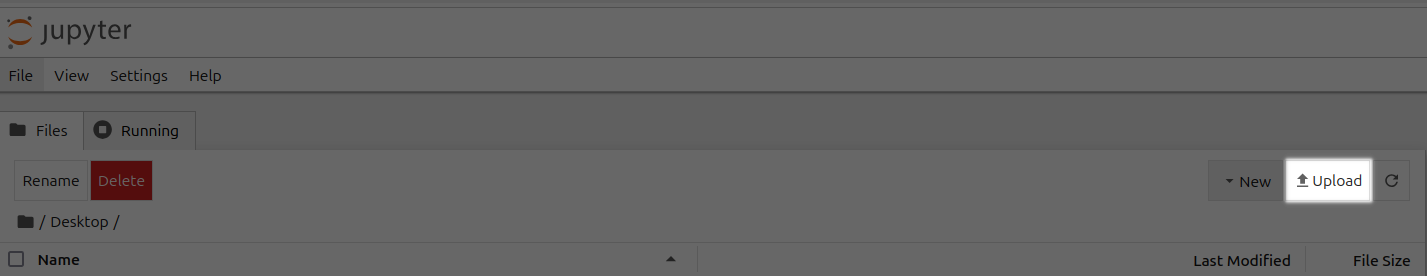

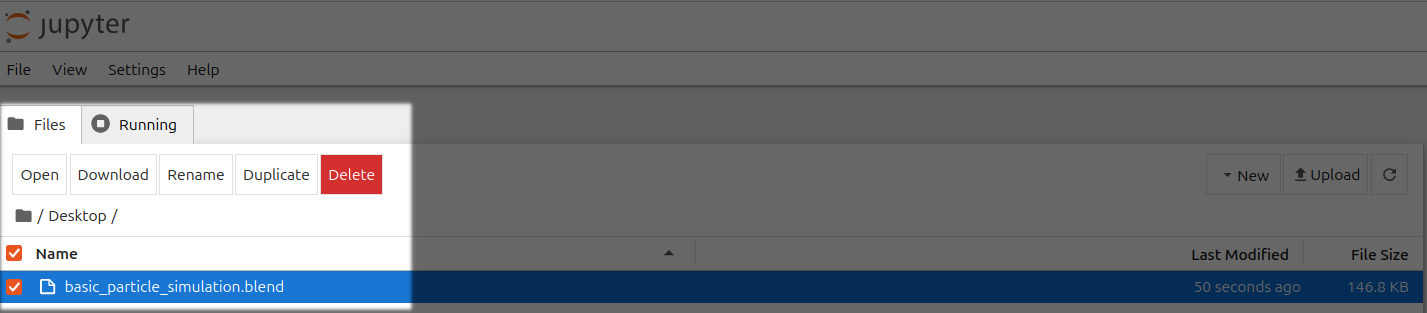

Go to your Jupyter Notebook, click the upload button on the top right, and upload one of your .blend files from your local computer to a directory in the Jupyter Notebook.

|

||

In this case, I'm uploading basic\_particle\_simulation.blend to the Desktop directory.

|

||

|

||

<Frame caption="Original Upload Blend File To Jupyter">

|

||

|

||

</Frame>

|

||

|

||

<br />

|

||

|

||

<Frame caption="Highlighted Upload">

|

||

|

||

</Frame>

|

||

|

||

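As an alternative to the upload button, you can send a file through the Jupyter Server Contents REST API (`PUT /api/contents/<path>`). This API is part of Jupyter itself, not Vast.ai. The sketch below only builds the request. The server URL and token are assumptions you would copy from the Jupyter link your instance opens, and `build_upload_request` is a hypothetical helper. The whole file is base64-encoded in memory, so this approach suits moderately sized .blend files.

```python
import base64
import json
import urllib.request
from urllib.parse import quote


def build_upload_request(server_url: str, token: str, remote_path: str, data: bytes) -> urllib.request.Request:
    """Build (but do not send) a Jupyter Contents API request saving data at remote_path."""
    body = {
        "type": "file",
        "format": "base64",  # binary files such as .blend must be base64-encoded
        "content": base64.b64encode(data).decode("ascii"),
    }
    return urllib.request.Request(
        f"{server_url.rstrip('/')}/api/contents/{quote(remote_path)}",
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
        headers={
            "Authorization": f"token {token}",  # Jupyter token authentication
            "Content-Type": "application/json",
        },
    )
```

To upload, read the file in binary mode (`open("basic_particle_simulation.blend", "rb").read()`), build the request with `remote_path="Desktop/basic_particle_simulation.blend"`, and pass it to `urllib.request.urlopen`. If your machine does not trust the instance's TLS certificate (see Step 5), certificate verification will fail.
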
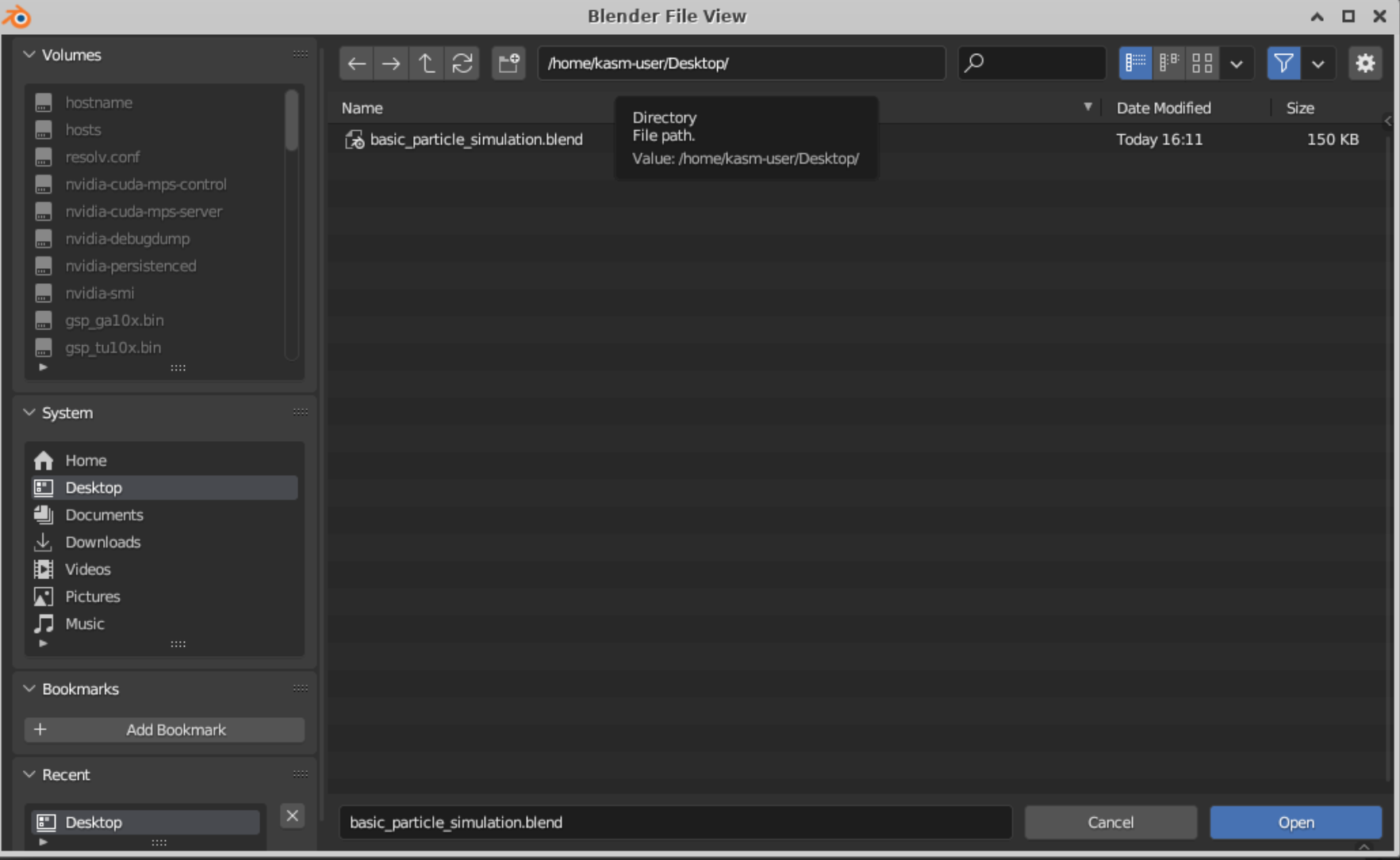

## Step 8 - Open .blend file in Blender

Go back to the tab where Blender is running, click File, then Open, then find your file and open it. In this example, `basic_particle_simulation.blend` is in the Desktop directory because that's where I uploaded it in Jupyter Notebook.

<Frame caption="Open File">

</Frame>

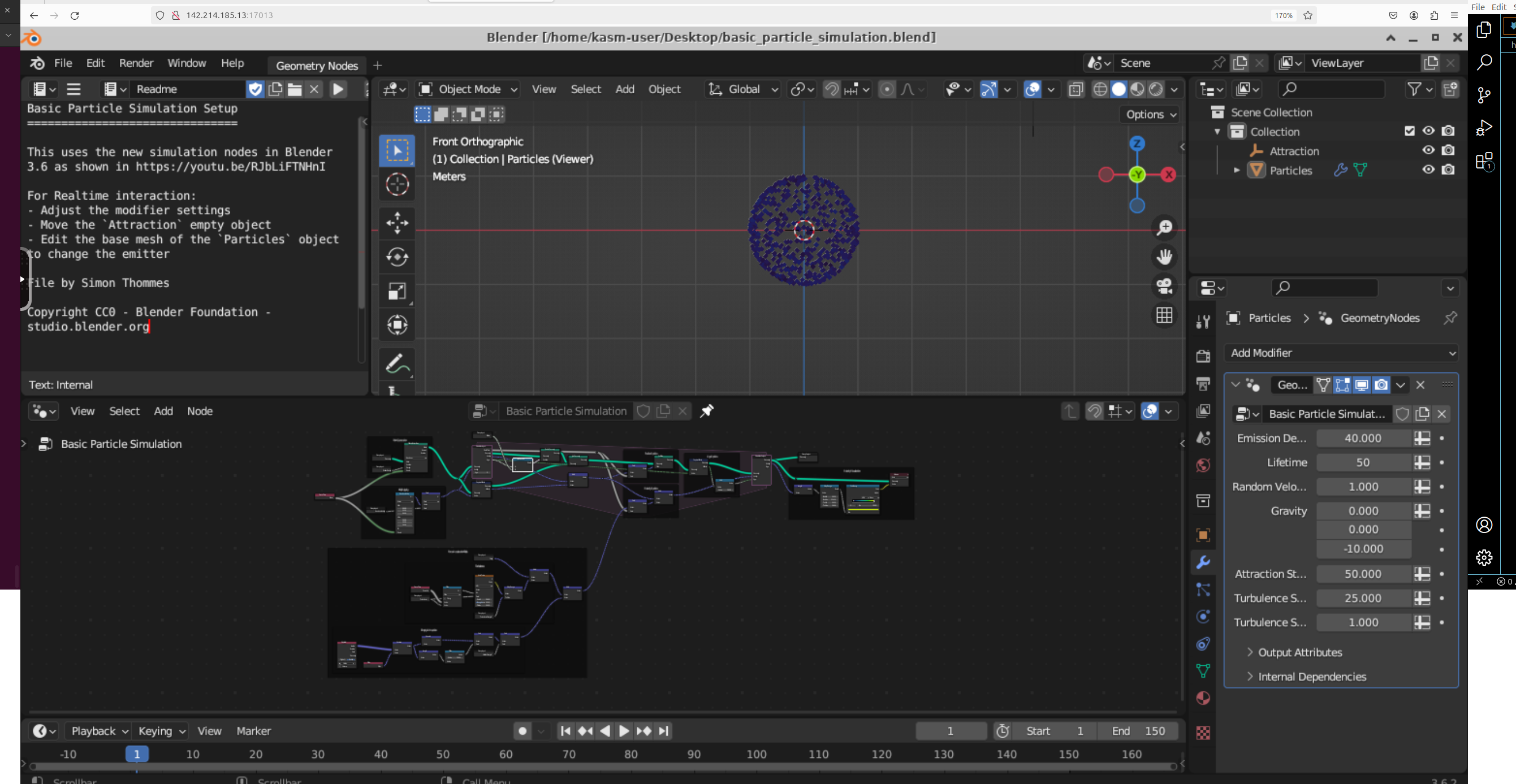

## Step 9 - Work on Your .blend file in Blender!

There you go! You should now be able to see your .blend file in Blender in the Cloud using Vast.

<Frame caption="Particle Simulation Blend">

</Frame>

## Step 10 - Download files as needed from Jupyter Notebook

You can save files in Blender, then download them by selecting the file(s) in Jupyter Notebook and clicking the Download button.

<Frame caption="Highlighted File To Download">

</Frame>

# Commands

Source: https://docs.vast.ai/cli/commands

<script
  type="application/ld+json"
  dangerouslySetInnerHTML={{
    __html: JSON.stringify({
      "@context": "https://schema.org",
      "@type": "TechArticle",
      "headline": "Vast.ai CLI Commands Reference",
      "description": "Complete reference documentation for all Vast.ai CLI commands including client commands for managing instances, host commands for machine management, and detailed usage examples.",
      "author": {
        "@type": "Organization",
        "name": "Vast.ai"
      },
      "datePublished": "2025-01-13",
      "dateModified": "2025-07-12",
      "articleSection": "CLI Reference",
      "keywords": ["CLI", "command line", "API", "GPU", "vast.ai", "reference", "documentation", "Python", "instances", "hosting"],
      "about": {
        "@type": "SoftwareApplication",
        "name": "Vast.ai CLI",
        "applicationCategory": "DeveloperApplication",
        "operatingSystem": ["Linux", "macOS", "Windows"],
        "programmingLanguage": "Python"
      }
    })
  }}
/>

# CLI Commands

```text Text theme={null}
usage: vastai [-h] [--url URL] [--retry RETRY] [--raw] [--explain] [--curl] [--api-key API_KEY] [--version] command ...

positional arguments:
  command               command to run. one of:
    help                print this help message
    attach ssh          Attach an ssh key to an instance. This will allow you to connect to the instance with the ssh key
    cancel copy         Cancel a remote copy in progress, specified by DST id
    cancel sync         Cancel a remote copy in progress, specified by DST id
    change bid          Change the bid price for a spot/interruptible instance
    clone volume        Clone an existing volume
    copy                Copy directories between instances and/or local
    cloud copy          Copy files/folders to and from cloud providers
    take snapshot       Schedule a snapshot of a running container and push it to your repo in a container registry
    create api-key      Create a new api-key with restricted permissions. Can be sent to other users and teammates
    create env-var      Create a new user environment variable
    create ssh-key      Create a new ssh-key
    create autogroup    Create a new autoscale group
    create endpoint     Create a new endpoint group
    create instance     Create a new instance
    create subaccount   Create a subaccount
    create team         Create a new team
    create team-role    Add a new role to your team
    create template     Create a new template
    create volume       Create a new volume
    delete api-key      Remove an api-key
    delete ssh-key      Remove an ssh-key
    delete scheduled-job
                        Delete a scheduled job
    delete autogroup    Delete an autogroup group
    delete endpoint     Delete an endpoint group
    delete env-var      Delete a user environment variable
    delete template     Delete a Template
    delete volume       Delete a volume
    destroy instance    Destroy an instance (irreversible, deletes data)
    destroy instances   Destroy a list of instances (irreversible, deletes data)
    destroy team        Destroy your team
    detach ssh          Detach an ssh key from an instance
    execute             Execute a (constrained) remote command on a machine
    get endpt-logs      Fetch logs for a specific serverless endpoint group
    invite member       Invite a team member
    label instance      Assign a string label to an instance
    launch instance     Launch the top instance from the search offers based on the given parameters
    logs                Get the logs for an instance
    prepay instance     Deposit credits into reserved instance
    reboot instance     Reboot (stop/start) an instance
    recycle instance    Recycle (destroy/create) an instance
    remove member       Remove a team member
    remove team-role    Remove a role from your team
    reports             Get the user reports for a given machine
    reset api-key       Reset your api-key (get new key from website)
    start instance      Start a stopped instance
    start instances     Start a list of instances
    stop instance       Stop a running instance
    stop instances      Stop a list of instances
    search benchmarks   Search for benchmark results using custom query
    search invoices     Search for benchmark results using custom query
    search offers       Search for instance types using custom query
    search templates    Search for template results using custom query
    search volumes      Search for volume offers using custom query
    set api-key         Set api-key (get your api-key from the console/CLI)
    set user            Update user data from json file
    ssh-url             ssh url helper
    scp-url             scp url helper
    show api-key        Show an api-key
    show api-keys       List your api-keys associated with your account
    show audit-logs     Display account's history of important actions
    show scheduled-jobs Display the list of scheduled jobs
    show ssh-keys       List your ssh keys associated with your account
    show autogroups     Display user's current autogroup groups
    show endpoints      Display user's current endpoint groups
    show connections    Display user's cloud connections
    show deposit        Display reserve deposit info for an instance
    show earnings       Get machine earning history reports
    show env-vars       Show user environment variables
    show invoices       Get billing history reports
    show instance       Display user's current instances
    show instances      Display user's current instances
    show ipaddrs        Display user's history of ip addresses
    show user           Get current user data
    show subaccounts    Get current subaccounts
    show members        Show your team members
    show team-role      Show your team role
    show team-roles     Show roles for a team
    show volumes        Show stats on owned volumes.
    create cluster      Create Vast cluster
    join cluster        Join Machine to Cluster
    delete cluster      Delete Cluster
    remove-machine-from-cluster
                        Removes machine from cluster
    show overlays       Show overlays associated with your account.
    create overlay      Creates overlay network on top of a physical cluster
    join overlay        Adds instance to an overlay network
    delete overlay      Deletes overlay and removes all of its associated instances
    show clusters       Show clusters associated with your account.
    transfer credit     Transfer credits to another account
    update autogroup    Update an existing autoscale group
    update endpoint     Update an existing endpoint group
    update env-var      Update an existing user environment variable
    update instance     Update recreate an instance from a new/updated template
    update team-role    Update an existing team role
    update template     Update an existing template
    update ssh-key      Update an existing ssh key
    cancel maint        [Host] Cancel maint window
    cleanup machine     [Host] Remove all expired storage instances from the machine, freeing up space
    delete machine      [Host] Delete machine if the machine is not being used by clients. host jobs on their own machines are disregarded and machine is force deleted.
    list machine        [Host] list a machine for rent
    list machines       [Host] list machines for rent
    list volume         [Host] list disk space for rent as a volume on a machine
    list volumes        [Host] list disk space for rent as a volume on machines
    unlist volume       [Host] unlist volume offer
    remove defjob       [Host] Delete default jobs
    set defjob          [Host] Create default jobs for a machine
    set min-bid         [Host] Set the minimum bid/rental price for a machine
    schedule maint      [Host] Schedule upcoming maint window
    show machine        [Host] Show hosted machines
    show machines       [Host] Show hosted machines
    show maints         [Host] Show maintenance information for host machines
    unlist machine      [Host] Unlist a listed machine
    self-test machine   [Host] Perform a self-test on the specified machine

options:
  -h, --help            show this help message and exit
  --url URL             server REST api url
  --retry RETRY         retry limit
  --raw                 output machine-readable json
  --explain             output verbose explanation of mapping of CLI calls to HTTPS API endpoints
  --curl                show a curl equivalency to the call
  --api-key API_KEY     api key. defaults to using the one stored in ~/.config/vastai/vast_api_key
  --version             show version

Use 'vast COMMAND --help' for more info about a command
```

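When you script the CLI, the global options above can be composed programmatically. The per-command help texts below list `--url`, `--raw`, and `--api-key` among each subcommand's options, so this sketch places them after the subcommand. `vastai_argv` and `run_vastai` are hypothetical helpers. `run_vastai` requires `vastai` on your `PATH`.

```python
import json
import subprocess
from typing import Any, List, Optional


def vastai_argv(args: List[str], api_key: Optional[str] = None,
                url: Optional[str] = None, raw: bool = True) -> List[str]:
    """Compose a vastai command line with some of the global options."""
    argv = ["vastai", *args]
    if raw:
        argv.append("--raw")            # machine-readable JSON output
    if api_key is not None:
        argv += ["--api-key", api_key]  # override the stored key
    if url is not None:
        argv += ["--url", url]          # alternate REST API server
    return argv


def run_vastai(args: List[str], **kwargs: Any) -> Any:
    # With raw=True (the default), stdout is parsed as JSON.
    out = subprocess.run(vastai_argv(args, **kwargs), check=True,
                         capture_output=True, text=True).stdout
    return json.loads(out) if kwargs.get("raw", True) else out
```

For example, `run_vastai(["show", "instances"])` returns your instances as Python objects instead of a formatted table.
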
# Client Commands

## cancel copy

Cancel a remote copy in progress, specified by DST id

```text Text theme={null}
usage: vastai cancel copy DST

positional arguments:
  dst                   instance_id:/path to target of copy operation.

options:
  -h, --help            show this help message and exit
  --url URL             server REST api url
  --retry RETRY         retry limit
  --raw                 output machine-readable json
  --explain             output verbose explanation of mapping of CLI calls to
                        HTTPS API endpoints
  --api-key API_KEY     api key. defaults to using the one stored in
                        ~/.vast_api_key

Use this command to cancel any/all current remote copy operations copying to a specific named instance, given by DST.

Examples:
 vastai cancel copy 12371

The first example cancels all copy operations currently copying data into instance 12371
```

## cancel sync

Cancel a remote copy in progress, specified by DST id

```text Text theme={null}
usage: vastai cancel sync DST

positional arguments:
  dst                   instance_id:/path to target of sync operation.

options:
  -h, --help            show this help message and exit
  --url URL             server REST api url
  --retry RETRY         retry limit
  --raw                 output machine-readable json
  --explain             output verbose explanation of mapping of CLI calls to
                        HTTPS API endpoints
  --api-key API_KEY     api key. defaults to using the one stored in
                        ~/.vast_api_key

Use this command to cancel any/all current remote cloud sync operations copying to a specific named instance, given by DST.

Examples:
 vastai cancel sync 12371

The first example cancels all copy operations currently copying data into instance 12371
```

## change bid

Change the bid price for a spot/interruptible instance

```text Text theme={null}
usage: vastai change bid id [--price PRICE]

positional arguments:
  id                    id of instance type to change bid

options:
  -h, --help            show this help message and exit
  --price PRICE         per machine bid price in $/hour
  --url URL             server REST api url
  --retry RETRY         retry limit
  --raw                 output machine-readable json
  --explain             output verbose explanation of mapping of CLI calls to
                        HTTPS API endpoints
  --api-key API_KEY     api key. defaults to using the one stored in
                        ~/.vast_api_key

Change the current bid price of instance id to PRICE.
If PRICE is not specified, then a winning bid price is used as the default.
```

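The optional `--price` flag is easy to get wrong when scripting. If you omit it, the CLI uses a winning bid price as the default, as described above. This sketch only adds the flag when a price is given, and it rejects non-positive prices. `change_bid_argv` is a hypothetical helper.

```python
from typing import List, Optional


def change_bid_argv(instance_id: int, price: Optional[float] = None) -> List[str]:
    """Build argv for `vastai change bid id [--price PRICE]`.

    Omitting price lets the CLI fall back to a winning bid price;
    an explicit price is the per-machine bid in $/hour.
    """
    argv = ["vastai", "change", "bid", str(instance_id)]
    if price is not None:
        if price <= 0:
            raise ValueError("price must be positive ($/hour)")
        argv += ["--price", str(price)]
    return argv
```
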
## cloud copy

Copy files/folders to and from cloud providers

```text Text theme={null}
usage: vastai cloud_copy SRC DST CLOUD_SERVICE INSTANCE_ID CLOUD_SERVICE_SELECTED TRANSFER

options:
  -h, --help            show this help message and exit
  --src SRC             path to source of object to copy.
  --dst DST             path to target of copy operation.
  --instance INSTANCE   id of the instance
  --connection CONNECTION
                        id of cloud connection on your account