868 KiB

Vastai-Api - Llms-Txt

Pages: 283

Workergroup Parameters

URL: llms-txt#workergroup-parameters

Contents:

- gpu_ram

- launch_args

- search_params

- template_hash

- template_id

- test_workers

The following parameters can be specified specifically for a Workergroup and override Endpoint parameters. The Endpoint parameters will continue to apply for other Workergroups contained in it, unless specifically set.

- min_load

- target_util

- cold_mult

The parameters below are specific to only Workergroups, not Endpoints.

The amount of GPU memory (VRAM) in gigabytes that your model or workload requires to run. This parameter tells the serverless engine how much GPU memory your model needs.

If not specified during workergroup creation, the default value is 24.

A command-line style string containing additional parameters for instance creation that will be parsed and applied when the serverless engine creates new workers. This allows you to customize instance configuration beyond what's specified in templates.

There is no default value for launch_args.

A query string, list, or dictionary that specifies the hardware and performance criteria for filtering GPU offers in the vast.ai marketplace. It uses a simple query syntax to define requirements for the machines that your Workergroup will consider when searching for workers to create.

There is no default value for search_params. To see all available search filters, see the CLI docs here.

A unique hexadecimal identifier that references a pre-configured template containing all the configuration needed to create instances. Templates are comprehensive specifications that include the Docker image, environment variables, onstart scripts, resource requirements, and other deployment settings.

There is no default value for template_hash.

A numeric (integer) identifier that uniquely references a template in the Vast.ai database. This is an alternative way to reference the same template that template_hash points to, but using the template's database primary key instead of its hash string.

There is no default value for template_id.

The number of different physical machines that a Workergroup should test during its initial "exploration" phase to gather performance data before transitioning to normal demand-based scaling. The Worker Group remains in "exploring" mode until it has successfully tested at least floor(test_workers / 2) machines.

If not specified during workergroup creation, the default value is 3.

delete endpoint

URL: llms-txt#delete-endpoint

Source: https://docs.vast.ai/api-reference/serverless/delete-endpoint

api-reference/openapi.json delete /api/v0/endptjobs/{id}/ Deletes an endpoint group by ID. Associated workergroups will also be deleted.

CLI Usage: vastai delete endpoint <id>

QuickStart

URL: llms-txt#quickstart

Contents:

-

- Sign Up & Add Credit

- 2. Prepare to Connect

- 3. Pick a Template & Find a Machine

- 4. Manage or End Your Instance

- Common Questions

- What is a minimum deposit amount?

- What happens when my balance runs out? Can I avoid interruptions?

- How can I customize a template?

Source: https://docs.vast.ai/documentation/get-started/quickstart

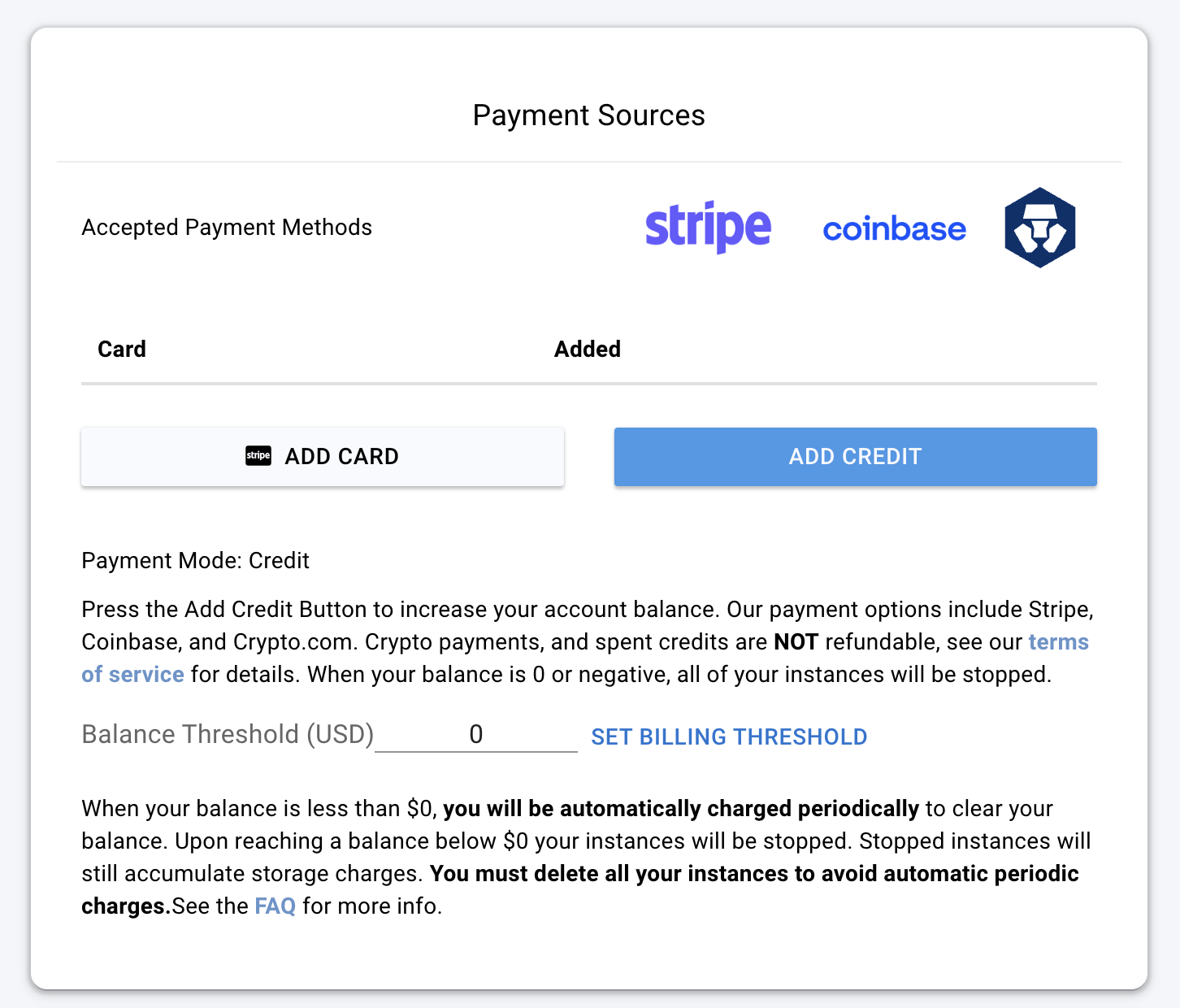

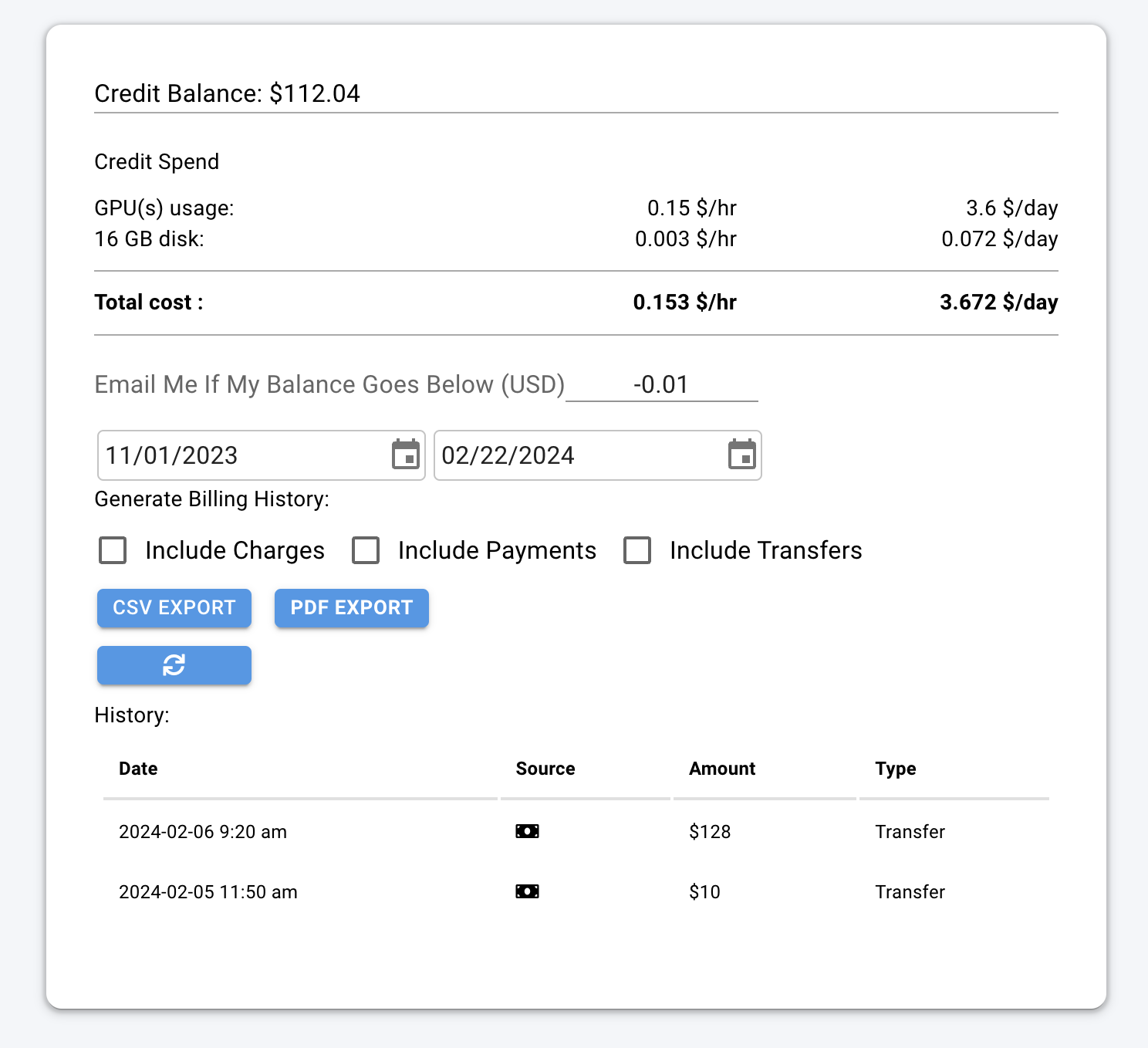

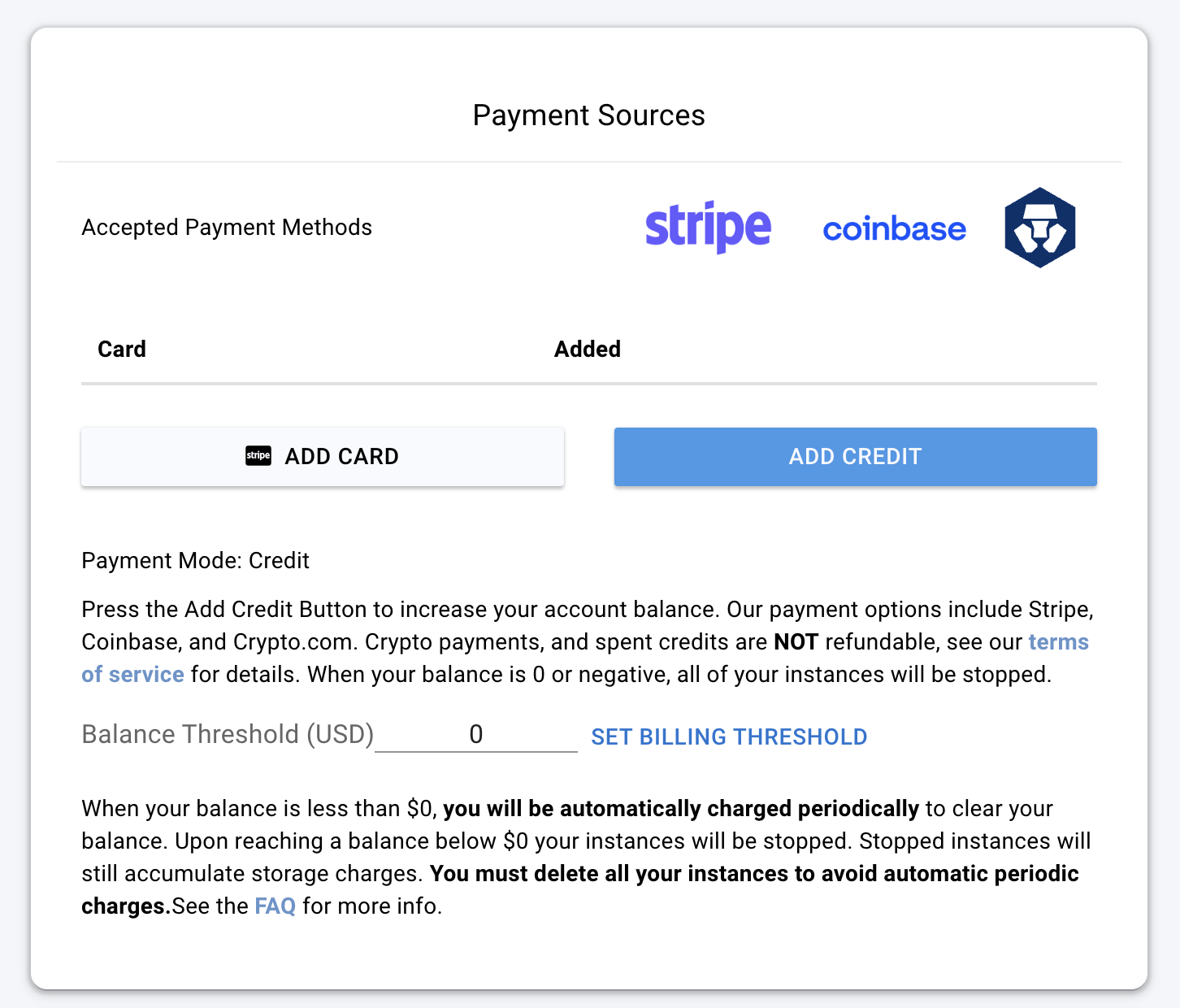

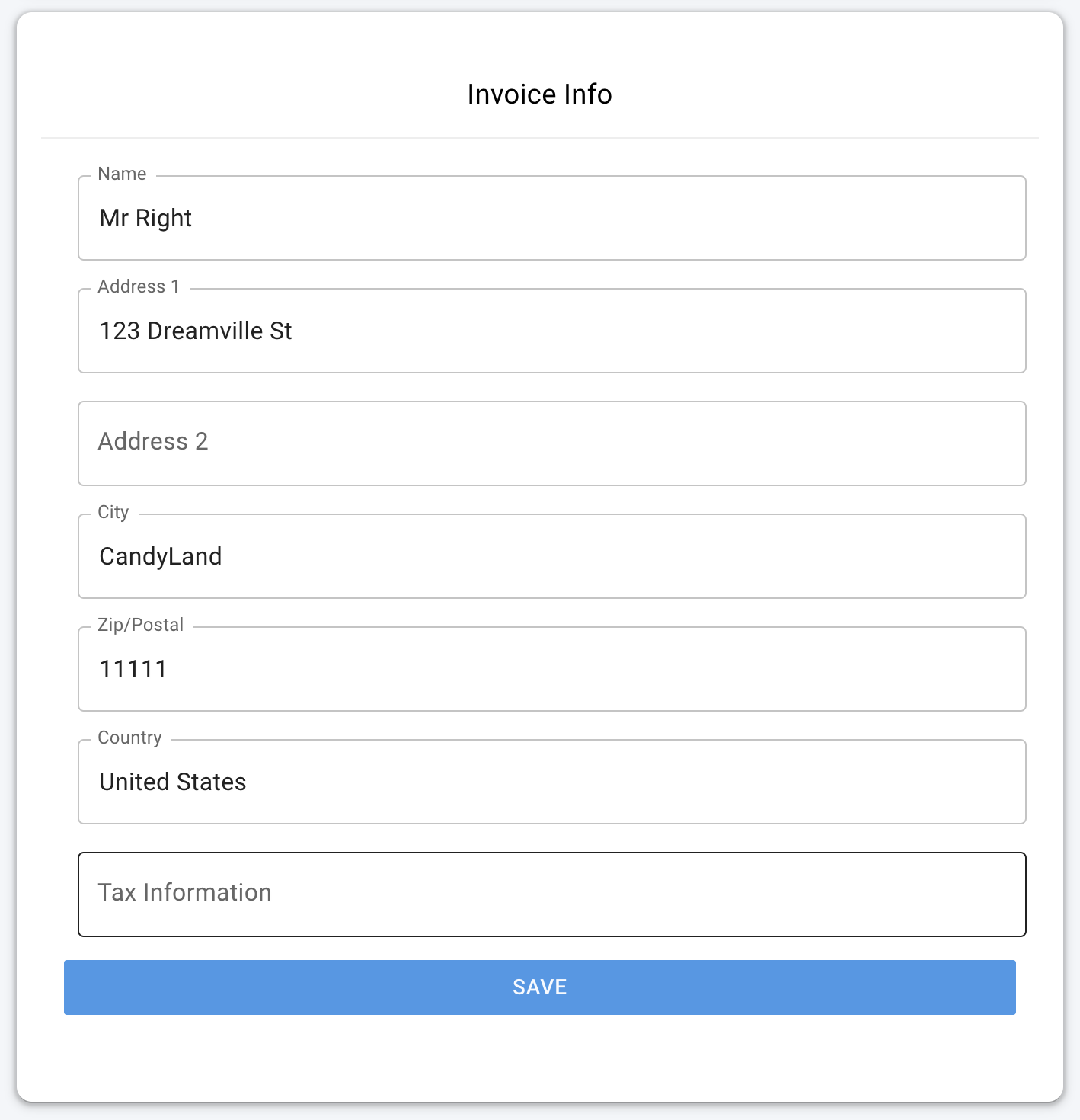

<script type="application/ld+json" dangerouslySetInnerHTML={{ __html: JSON.stringify({ "@context": "https://schema.org", "@type": "HowTo", "name": "How to Get Started with Vast.ai", "description": "A step-by-step guide to setting up your Vast.ai account and running your first GPU instance.", "step": [ { "@type": "HowToStep", "name": "Sign Up & Add Credit", "text": "Create an account on vast.ai, verify your email address, and add credit through Billing using credit card, Coinbase, or Crypto.com. Your balance will appear at the top right of the dashboard." }, { "@type": "HowToStep", "name": "Prepare to Connect", "text": "For SSH access: generate an SSH key pair and upload your public key in the Keys page. For Jupyter access: download and install the provided TSL certificate for secure browser access." }, { "@type": "HowToStep", "name": "Pick a Template & Find a Machine", "text": "Browse Templates for pre-built setups like PyTorch, TensorFlow, or ComfyUI. Go to Search and filter by GPU type, count, RAM, CPU, network speed, and price. Remember that disk space is permanent and cannot be changed later. Click Rent when you find a match and wait for the instance to start." }, { "@type": "HowToStep", "name": "Manage or End Your Instance", "text": "Use Stop to pause GPU billing (storage still accrues charges). Use Delete when finished to stop all charges." } ] }) }} /> <script type="application/ld+json" dangerouslySetInnerHTML={{ __html: JSON.stringify({ "@context": "https://schema.org", "@type": "FAQPage", "mainEntity": [ { "@type": "Question", "name": "What is a minimum deposit amount?", "acceptedAnswer": { "@type": "Answer", "text": "The minimum deposit amount on Vast.ai is $5." } }, { "@type": "Question", "name": "What happens when my balance runs out? Can I avoid interruptions?", "acceptedAnswer": { "@type": "Answer", "text": "When your balance reaches zero, your running instances will automatically stop. To avoid this, you can enable auto-billing on the Billing page. Set an auto-charge threshold higher than your average daily spend, so your card is automatically charged when your balance falls below that amount. We also recommend setting a low-balance email alert at a slightly lower threshold to notify you if the auto-charge fails for any reason." } }, { "@type": "Question", "name": "How can I customize a template?", "acceptedAnswer": { "@type": "Answer", "text": "You can create a new template from scratch, or you can edit an existing template. You can find a guide in the templates documentation." } } ] }) }} /> This Quickstart will guide you through setting up your Vast.ai account and running your first instance in just a few steps. ### 1. Sign Up & Add Credit * Create an account on [vast.ai.](https://cloud.vast.ai/) * Verify your email address. * Go to [**Billing**](https://cloud.vast.ai/billing/) → **Add Credit** and top up using credit card, Coinbase, or Crypto.com. * Your balance appears at the top right of the dashboard.

* Your balance appears at the top right of the dashboard.

Before you can **rent a machine **or **create a team**, you must verify your email address. After signing up, check your inbox (and spam folder) for the verification email and click the link inside. You can resend the verification email anytime from **Settings → Resend Verification Email.**

### 2**. Prepare to Connect**

* **For SSH access**: generate an [SSH key pair](/documentation/instances/sshscp) and upload your **public key** in [Keys page](https://cloud.vast.ai/manage-keys/).

Before you can **rent a machine **or **create a team**, you must verify your email address. After signing up, check your inbox (and spam folder) for the verification email and click the link inside. You can resend the verification email anytime from **Settings → Resend Verification Email.**

### 2**. Prepare to Connect**

* **For SSH access**: generate an [SSH key pair](/documentation/instances/sshscp) and upload your **public key** in [Keys page](https://cloud.vast.ai/manage-keys/).

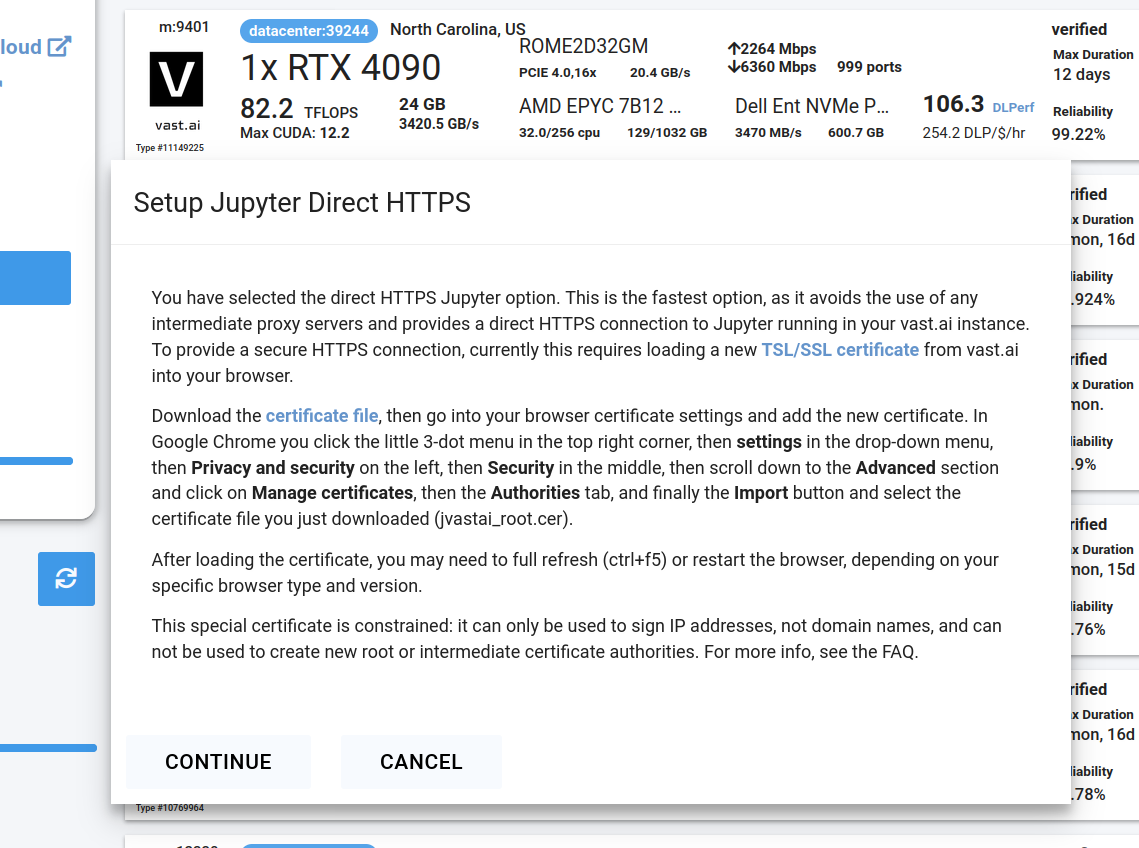

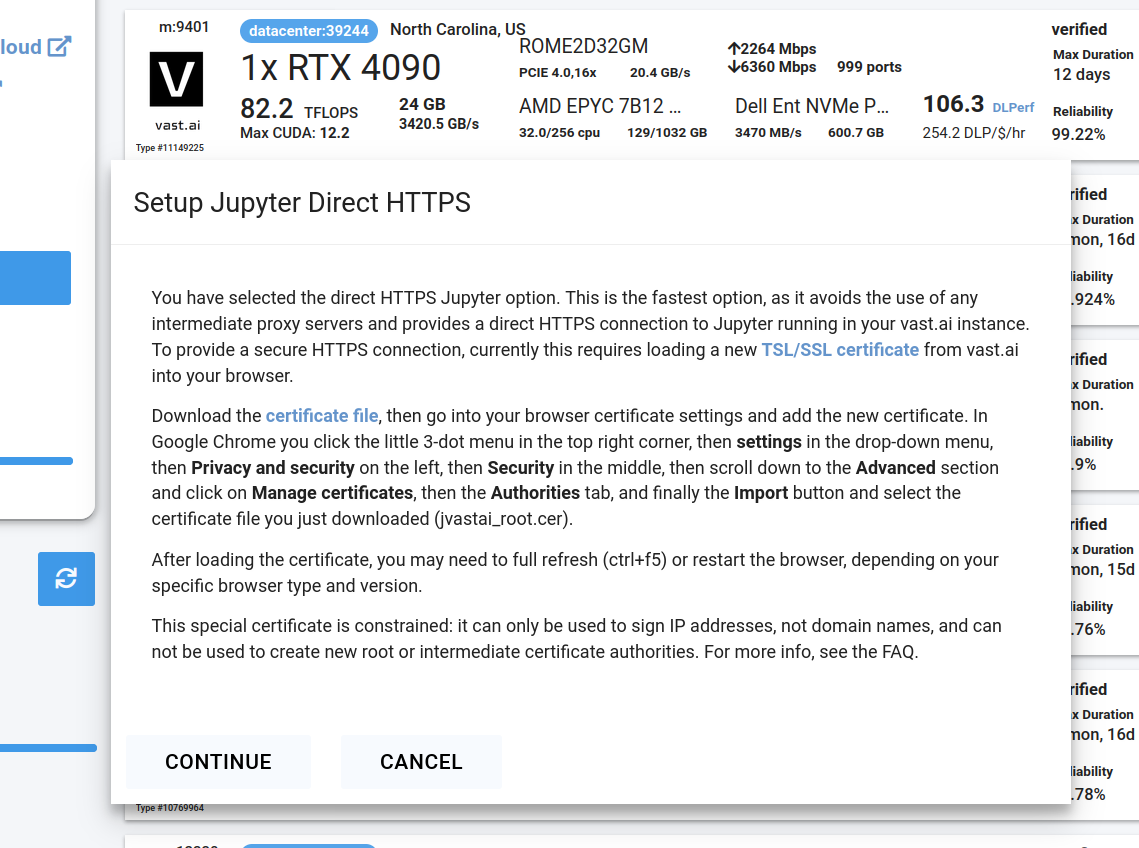

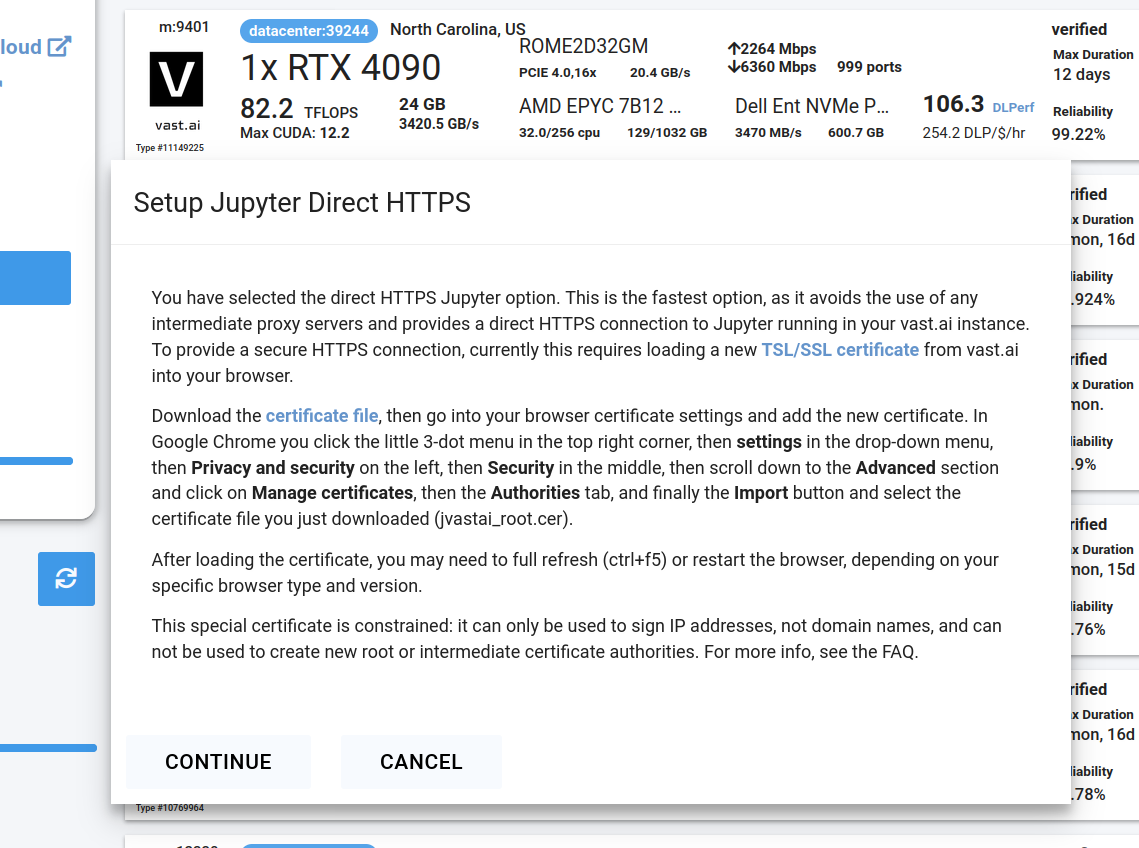

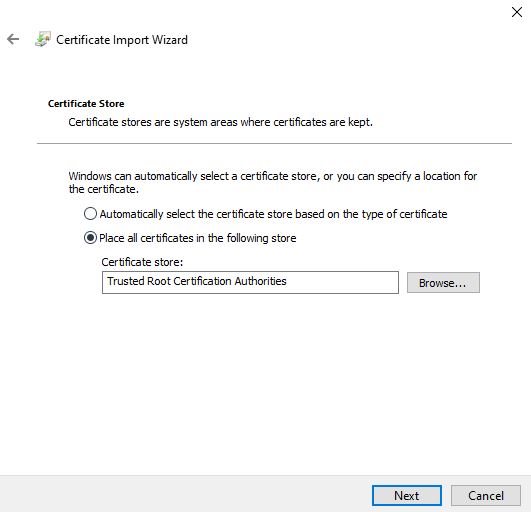

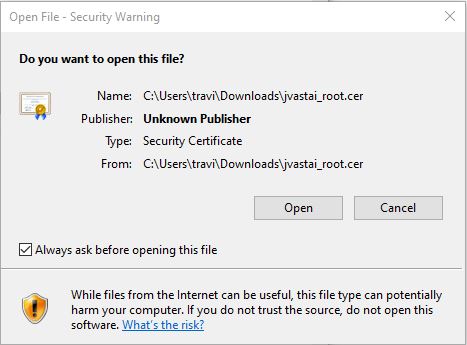

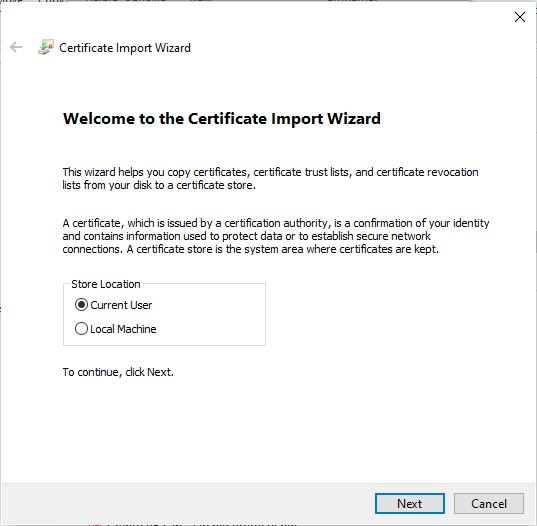

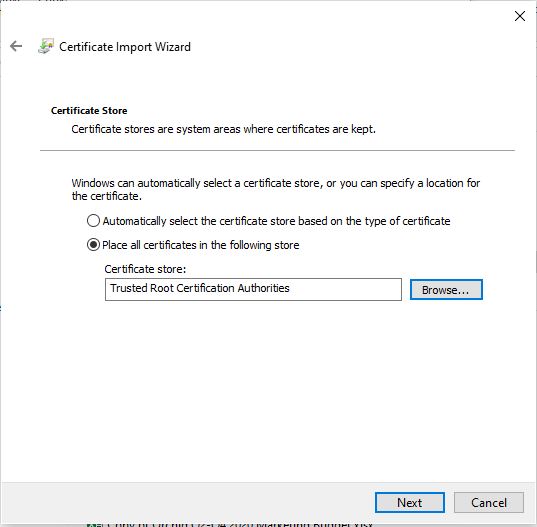

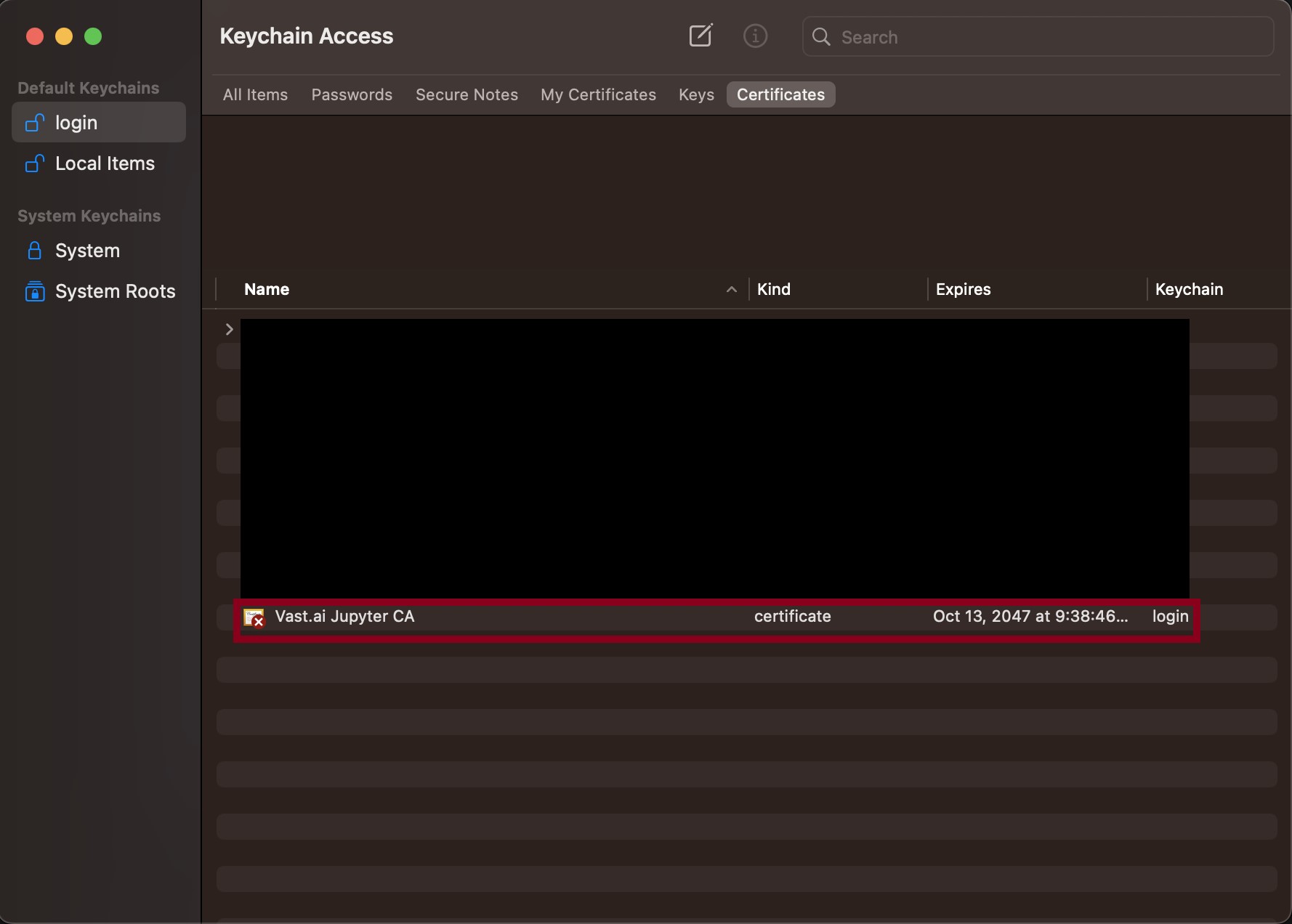

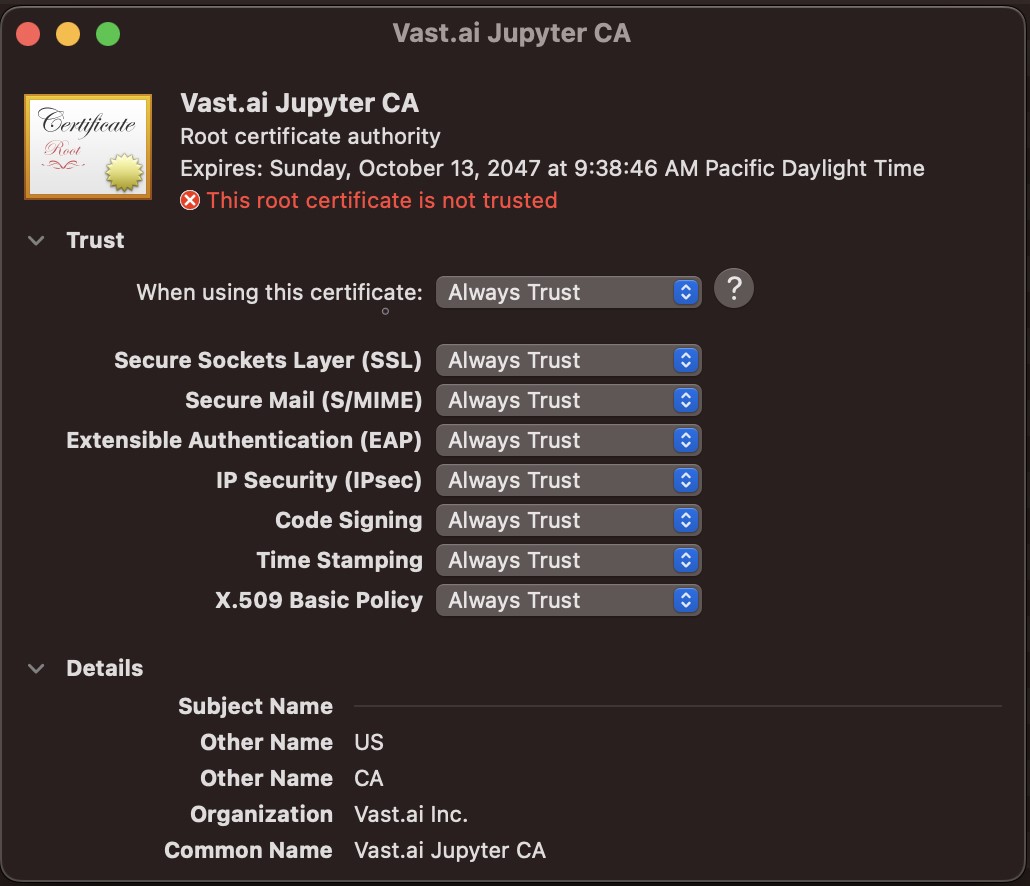

* **For Jupyter access**: download and install the provided [TSL certificate](/documentation/instances/jupyter#1SmCz) (needed for secure browser access).

If you don’t install the provided browser certificate:

* **Windows / Linux** – You’ll see a **“Your connection is not private”** privacy warning. You can still connect by clicking **Advanced** → **Proceed**, but the warning will appear every time.

* **macOS** – Browsers will block Jupyter until you install and trust the provided certificate in **Keychain Access**. Without it, you won’t be able to connect.

Installing the certificate once removes the warning permanently.

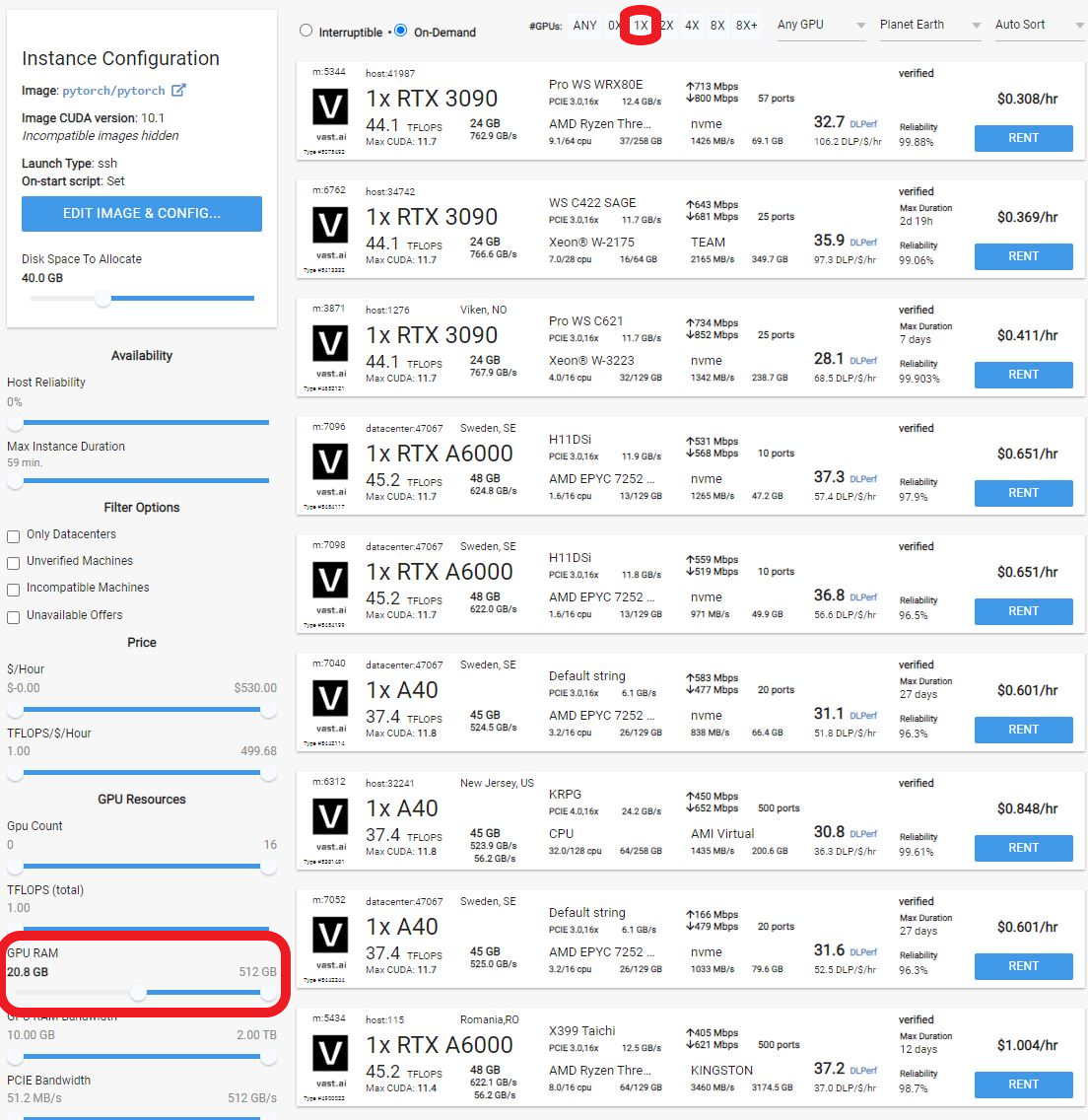

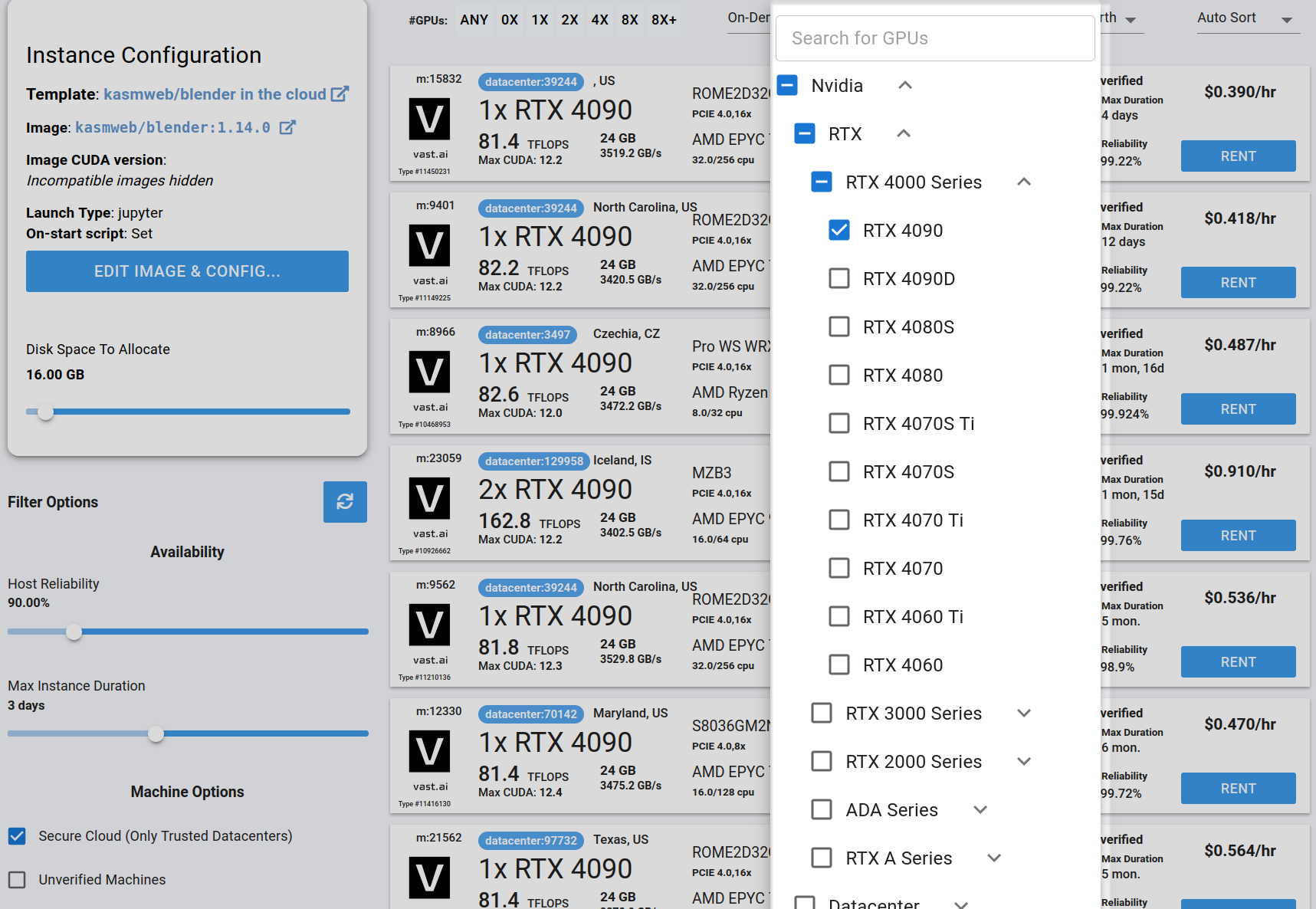

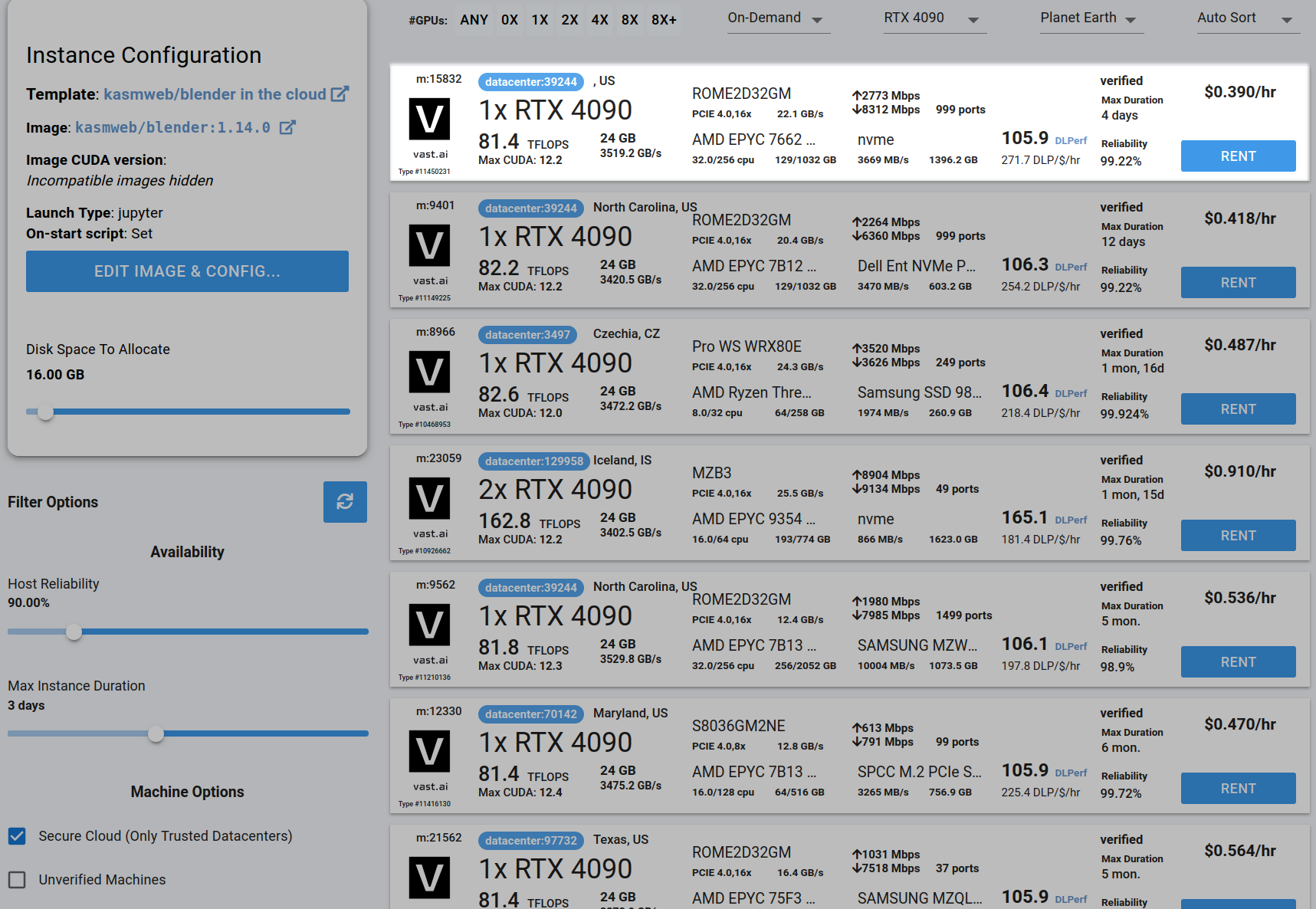

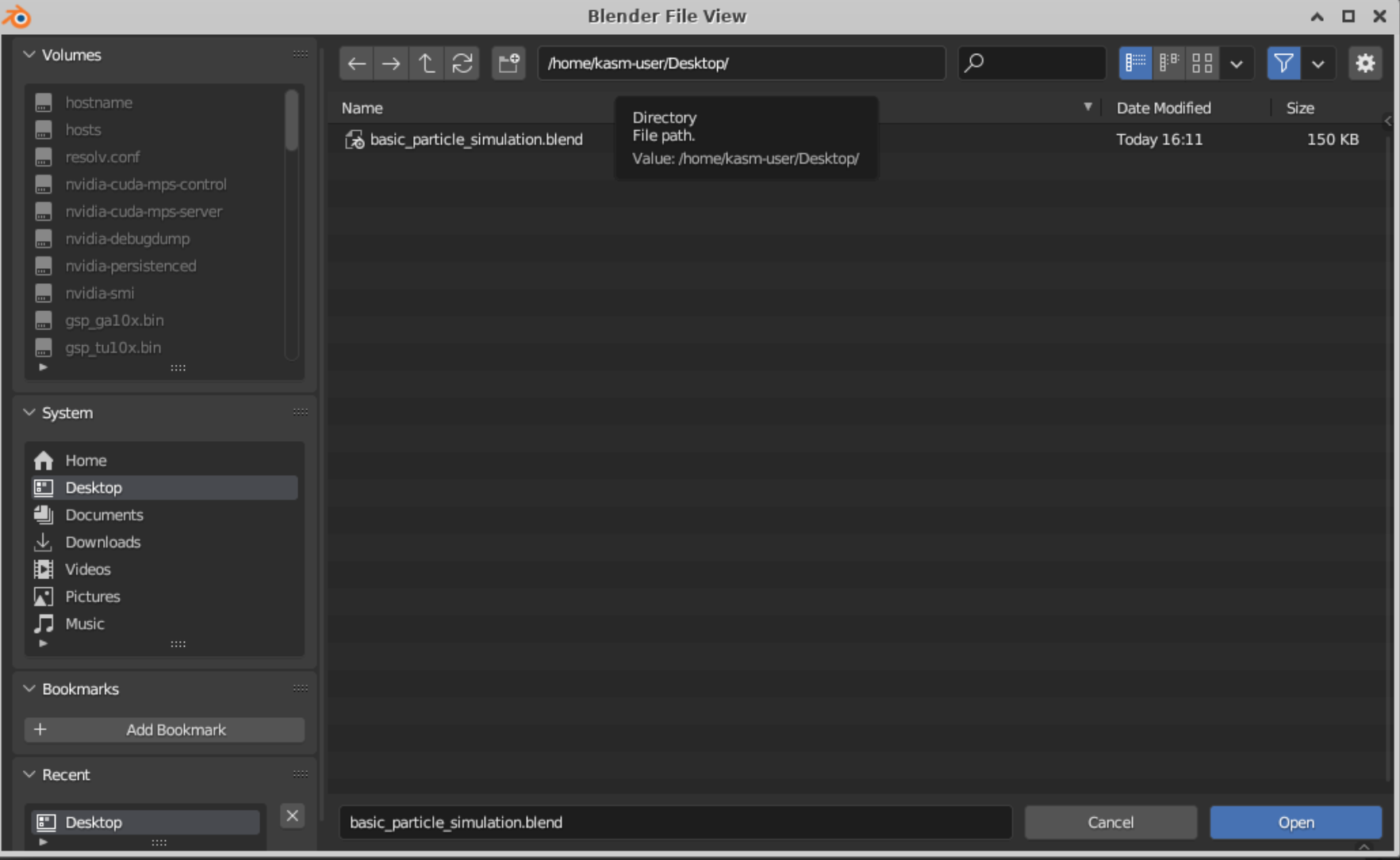

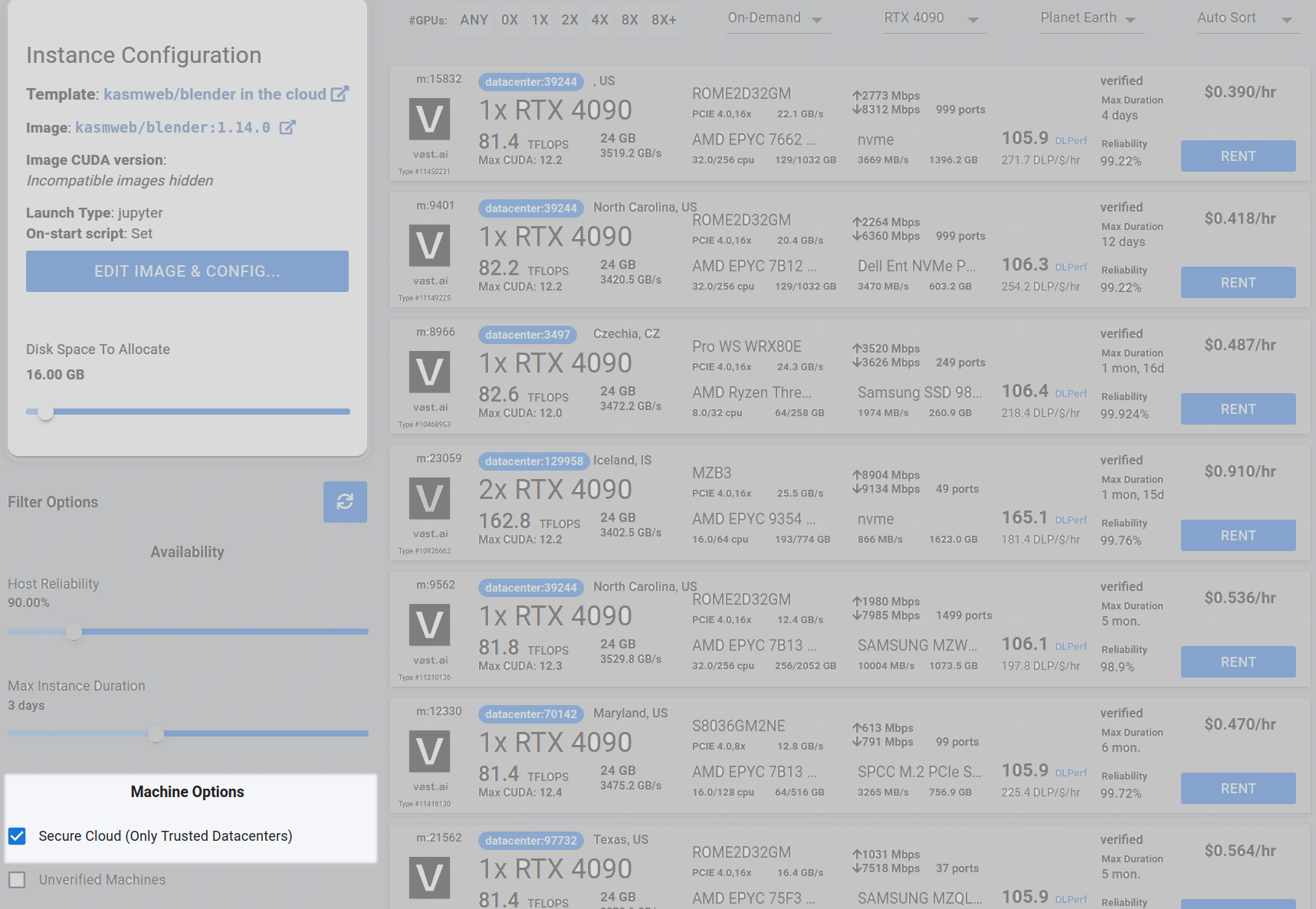

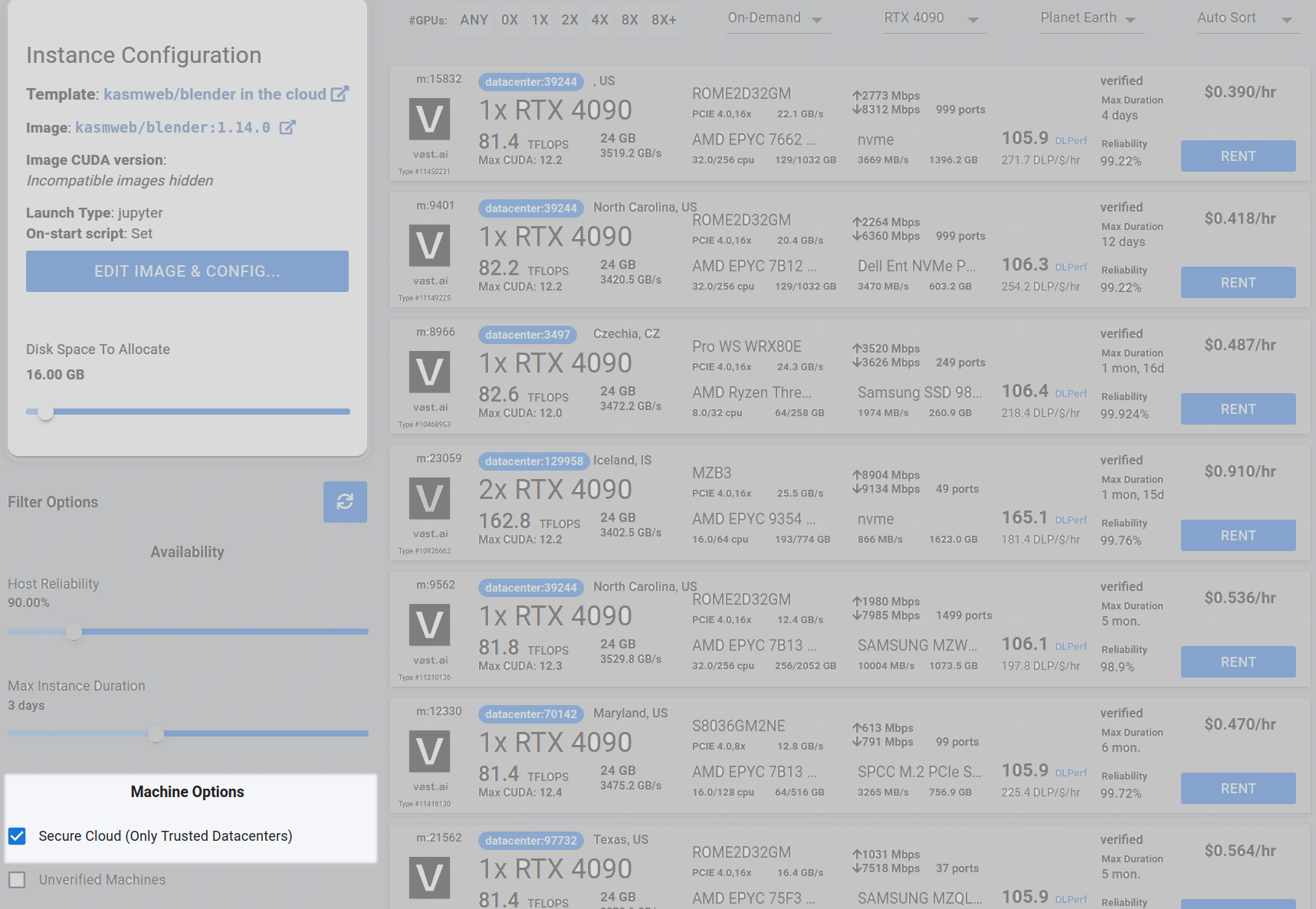

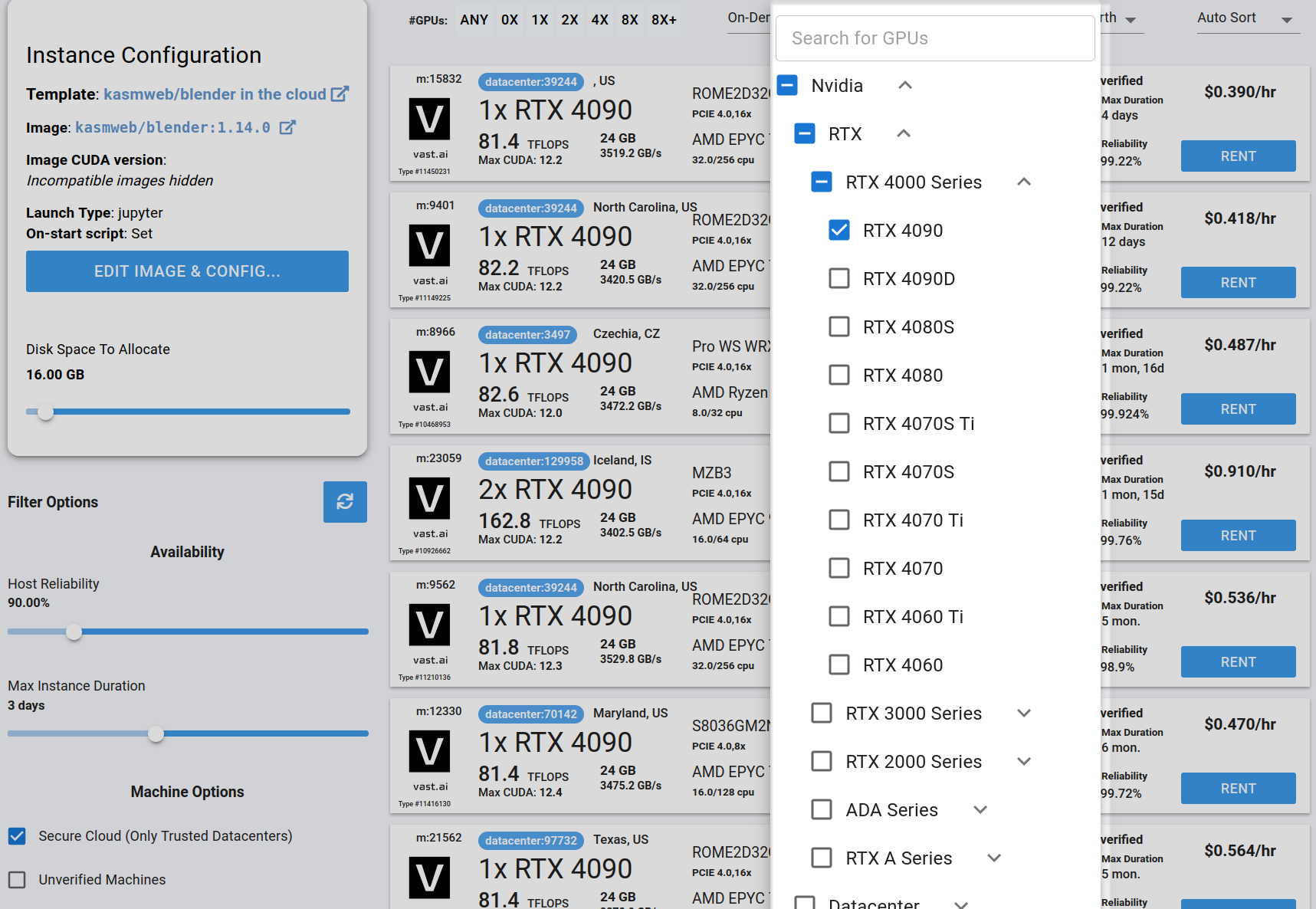

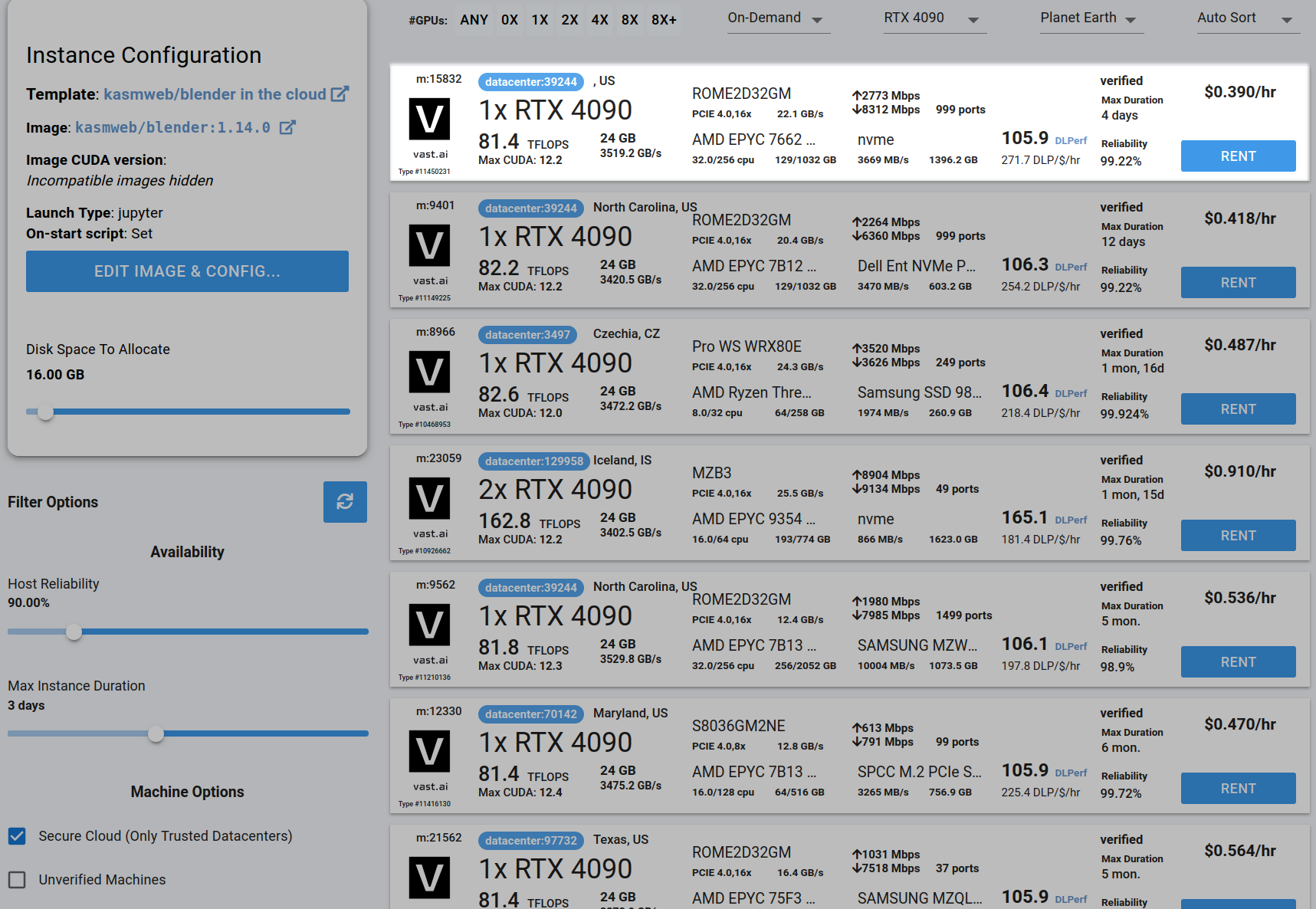

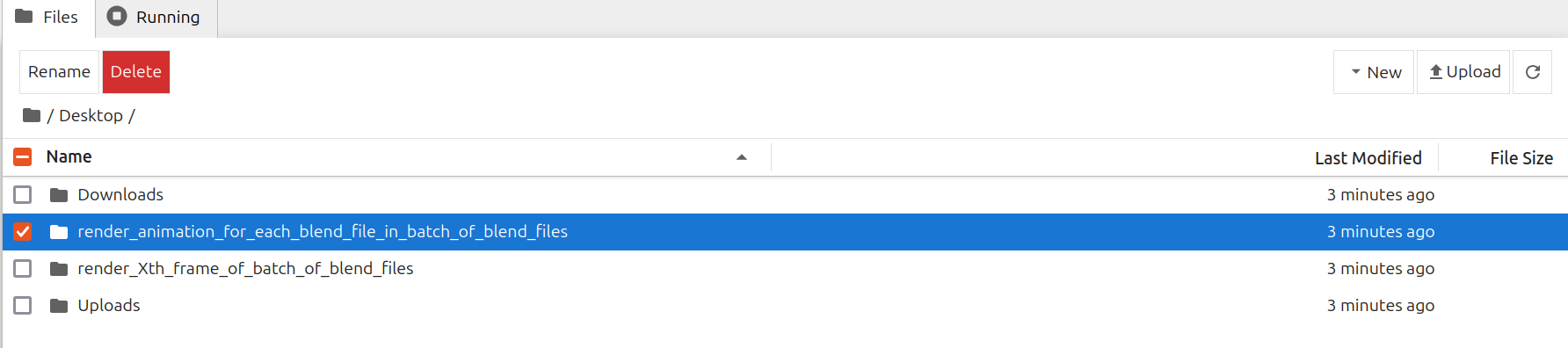

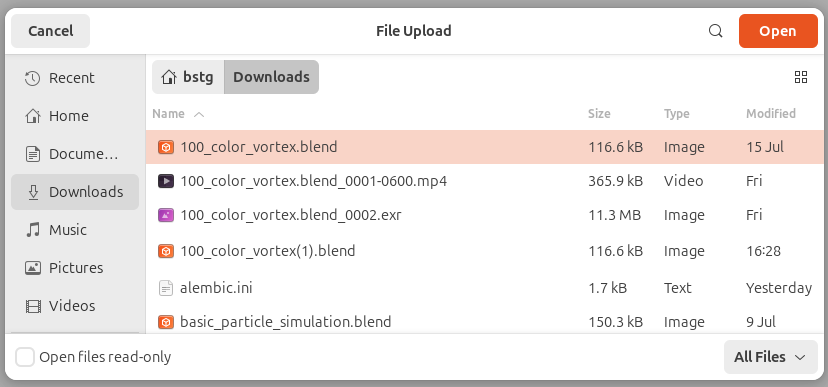

### 3**. Pick a **[**Template**](/documentation/instances/templates)** & Find a Machine**

* Browse [**Templates**](https://cloud.vast.ai/templates/) for pre-built setups (e.g., [PyTorch](/pytorch), TensorFlow, ComfyUI).

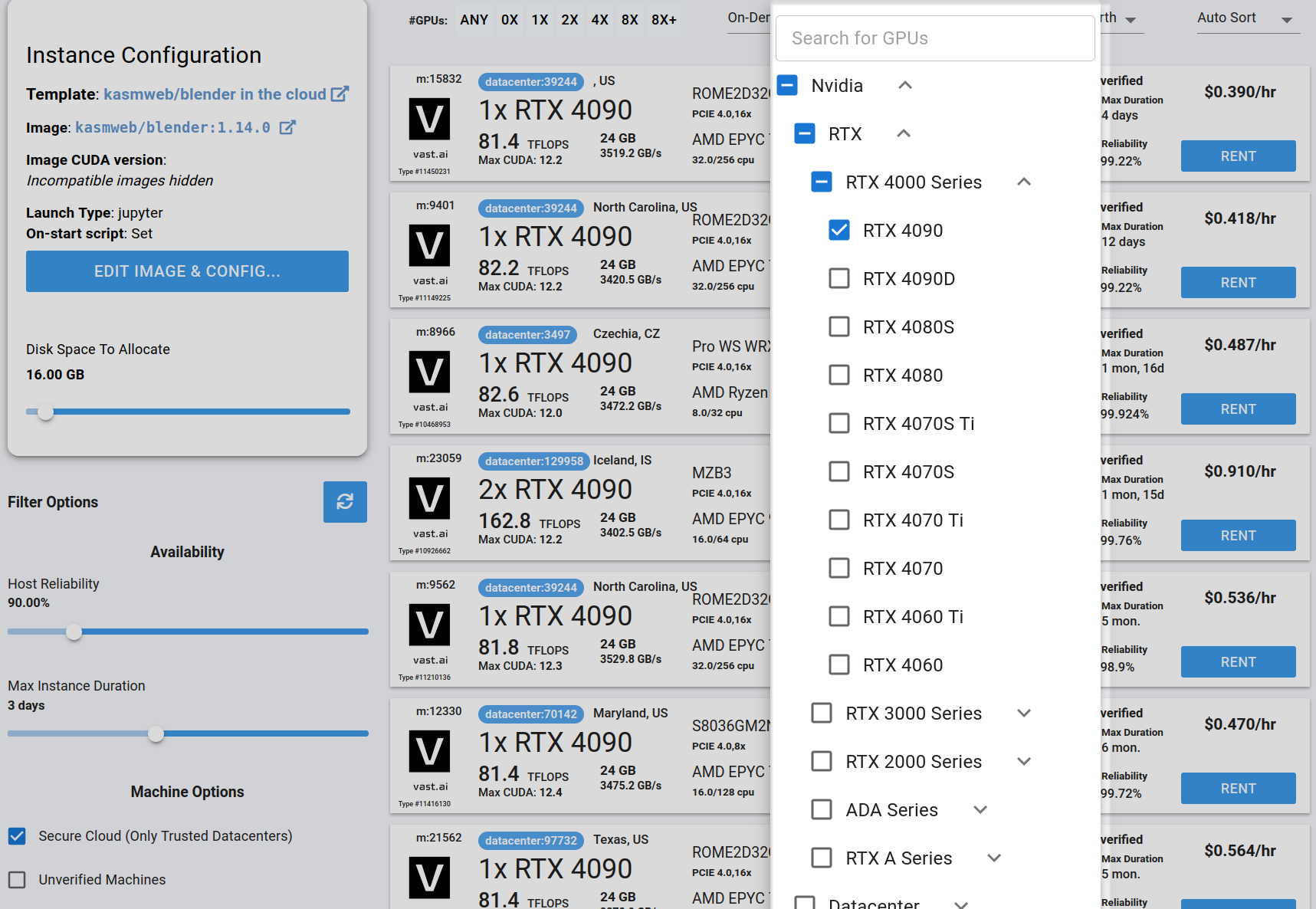

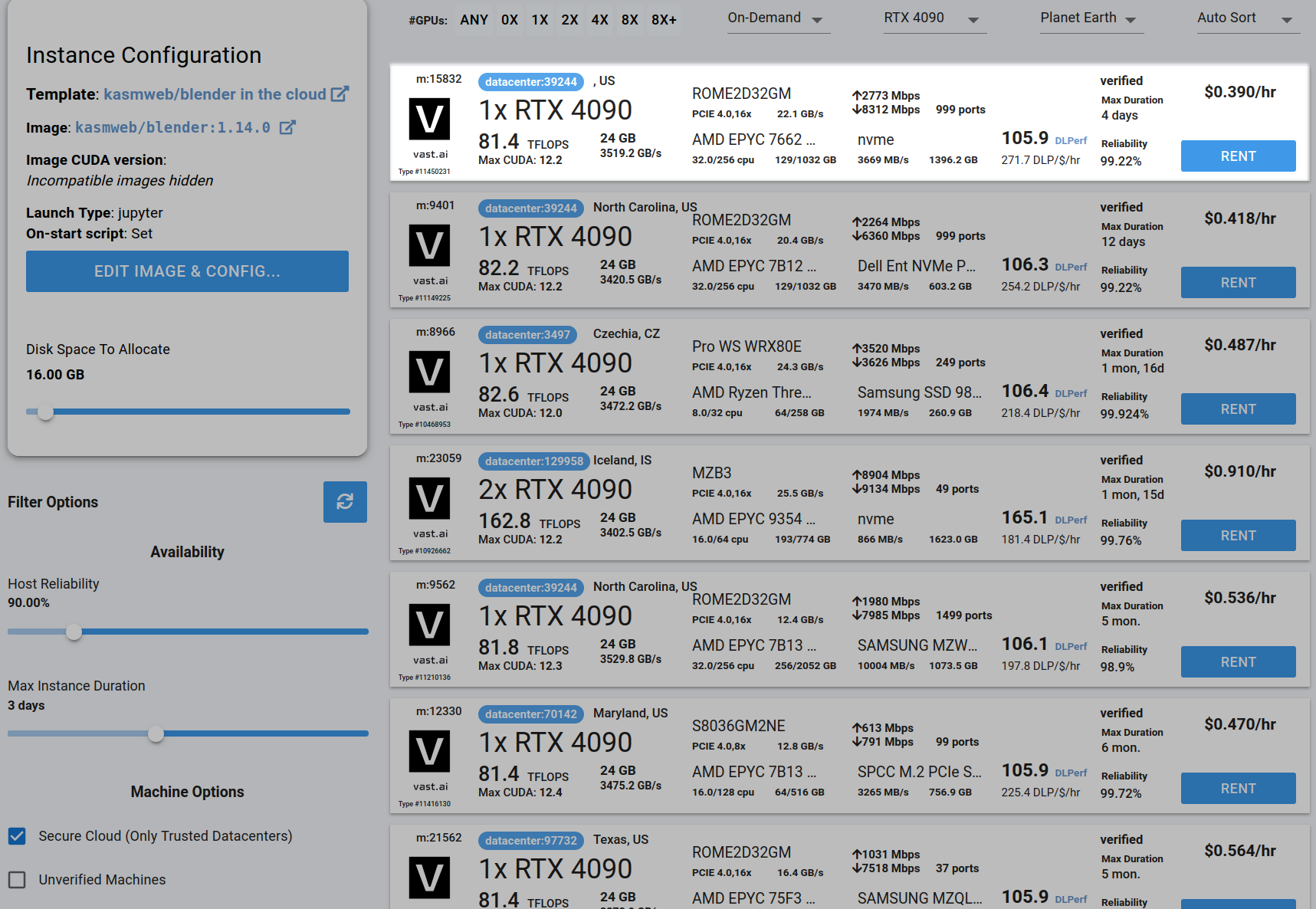

* Go to [**Search**](https://cloud.vast.ai/create/) and filter by GPU type, count, RAM, CPU, network speed, and price.

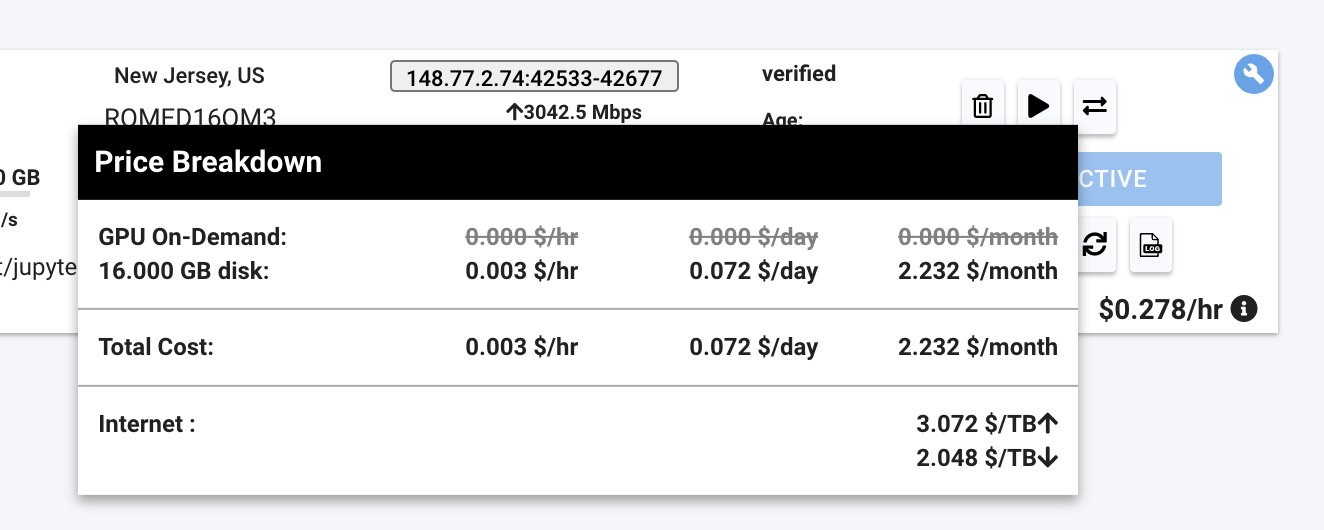

* **Disk Space is Permanent. **The disk size you choose when creating an instance cannot be changed later. If you run out of space, you’ll need to create a new instance with a larger disk. Tip: Allocate a bit more than you think you need to avoid interruptions.

* Click **Rent** when you find a match.

* Wait for the instance to start—cached images launch quickly, fresh pulls may take 10–60 minutes.

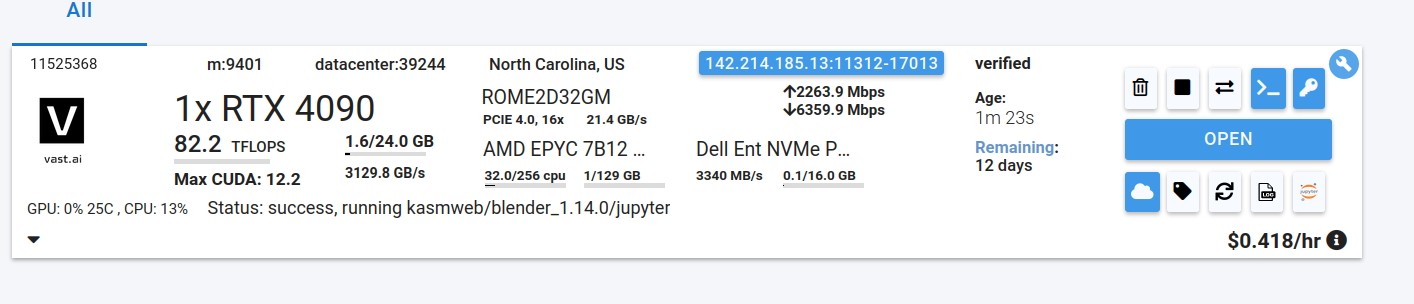

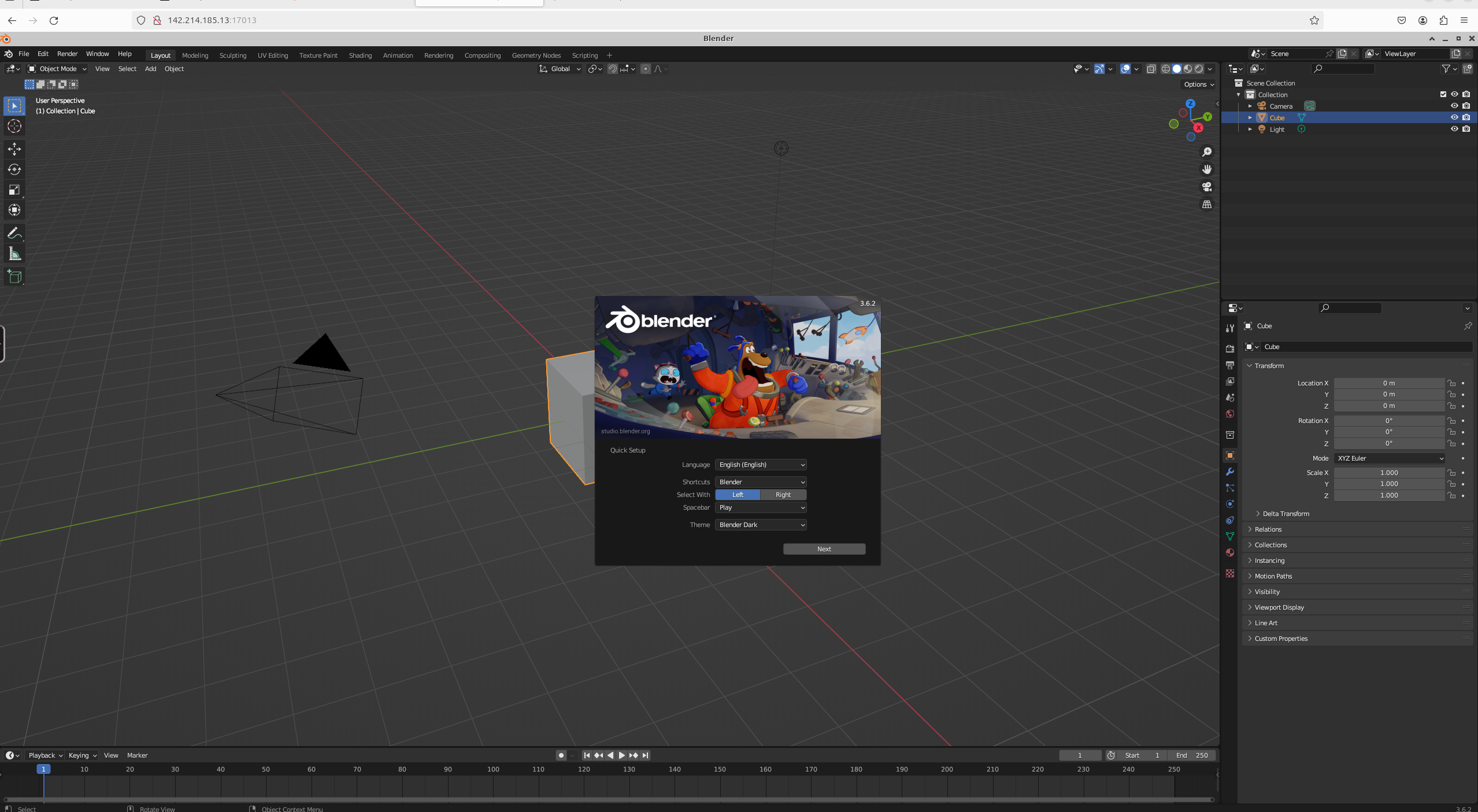

* Click **Open** button to access your instance.

### **4. **[**Manage or End Your Instance**](/documentation/instances/managing-instances)

* Use **Stop** to pause GPU billing (storage still accrues charges).

* Use **Delete** when finished to stop *all* charges.

* **For Jupyter access**: download and install the provided [TSL certificate](/documentation/instances/jupyter#1SmCz) (needed for secure browser access).

If you don’t install the provided browser certificate:

* **Windows / Linux** – You’ll see a **“Your connection is not private”** privacy warning. You can still connect by clicking **Advanced** → **Proceed**, but the warning will appear every time.

* **macOS** – Browsers will block Jupyter until you install and trust the provided certificate in **Keychain Access**. Without it, you won’t be able to connect.

Installing the certificate once removes the warning permanently.

### 3**. Pick a **[**Template**](/documentation/instances/templates)** & Find a Machine**

* Browse [**Templates**](https://cloud.vast.ai/templates/) for pre-built setups (e.g., [PyTorch](/pytorch), TensorFlow, ComfyUI).

* Go to [**Search**](https://cloud.vast.ai/create/) and filter by GPU type, count, RAM, CPU, network speed, and price.

* **Disk Space is Permanent. **The disk size you choose when creating an instance cannot be changed later. If you run out of space, you’ll need to create a new instance with a larger disk. Tip: Allocate a bit more than you think you need to avoid interruptions.

* Click **Rent** when you find a match.

* Wait for the instance to start—cached images launch quickly, fresh pulls may take 10–60 minutes.

* Click **Open** button to access your instance.

### **4. **[**Manage or End Your Instance**](/documentation/instances/managing-instances)

* Use **Stop** to pause GPU billing (storage still accrues charges).

* Use **Delete** when finished to stop *all* charges.

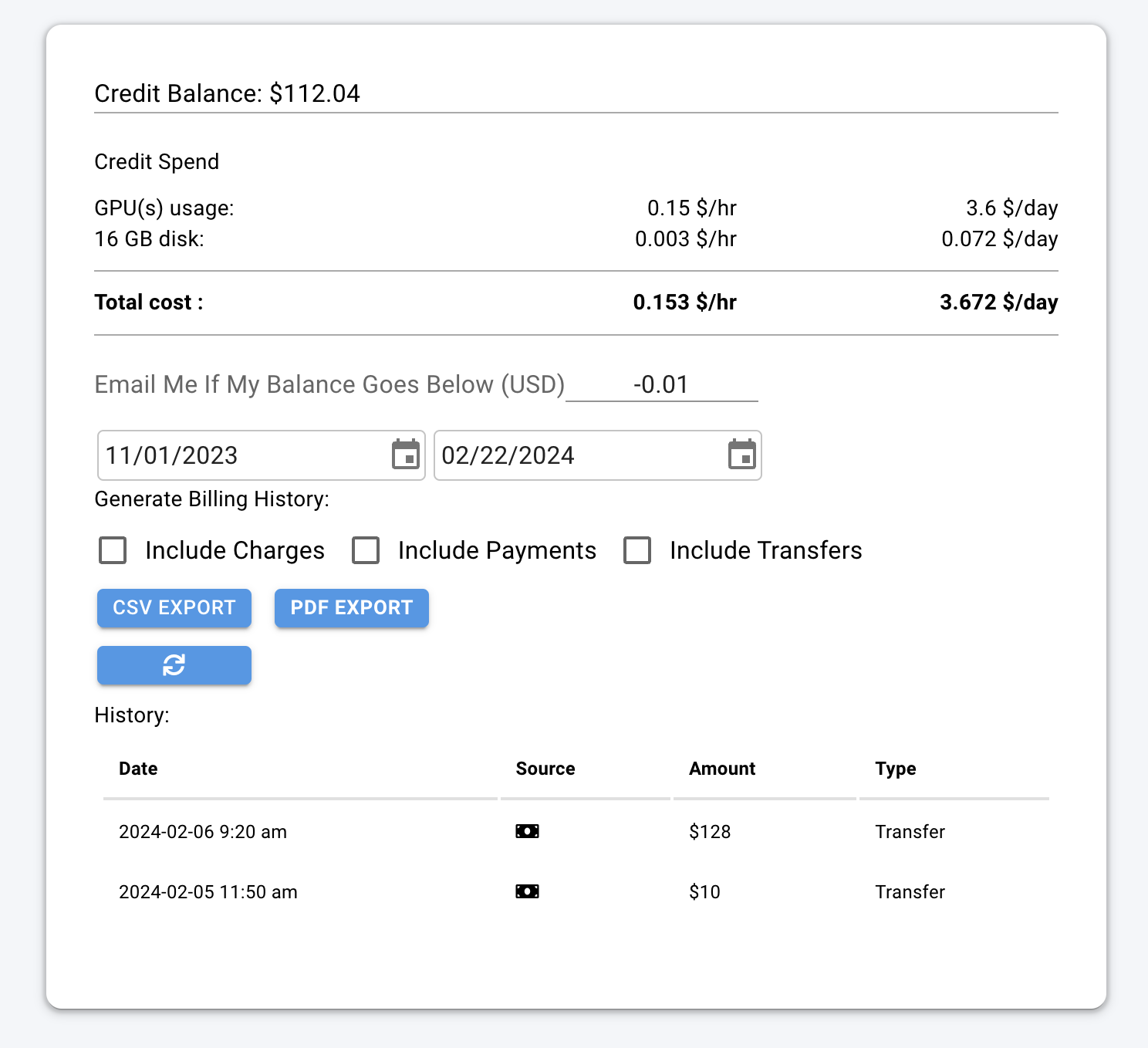

### What is a minimum deposit amount?

The minimum deposit amount on Vast.ai is \$5.

### What happens when my balance runs out? Can I avoid interruptions?

When your balance reaches zero, your running instances will automatically stop. To avoid this, you can enable **auto-billing **on the Billing page. Set an auto-charge threshold higher than your average daily spend, so your card is automatically charged when your balance falls below that amount. We also recommend setting a **low-balance email alert **at a slightly lower threshold to notify you if the auto-charge fails for any reason.

### How can I customize a template?

You can create a new template from scratch, or you can edit an existing template. You can find a guide [here](/documentation/instances/templates#LrOME).

---

## Huggingface TGI with LLama3

**URL:** llms-txt#huggingface-tgi-with-llama3

**Contents:**

- 1) Choose The Huggingface LLama3 TGI API Template From the Recommended Section

- 2) Modifying the Template

- 3) Rent a GPU

- 4) Monitor Your Instance

- 5) Congratulations!

Source: https://docs.vast.ai/huggingface-tgi-with-llama3

This is a guide on how to setup and expose an API for Llama3 Text Generation.

## 1) Choose The Huggingface LLama3 TGI API Template From the Recommended Section

Login to your Vast account on the [console](https://cloud.vast.ai)

Select the [HuggingFace Llama3 TGI API](https://cloud.vast.ai/?template_id=906891f677fb36f21662a92e6092b5fc) template by clicking the link provider

For this template we will be using the meta-llama/Meta-Llama-3-8B-Instruct model, and the TGI 2.0.4 from Huggingface

Templates encapsulate all the information required to run an application with the autoscaler, including machine parameters, docker image, and environment variables.

For this template, the only requirement is that you have your own Huggingface access token. You will also need to apply to have access to Llama3 on huggingface in order to access this gated repository.

The template comes with some filters that are minimum requirements for TGI to run effectively. This includes but is not limited to a disk space requirement of 100GB, and a gpu ram requirement of at least 16GB.

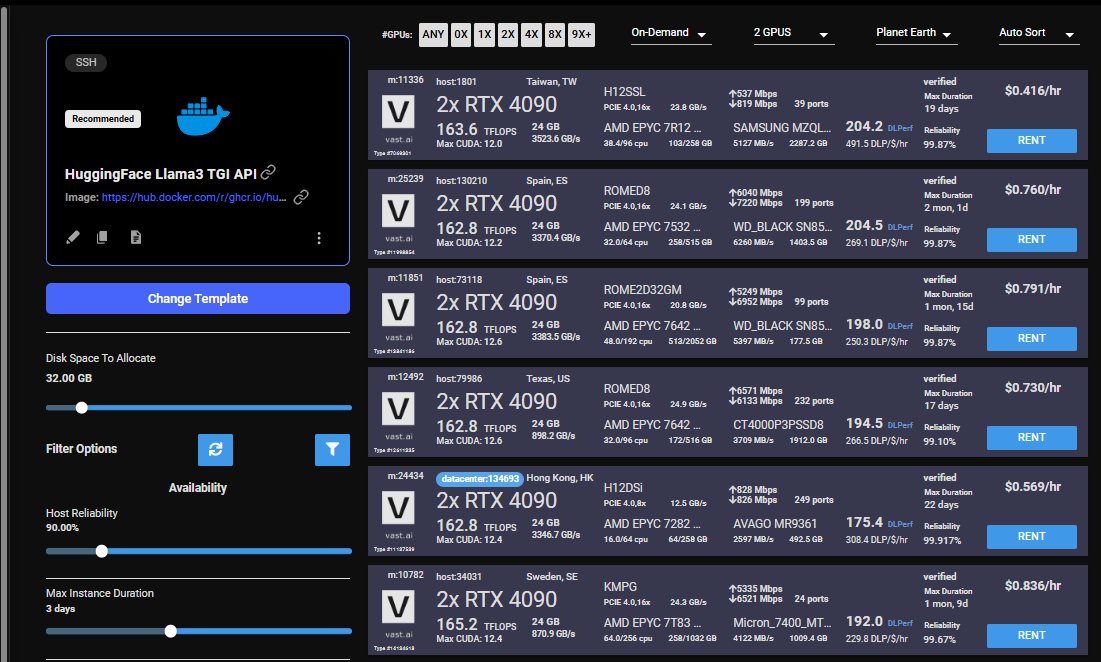

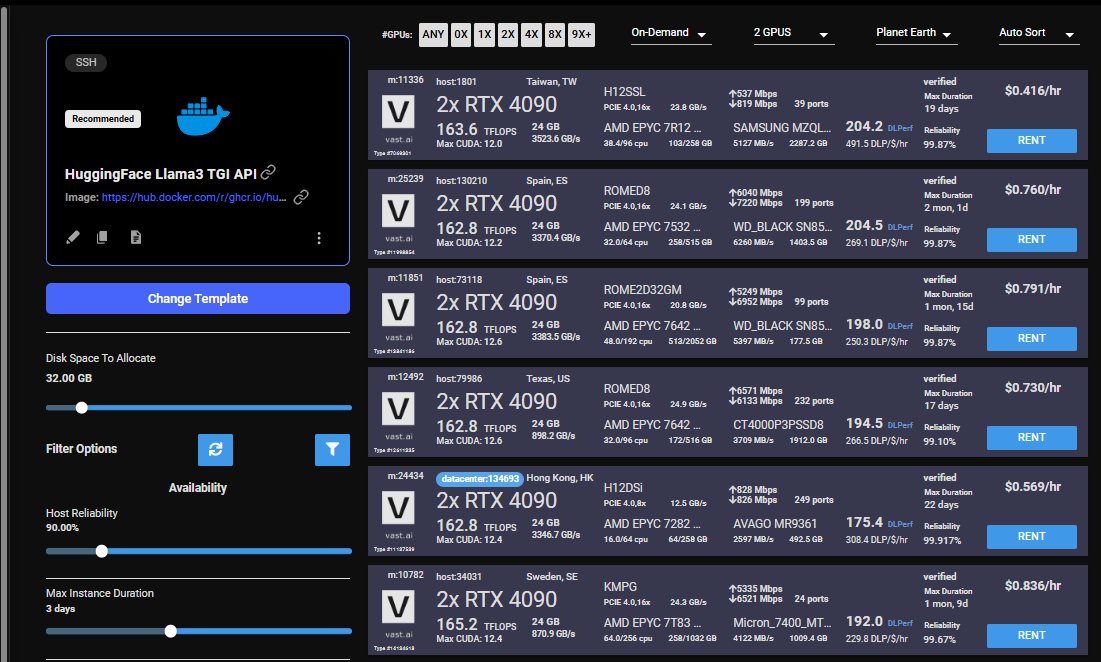

After selecting the template your screen should look like this:

### What is a minimum deposit amount?

The minimum deposit amount on Vast.ai is \$5.

### What happens when my balance runs out? Can I avoid interruptions?

When your balance reaches zero, your running instances will automatically stop. To avoid this, you can enable **auto-billing **on the Billing page. Set an auto-charge threshold higher than your average daily spend, so your card is automatically charged when your balance falls below that amount. We also recommend setting a **low-balance email alert **at a slightly lower threshold to notify you if the auto-charge fails for any reason.

### How can I customize a template?

You can create a new template from scratch, or you can edit an existing template. You can find a guide [here](/documentation/instances/templates#LrOME).

---

## Huggingface TGI with LLama3

**URL:** llms-txt#huggingface-tgi-with-llama3

**Contents:**

- 1) Choose The Huggingface LLama3 TGI API Template From the Recommended Section

- 2) Modifying the Template

- 3) Rent a GPU

- 4) Monitor Your Instance

- 5) Congratulations!

Source: https://docs.vast.ai/huggingface-tgi-with-llama3

This is a guide on how to setup and expose an API for Llama3 Text Generation.

## 1) Choose The Huggingface LLama3 TGI API Template From the Recommended Section

Login to your Vast account on the [console](https://cloud.vast.ai)

Select the [HuggingFace Llama3 TGI API](https://cloud.vast.ai/?template_id=906891f677fb36f21662a92e6092b5fc) template by clicking the link provider

For this template we will be using the meta-llama/Meta-Llama-3-8B-Instruct model, and the TGI 2.0.4 from Huggingface

Templates encapsulate all the information required to run an application with the autoscaler, including machine parameters, docker image, and environment variables.

For this template, the only requirement is that you have your own Huggingface access token. You will also need to apply to have access to Llama3 on huggingface in order to access this gated repository.

The template comes with some filters that are minimum requirements for TGI to run effectively. This includes but is not limited to a disk space requirement of 100GB, and a gpu ram requirement of at least 16GB.

After selecting the template your screen should look like this:

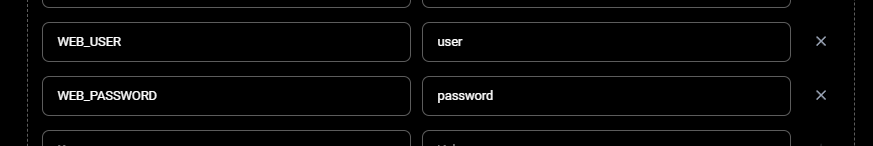

## 2) Modifying the Template

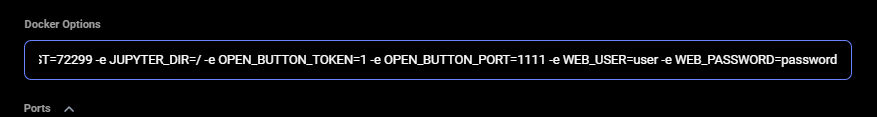

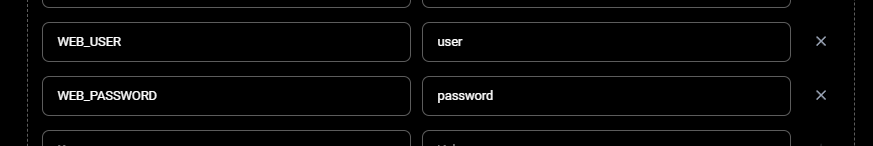

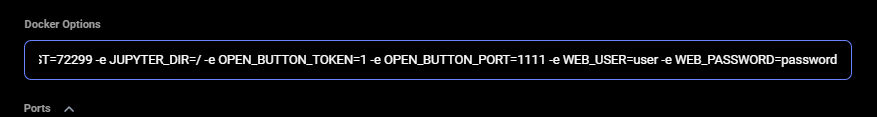

Once you have selected the template, you will need to then add in your huggingface token and click the 'Select & Save' button.

You can add your huggingface token with the rest of the docker run options.

This is the only modification you will need to make on this template.

You can then press 'Select & Save' to get ready to launch your instance.

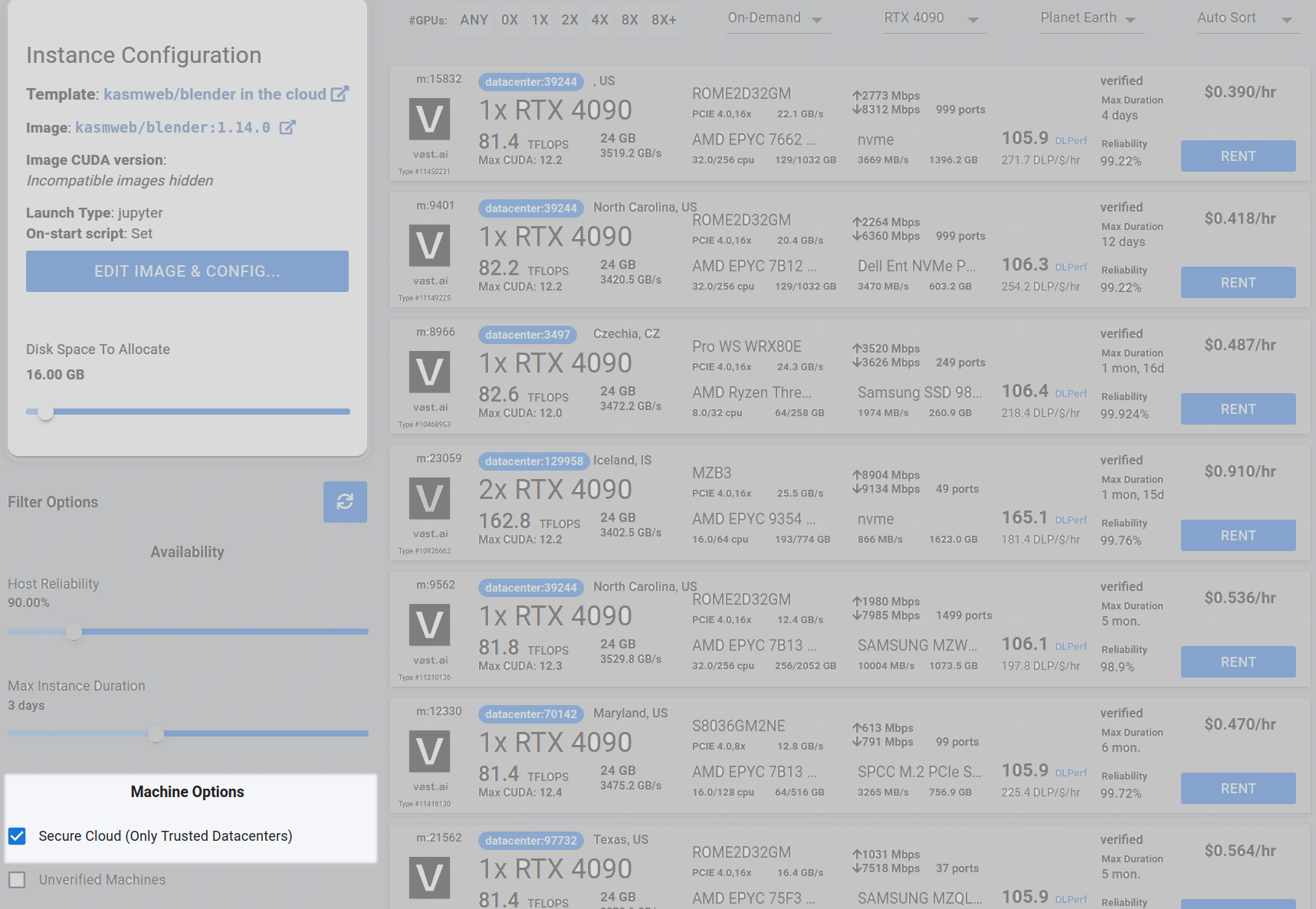

Once you have selected the template, you can then choose to rent a GPU of your choice from either the search page or the CLI/API.

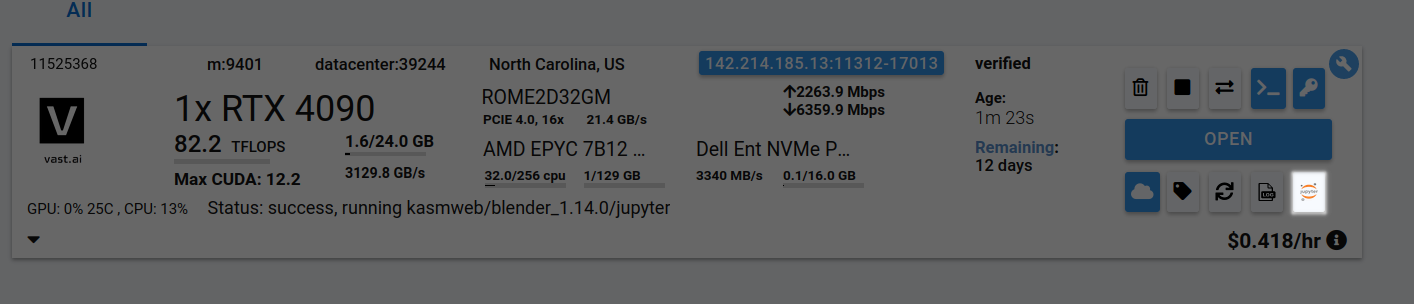

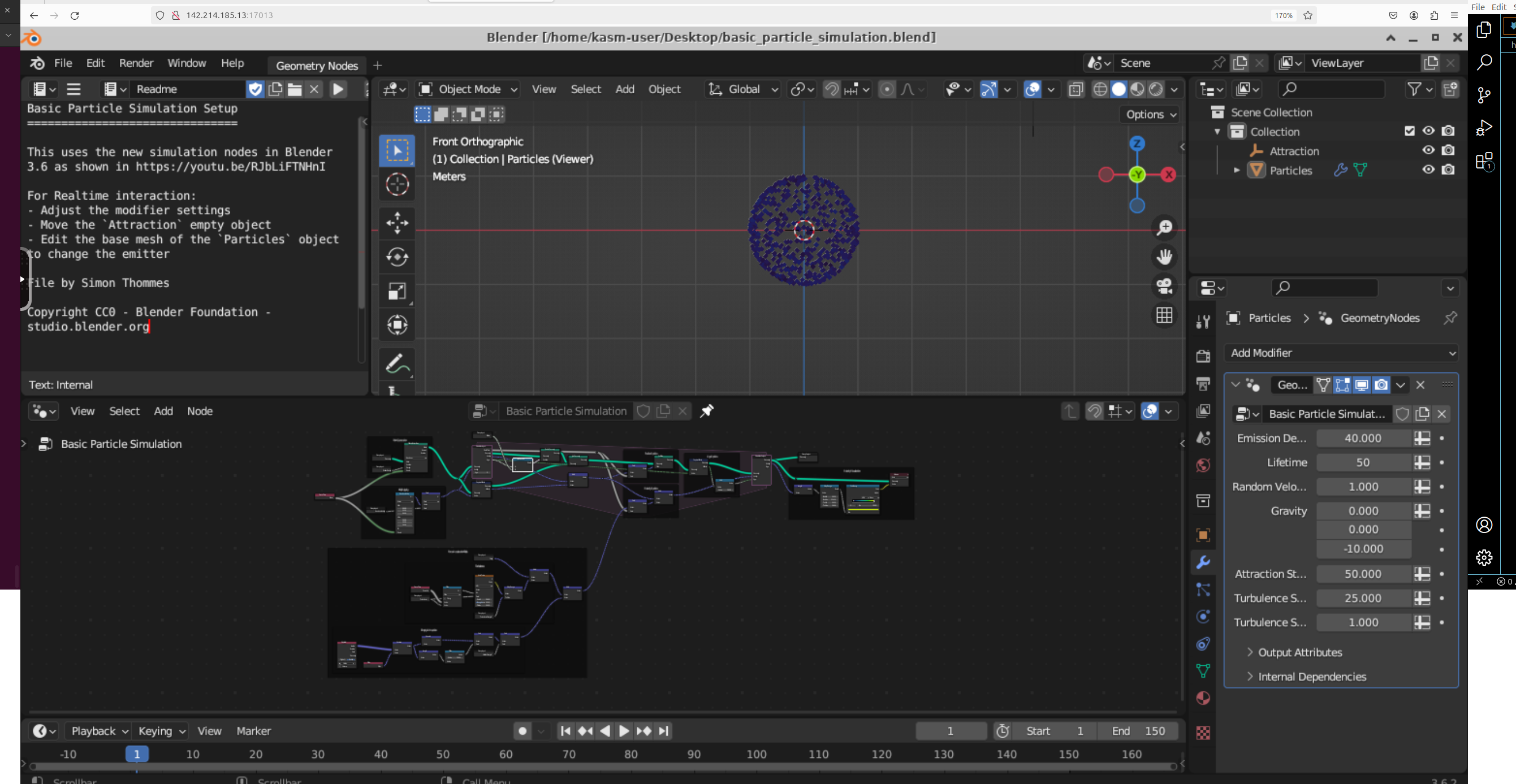

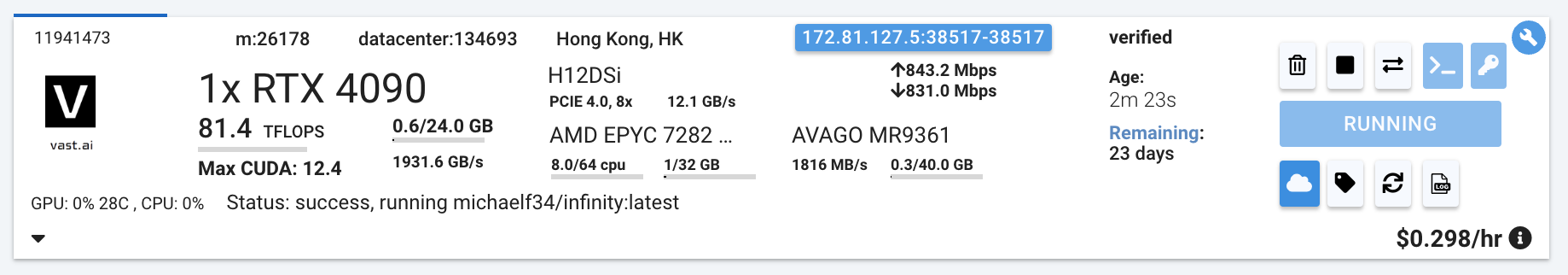

For someone just getting started I recommend either an Nvidia RTX 4090, or an A5000.

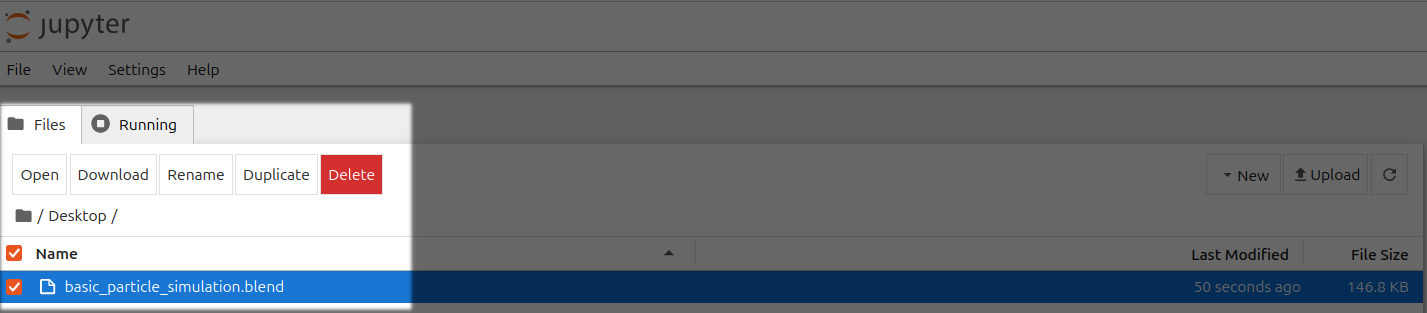

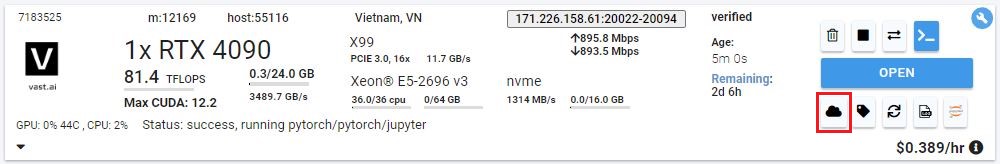

## 4) Monitor Your Instance

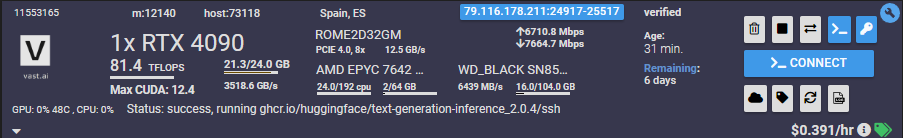

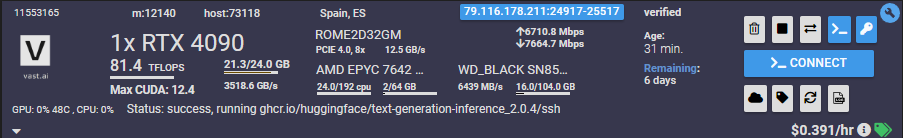

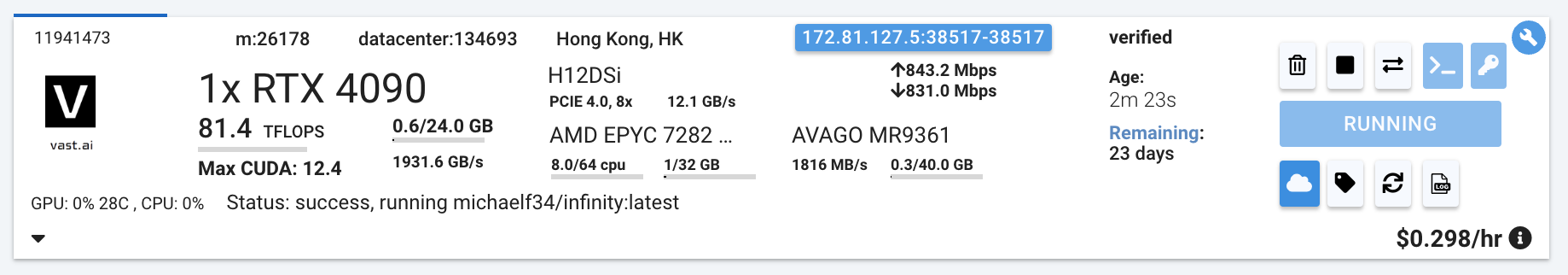

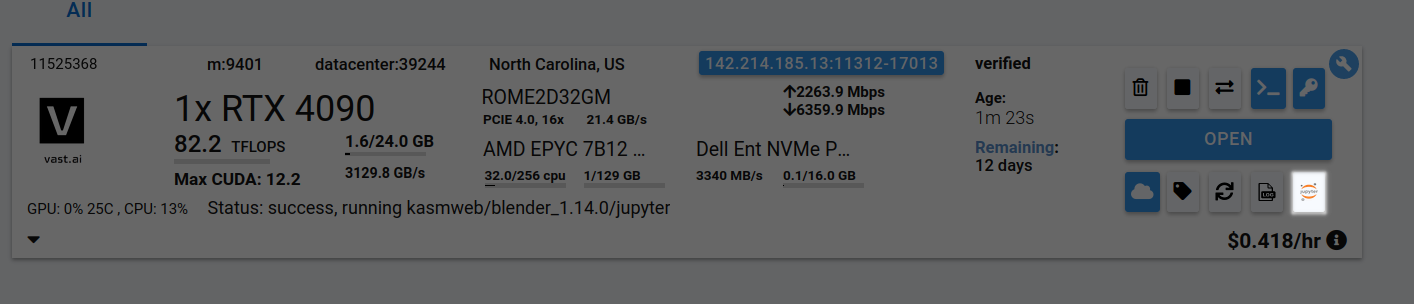

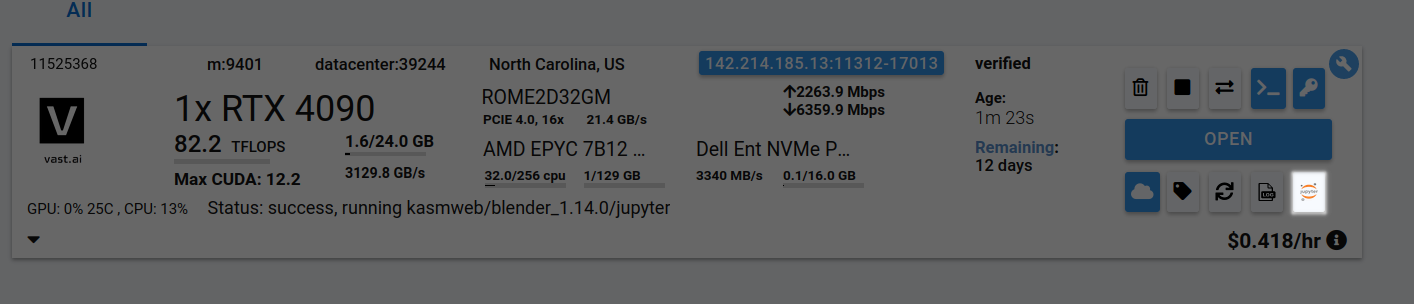

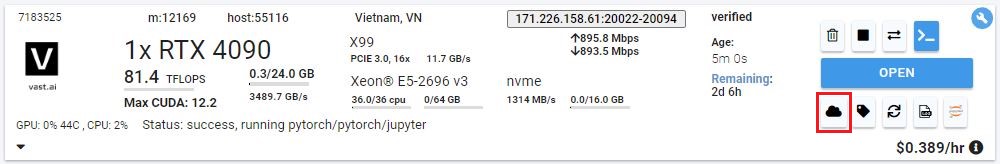

Once you rent a GPU your instance will being spinning up on the Instances page.

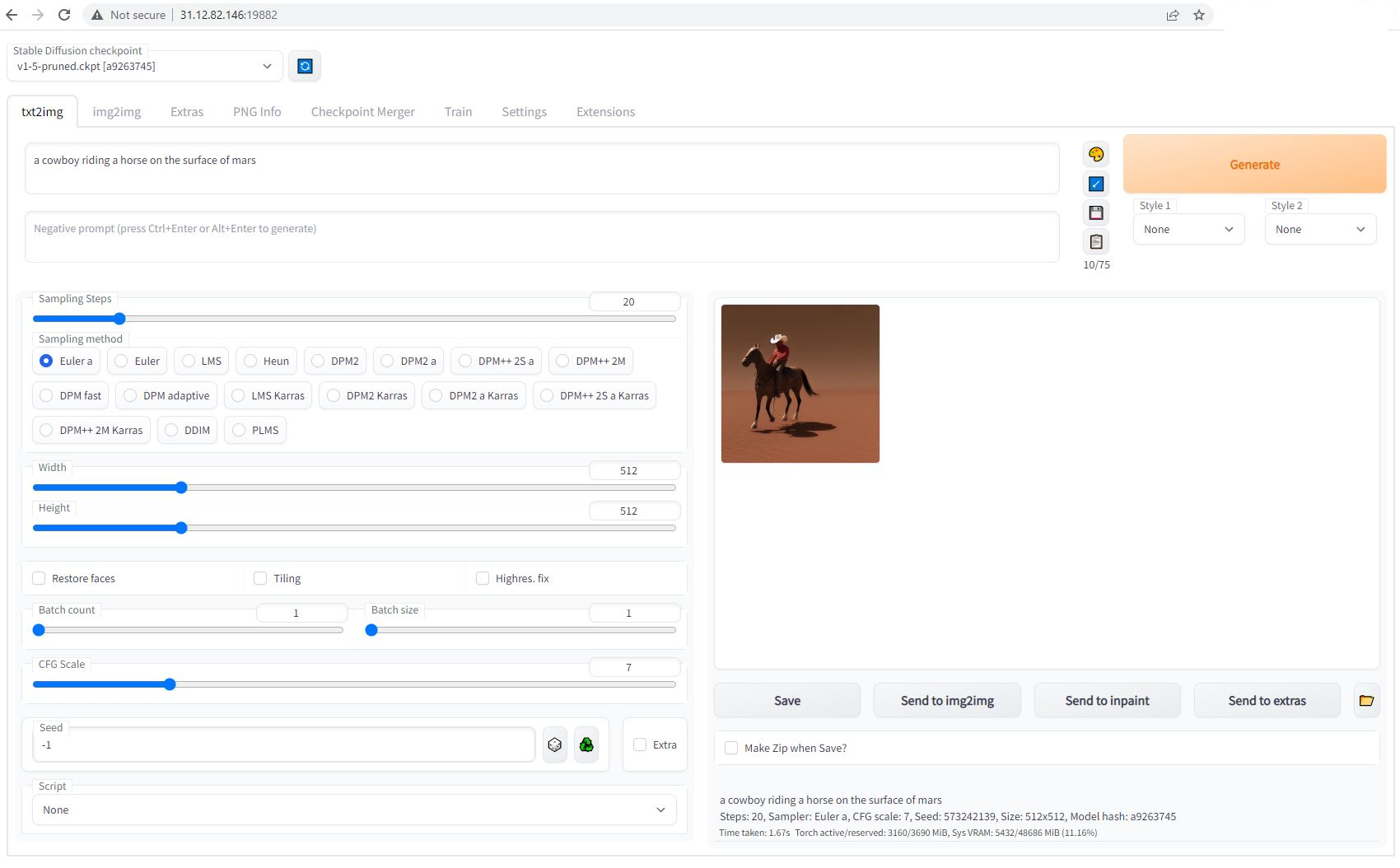

You know the API will be ready when your instance looks like this:

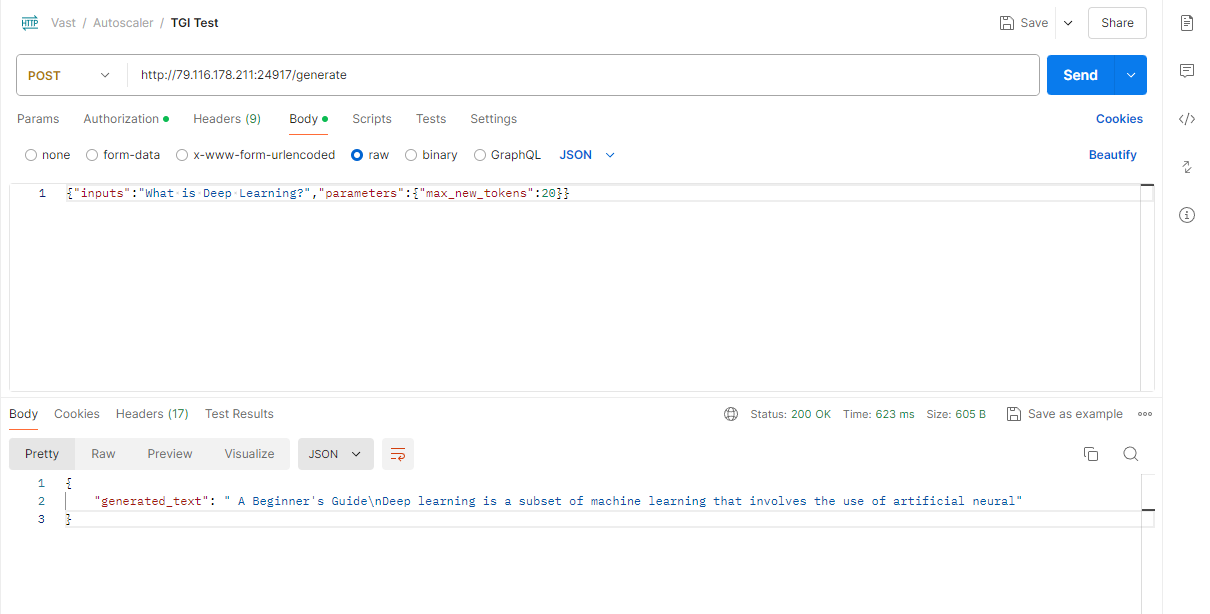

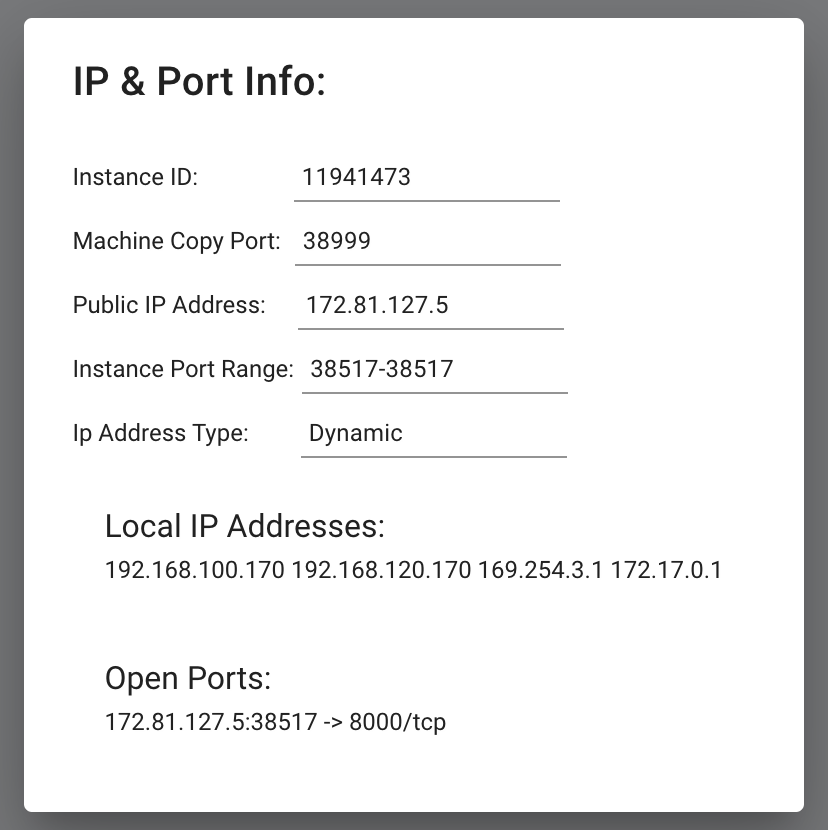

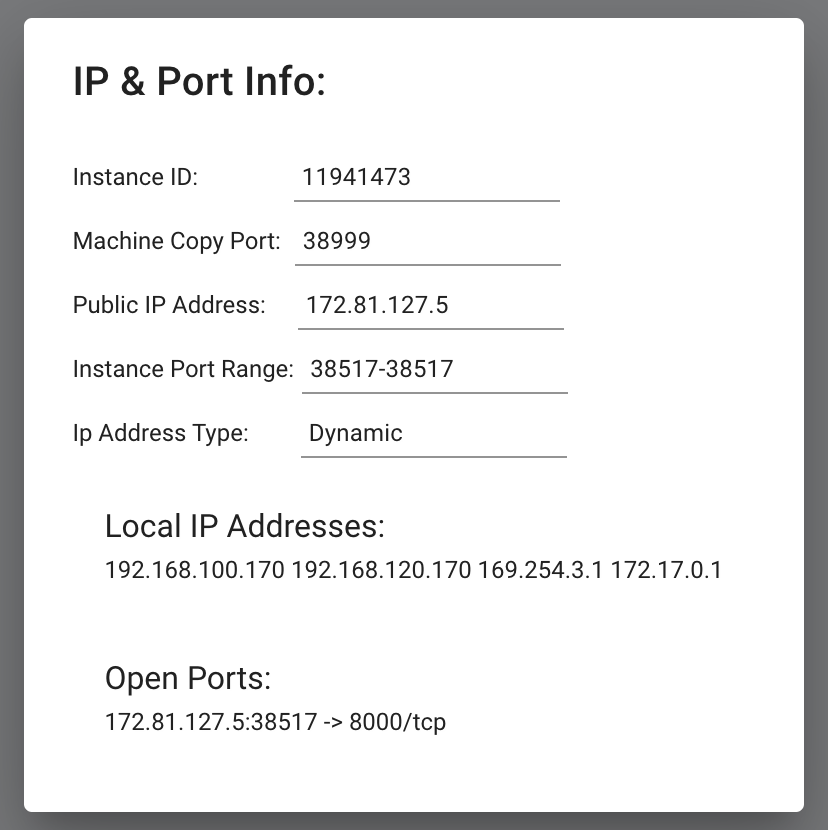

Once your instance is ready you will need to find where your API is exposed. Go to the IP & Config by pressing the blue button on the top of the instance card. You can see the networking configuration here.

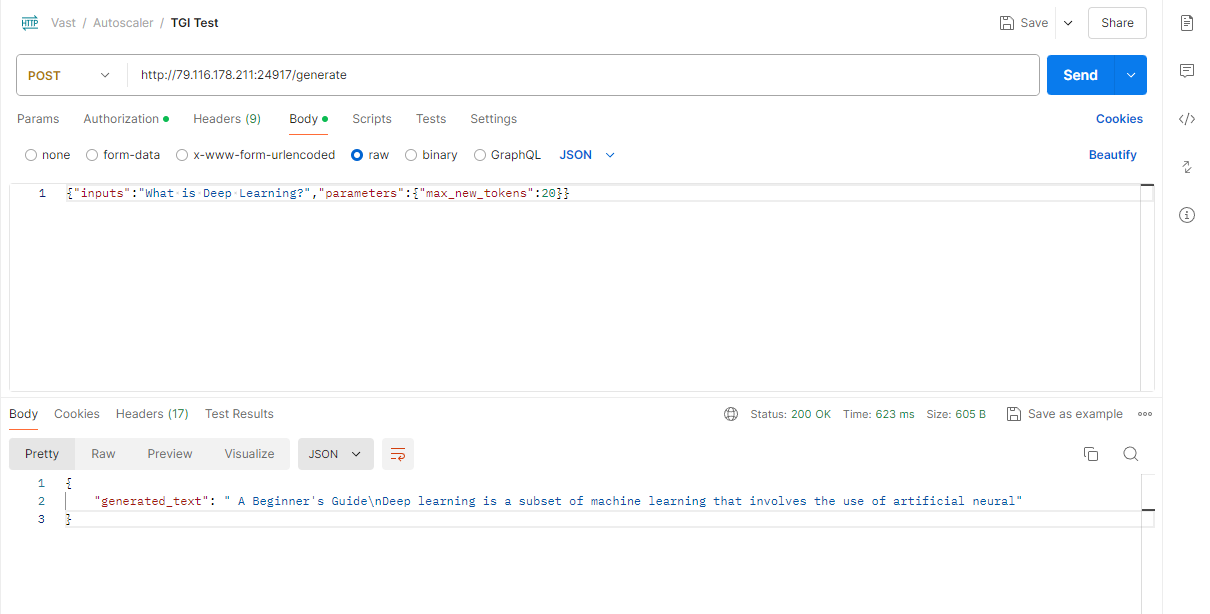

After opening the IP & Port Config you should see a forwarded port from 5001, this is where your API resides. To hit TGI you can use the '/generate' endpoint on that port.

## 5) Congratulations!

You now have a running instance with an API that is using TGI loaded up with Llama3 8B!

---

## SSH Connection

**URL:** llms-txt#ssh-connection

**Contents:**

- About SSH

- Quick start: Generate and add your SSH key to your Vast account

- Connecting to your Instance

- Direct vs Proxy Connections

- Tmux

- SSH Local Port Forwarding

- SSH Alternative - Jupyter Terminal

- Troubleshooting

- Permission Denied (publickey)

- SSH Key Changes

Source: https://docs.vast.ai/documentation/instances/connect/ssh

Learn how to securely connect to Vast.ai instances using SSH. Generate keys, establish connections, use port forwarding, and integrate with VS Code.

**SSH (Secure Shell)** is a protocol for safely connecting to remote servers. It encrypts your connection so you can:

* Log in securely

* Run commands remotely

* Transfer files without exposing your data

Vast.ai instances are configured to accept keys only - Password authentication is disabled for improved security.

## Quick start: Generate and add your SSH key to your Vast account

**1. Generate a SSH key pair in your terminal**

1. Creates two files (by default in \~/.ssh/):

* id\_ed25519 → your **private key** (keep safe, never share).

* id\_ed25519.pub → your **public key** (safe to share, add to servers).

2. -C "[your\_email@example.com](mailto:your_email@example.com)" is optional. Whatever you put there is stored as a comment in the public key file (e.g., id\_ed25519.pub). It's just for identification (helpful if you use multiple keys), not for security.

When you run ssh-keygen -t ed25519 in **Windows PowerShell**, the keys are created in your Windows user profile folder:

`C:\Users\\.ssh\`

**2. Copy your public key.**

**3. Add it in your** [**vast account**](https://cloud.vast.ai/manage-keys/)

## 2) Modifying the Template

Once you have selected the template, you will need to then add in your huggingface token and click the 'Select & Save' button.

You can add your huggingface token with the rest of the docker run options.

This is the only modification you will need to make on this template.

You can then press 'Select & Save' to get ready to launch your instance.

Once you have selected the template, you can then choose to rent a GPU of your choice from either the search page or the CLI/API.

For someone just getting started I recommend either an Nvidia RTX 4090, or an A5000.

## 4) Monitor Your Instance

Once you rent a GPU your instance will being spinning up on the Instances page.

You know the API will be ready when your instance looks like this:

Once your instance is ready you will need to find where your API is exposed. Go to the IP & Config by pressing the blue button on the top of the instance card. You can see the networking configuration here.

After opening the IP & Port Config you should see a forwarded port from 5001, this is where your API resides. To hit TGI you can use the '/generate' endpoint on that port.

## 5) Congratulations!

You now have a running instance with an API that is using TGI loaded up with Llama3 8B!

---

## SSH Connection

**URL:** llms-txt#ssh-connection

**Contents:**

- About SSH

- Quick start: Generate and add your SSH key to your Vast account

- Connecting to your Instance

- Direct vs Proxy Connections

- Tmux

- SSH Local Port Forwarding

- SSH Alternative - Jupyter Terminal

- Troubleshooting

- Permission Denied (publickey)

- SSH Key Changes

Source: https://docs.vast.ai/documentation/instances/connect/ssh

Learn how to securely connect to Vast.ai instances using SSH. Generate keys, establish connections, use port forwarding, and integrate with VS Code.

**SSH (Secure Shell)** is a protocol for safely connecting to remote servers. It encrypts your connection so you can:

* Log in securely

* Run commands remotely

* Transfer files without exposing your data

Vast.ai instances are configured to accept keys only - Password authentication is disabled for improved security.

## Quick start: Generate and add your SSH key to your Vast account

**1. Generate a SSH key pair in your terminal**

1. Creates two files (by default in \~/.ssh/):

* id\_ed25519 → your **private key** (keep safe, never share).

* id\_ed25519.pub → your **public key** (safe to share, add to servers).

2. -C "[your\_email@example.com](mailto:your_email@example.com)" is optional. Whatever you put there is stored as a comment in the public key file (e.g., id\_ed25519.pub). It's just for identification (helpful if you use multiple keys), not for security.

When you run ssh-keygen -t ed25519 in **Windows PowerShell**, the keys are created in your Windows user profile folder:

`C:\Users\\.ssh\`

**2. Copy your public key.**

**3. Add it in your** [**vast account**](https://cloud.vast.ai/manage-keys/)

**Add & Generate SSH Key (using** [**Vast CLI**](/cli/get-started)**)**

1. **Install Vast CLI:**

2. **Generate an API key in your vast account:**

1. Open [CLI page](https://cloud.vast.ai/cli/)

2. Create an API key

**Add & Generate SSH Key (using** [**Vast CLI**](/cli/get-started)**)**

1. **Install Vast CLI:**

2. **Generate an API key in your vast account:**

1. Open [CLI page](https://cloud.vast.ai/cli/)

2. Create an API key

3. **Generate a new SSH key pair** (you will need your vast API key):

* Saves keys as \~/.ssh/id\_ed25519 (private) and \~/.ssh/id\_ed25519.pub (public).

* Backs up existing keys as .backup\_\[timestamp].

* Keys are stored in your Vast account and used for new instances.

* Adding a key to your account keys only applies to **new instances**.

* Existing instances will **not** get the new key automatically. To add a key, use the **instance-specific SSH interface**.

* For **VM instances**, changing keys requires recreating the VM.

## Connecting to your Instance

Start a new instance and click the SSH icon to see your connection information.

3. **Generate a new SSH key pair** (you will need your vast API key):

* Saves keys as \~/.ssh/id\_ed25519 (private) and \~/.ssh/id\_ed25519.pub (public).

* Backs up existing keys as .backup\_\[timestamp].

* Keys are stored in your Vast account and used for new instances.

* Adding a key to your account keys only applies to **new instances**.

* Existing instances will **not** get the new key automatically. To add a key, use the **instance-specific SSH interface**.

* For **VM instances**, changing keys requires recreating the VM.

## Connecting to your Instance

Start a new instance and click the SSH icon to see your connection information.

Now you can enter the connection command string into your terminal

You should now see a screen similar to this. You will, by default, be placed into a tmux session.

Now you can enter the connection command string into your terminal

You should now see a screen similar to this. You will, by default, be placed into a tmux session.

### Direct vs Proxy Connections

Vast offers both proxy (default) and direct connection methods for SSH:

* **Proxy SSH**: Works on all machines, slower for data transfer, uses Vast proxy server

* **Direct SSH**: Requires machines with open ports, faster and more reliable, preferred method

We connect you to a tmux session by default for reliability and to prevent unintentional termination of foreground processes. You can create a new bash terminal window with `ctrl+b` + `c`. Cycle through your windows with `ctrl+b` + `n`

There is an excellent guide for getting to grips with tmux at [https://tmuxcheatsheet.com](https://tmuxcheatsheet.com/)

If, however, you would prefer to disable TMUX, you can apply the following either in a terminal or from your template's on-start section.

## SSH Local Port Forwarding

An often overlooked feature of SSH is its ability to forward local ports to another machine. When you access a server remotely over SSH, you can make ports from the remote machine available as if they were listening on your own device. This is a secure alternative to opening ports on the public interface as all data is transported over the SSH connection.

This SSH command connects to the remote instance and sets up **local port forwarding** (SSH tunneling):

**Connection details:**

* Connects to IP 180.123.123.123 as user root

* Uses port 1234 instead of the default SSH port 22

**Port forwarding (the key part):**

* `-L 8080:localhost:8080` - Creates a tunnel so when you access localhost:8080 on your local machine, it forwards to port `8080` on the remote server

* `-L 5000:localhost:5000` - Same thing for port `5000`

You can repeat the `-L` arguments to forward as many ports as you need.

**What this means:** After connecting, you can open your web browser and go to [https://localhost:8080](https://localhost:8080) or [http://localhost:5000](http://localhost:5000) on your local computer, and you'll actually be accessing services running on those ports on the remote server. It's like creating secure "tunnels" through the SSH connection to reach applications on the remote machine that might not be directly accessible from the internet.

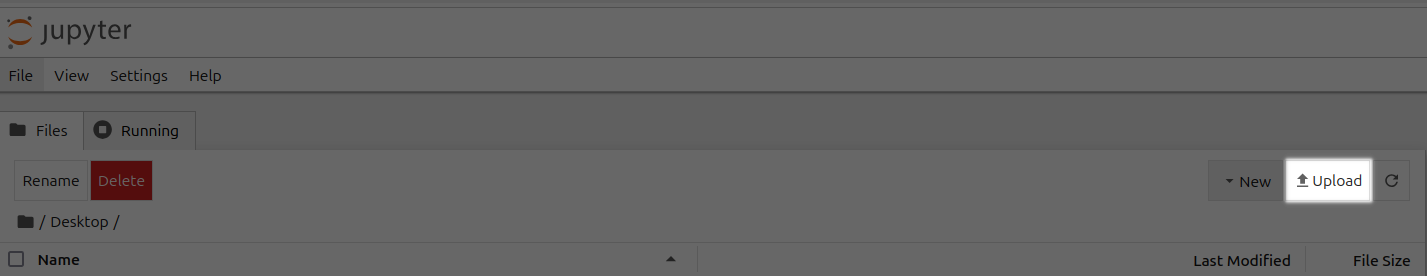

## SSH Alternative - Jupyter Terminal

As a simple alternative to SSH, you might like to consider Jupyter Terminal instead. All instances started in Jupyter launch mode will have this enabled. It is a very straightforward web-based terminal with session persistence. It's great for a quick CLI session.

Access the terminal from the SSH connections interface.

### Direct vs Proxy Connections

Vast offers both proxy (default) and direct connection methods for SSH:

* **Proxy SSH**: Works on all machines, slower for data transfer, uses Vast proxy server

* **Direct SSH**: Requires machines with open ports, faster and more reliable, preferred method

We connect you to a tmux session by default for reliability and to prevent unintentional termination of foreground processes. You can create a new bash terminal window with `ctrl+b` + `c`. Cycle through your windows with `ctrl+b` + `n`

There is an excellent guide for getting to grips with tmux at [https://tmuxcheatsheet.com](https://tmuxcheatsheet.com/)

If, however, you would prefer to disable TMUX, you can apply the following either in a terminal or from your template's on-start section.

## SSH Local Port Forwarding

An often overlooked feature of SSH is its ability to forward local ports to another machine. When you access a server remotely over SSH, you can make ports from the remote machine available as if they were listening on your own device. This is a secure alternative to opening ports on the public interface as all data is transported over the SSH connection.

This SSH command connects to the remote instance and sets up **local port forwarding** (SSH tunneling):

**Connection details:**

* Connects to IP 180.123.123.123 as user root

* Uses port 1234 instead of the default SSH port 22

**Port forwarding (the key part):**

* `-L 8080:localhost:8080` - Creates a tunnel so when you access localhost:8080 on your local machine, it forwards to port `8080` on the remote server

* `-L 5000:localhost:5000` - Same thing for port `5000`

You can repeat the `-L` arguments to forward as many ports as you need.

**What this means:** After connecting, you can open your web browser and go to [https://localhost:8080](https://localhost:8080) or [http://localhost:5000](http://localhost:5000) on your local computer, and you'll actually be accessing services running on those ports on the remote server. It's like creating secure "tunnels" through the SSH connection to reach applications on the remote machine that might not be directly accessible from the internet.

## SSH Alternative - Jupyter Terminal

As a simple alternative to SSH, you might like to consider Jupyter Terminal instead. All instances started in Jupyter launch mode will have this enabled. It is a very straightforward web-based terminal with session persistence. It's great for a quick CLI session.

Access the terminal from the SSH connections interface.

### Permission Denied (publickey)

If you get this error when trying to SSH:

1. Ensure your SSH key is added to your [Vast account](https://cloud.vast.ai/manage-keys/)

2. Verify you're using the correct private key

3. Check key file permissions: `chmod 600 ~/.ssh/id_ed25519`

4. Use `-vv` flag for detailed debug info: `ssh -vv -p PORT root@IP`

* New account keys only apply to NEW instances created after adding the key

* Existing instances keep their original keys (won't get new keys automatically)

* For VM instances, changing keys requires recreating the VM

* To add keys to existing instances, use the instance-specific SSH interface

### General Connection Issues

You can often determine the exact cause of a connection failure by using the -vv arguments with ssh to get more information.

Common reasons include:

* Using the wrong private key

* Incorrect permissions for your private key

* Public key not added to instance or account

* Connecting to the wrong port

## SCP & SFTP File Transfer

Both **SCP** (Secure Copy Protocol) and **SFTP** (SSH File Transfer Protocol) are tools for securely transferring files that piggyback on the SSH protocol. They use the same authentication and encryption as SSH.

### SCP (Secure Copy Protocol)

* **What it is:** Simple, command-line tool for copying files between local and remote machines

* **Best for:** Quick, one-time file transfers

* **Syntax:** `scp -P source destination`

```bash Bash theme={null}

**Examples:**

Example 1 (unknown):

```unknown

```

Example 2 (unknown):

```unknown

1. Creates two files (by default in \~/.ssh/):

* id\_ed25519 → your **private key** (keep safe, never share).

* id\_ed25519.pub → your **public key** (safe to share, add to servers).

2. -C "[your\_email@example.com](mailto:your_email@example.com)" is optional. Whatever you put there is stored as a comment in the public key file (e.g., id\_ed25519.pub). It's just for identification (helpful if you use multiple keys), not for security.

When you run ssh-keygen -t ed25519 in **Windows PowerShell**, the keys are created in your Windows user profile folder:

`C:\Users\\.ssh\`

**2. Copy your public key.**

```

Example 3 (unknown):

```unknown

```

Example 4 (unknown):

```unknown

**3. Add it in your** [**vast account**](https://cloud.vast.ai/manage-keys/)

### Permission Denied (publickey)

If you get this error when trying to SSH:

1. Ensure your SSH key is added to your [Vast account](https://cloud.vast.ai/manage-keys/)

2. Verify you're using the correct private key

3. Check key file permissions: `chmod 600 ~/.ssh/id_ed25519`

4. Use `-vv` flag for detailed debug info: `ssh -vv -p PORT root@IP`

* New account keys only apply to NEW instances created after adding the key

* Existing instances keep their original keys (won't get new keys automatically)

* For VM instances, changing keys requires recreating the VM

* To add keys to existing instances, use the instance-specific SSH interface

### General Connection Issues

You can often determine the exact cause of a connection failure by using the -vv arguments with ssh to get more information.

Common reasons include:

* Using the wrong private key

* Incorrect permissions for your private key

* Public key not added to instance or account

* Connecting to the wrong port

## SCP & SFTP File Transfer

Both **SCP** (Secure Copy Protocol) and **SFTP** (SSH File Transfer Protocol) are tools for securely transferring files that piggyback on the SSH protocol. They use the same authentication and encryption as SSH.

### SCP (Secure Copy Protocol)

* **What it is:** Simple, command-line tool for copying files between local and remote machines

* **Best for:** Quick, one-time file transfers

* **Syntax:** `scp -P source destination`

```bash Bash theme={null}

**Examples:**

Example 1 (unknown):

```unknown

```

Example 2 (unknown):

```unknown

1. Creates two files (by default in \~/.ssh/):

* id\_ed25519 → your **private key** (keep safe, never share).

* id\_ed25519.pub → your **public key** (safe to share, add to servers).

2. -C "[your\_email@example.com](mailto:your_email@example.com)" is optional. Whatever you put there is stored as a comment in the public key file (e.g., id\_ed25519.pub). It's just for identification (helpful if you use multiple keys), not for security.

When you run ssh-keygen -t ed25519 in **Windows PowerShell**, the keys are created in your Windows user profile folder:

`C:\Users\\.ssh\`

**2. Copy your public key.**

```

Example 3 (unknown):

```unknown

```

Example 4 (unknown):

```unknown

**3. Add it in your** [**vast account**](https://cloud.vast.ai/manage-keys/)

**Add & Generate SSH Key (using** [**Vast CLI**](/cli/get-started)**)**

1. **Install Vast CLI:**

```

---

## unlist volume

**URL:** llms-txt#unlist-volume

Source: https://docs.vast.ai/api-reference/volumes/unlist-volume

api-reference/openapi.json post /api/v0/volumes/unlist/

Remove a volume listing from the marketplace.

CLI Usage: `vastai unlist volume `

---

## streaming response from model API to client

**URL:** llms-txt#streaming-response-from-model-api-to-client

class GenerateStreamHandler(EndpointHandler[InputData]):

@property

def endpoint(self) -> str:

return "/generate_stream"

@classmethod

def payload_cls(cls) -> Type[InputData]:

return InputData

def generate_payload_json(self, payload: InputData) -> Dict[str, Any]:

return dataclasses.asdict(payload)

def make_benchmark_payload(self) -> InputData:

return InputData.for_test()

async def generate_client_response(

self, client_request: web.Request, model_response: ClientResponse

) -> Union[web.Response, web.StreamResponse]:

match model_response.status:

case 200:

log.debug("Streaming response...")

res = web.StreamResponse()

res.content_type = "text/event-stream"

await res.prepare(client_request)

async for chunk in model_response.content:

await res.write(chunk)

await res.write_eof()

log.debug("Done streaming response")

return res

case code:

log.debug("SENDING RESPONSE: ERROR: unknown code")

return web.Response(status=code)

---

## remove defjob

**URL:** llms-txt#remove-defjob

Source: https://docs.vast.ai/api-reference/machines/remove-defjob

api-reference/openapi.json delete /api/v0/machines/{machine_id}/defjob/

Deletes the default job (background instances) for a specified machine.

CLI Usage: `vastai remove defjob `

---

## Creating Templates for GROBID

**URL:** llms-txt#creating-templates-for-grobid

**Contents:**

- Introduction

- Find The Image and Tag You Want to Use

- Step 1 - Find a Suitable Image

- Step 2 - Selecting the Version Tag

- Configuring The Template

- Step 1 - Setting Your Chosen Image and Tag in Your Vast.ai Template

- Step 2 - Map Ports and Specify Your Image and Tag Combination

- Step 3 - Select the Launch Mode

- Step 4 - Look for CMD or ENTRYPOINT command

- Step 5 - Fill Out On-start Script section using the CMD command we just found

Source: https://docs.vast.ai/documentation/templates/examples/grobid

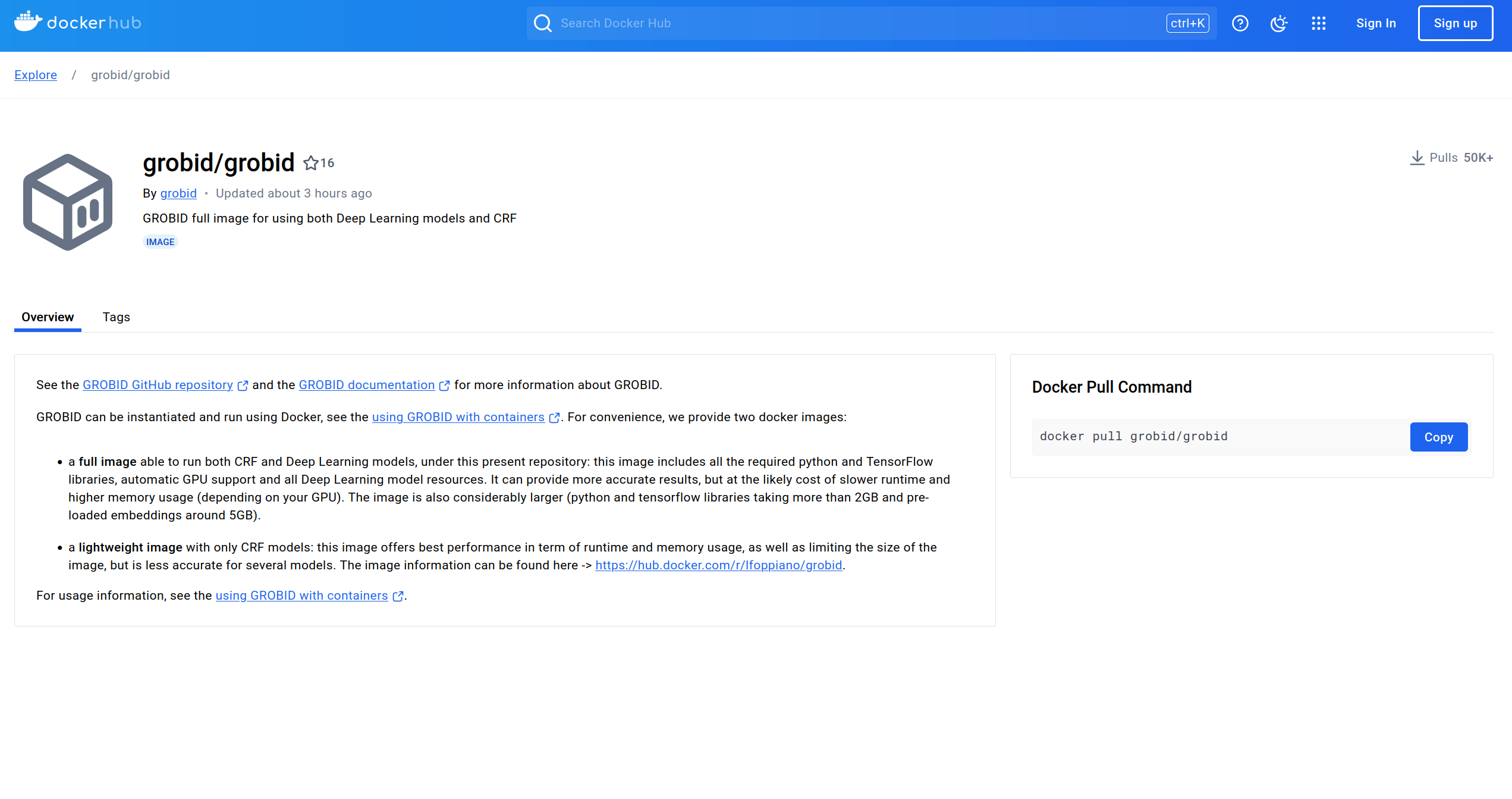

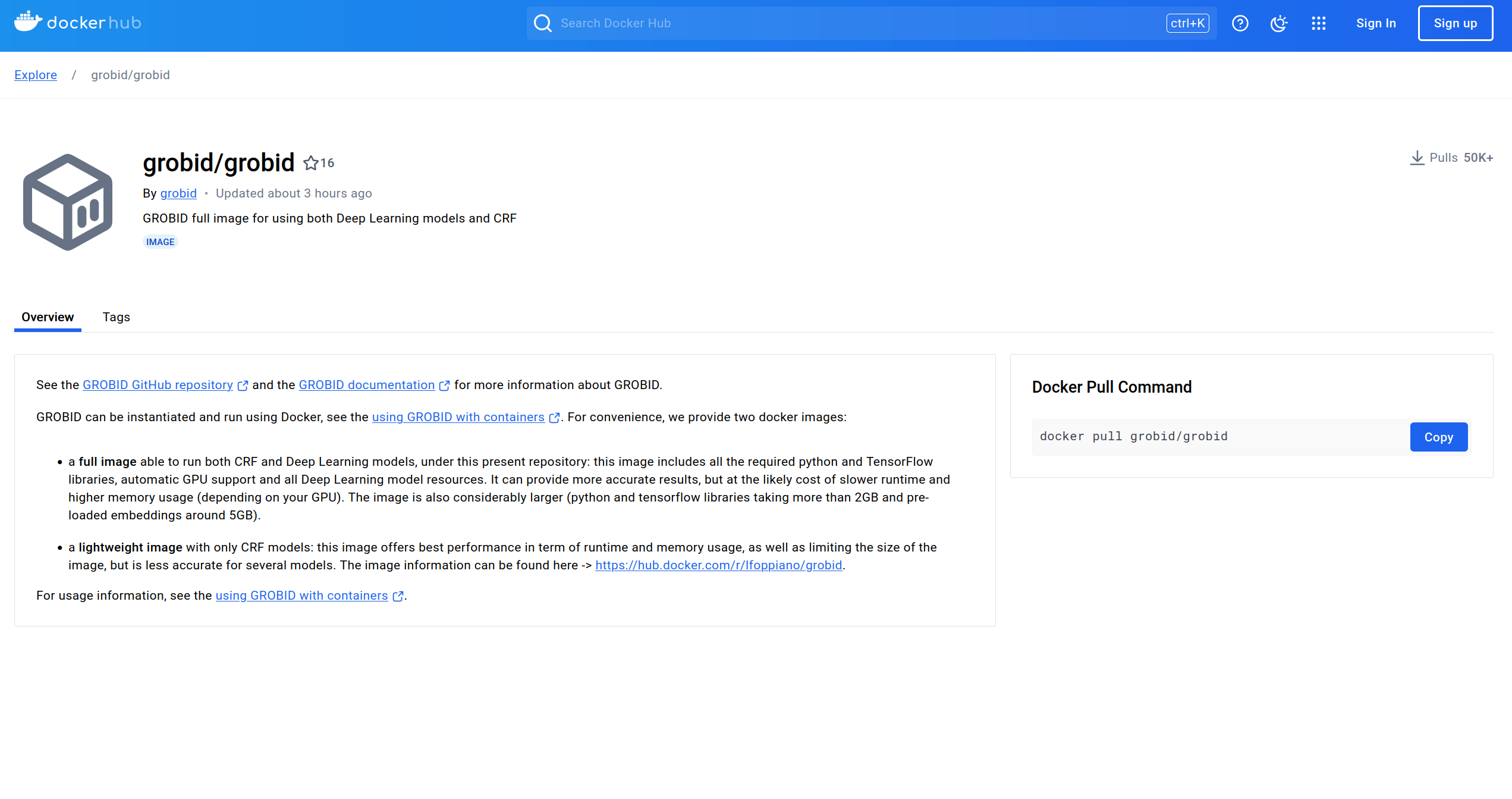

This guide demonstrates creating a template using an existing Docker image. See our [Creating Templates](/documentation/templates/creating-templates) guide for more details on template configuration. We will be using the image from [GROBID on dockerhub](https://hub.docker.com/r/grobid/grobid).

## Find The Image and Tag You Want to Use

### Step 1 - Find a Suitable Image

There are multiple GROBID images in dockerhub, but for this guide we will be using the official GROBID image.

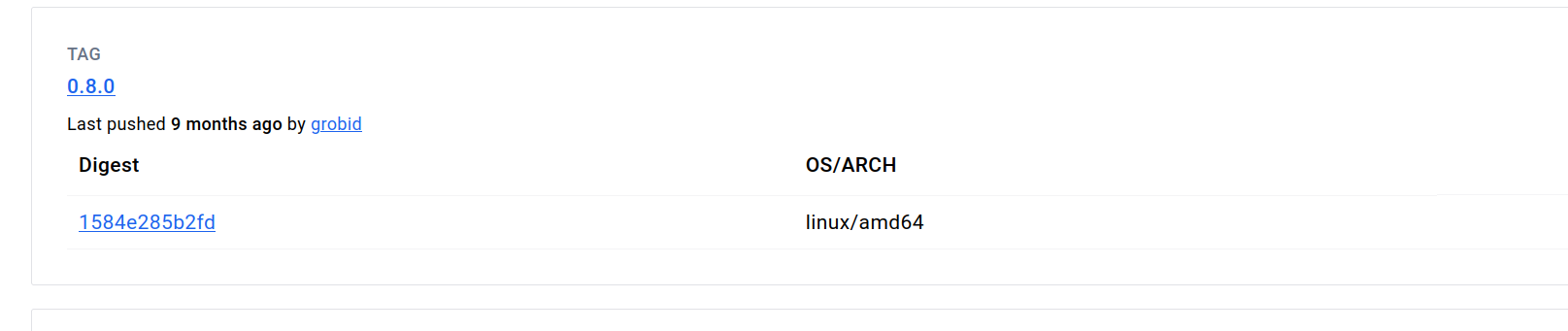

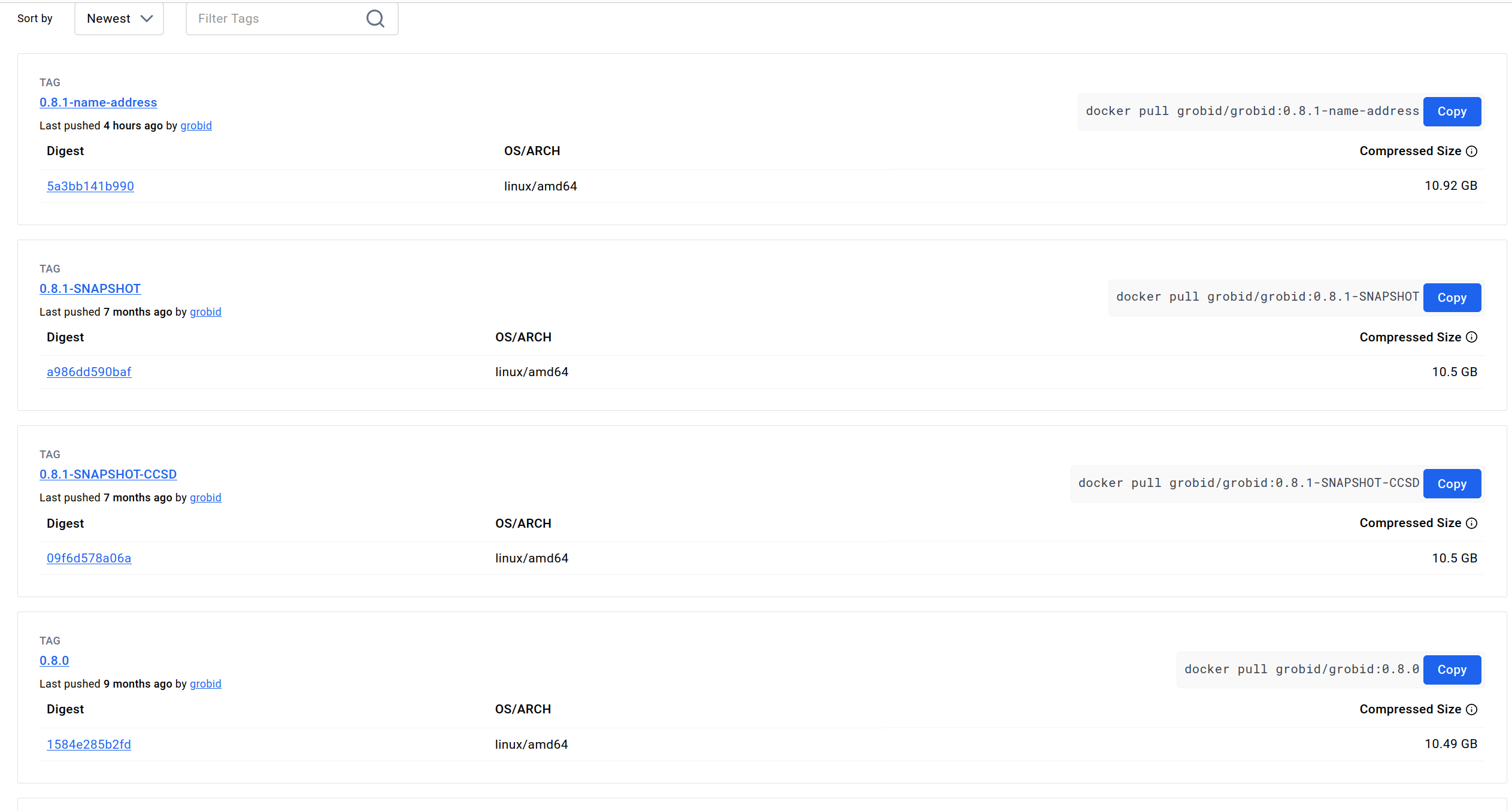

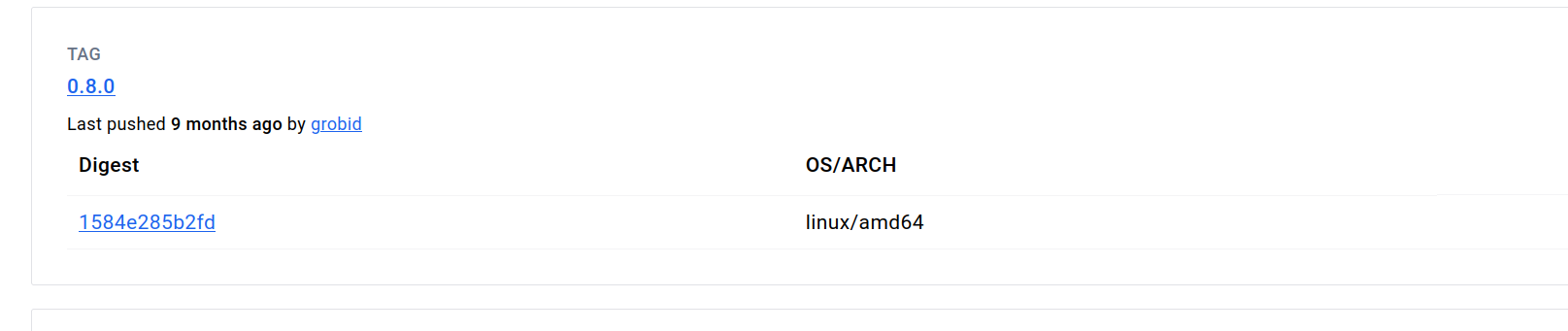

### Step 2 - Selecting the Version Tag

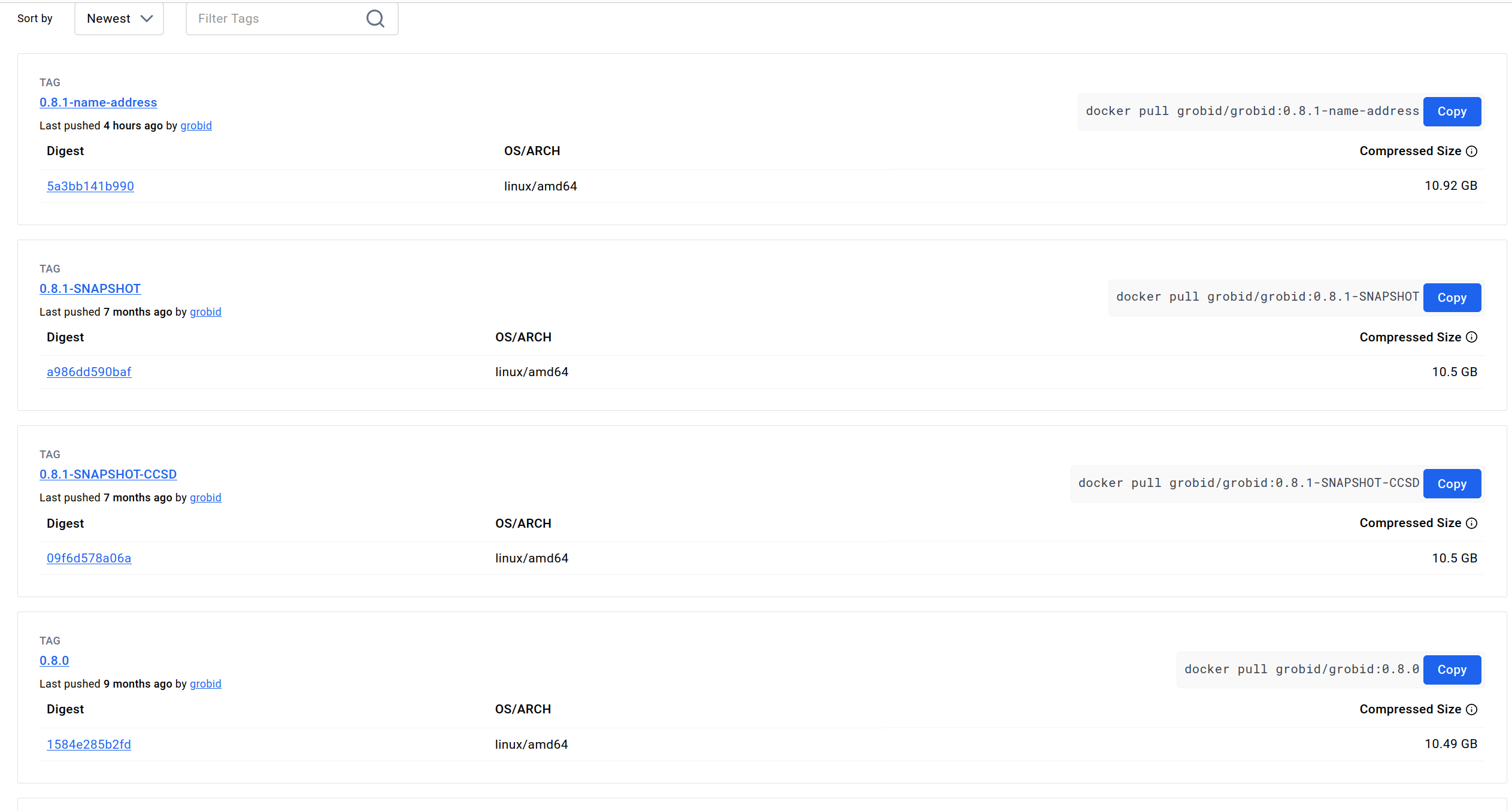

If you don't already have a version you intend to use, we recommend selecting the latest stable version.

At the time of writing, the current stable version is 0.8.0, so that is the version we'll be using here.

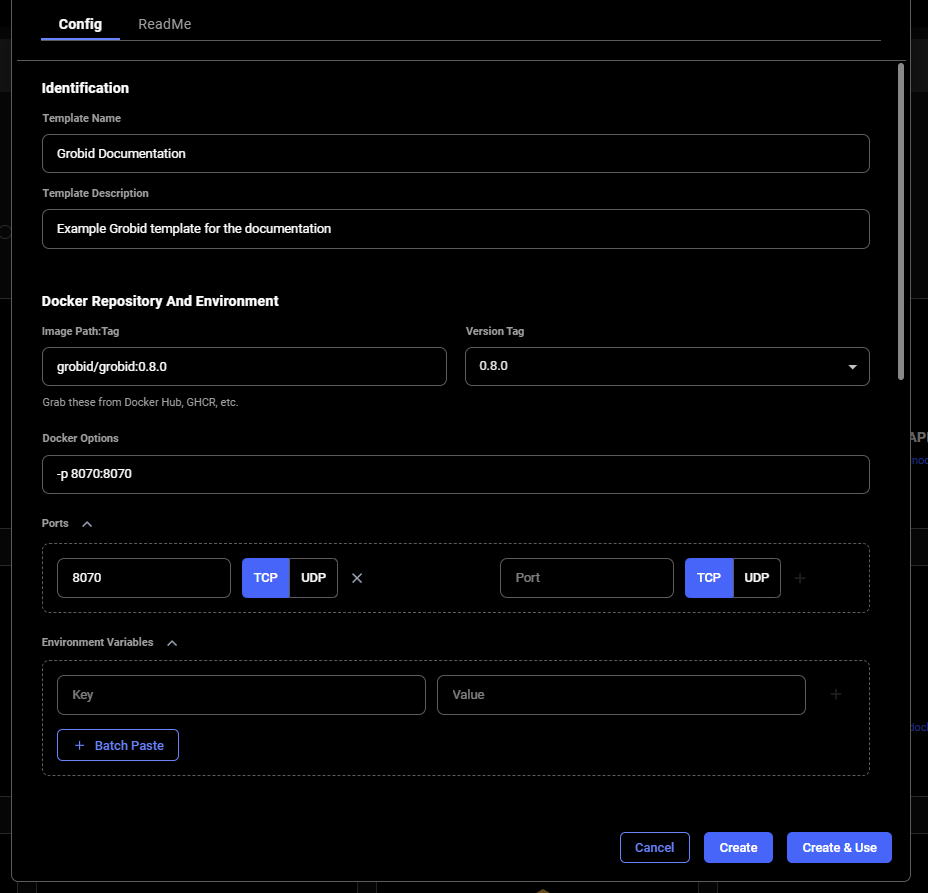

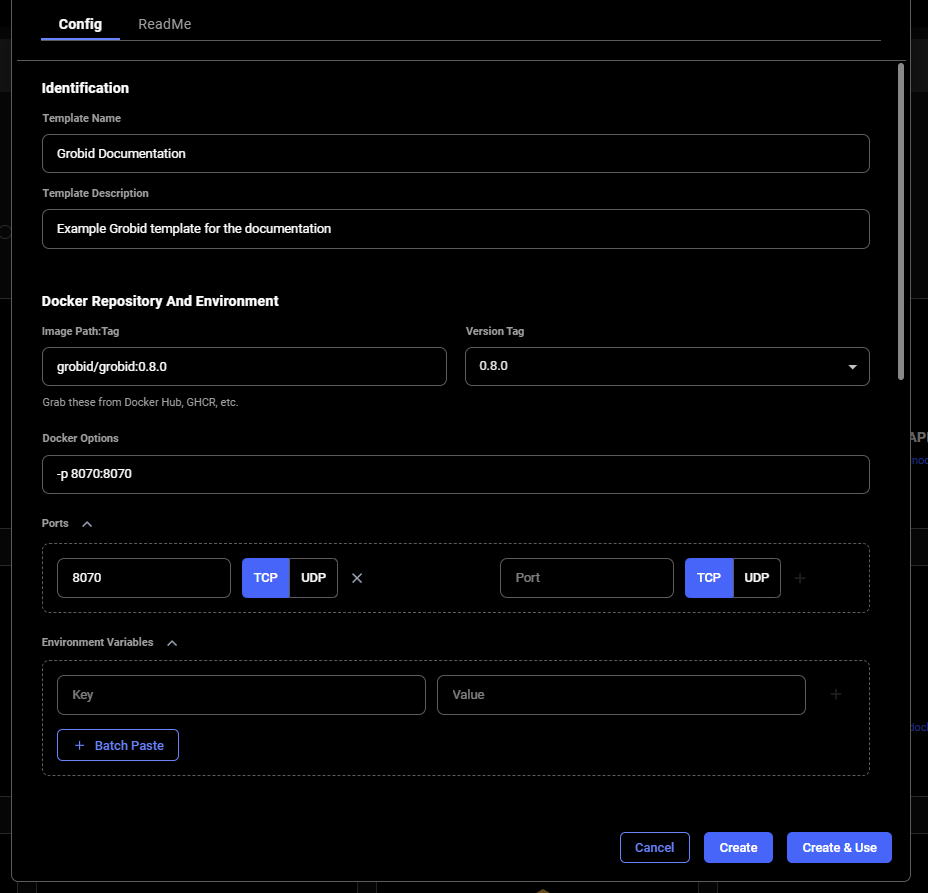

## Configuring The Template

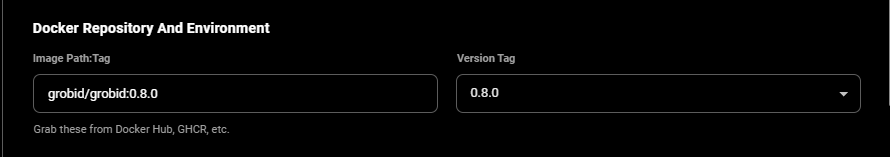

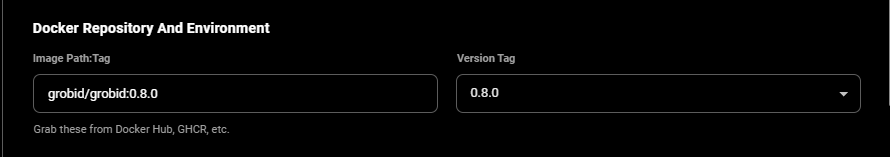

### Step 1 - Setting Your Chosen Image and Tag in Your Vast.ai Template

In the Docker Repository And Environment section, you will enter your image path and tag.

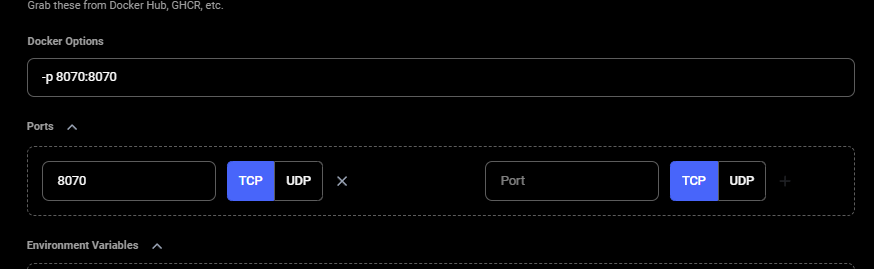

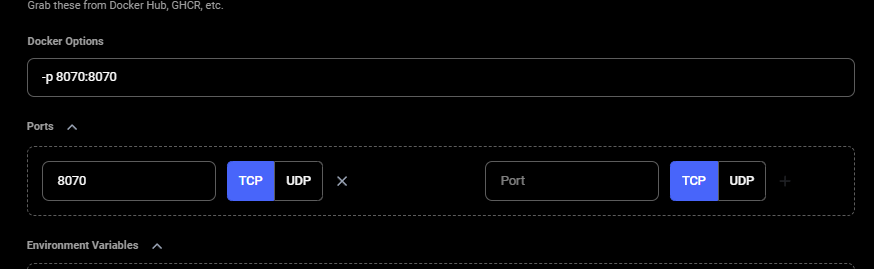

### Step 2 - Map Ports and Specify Your Image and Tag Combination

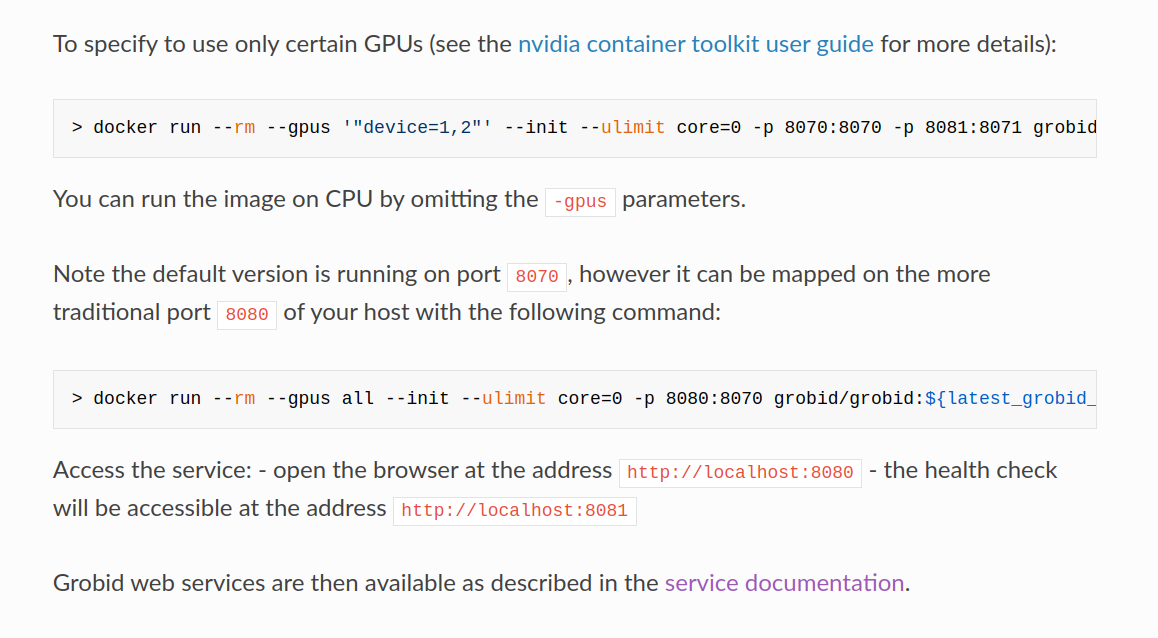

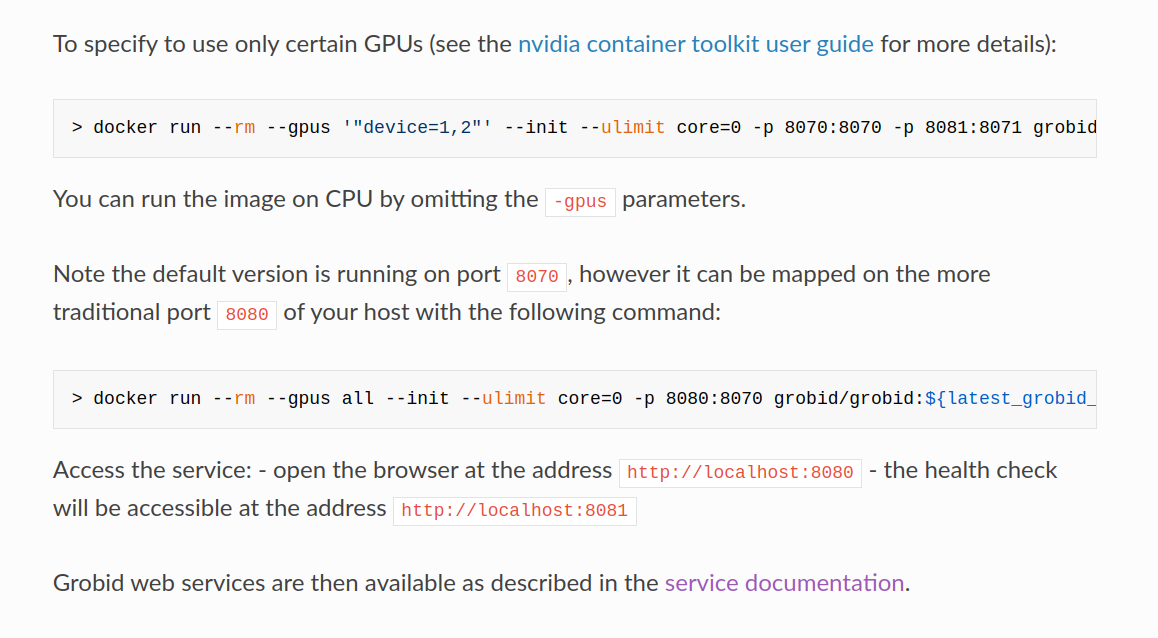

The overview page for this image at dockerhub has a link to their guide to [using GROBID with containers](https://grobid.readthedocs.io/en/latest/Grobid-docker/#crf-and-deep-learning-image), which you can read to get their recommendations for containerizing GROBID.

As we follow their guide to containerizing GROBID, we'll need to make sure the container's port 8070 is set to the host machine's port 8070. We will do that in the Vast.ai template. We use -p 8070:8070 as one of the docker run options.

**Note:** Vast only allows -e and -p docker run options to set environment variables and expose ports.

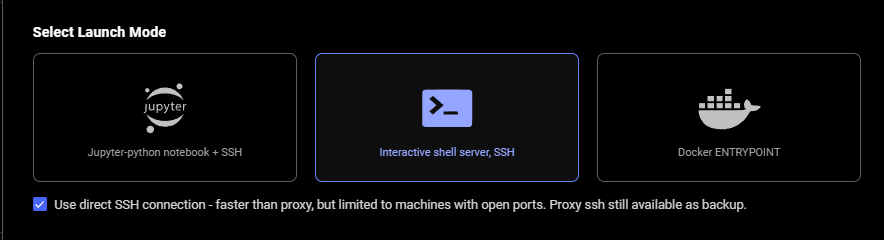

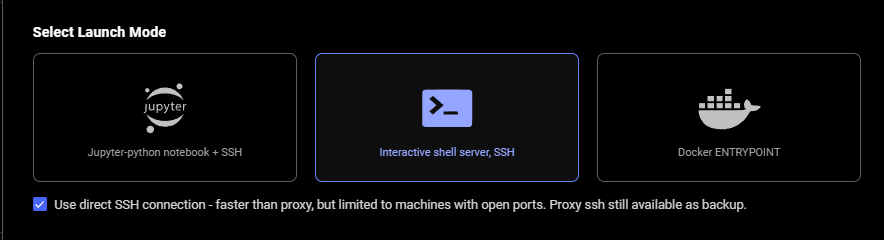

### Step 3 - Select the Launch Mode

Here we will select the SSH launch mode.

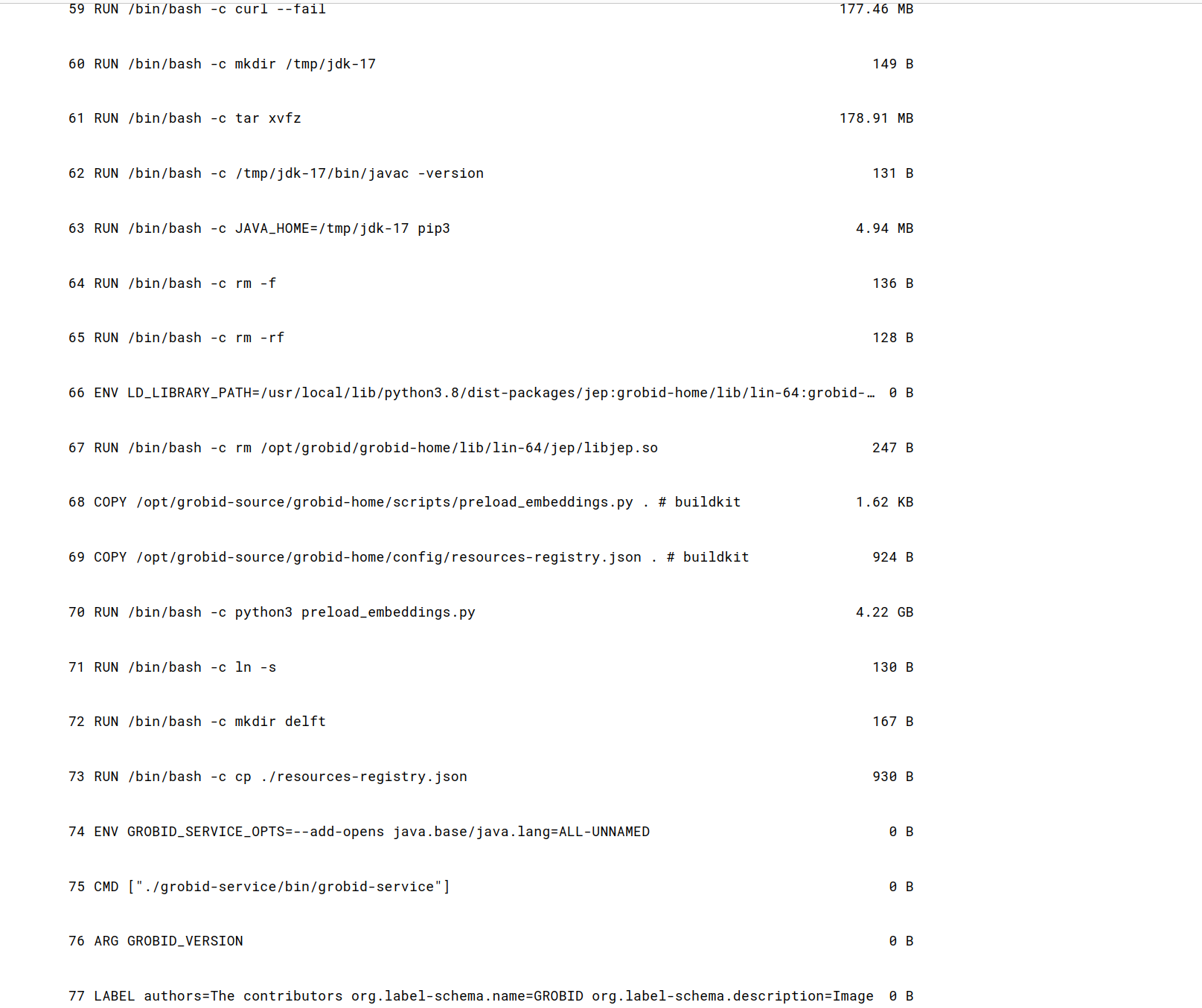

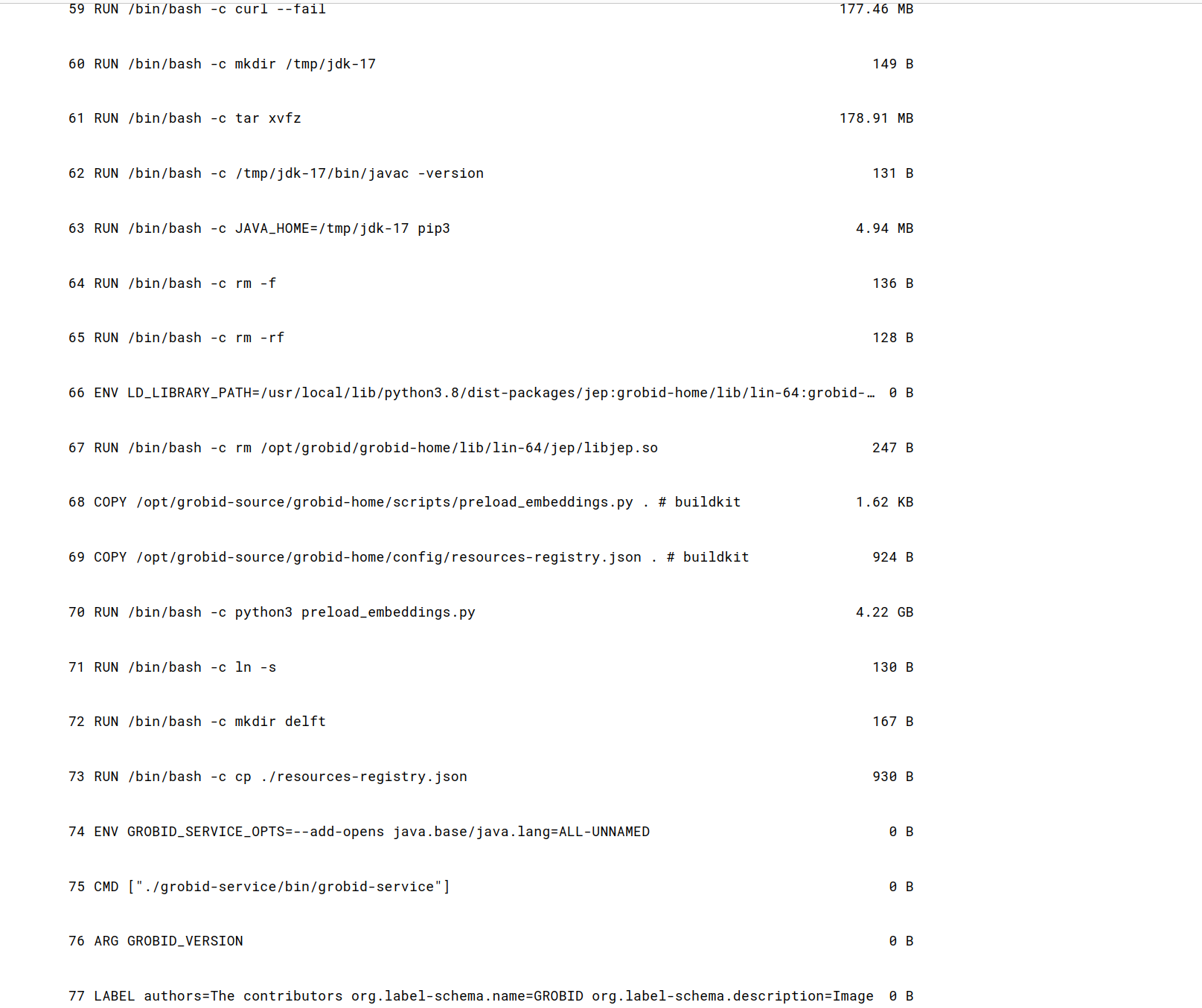

### Step 4 - Look for CMD or ENTRYPOINT command

To find this for the template we are creating, we searched the [image's page in Dockerhub](https://hub.docker.com/r/grobid/grobid) and found the **CMD **command in the **Tags** tab under the link "0.8.0" highlighted in blue.

### Step 5 - Fill Out On-start Script section using the CMD command we just found

Next, we add the contents of the **CMD **command to the end of the bash commands section of the **On-start Script** fields.

Also, appended environment variables to /etc/environment file in our on-start section.

**Add & Generate SSH Key (using** [**Vast CLI**](/cli/get-started)**)**

1. **Install Vast CLI:**

```

---

## unlist volume

**URL:** llms-txt#unlist-volume

Source: https://docs.vast.ai/api-reference/volumes/unlist-volume

api-reference/openapi.json post /api/v0/volumes/unlist/

Remove a volume listing from the marketplace.

CLI Usage: `vastai unlist volume `

---

## streaming response from model API to client

**URL:** llms-txt#streaming-response-from-model-api-to-client

class GenerateStreamHandler(EndpointHandler[InputData]):

@property

def endpoint(self) -> str:

return "/generate_stream"

@classmethod

def payload_cls(cls) -> Type[InputData]:

return InputData

def generate_payload_json(self, payload: InputData) -> Dict[str, Any]:

return dataclasses.asdict(payload)

def make_benchmark_payload(self) -> InputData:

return InputData.for_test()

async def generate_client_response(

self, client_request: web.Request, model_response: ClientResponse

) -> Union[web.Response, web.StreamResponse]:

match model_response.status:

case 200:

log.debug("Streaming response...")

res = web.StreamResponse()

res.content_type = "text/event-stream"

await res.prepare(client_request)

async for chunk in model_response.content:

await res.write(chunk)

await res.write_eof()

log.debug("Done streaming response")

return res

case code:

log.debug("SENDING RESPONSE: ERROR: unknown code")

return web.Response(status=code)

---

## remove defjob

**URL:** llms-txt#remove-defjob

Source: https://docs.vast.ai/api-reference/machines/remove-defjob

api-reference/openapi.json delete /api/v0/machines/{machine_id}/defjob/

Deletes the default job (background instances) for a specified machine.

CLI Usage: `vastai remove defjob `

---

## Creating Templates for GROBID

**URL:** llms-txt#creating-templates-for-grobid

**Contents:**

- Introduction

- Find The Image and Tag You Want to Use

- Step 1 - Find a Suitable Image

- Step 2 - Selecting the Version Tag

- Configuring The Template

- Step 1 - Setting Your Chosen Image and Tag in Your Vast.ai Template

- Step 2 - Map Ports and Specify Your Image and Tag Combination

- Step 3 - Select the Launch Mode

- Step 4 - Look for CMD or ENTRYPOINT command

- Step 5 - Fill Out On-start Script section using the CMD command we just found

Source: https://docs.vast.ai/documentation/templates/examples/grobid

This guide demonstrates creating a template using an existing Docker image. See our [Creating Templates](/documentation/templates/creating-templates) guide for more details on template configuration. We will be using the image from [GROBID on dockerhub](https://hub.docker.com/r/grobid/grobid).

## Find The Image and Tag You Want to Use

### Step 1 - Find a Suitable Image

There are multiple GROBID images in dockerhub, but for this guide we will be using the official GROBID image.

### Step 2 - Selecting the Version Tag

If you don't already have a version you intend to use, we recommend selecting the latest stable version.

At the time of writing, the current stable version is 0.8.0, so that is the version we'll be using here.

## Configuring The Template

### Step 1 - Setting Your Chosen Image and Tag in Your Vast.ai Template

In the Docker Repository And Environment section, you will enter your image path and tag.

### Step 2 - Map Ports and Specify Your Image and Tag Combination

The overview page for this image at dockerhub has a link to their guide to [using GROBID with containers](https://grobid.readthedocs.io/en/latest/Grobid-docker/#crf-and-deep-learning-image), which you can read to get their recommendations for containerizing GROBID.

As we follow their guide to containerizing GROBID, we'll need to make sure the container's port 8070 is set to the host machine's port 8070. We will do that in the Vast.ai template. We use -p 8070:8070 as one of the docker run options.

**Note:** Vast only allows -e and -p docker run options to set environment variables and expose ports.

### Step 3 - Select the Launch Mode

Here we will select the SSH launch mode.

### Step 4 - Look for CMD or ENTRYPOINT command

To find this for the template we are creating, we searched the [image's page in Dockerhub](https://hub.docker.com/r/grobid/grobid) and found the **CMD **command in the **Tags** tab under the link "0.8.0" highlighted in blue.

### Step 5 - Fill Out On-start Script section using the CMD command we just found

Next, we add the contents of the **CMD **command to the end of the bash commands section of the **On-start Script** fields.

Also, appended environment variables to /etc/environment file in our on-start section.

This makes environment variables available to all users and processes and ensures they are persistent even if our instance/docker container is rebooted. We suggest doing the same for your templates.

### Step 6 - Name and Save The Template

When you are finished setting up your template, If you haven't already done so, specify the template name and description.

Finally, click **Create & Use** to save the template and navigate to the GPU offers search page. You'll notice that your template is selected and ready to be used.

## Rent an Instance Using Your Template and Open GROBID Web App

Once you have selected an instance offer, You'll click on the **INSTANCES **link in the left menu and see your rented GPU instance that has your template applied.

This makes environment variables available to all users and processes and ensures they are persistent even if our instance/docker container is rebooted. We suggest doing the same for your templates.

### Step 6 - Name and Save The Template

When you are finished setting up your template, If you haven't already done so, specify the template name and description.

Finally, click **Create & Use** to save the template and navigate to the GPU offers search page. You'll notice that your template is selected and ready to be used.

## Rent an Instance Using Your Template and Open GROBID Web App

Once you have selected an instance offer, You'll click on the **INSTANCES **link in the left menu and see your rented GPU instance that has your template applied.

When the instance is done loading and the **>\_CONNECT** state on the blue button appears, you should be able to see the ip range button at the top of the instance card.

When the instance is done loading and the **>\_CONNECT** state on the blue button appears, you should be able to see the ip range button at the top of the instance card.

If you click the IP range button you will see a new modal has the IP and port information for your instance. You'll see the port 8070 that we set listed in Open Ports.

If you click the IP range button you will see a new modal has the IP and port information for your instance. You'll see the port 8070 that we set listed in Open Ports.

You can copy the machine IP and port and load the address (in this example: 195.0.159.206:55734) in a new browser tab or window. This address will load the GROBID web app.

You can copy the machine IP and port and load the address (in this example: 195.0.159.206:55734) in a new browser tab or window. This address will load the GROBID web app.

## Additional Resources

[GROBID Documentation](https://grobid.readthedocs.io/en/latest/)

---

## delete volume

**URL:** llms-txt#delete-volume

Source: https://docs.vast.ai/api-reference/volumes/delete-volume

api-reference/openapi.json delete /api/v0/volumes/

Delete a volume by its ID.

CLI Usage: `vastai delete volume `

---

## Oobabooga (LLM webui)

**URL:** llms-txt#oobabooga-(llm-webui)

**Contents:**

- 1) Setup your Vast account

- 2) Pick the Oobabooga template

- 3) Allocate storage

- 4) Pick a GPU offer

- 5) Open Oobabooga

- 6) Download the LLM

- 7) Load the LLM

- 8) Start chatting!

- 9) Done? Destroy the instance

Source: https://docs.vast.ai/oobabooga-llm-webui

A large language model(LLM) learns to predict the next word in a sentence by analyzing the patterns and structures in the text it has been trained on. This enables it to generate human-like text based on the input it receives.

There are many popular Open Source LLMs: Falcon 40B, Guanaco 65B, LLaMA and Vicuna. Hugging Face maintains [a leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard) of the most popular Open Source models that they have available.

[Oobabooga](https://github.com/oobabooga/text-generation-webui) is a front end that uses Gradio to serve a simple web UI for interacting with the Open Source model. In this guide, we will show you how to run an LLM using Oobabooga on Vast.

## 1) Setup your Vast account

The first thing to do if you are new to Vast is to create an account and verify your email address. Then head to the Billing tab and add credits. Vast uses Stripe to processes credit card payments and also accepts major cryptocurrencies through Coinbase or Crypto.com. \$20 should be enough to start. You can setup auto-top ups so that your credit card is charged when your balance is low.

## 2) Pick the Oobabooga template

Go to the [Templates tab](https://cloud.vast.ai/templates/) and search for "Oobabooga" among recommended templates and select it.

## 3) Allocate storage

The default storage amount will not be enough for downloading an LLM. Use the slider under the Instance Configuration to allocate more storage. 100GB should be enough.

## Additional Resources

[GROBID Documentation](https://grobid.readthedocs.io/en/latest/)

---

## delete volume

**URL:** llms-txt#delete-volume

Source: https://docs.vast.ai/api-reference/volumes/delete-volume

api-reference/openapi.json delete /api/v0/volumes/

Delete a volume by its ID.

CLI Usage: `vastai delete volume `

---

## Oobabooga (LLM webui)

**URL:** llms-txt#oobabooga-(llm-webui)

**Contents:**

- 1) Setup your Vast account

- 2) Pick the Oobabooga template

- 3) Allocate storage

- 4) Pick a GPU offer

- 5) Open Oobabooga

- 6) Download the LLM

- 7) Load the LLM

- 8) Start chatting!

- 9) Done? Destroy the instance

Source: https://docs.vast.ai/oobabooga-llm-webui

A large language model(LLM) learns to predict the next word in a sentence by analyzing the patterns and structures in the text it has been trained on. This enables it to generate human-like text based on the input it receives.

There are many popular Open Source LLMs: Falcon 40B, Guanaco 65B, LLaMA and Vicuna. Hugging Face maintains [a leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard) of the most popular Open Source models that they have available.

[Oobabooga](https://github.com/oobabooga/text-generation-webui) is a front end that uses Gradio to serve a simple web UI for interacting with the Open Source model. In this guide, we will show you how to run an LLM using Oobabooga on Vast.

## 1) Setup your Vast account

The first thing to do if you are new to Vast is to create an account and verify your email address. Then head to the Billing tab and add credits. Vast uses Stripe to processes credit card payments and also accepts major cryptocurrencies through Coinbase or Crypto.com. \$20 should be enough to start. You can setup auto-top ups so that your credit card is charged when your balance is low.

## 2) Pick the Oobabooga template

Go to the [Templates tab](https://cloud.vast.ai/templates/) and search for "Oobabooga" among recommended templates and select it.

## 3) Allocate storage

The default storage amount will not be enough for downloading an LLM. Use the slider under the Instance Configuration to allocate more storage. 100GB should be enough.

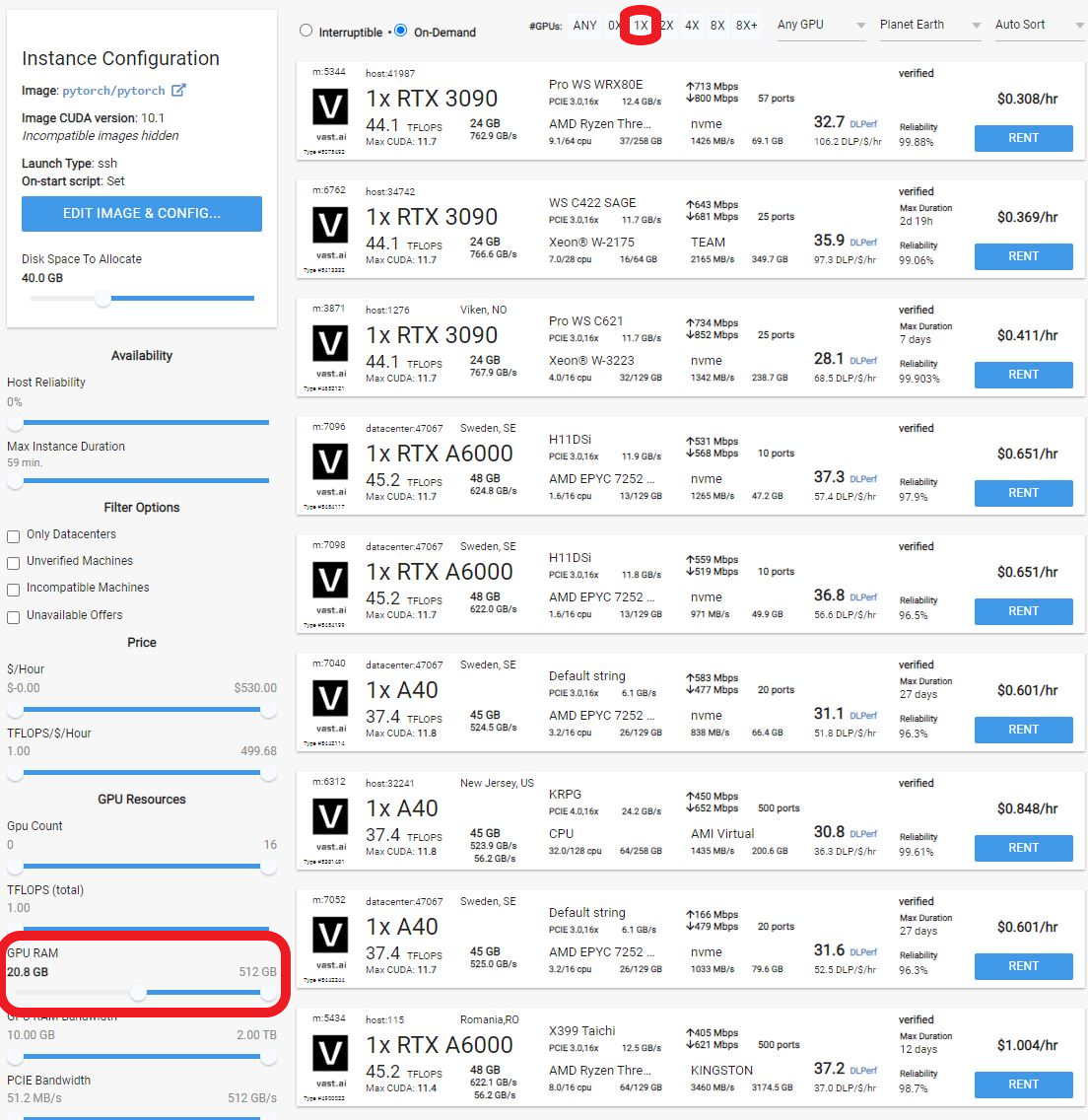

## 4) Pick a GPU offer

You will need to understand how much GPU RAM the LLM requires before you pick a GPU. For example, the [Falcon 40B Instruct](https://huggingface.co/tiiuae/falcon-40b-instruct) model requires 85-100 GB of GPU RAM. Falcon 7B only requires 16GB. Other models do not have great documentation on how much GPU RAM they require. If the instance doesn't have enough GPU RAM, there will be an error when trying to load the model. You can use multiple GPUs in a single instance and add their GPU RAM together.

For this guide, we will load the Falcon 40B Instruct model on a 2X A6000 instance, which has 96GB of GPU RAM in total.

## 4) Pick a GPU offer

You will need to understand how much GPU RAM the LLM requires before you pick a GPU. For example, the [Falcon 40B Instruct](https://huggingface.co/tiiuae/falcon-40b-instruct) model requires 85-100 GB of GPU RAM. Falcon 7B only requires 16GB. Other models do not have great documentation on how much GPU RAM they require. If the instance doesn't have enough GPU RAM, there will be an error when trying to load the model. You can use multiple GPUs in a single instance and add their GPU RAM together.

For this guide, we will load the Falcon 40B Instruct model on a 2X A6000 instance, which has 96GB of GPU RAM in total.

Click on the RENT button to start the instance which will download the docker container and boot up.

Once the instance boots up, the Open button will open port 7860 in a new browser window. This is the Oobabooga web interface.

The web gui can take an additional 1-2 minutes to load. If the button is stuck on "Connecting" for more than 10 minutes, then something has gone wrong. You can check the log for an error and/or contact us on website chat support for 24/7 help.

## 6) Download the LLM

Click on the Model tab in the interface. Enter the Hugging Face username/model path, for instance: tiiuae/falcon-40b-instruct. To specify a branch, add it at the end after a ":" character like this: tiiuae/falcon-40b-instruct

The download will take 15-20 minutes depending on the machine's internet connection.

Click on the RENT button to start the instance which will download the docker container and boot up.

Once the instance boots up, the Open button will open port 7860 in a new browser window. This is the Oobabooga web interface.

The web gui can take an additional 1-2 minutes to load. If the button is stuck on "Connecting" for more than 10 minutes, then something has gone wrong. You can check the log for an error and/or contact us on website chat support for 24/7 help.

## 6) Download the LLM

Click on the Model tab in the interface. Enter the Hugging Face username/model path, for instance: tiiuae/falcon-40b-instruct. To specify a branch, add it at the end after a ":" character like this: tiiuae/falcon-40b-instruct

The download will take 15-20 minutes depending on the machine's internet connection.

To check the progress of the download, you can click on the log button on the Vast instance card on [cloud.vast.ai/instances/](https://cloud.vast.ai/instances/) which will show you the download speed for each of the LLM file segments.

If you are using multiple GPUs such as the 2X A6000 selected in this guide, you will need to move the memory slider all the way over for all the GPUs. You may also have to select the "trust-remote-code" option if you get that error. Once those items are fixed, you can reload the model.

To check the progress of the download, you can click on the log button on the Vast instance card on [cloud.vast.ai/instances/](https://cloud.vast.ai/instances/) which will show you the download speed for each of the LLM file segments.

If you are using multiple GPUs such as the 2X A6000 selected in this guide, you will need to move the memory slider all the way over for all the GPUs. You may also have to select the "trust-remote-code" option if you get that error. Once those items are fixed, you can reload the model.

Any errrors loading the model will appear under the download button.

## 8) Start chatting!

Navigate to the Text generation tab to start chatting with the model. This is the most basic way to use Oobabooga, there are many other settings and things you can do with the interface.

## 9) Done? Destroy the instance

If you STOP the instance using the stop button, you will no longer pay the hourly GPU charges. **However you will still incur storage charges** because the data is still stored on the host machine. When you hit the START button to restart the instance, you are also not guaranteed that you can rent the GPU as someone else might have rented it while it was stopped.

To incur no other charges you have to DESTROY the instance using the trash can icon. **We recommend you destroy instances** so as not to incur storage charges while you are not using the system.

---

## Worker List

**URL:** llms-txt#worker-list

Source: https://docs.vast.ai/documentation/serverless/worker-list

Learn how to use the /get_endpoint_workers/ and /get_autogroup_workers/ endpoints to retrieve a list of GPU instances under an Endpoint and Worker Group. Understand the inputs, outputs, and examples for using the endpoints.

The `/get_endpoint_workers/` and `/get_autogroup_workers/` endpoints return a list of GPU instances under an Endpoint and \{\{Worker\_Group}}, respectively.

---

## Access Tokens

**URL:** llms-txt#access-tokens

CF_TUNNEL_TOKEN="" # Cloudflare Zero Trust token

CIVITAI_TOKEN="" # Access gated Civitai models

HF_TOKEN="" # Access gated HuggingFace models

---

## Video Generation Guide: Using ComfyUI on Vast.ai

**URL:** llms-txt#video-generation-guide:-using-comfyui-on-vast.ai

**Contents:**

- Prerequisites

- Setting Up Your Instance

- 1. Select the Right Template

- 2. **Edit your Template Configuration**

This guide will walk you through setting up and using ComfyUI for video generation on Vast.ai. ComfyUI provides a powerful node-based interface for creating advanced stable diffusion pipelines, making it ideal for video generation workflows.

* A Vast.ai account

* Basic familiarity with image or video generation models

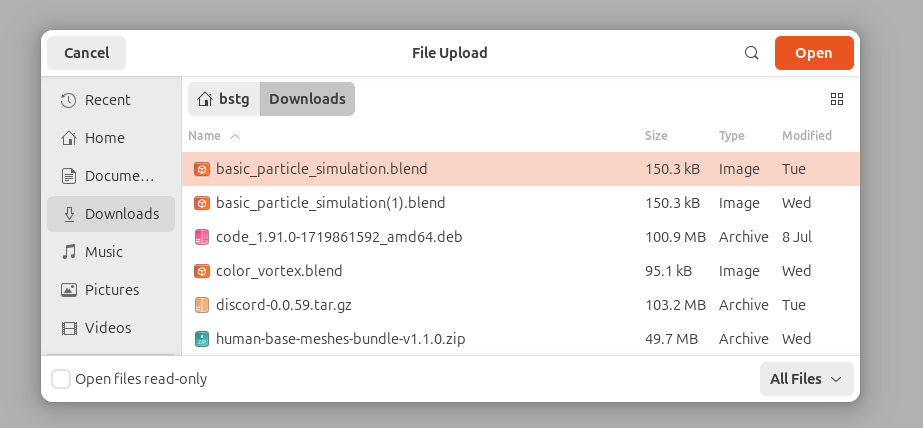

* [(Optional) Read Jupyter guide](/documentation/instances/jupyter)

* [(Optional) SSH client installed on your local machine and SSH public key added in Account tab at cloud.vast.ai](/documentation/instances/sshscp)

## Setting Up Your Instance

### 1. Select the Right Template

Navigate to the Templates tab to view available templates. For video generation, we recommend searching for "ComfyUI" among the recommended templates. [The ComfyUI template](https://cloud.vast.ai/?ref_id=62897\&creator_id=62897\&name=ComfyUI) provides a powerful and modular stable diffusion GUI for designing and executing advanced pipelines using a graph/nodes/flowchart based interface.

**Template Features:**

* Access through both Jupyter and SSH

* Instance Portal

* Token-based authentication enabled by default

* Built-in provisioning script for models and custom nodes

### 2. **Edit your Template Configuration**

**Add/update these environment variables as needed:**

```bash Bash theme={null}

---

## show ssh-keys

**URL:** llms-txt#show-ssh-keys

Source: https://docs.vast.ai/api-reference/instances/show-ssh-keys

api-reference/openapi.json get /api/v0/instances/{instance_id}/ssh/

Retrieves the SSH keys associated with a specific instance.

CLI Usage: `vastai show ssh-keys `

---

## get endpoint workers

**URL:** llms-txt#get-endpoint-workers

Source: https://docs.vast.ai/api-reference/serverless/get-endpoint-workers

api-reference/openapi.json post /get_endpoint_workers/

Retrieves the current list and status of workers for a specific endpoint.

Useful for monitoring, debugging connectivity issues, and understanding resource usage.

CLI Usage: `vastai get endpoint workers `

---

## destroy team

**URL:** llms-txt#destroy-team

Source: https://docs.vast.ai/api-reference/team/destroy-team

api-reference/openapi.json delete /api/v0/team/

Deletes a team and all associated data including API keys, rights, invitations, memberships and metadata. The team owner's master API key is converted to a normal client key.

CLI Usage: `vastai destroy team`

---

## vLLM (LLM inference and serving)

**URL:** llms-txt#vllm-(llm-inference-and-serving)

**Contents:**

- Set Up Your Account

- Configure the vLLM Template

- Launch Your Instance

- vLLM API Usage

- Authentication Token

- Sample Curl Command

- vLLM with Python

- Further Reading

Source: https://docs.vast.ai/vllm-llm-inference-and-serving

Below is a guide for runing the [vLLM template](https://cloud.vast.ai/?creator_id=62897\&name=vLLM) on Vast. The template contains everything you need to get started, so you will only need to specify the model you want to serve and the corresponding vLLM configuration.

For simplicity, we have set the default template model as [DeepSeek-R1-Distill-Llama-8B](https://huggingface.co/deepseek-ai/DeepSeek-R1-Distill-Llama-8B) with a limited context window because it can run on a single GPU with only 21GB VRAM, but vLLM can scale easily over multiple GPUs to handle much larger models.

## Set Up Your Account

1. **Setup your Vast account and add credit:** Review the [quickstart guide](/documentation/get-started/quickstart) to get familar with the service if you do not have an account with credits loaded.

## Configure the vLLM Template

vLLM serve is launched automatically by the template and it will use the configuration defined in the environment variables `VLLM_MODEL` and `VLLM_ARGS`. Here's how you can set it up

1. Vist the [templates](https://cloud.vast.ai/templates/) page and find the recommended vLLM template.

2. Click the pencil button to open up the template editor.

3. If you would like to run a model other than the default, edit the `VLLM_MODEL`environment variable. The default value is `deepseek-ai/DeepSeek-R1-Distill-Llama-8B` which is a HuggingFace repository.

4. You can also set the arguments to pass to `vllm serve` by modifying the `VLLM_ARGS` environment variable. vLLM is highly configurable so it's a good idea to check the official documentation before changing anything here. All available startup arguments are listed in the [official vLLM documentation](https://docs.vllm.ai/en/latest/serving/engine_args.html).

5. Save the template. You will be able to find the version you have just modified in the templates page in the 'My Templates' section.

## Launch Your Instance

1. **Select the template** you just saved from the 'My Templates' section of the templates page.

2. Click the **Play icon** on this template to be taken to view the available offers.

3. Use the search filters to select a suitable GPU, ensuring that you have **sufficient VRAM** to load all of the model's layers to GPU.

4. From the search menu, ensure you have **sufficient disk space** for the model you plan to run. The disk slider is located under the template icon on the left hand column. Large models (e.g., 70B parameters) can require dozens of gigabytes of storage. For Deep Seek R1 8B, make sure to allocate over 17Gb of disk space using the slider.

5. Click **Rent** on a suitable instance and wait for it to load

Once the instance has loaded you'll be able to click the Open button to access the instance portal where you'll see links to the interactive vLLM API documentation and the Ray control panel.

As vLLM must download your model upon first run it may take some time before the API is available. You can follow the startup progress in the instance logs.

The vLLM API can be accessed programmatically at:

### Authentication Token

* When making requests, you must include an **Authorization** header with the token value of OPEN\_BUTTON\_TOKEN.

### Sample Curl Command

* -k: Allows curl to perform insecure SSL connections and transfers as Vast.ai uses a self-signed certificate.

* Replace **INSTANCE\_IP** and **EXTERNAL\_PORT** with the externally mapped port for 8000 from the IP button on the instance.

* Update the Authorization header value to match your **OPEN\_BUTTON\_TOKEN**. You can get that from any of the links in the Instance Portal or from the Open button on the instance card.

* Modify the prompt, model, and other fields (max\_tokens, temperature, etc.) as needed.

Although the instance starts the vllm serve function to provide an inference API, the template has been configured with Jupyter and SSH access so you can also interact with vLLM in code from your instance. To do this simply include the vllm modules at the top of your Python script:

Please see the template Readme file on our recommended vLLM template for advanced template configuration and other methods of connecting to and interacting with your instance.

**Examples:**

Example 1 (unknown):

```unknown

### Authentication Token

* When making requests, you must include an **Authorization** header with the token value of OPEN\_BUTTON\_TOKEN.

### Sample Curl Command

```

Example 2 (unknown):

```unknown

* -k: Allows curl to perform insecure SSL connections and transfers as Vast.ai uses a self-signed certificate.

* Replace **INSTANCE\_IP** and **EXTERNAL\_PORT** with the externally mapped port for 8000 from the IP button on the instance.

* Update the Authorization header value to match your **OPEN\_BUTTON\_TOKEN**. You can get that from any of the links in the Instance Portal or from the Open button on the instance card.

* Modify the prompt, model, and other fields (max\_tokens, temperature, etc.) as needed.

## vLLM with Python

Although the instance starts the vllm serve function to provide an inference API, the template has been configured with Jupyter and SSH access so you can also interact with vLLM in code from your instance. To do this simply include the vllm modules at the top of your Python script:

```

---

## Rental Types FAQ

**URL:** llms-txt#rental-types-faq

**Contents:**

- Rental Type Overview

- On-Demand (High Priority)

- Interruptible (Low Priority)

- How do interruptible instances compare to AWS Spot?

- What happens when my interruptible instance loses the bid?

- DLPerf Scoring

- What is DLPerf?

- Is DLPerf accurate for my workload?

Source: https://docs.vast.ai/documentation/reference/faq/rental-types

Understanding on-demand vs interruptible instances

<script

type="application/ld+json"

dangerouslySetInnerHTML={{

__html: JSON.stringify({

"@context": "https://schema.org",

"@type": "FAQPage",

"mainEntity": [

{

"@type": "Question",

"name": "What are the rental types available?",

"acceptedAnswer": {

"@type": "Answer",

"text": "We offer two rental types: On-Demand (High Priority) with fixed price set by the host, runs as long as you want, cannot be interrupted, more expensive but reliable. Interruptible (Low Priority) where you set a bid price, can be stopped by higher bids, saves 50-80% on costs, good for fault-tolerant workloads."

}

},

{

"@type": "Question",

"name": "How do interruptible instances compare to AWS Spot?",

"acceptedAnswer": {

"@type": "Answer",

"text": "Similarities: Both can be interrupted and offer significant savings. Differences: Vast.ai uses direct bidding (you control your bid price) while AWS uses market pricing. No 24-hour limit like GCE preemptible instances. Vast.ai instances can run indefinitely if not outbid."

}

},

{

"@type": "Question",

"name": "What happens when my interruptible instance loses the bid?",

"acceptedAnswer": {

"@type": "Answer",

"text": "Your instance is stopped (killing running processes). Important considerations: Save work frequently to disk, use cloud storage for backups, instance may wait long to resume, implement checkpointing for long jobs. Always design your workload to handle interruptions gracefully."

}

},

{

"@type": "Question",

"name": "What is DLPerf?",

"acceptedAnswer": {

"@type": "Answer",

"text": "DLPerf (Deep Learning Performance) is our scoring function that estimates performance for typical deep learning tasks. It predicts iterations/second for common tasks like training ResNet50 CNNs. Example scores: V100: ~21 DLPerf, 2080 Ti: ~14 DLPerf, 1080 Ti: ~10 DLPerf. A V100 (21) is roughly 2x faster than a 1080 Ti (10) for typical deep learning."

}

},

{

"@type": "Question",

"name": "Is DLPerf accurate for my workload?",

"acceptedAnswer": {

"@type": "Answer",

"text": "DLPerf is optimized for common deep learning tasks like CNN training (ResNet, VGG, etc.), Transformer models, and standard computer vision. It's less accurate for unusual compute patterns and not optimized for non-ML workloads. For specialized workloads, benchmark on different GPUs yourself. While not perfect, DLPerf is more useful than raw TFLOPS for most ML tasks."

}

}

]

})

}}

/>

## Rental Type Overview

We currently offer two rental types:

### On-Demand (High Priority)

* Fixed price set by the host

* Runs as long as you want

* Cannot be interrupted

* More expensive but reliable

### Interruptible (Low Priority)

* You set a bid price

* Can be stopped by higher bids

* Saves 50-80% on costs

* Good for fault-tolerant workloads

## How do interruptible instances compare to AWS Spot?

* Both can be interrupted

* Both offer significant savings

* Vast.ai uses direct bidding (you control your bid price)

* AWS uses market pricing

* No 24-hour limit like GCE preemptible instances

* Vast.ai instances can run indefinitely if not outbid

## What happens when my interruptible instance loses the bid?

Your instance is stopped (killing running processes). Important considerations:

* **Save work frequently** to disk

* **Use cloud storage** for backups

* **Instance may wait long** to resume

* **Implement checkpointing** for long jobs

When using interruptible instances, always design your workload to handle interruptions gracefully.

DLPerf (Deep Learning Performance) is our scoring function that estimates performance for typical deep learning tasks. It predicts iterations/second for common tasks like training ResNet50 CNNs.

* V100: \~21 DLPerf

* 2080 Ti: \~14 DLPerf

* 1080 Ti: \~10 DLPerf

A V100 (21) is roughly 2x faster than a 1080 Ti (10) for typical deep learning.

### Is DLPerf accurate for my workload?

DLPerf is optimized for common deep learning tasks:

* ✅ CNN training (ResNet, VGG, etc.)

* ✅ Transformer models

* ✅ Standard computer vision

* ⚠️ Less accurate for unusual compute patterns

* ⚠️ Not optimized for non-ML workloads

For specialized workloads, benchmark on different GPUs yourself. While not perfect, DLPerf is more useful than raw TFLOPS for most ML tasks.

---

## Teams Overview

**URL:** llms-txt#teams-overview

**Contents:**

- Introduction

- Key Features:

- Getting Started with Teams

- Creating Multiple Teams

- Conclusion

Source: https://docs.vast.ai/documentation/teams/teams-overview

<script

type="application/ld+json"

dangerouslySetInnerHTML={{

__html: JSON.stringify({

"@context": "https://schema.org",

"@type": "TechArticle",

"headline": "Vast.ai Teams Overview",

"description": "An introduction to Vast.ai's Teams feature for collaborative GPU computing environments, including key features like resource management, consolidated billing, and access controls.",

"author": {

"@type": "Organization",

"name": "Vast.ai"

},

"datePublished": "2025-01-13",

"dateModified": "2025-04-04",

"articleSection": "Teams Documentation",

"keywords": ["teams", "collaboration", "GPU computing", "resource management", "billing", "access controls", "vast.ai"]

})

}}

/>

Vast.ai's Teams feature extends our powerful GPU compute services to collaborative environments. It allows multiple users to work together seamlessly in a shared space, managing serverless workers for AI Inference and GPU computing tasks collectively.

* **Collaborative Environment**: Enable teams to work together in a shared space, managing resources and tasks collectively.

* **Resource Allocation & Management**: Team managers can manage access among team members, ensuring efficient use of GPU workers. (In the future, resource allocation will also be in play)

* **Consolidated Billing**: Simplifies the financial management by consolidating usage and billing across the team.

* **Performance Metrics & Access Controls**: Each team member can access shared metrics and logs, with custom access controls set by team owners.

## Getting Started with Teams

Ready to create your first team? Check out our [Team Creation guide](https://docs.vast.ai/teams-quickstart) for a step-by-step tutorial on creating a team, inviting members, and assigning roles.

## Creating Multiple Teams

Teams are created as separate accounts, allowing multiple teams to be created by a single user. Note: This feature is unavailble for Legacy Teams (accounts that were converted into teams directly). Each team operates independently, with its own members, roles, and permissions. Users can seamlessly switch between their personal and team accounts using the Context Switcher.

* **Independent Team Management:** Each team has its own and members and roles.

* **Shared Resources:** Each team shares resources such as instances, templates, machines, and certain settings with all team members.

* **Separate Billing & Credits:** Teams maintain their own separate balance/credit, billing information, and payment history, separate from personal accounts.

* **Easy Switching:** Users can navigate between personal and team accounts without affecting their workflow.

The Teams feature at Vast.ai is designed to bring a new level of collaboration and efficiency to your GPU computing tasks. Additionally, by bringing together the power of our Autoscaling system with these collaborative tools, your team will be well-equipped to tackle all kinds of complex, dynamic workloads effectively.

---

## Test with start (no-op if already running)

**URL:** llms-txt#test-with-start-(no-op-if-already-running)

vastai start instance $CONTAINER_ID

---

## show workergroup

**URL:** llms-txt#show-workergroup

Source: https://docs.vast.ai/api-reference/serverless/show-workergroup

api-reference/openapi.json get /api/v0/workergroups/

Retrieves the list of workergroups associated with the authenticated user.

CLI Usage: `vastai show workergroups`

---

## Overview & Prerequisites

**URL:** llms-txt#overview-&-prerequisites

Vast.ai provides pre-made serverless templates ([vLLM](/documentation/serverless/vllm), [ComfyUI](/documentation/serverless/comfy-ui)) for popular use cases, and can be used with minimal setup effort. In this guide, we will setup a serverless engine to handle inference requests to a model using vLLM, namely Qwen3-8B , using the pre-made Vast.ai vLLM serverless template. This prebuilt template bundles vLLM with scaling logic so you don’t have to write custom orchestration code. By the end of this guide, you will be able to host the Qwen3-8B model with dynamic scaling to meet your demand.

This guide assumes knowledge of the Vast CLI. An introduction for it can be found [here](/cli/get-started).

Before we start, there are a few things you will need:

1. A Vast.ai account with credits

2. A Vast.ai [API Key](/documentation/reference/keys)

3. A HuggingFace account with a [read-access API token](https://huggingface.co/docs/hub/en/security-tokens)

---

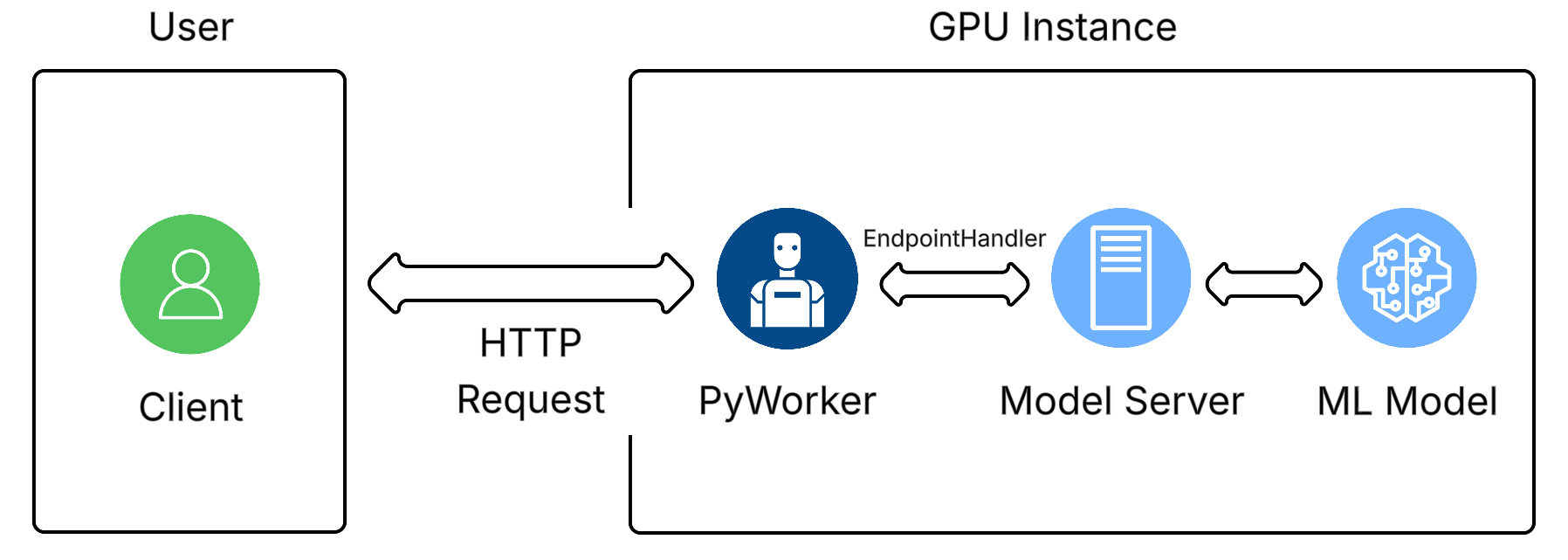

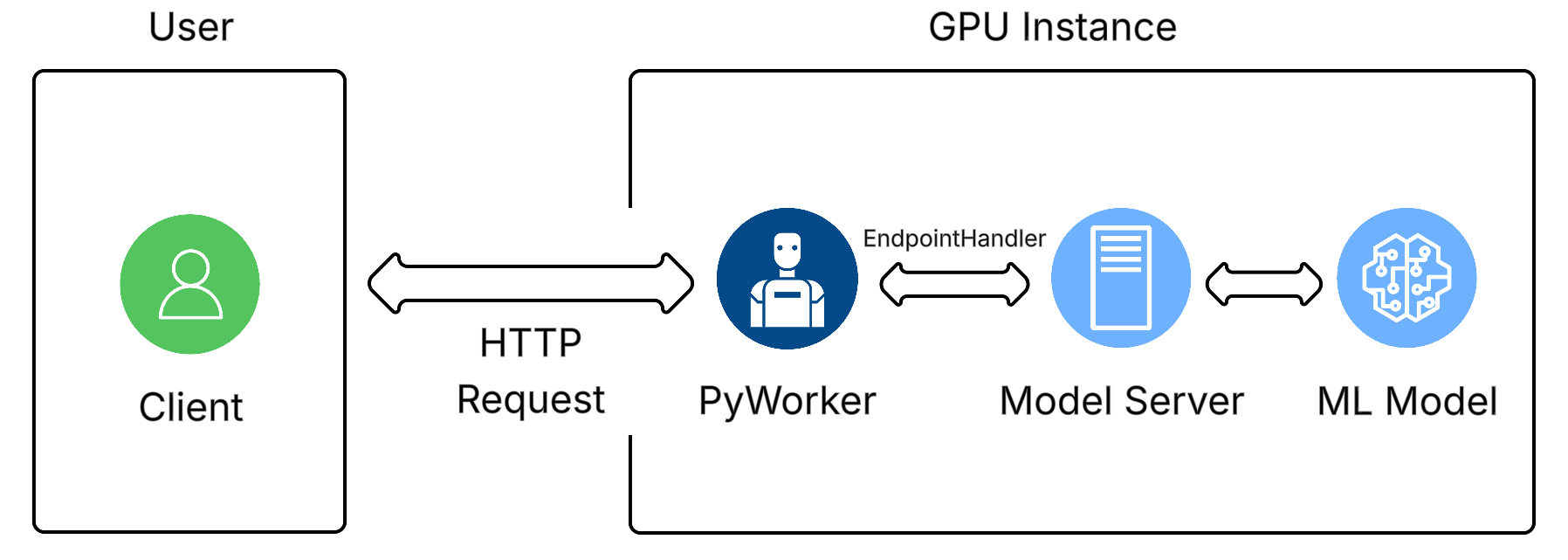

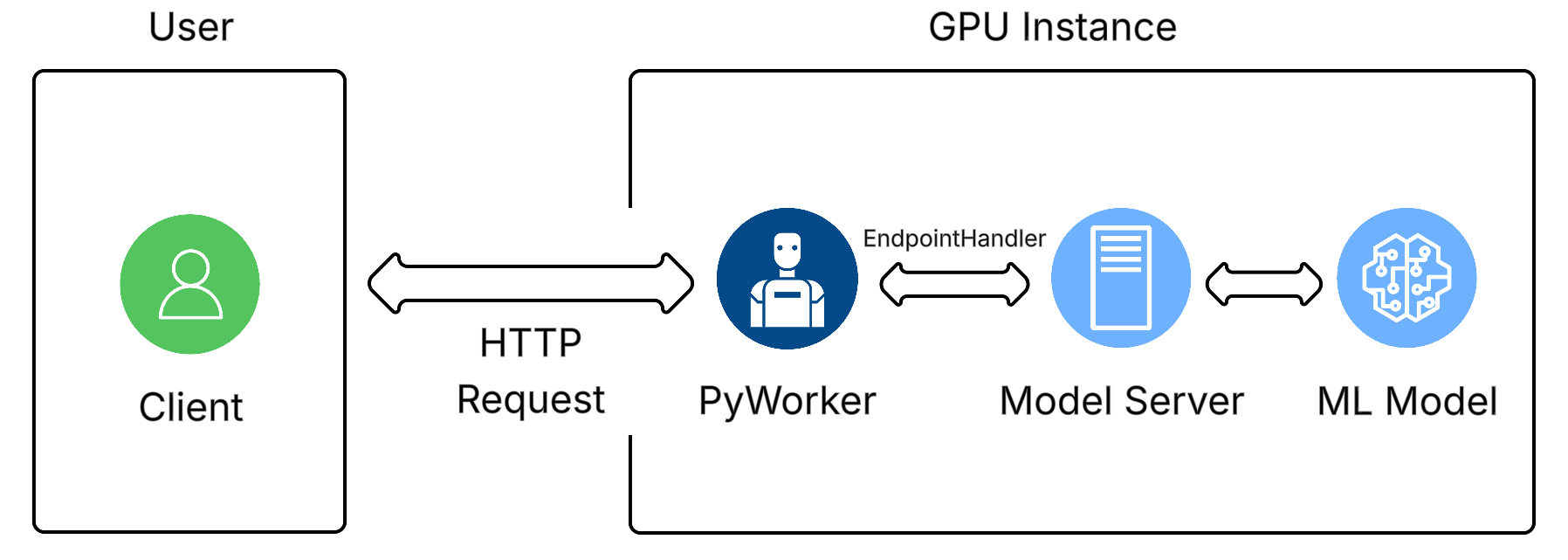

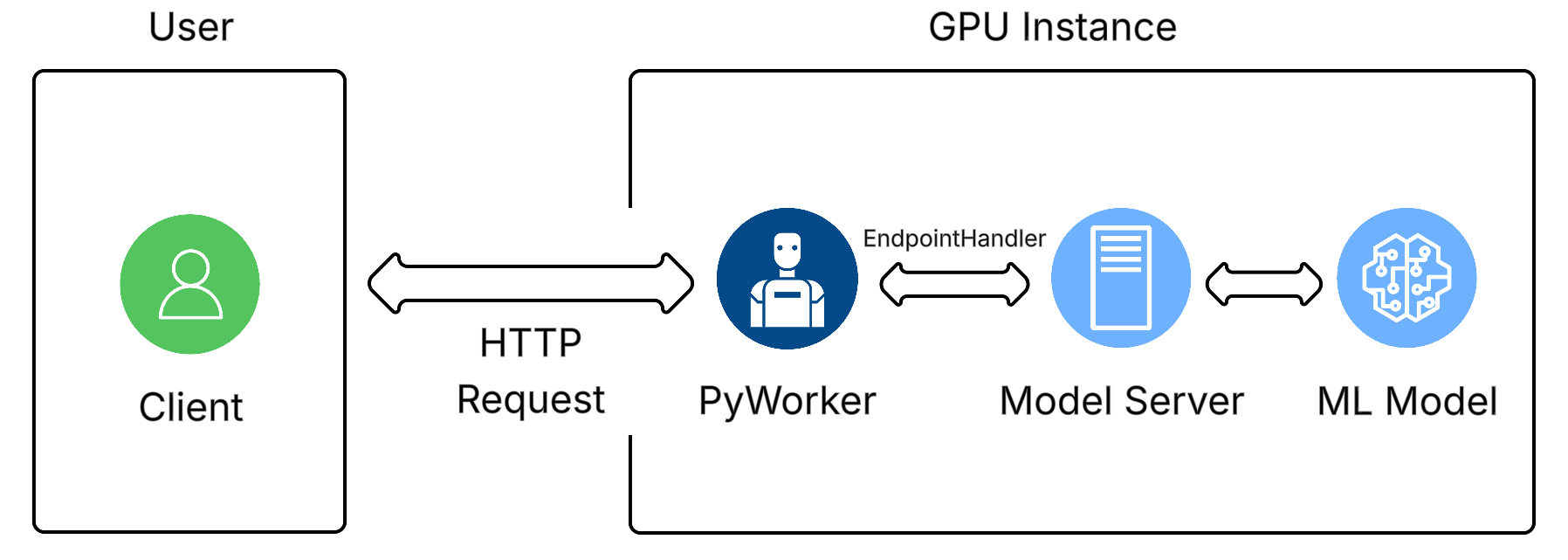

## This is the backend instance of pyworker. Only one must be made which uses EndpointHandlers to process

**URL:** llms-txt#this-is-the-backend-instance-of-pyworker.-only-one-must-be-made-which-uses-endpointhandlers-to-process

---

## create template

**URL:** llms-txt#create-template

Source: https://docs.vast.ai/api-reference/templates/create-template

api-reference/openapi.json post /api/v0/template/

Creates a new template for launching instances. If an identical template already exists, returns the existing template instead of creating a duplicate.

CLI Usage: `vastai create template [options]`

---

## This ensures files can be properly synced between instances

**URL:** llms-txt#this-ensures-files-can-be-properly-synced-between-instances

WORKDIR /opt/workspace-internal/

---

## Export variables with underscores

**URL:** llms-txt#export-variables-with-underscores

env | grep _ >> /etc/environment

---

## Instance Portal

**URL:** llms-txt#instance-portal

**Contents:**

- What is the Instance Portal?

- Loading Process

- Landing Page

- Tunnels Page

- Instance Logs Page

- Tools & Help Page

- Configuration

- In Place Configuration

- Disable Default Applictions

- Named Tunnels

Source: https://docs.vast.ai/documentation/instances/connect/instance-portal

## What is the Instance Portal?

The Instance Portal is the first application you will see after clicking the 'Open' button to access an instance that has been loaded with a [Vast.ai Docker image](https://github.com/vast-ai/base-image/). Many of our recommended templates include the Instance Portal.

Any errrors loading the model will appear under the download button.

## 8) Start chatting!

Navigate to the Text generation tab to start chatting with the model. This is the most basic way to use Oobabooga, there are many other settings and things you can do with the interface.

## 9) Done? Destroy the instance

If you STOP the instance using the stop button, you will no longer pay the hourly GPU charges. **However you will still incur storage charges** because the data is still stored on the host machine. When you hit the START button to restart the instance, you are also not guaranteed that you can rent the GPU as someone else might have rented it while it was stopped.

To incur no other charges you have to DESTROY the instance using the trash can icon. **We recommend you destroy instances** so as not to incur storage charges while you are not using the system.

---

## Worker List

**URL:** llms-txt#worker-list

Source: https://docs.vast.ai/documentation/serverless/worker-list

Learn how to use the /get_endpoint_workers/ and /get_autogroup_workers/ endpoints to retrieve a list of GPU instances under an Endpoint and Worker Group. Understand the inputs, outputs, and examples for using the endpoints.