5.4 MiB

Apify Documentation

The entire content of Apify documentation is available in a single Markdown file at https://docs.apify.com/llms-full.txt

Apify API

- Apify API

- Apify API: The Apify API (version 2) provides programmatic access to the Apify platform.

- Abort build: :::caution deprecated This endpoint has been deprecated and may be replaced or removed in future versions of the API.

- Get default build: Clients Python JavaScriptGet the default build for an Actor.

- Get build: :::caution deprecated API endpoints related to build of the Actor were moved under new namespace

actor-builds. - Get list of builds: Clients Python JavaScriptGets the list of builds of a specific Actor.

- Build Actor: Clients Python JavaScriptBuilds an Actor.

- Delete Actor: Clients JavaScriptDeletes an Actor.

- Get Actor: Clients Python JavaScriptGets an object that contains all the details about a specific Actor.

- Get OpenAPI definition: Get the OpenAPI definition for Actor builds.

- Update Actor: Clients Python JavaScriptUpdates settings of an Actor using values specified by an Actor object passed as JSON in the POST payload.

- Abort run: :::caution deprecated This endpoint has been deprecated and may be replaced or removed in future versions of the API.

- Get run: :::caution deprecated This endpoint has been deprecated and may be replaced or removed in future versions of the API.

- Metamorph run: :::caution deprecated This endpoint has been deprecated and may be replaced or removed in future versions of the API.

- Resurrect run: [DEPRECATED] API endpoints related to run of the Actor were moved under new namespace

actor-runs.Resurrects a finished Actor run and returns an object that contains all the details about the resurrected run. - Without input: Runs a specific Actor and returns its output.

- Run Actor synchronously without input and get dataset items: Runs a specific Actor and returns its dataset items.

- Run Actor synchronously with input and get dataset items: Runs a specific Actor and returns its dataset items.

- Run Actor synchronously with input and return output: Runs a specific Actor and returns its output.

- Get list of runs: Clients Python JavaScriptGets the list of runs of a specific Actor.

- Get last run: This is not a single endpoint, but an entire group of endpoints that lets you to retrieve and manage the last run of given Actor or any of its default storages.

- Run Actor: Clients Python JavaScriptRuns an Actor and immediately returns without waiting for the run to finish.

- Delete version: Deletes a specific version of Actor's source code.

- Delete environment variable: Deletes a specific environment variable.

- Get environment variable: Clients PythonGets a EnvVar object that contains all the details about a specific environment variable of an Actor.

- Update environment variable: Clients PythonUpdates Actor environment variable using values specified by a EnvVar object passed as JSON in the POST payload.

- Get list of environment variables: Clients PythonGets the list of environment variables for a specific version of an Actor.

- Create environment variable: Clients PythonCreates an environment variable of an Actor using values specified in a EnvVar object passed as JSON in the POST payload.

- Get version: Clients PythonGets a Version object that contains all the details about a specific version of an Actor.

- Update version: Clients PythonUpdates Actor version using values specified by a Version object passed as JSON in the POST payload.

- Get list of versions: Clients PythonGets the list of versions of a specific Actor.

- Create version: Clients PythonCreates a version of an Actor using values specified in a Version object passed as JSON in the POST payload.

- Get list of webhooks: Gets the list of webhooks of a specific Actor.

- Abort build: Clients Python JavaScriptAborts an Actor build and returns an object that contains all the details about the build.

- Delete build: Clients JavaScriptDelete the build.

- Get build: Clients Python JavaScriptGets an object that contains all the details about a specific build of an Actor.

- Get log: Check out Logs for full reference.

- Get OpenAPI definition: Clients Python JavaScriptGet the OpenAPI definition for Actor builds.

- Actor builds - Introduction: The API endpoints described in this section enable you to manage, and delete Apify Actor builds.

- Get user builds list: Gets a list of all builds for a user.

- Abort run: Clients Python JavaScriptAborts an Actor run and returns an object that contains all the details about the run.

- Delete run: Clients JavaScriptDelete the run.

- Get run: This is not a single endpoint, but an entire group of endpoints that lets you retrieve the run or any of its default storages.

- Metamorph run: Clients Python JavaScriptTransforms an Actor run into a run of another Actor with a new input.

- Update status message: You can set a single status message on your run that will be displayed in the Apify Console UI.

- Reboot run: Clients Python JavaScriptReboots an Actor run and returns an object that contains all the details about the rebooted run.

- Actor runs - Introduction: The API endpoints described in this section enable you to manage, and delete Apify Actor runs.

- Get user runs list: Gets a list of all runs for a user.

- Delete task: Clients JavaScriptDelete the task specified through the

actorTaskIdparameter. - Get task: Clients Python JavaScriptGet an object that contains all the details about a task.

- Get task input: Clients Python JavaScriptReturns the input of a given task.

- Update task input: Clients Python JavaScriptUpdates the input of a task using values specified by an object passed as JSON in the PUT payload.

- Update task: Clients Python JavaScriptUpdate settings of a task using values specified by an object passed as JSON in the POST payload.

- Run task synchronously: Run a specific task and return its output.

- Run task synchronously and get dataset items: Run a specific task and return its dataset items.

- Run task synchronously and get dataset items: Runs an Actor task and synchronously returns its dataset items.

- Run task synchronously: Runs an Actor task and synchronously returns its output.

- Get list of task runs: Get a list of runs of a specific task.

- Get last run: This is not a single endpoint, but an entire group of endpoints that lets you to retrieve and manage the last run of given actor task or any of its default storages.

- Run task: Clients Python JavaScriptRuns an Actor task and immediately returns without waiting for the run to finish.

- Get list of webhooks: Gets the list of webhooks of a specific Actor task.

- Actor tasks - Introduction: The API endpoints described in this section enable you to create, manage, delete, and run Apify Actor tasks.

- Get list of tasks: Clients Python JavaScriptGets the complete list of tasks that a user has created or used.

- Create task: Clients Python JavaScriptCreate a new task with settings specified by the object passed as JSON in the POST payload.

- Actors - Introduction: The API endpoints in this section allow you to manage Apify Actors.

- Actor builds - Introduction: The API endpoints in this section allow you to manage your Apify Actors builds.

- Actor runs - Introduction: The API endpoints in this section allow you to manage your Apify Actors runs.

- Actor versions - Introduction: The API endpoints in this section allow you to manage your Apify Actors versions.

- Webhook collection - Introduction: The API endpoint in this section allows you to get a list of webhooks of a specific Actor.

- Get list of Actors: Clients Python JavaScriptGets the list of all Actors that the user created or used.

- Create Actor: Clients Python JavaScriptCreates a new Actor with settings specified in an Actor object passed as JSON in the POST payload.

- Delete dataset: Clients JavaScriptDeletes a specific dataset.

- Get dataset: Clients Python JavaScriptReturns dataset object for given dataset ID.

- Get items: Clients Python JavaScriptReturns data stored in the dataset in a desired format.

- Store items: Clients Python JavaScriptAppends an item or an array of items to the end of the dataset.

- Update dataset: Clients Python JavaScriptUpdates a dataset's name using a value specified by a JSON object passed in the PUT payload.

- Get dataset statistics: Returns statistics for given dataset.

- Get list of datasets: Clients Python JavaScriptLists all of a user's datasets.

- Create dataset: Clients Python JavaScriptCreates a dataset and returns its object.

- Getting started with Apify API: The Apify API provides programmatic access to the Apify platform.

- Delete store: Clients JavaScriptDeletes a key-value store.

- Get store: Clients Python JavaScriptGets an object that contains all the details about a specific key-value store.

- Get list of keys: Clients Python JavaScriptReturns a list of objects describing keys of a given key-value store, as well as some information about the values (e.g.

- Update store: Clients Python JavaScriptUpdates a key-value store's name using a value specified by a JSON object passed in the PUT payload.

- Delete record: Clients Python JavaScriptRemoves a record specified by a key from the key-value store.

- Get record: Clients Python JavaScriptGets a value stored in the key-value store under a specific key.

- Check if a record exists: Clients Python JavaScriptCheck if a value is stored in the key-value store under a specific key.

- Store record: Clients Python JavaScriptStores a value under a specific key to the key-value store.

- Get list of key-value stores: Clients Python JavaScriptGets the list of key-value stores owned by the user.

- Create key-value store: Clients Python JavaScriptCreates a key-value store and returns its object.

- Get log: Clients Python JavaScriptRetrieves logs for a specific Actor build or run.

- Logs - Introduction: The API endpoints described in this section are used the download the logs generated by Actor builds and runs.

- Charge events in run: Clients Python JavaScriptCharge for events in the run of your pay per event Actor.

- Resurrect run: Clients Python JavaScriptResurrects a finished Actor run and returns an object that contains all the details about the resurrected run.

- Delete request queue: Clients JavaScriptDeletes given queue.

- Get request queue: Clients Python JavaScriptReturns queue object for given queue ID.

- Get head: Clients Python JavaScriptReturns given number of first requests from the queue.

- Get head and lock: Clients Python JavaScriptReturns the given number of first requests from the queue and locks them for the given time.

- Update request queue: Clients Python JavaScriptUpdates a request queue's name using a value specified by a JSON object passed in the PUT payload.

- Delete request: Clients JavaScriptDeletes given request from queue.

- Get request: Clients Python JavaScriptReturns request from queue.

- Delete request lock: Clients Python JavaScriptDeletes a request lock.

- Prolong request lock: Clients Python JavaScriptProlongs request lock.

- Update request: Clients Python JavaScriptUpdates a request in a queue.

- Delete requests: Clients Python JavaScriptBatch-deletes given requests from the queue.

- Add requests: Clients Python JavaScriptAdds requests to the queue in batch.

- List requests: Clients Python JavaScriptReturns a list of requests.

- Add request: Clients Python JavaScriptAdds request to the queue.

- Unlock requests: Clients Python JavaScriptUnlocks requests in the queue that are currently locked by the client.

- Get list of request queues: Clients Python JavaScriptLists all of a user's request queues.

- Create request queue: Clients Python JavaScriptCreates a request queue and returns its object.

- Delete schedule: Clients JavaScriptDeletes a schedule.

- Get schedule: Clients Python JavaScriptGets the schedule object with all details.

- Get schedule log: Clients Python JavaScriptGets the schedule log as a JSON array containing information about up to a 1000 invocations of the schedule.

- Update schedule: Clients Python JavaScriptUpdates a schedule using values specified by a schedule object passed as JSON in the POST payload.

- Schedules - Introduction: This section describes API endpoints for managing schedules.

- Get list of schedules: Clients Python JavaScriptGets the list of schedules that the user created.

- Create schedule: Clients Python JavaScriptCreates a new schedule with settings provided by the schedule object passed as JSON in the payload.

- Datasets - Introduction: This section describes API endpoints to manage Datasets.

- Key-value stores - Introduction: This section describes API endpoints to manage Key-value stores.

- Request queues - Introduction: This section describes API endpoints to create, manage, and delete request queues.

- Requests- Introduction: This section describes API endpoints to create, manage, and delete requests within request queues.

- Requests locks - Introduction: This section describes API endpoints to create, manage, and delete request locks within request queues.

- Store - Introduction: Apify Store is home to thousands of public Actors available to the Apify community.

- Get list of Actors in store: Gets the list of public Actors in Apify Store.

- Get public user data: Returns public information about a specific user account, similar to what can be seen on public profile pages (e.g.

- Users - Introduction: The API endpoints described in this section return information about user accounts.

- Get private user data: Returns information about the current user account, including both public and private information.

- Get limits: Returns a complete summary of your account's limits.

- Update limits: Updates the account's limits manageable on your account's Limits page.

- Get monthly usage: Returns a complete summary of your usage for the current usage cycle, an overall sum, as well as a daily breakdown of usage.

- Delete webhook: Clients JavaScriptDeletes a webhook.

- Get webhook dispatch: Clients Python JavaScriptGets webhook dispatch object with all details.

- Get list of webhook dispatches: Clients Python JavaScriptGets the list of webhook dispatches that the user have.

- Get webhook: Clients Python JavaScriptGets webhook object with all details.

- Update webhook: Clients Python JavaScriptUpdates a webhook using values specified by a webhook object passed as JSON in the POST payload.

- Test webhook: Clients Python JavaScriptTests a webhook.

- Get collection: Clients PythonGets a given webhook's list of dispatches.

- Get list of webhooks: Clients Python JavaScriptGets the list of webhooks that the user created.

- Create webhook: Clients Python JavaScriptCreates a new webhook with settings provided by the webhook object passed as JSON in the payload.

- Webhook dispatches - Introduction: This section describes API endpoints to get webhook dispatches.

- Webhooks - Introduction: This section describes API endpoints to manage webhooks.

open-source

sdk

search

Apify academy

- Web Scraping Academy: Learn everything about web scraping and automation with our free courses that will turn you into an expert scraper developer.

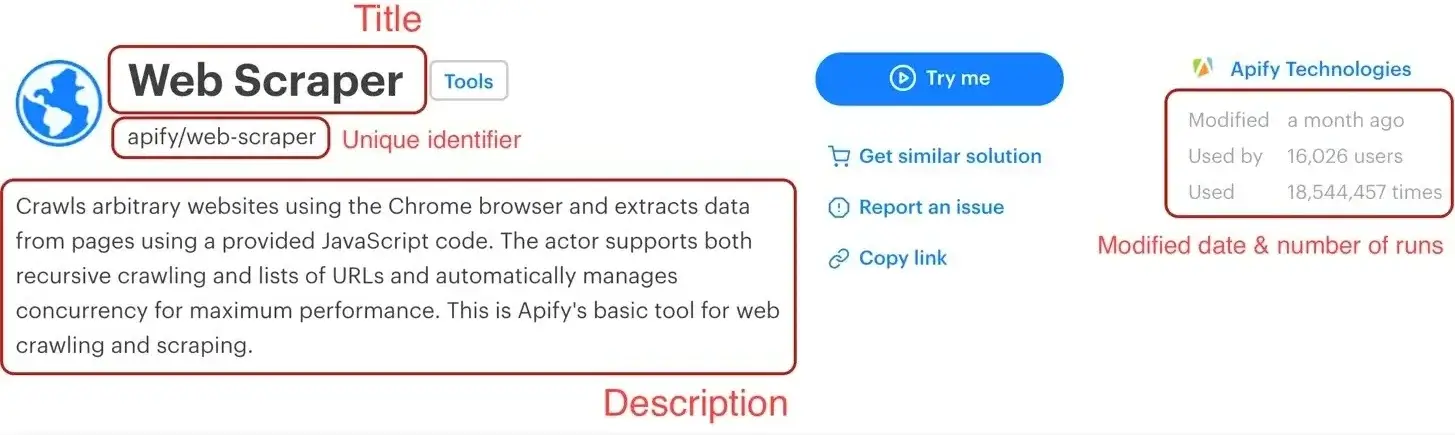

- Actor marketing playbook: Learn how to optimize and monetize your Actors on Apify Store by sharing them with other platform users. --- Apify Store is a marketplace featuring thousands of ready-made automation tools called Actors.

- Actor description & SEO description: Learn about Actor description and meta description.

- Actors and emojis: Using emojis in Actors is a science on its own.

- How to create an Actor README: Learn how to write a comprehensive README to help users better navigate, understand and run public Actors in Apify Store. --- ## What's a README in the Apify sense?

- Importance of Actor URL: **Actor URL (or technical name, as we call it), is the page URL of the Actor shown on the web.

- Name your Actor: **Apify's standards for Actor naming.

- Emails to Actor users: **Getting users is one thing, but keeping them is another.

- Handle Actor issues: **Once you publish your Actor in Apify Store, it opens the door to new users, feedback, and… issue reports.

- Your Apify Store bio: ## Your Apify Store bio and Store “README” To help our community showcase their talents and projects, we introduced public profile pages for developers.

- Actor bundles: Learn what an Actor bundle is, explore existing examples, and discover how to promote them. --- ## What is an Actor bundle?

- How to create a great input schema: Optimizing your input schema.

- Affiliates: The Apify Affiliate Program offers you a way to earn recurring commissions while helping others discover automation and web scraping solutions.

- Blogs and blog resources: **Blogs remain a powerful tool for promoting your Actors and establishing authority in the field.

- Marketing checklist: You're a developer, not a marketer.

- Parasite SEO: **Do you want to attract more users to your Actors?

- Product Hunt: Product Hunt is one of the best platforms for introducing new tools, especially in the tech community.

- SEO: SEO means optimizing your content to rank high for your target queries in search engines such as Google, Bing, etc.

- Social media: **Social media is a powerful way to connect with your Actor users and potential users.

- Video tutorials: **Videos and live streams are powerful tools for connecting with users and potential users, especially when promoting your Actors.

- Webinars: Webinars and live streams are a fantastic way to connect with your audience, showcase your Actor's capabilities, and gather feedback from users.

- How Actor monetization works: **You can turn your web scrapers into a source of income by publishing them on Apify Store.

- How Apify Store works: **Out of thousands of Actors on Apify Store marketplace, most of them were created by developers just like you.

- How to build Actors: At Apify, we try to make building web scraping and automation straightforward.

- Wrap open-source as an Actor: Apify is a cloud platform with a marketplace of 6,000+ web scraping and automation tools called Actors.

- Advanced web scraping: In the Web scraping basics for JavaScript devs course, we have learned the necessary basics required to create a scraper.

- Crawling sitemaps: In the previous lesson, we learned what is the utility (and dangers) of crawling sitemaps.

- Scraping websites with search: # Scraping websites with search In this lesson, we will start with a simpler example of scraping HTML based websites with limited pagination.

- Sitemaps vs search: The core crawling problem comes to down to ensuring that we reliably find all detail pages on the target website or inside its categories.

- Tips and tricks for robustness: **Learn how to make your automated processes more effective.

- AI agent tutorial: **In this section of the Apify Academy, we show you how to build an AI agent with the CrewAI Python framework.

- Anti-scraping protections: # Anti-scraping protections {#anti-scraping-protections} Understand the various anti-scraping measures different sites use to prevent bots from accessing them, and how to appear more human to fix these issues. --- If at any point in time you've strayed away from the Academy's demo content, and into the Wild West by writing some scrapers of your own, you may have been hit with anti-scraping measures.

- Anti-scraping mitigation: # Anti-scraping mitigation {#anti-scraping-mitigation} After learning about the various different anti-scraping techniques websites use, learn how to mitigate them with a few different techniques. --- In the techniques section of this course, you learned about multiple methods websites use to prevent bots from accessing their content.

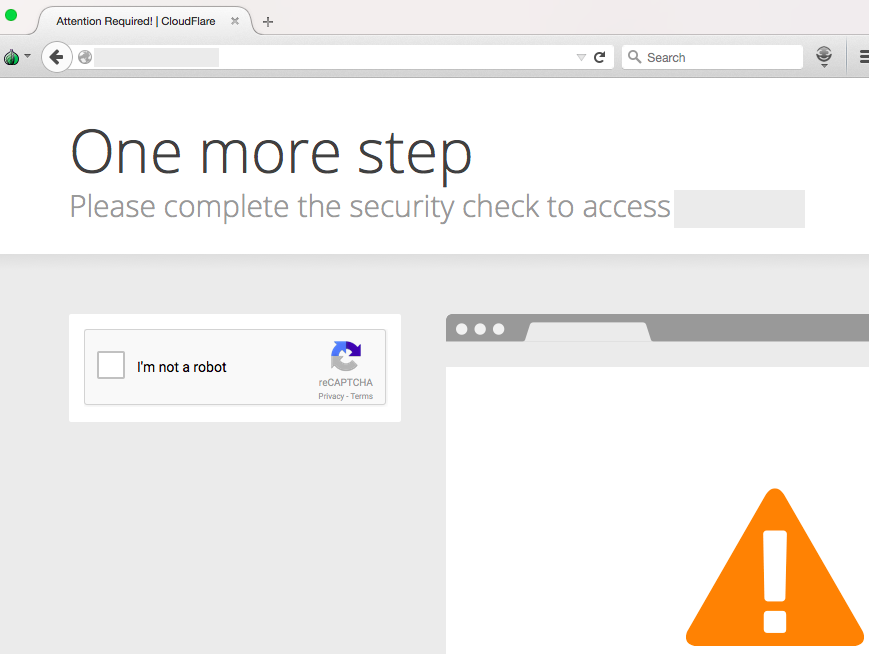

- Bypassing Cloudflare browser check: # Bypassing Cloudflare browser check {#cloudflare-challenge} Learn how to bypass Cloudflare browser challenge with Crawlee. --- If you find yourself stuck, there are a few strategies that you can employ.

- Generating fingerprints: # Generating fingerprints {#generating-fingerprints} Learn how to use two super handy npm libraries to generate fingerprints and inject them into a Playwright or Puppeteer page. --- In Crawlee, you can use FingerprintOptions on a crawler to automatically generate fingerprints.

- Proxies: # Proxies {#about-proxies} Learn all about proxies, how they work, and how they can be leveraged in a scraper to avoid blocking and other anti-scraping tactics. --- A proxy server provides a gateway between users and the internet, to be more specific in our case - between the crawler and the target website.

- Using proxies: # Using proxies {#using-proxies} Learn how to use and automagically rotate proxies in your scrapers by using Crawlee, and a bit about how to obtain pools of proxies. --- In the Web scraping basics for JavaScript devs course, we learned about the power of Crawlee, and how it can streamline the development process of web crawlers.

- Anti-scraping techniques: # Anti-scraping techniques {#anti-scraping-techniques} Understand the various common (and obscure) anti-scraping techniques used by websites to prevent bots from accessing their content. --- In this section, we'll be discussing some of the most common (as well as some obscure) anti-scraping techniques used by websites to detect and block/limit bots from accessing their content.

- Browser challenges: # Browser challenges {#fingerprinting} > Learn how to navigate browser challenges like Cloudflare's to effectively scrape data from protected websites.

- Captchas: # Captchas {#captchas} Learn about the reasons a bot might be presented a captcha, the best ways to avoid captchas in the first place, and how to programmatically solve them. --- In general, a website will present a user (or scraper) a captcha for 2 main reasons: 1.

- Fingerprinting: # Fingerprinting {#fingerprinting} Understand browser fingerprinting, an advanced technique used by browsers to track user data and even block bots from accessing them. --- Browser fingerprinting is a method that some websites use to collect information about a browser's type and version, as well as the operating system being used, any active plugins, the time zone and language of the machine, the screen resolution, and various other active settings.

- Firewalls: # Firewalls {#firewalls} Understand what a web-application firewall is, how they work, and the various common techniques for avoiding them altogether. --- A web-application firewall (or WAF) is a tool for website admins which allows them to set various access rules for their visitors.

- Geolocation: # Geolocation {#geolocation} Learn about the geolocation techniques to determine where requests are coming from, and a bit about how to avoid being blocked based on geolocation. --- Geolocation is yet another way websites can detect and block access or show limited data.

- Rate-limiting: # Rate-limiting {#rate-limiting} Learn about rate-limiting, a common tactic used by websites to avoid a large and non-human rate of requests coming from a single IP address. --- When crawling a website, a web scraping bot will typically send many more requests from a single IP address than a human user could generate over the same period.

- Using Apify API: # Using Apify API A collection of various tutorials explaining how to interact with the Apify platform programmatically using its API. --- This section explains how you can run Apify Actors using Apify's API, retrieve their results, and integrate them into your own product and workflows.

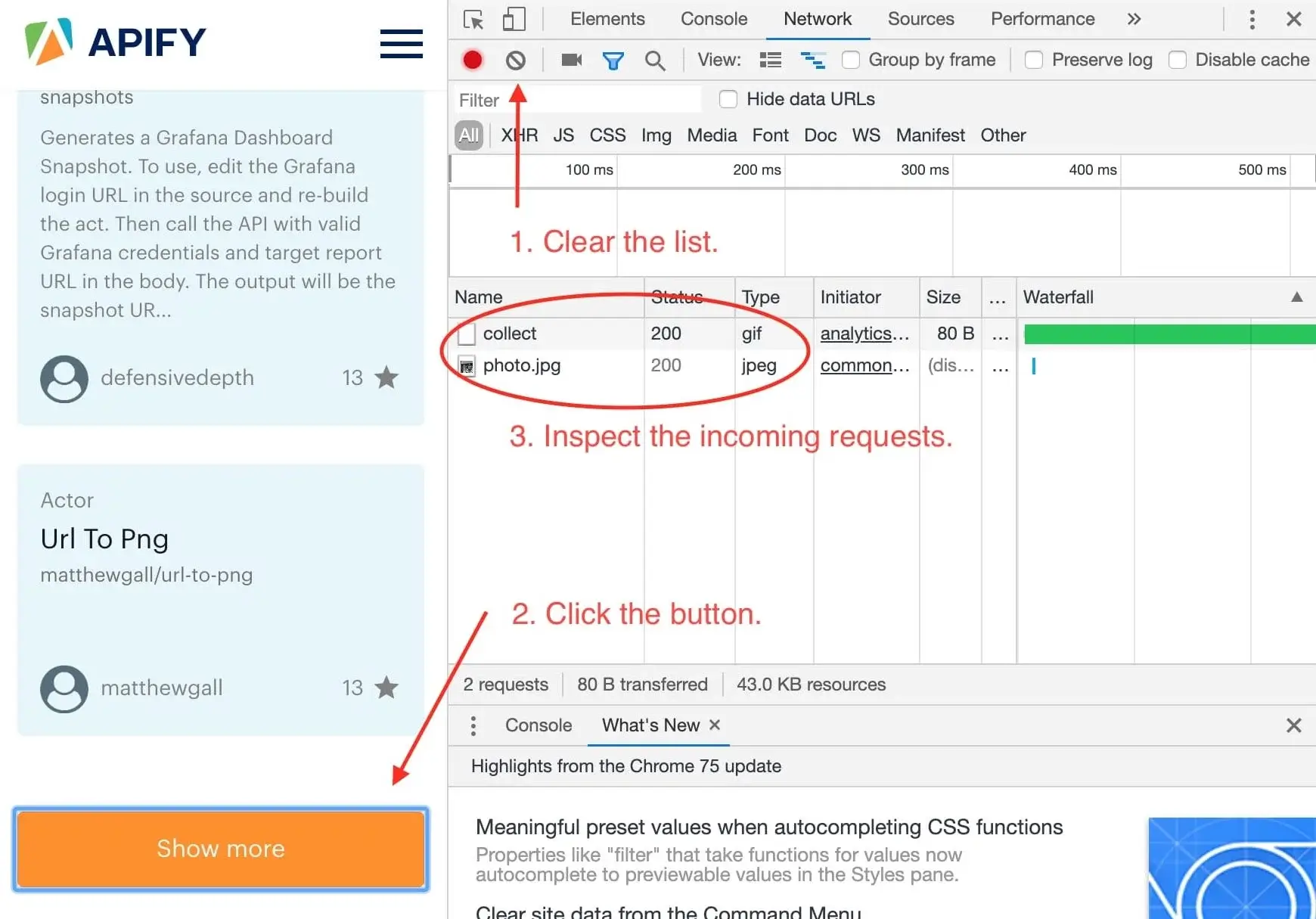

- API scraping: # API scraping Learn all about how the professionals scrape various types of APIs with various configurations, parameters, and requirements. --- API scraping is locating a website's API endpoints, and fetching the desired data directly from their API, as opposed to parsing the data from their rendered HTML pages.

- General API scraping: # General API scraping {#general-api-scraping} Learn the benefits and drawbacks of API scraping, how to locate an API, how to utilize its features, and how to work around common roadblocks. --- This section will teach you everything you should know about API scraping before moving into the next sections in the API Scraping module.

- Dealing with headers, cookies, and tokens: # Dealing with headers, cookies, and tokens {#challenges} Learn about how some APIs require certain cookies, headers, and/or tokens to be present in a request in order for data to be received. --- Unfortunately, most APIs will require a valid cookie to be included in the

cookiefield within a request's headers in order to be authorized. - Handling pagination: # Handling pagination {#handling-pagination} Learn about the three most popular API pagination techniques and how to handle each of them when scraping an API with pagination. --- When scraping large APIs, you'll quickly realize that most APIs limit the number of results it responds back with.

- Locating API endpoints: # Locating API endpoints {#locating-endpoints} Learn how to effectively locate a website's API endpoints, and learn how to use them to get the data you want faster and more reliably. --- In order to retrieve a website's API endpoints, as well as other data about them, the Network tab within Chrome's (or another browser's) DevTools can be used.

- GraphQL scraping: # GraphQL scraping {#graphql-scraping} **Dig into the topic of scraping APIs which use the latest and greatest API technology - GraphQL.

- Custom queries: # Custom queries {#custom-queries} Learn how to write custom GraphQL queries, how to pass input values into GraphQL requests as variables, and how to retrieve and output the data from a scraper. --- Sometimes, the queries found in the Network tab aren't good enough for your use case.

- Introspection: # Introspection {#introspection} Understand what introspection is, and how it can help you understand a GraphQL API to take advantage of the features it has to offer before writing any code. --- Introspection is when you make a query to the target GraphQL API requesting information about its schema.

- Modifying variables: # Modifying variables {#modifying-variables} Learn how to modify the variables of a JSON format GraphQL query to use the API without needing to write any GraphQL language or create custom queries. --- In the introduction of this course, we searched for the term test on the Cheddar website and discovered a request to their GraphQL API.

- How to retry failed requests: Learn how to re-scrape only failed requests in your run. --- Requests of a scraper can fail for many reasons.

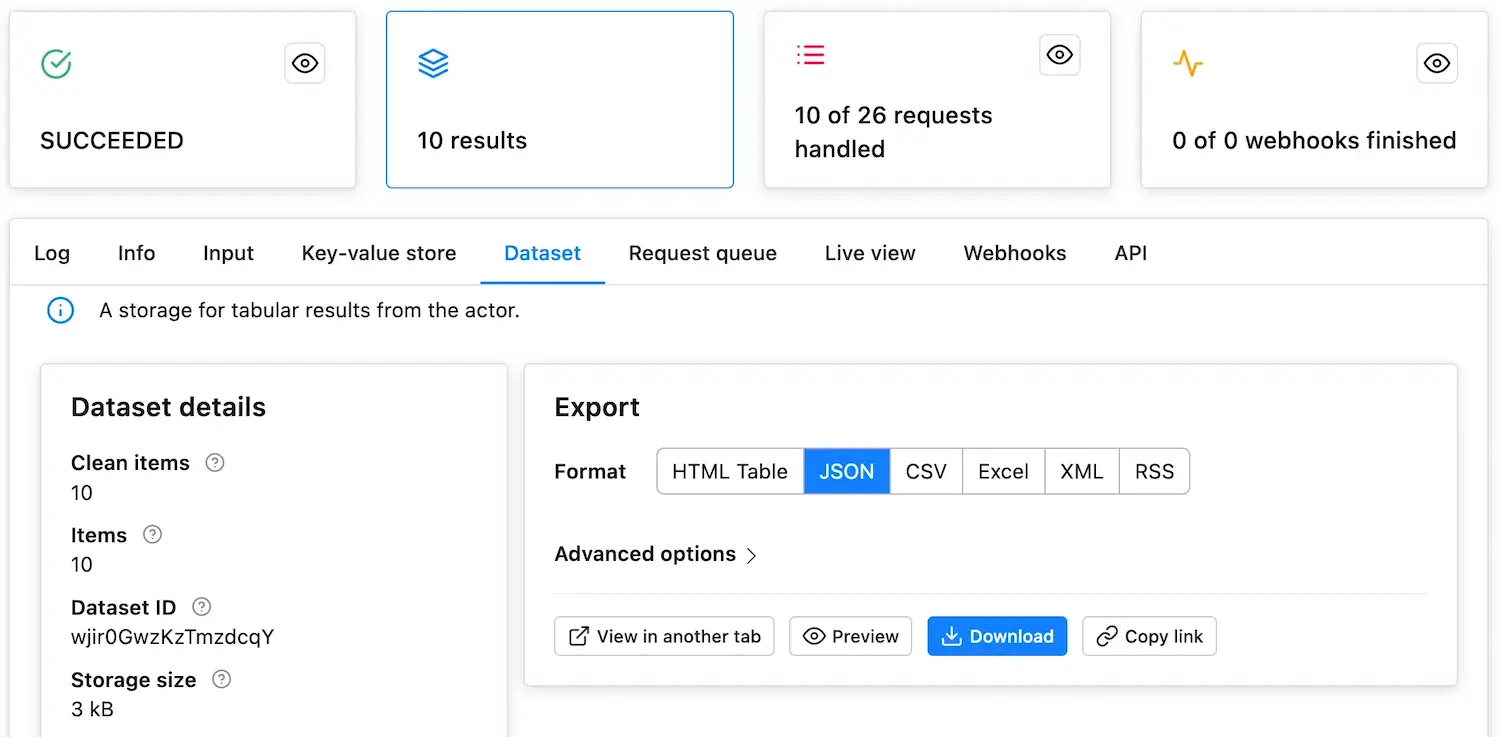

- Run Actor and retrieve data via API: **Learn how to run an Actor/task via the Apify API, wait for the job to finish, and retrieve its output data.

- Tutorials on Apify Actors: Learn how to deploy your API project to the Apify platform. --- This tutorial shows you how to add your existing RapidAPI project to Apify, giving you access to managed hosting, data storage, and a broader user base through Apify Store while maintaining your RapidAPI presence.

- Adding your RapidAPI project to Apify: If you've published an API project on RapidAPI, you can expand your project's visibility by listing it on Apify Store.

- Introduction to the Apify platform: # Introduction to the Apify platform {#about-the-platform} Learn all about the Apify platform, all of the tools it offers, and how it can improve your overall development experience. --- The Apify platform was built to serve large-scale and high-performance web scraping and automation needs.

- Using ready-made Apify scrapers: # Using ready-made Apify scrapers **Discover Apify's ready-made web scraping and automation tools.

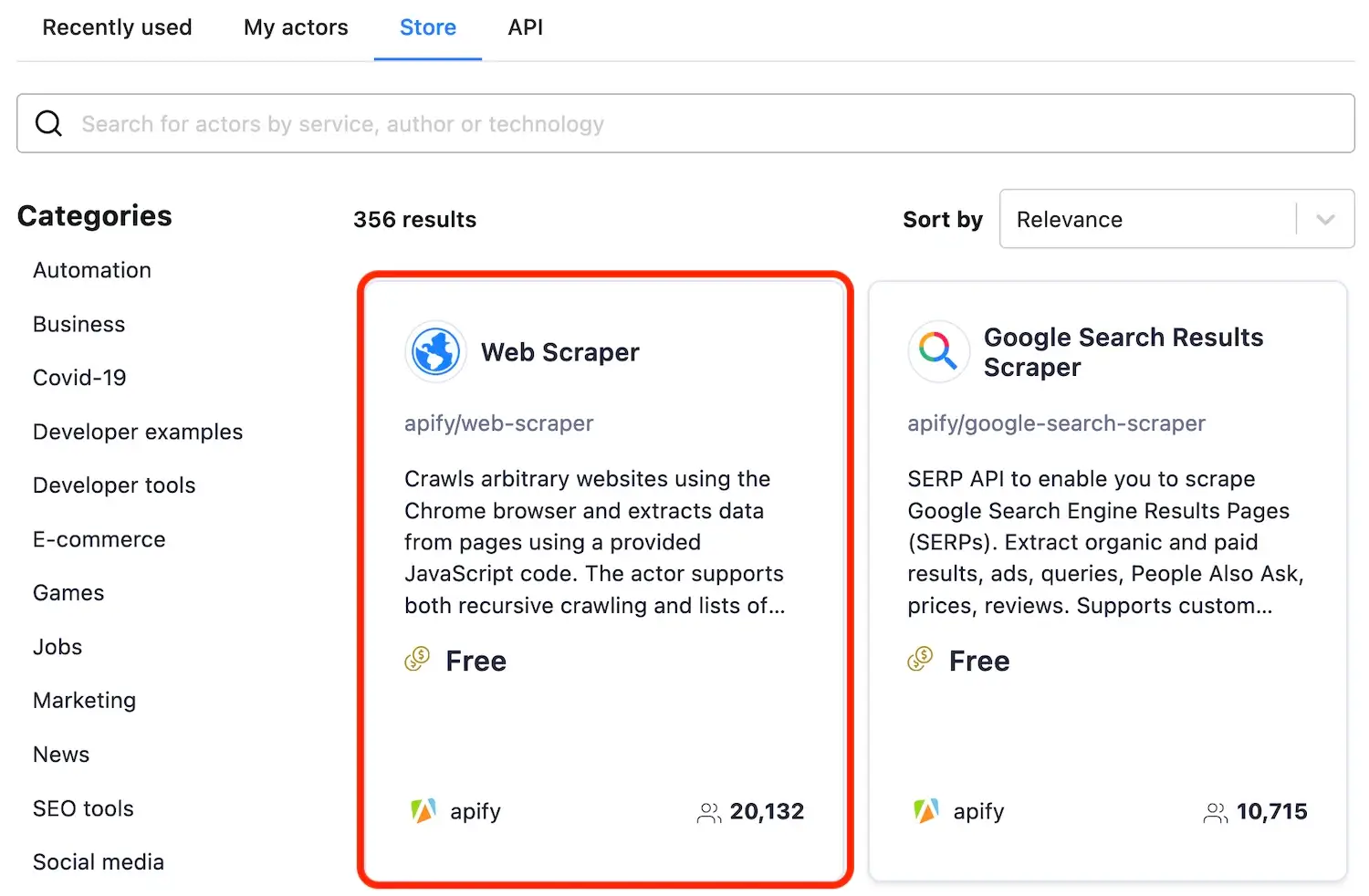

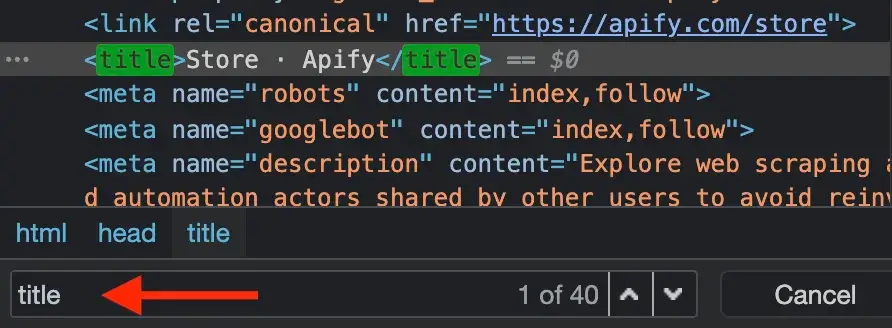

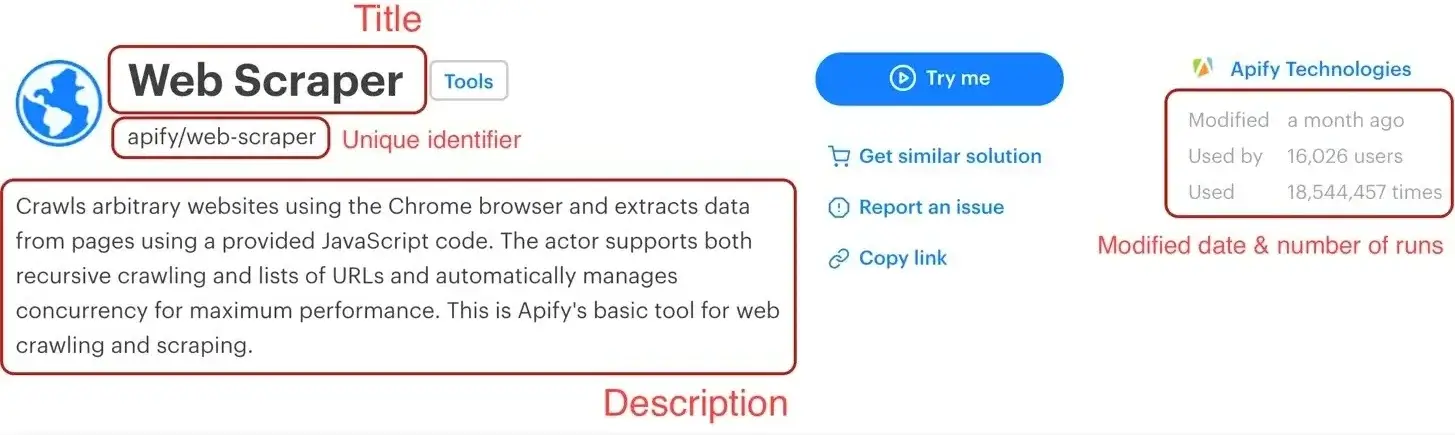

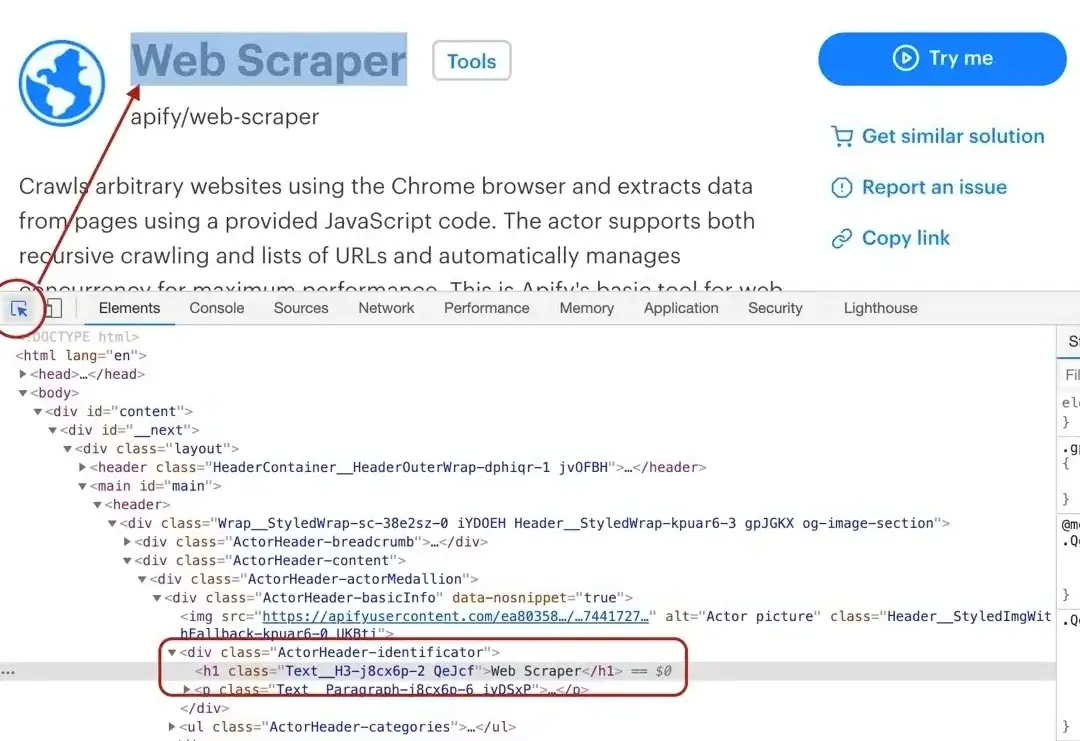

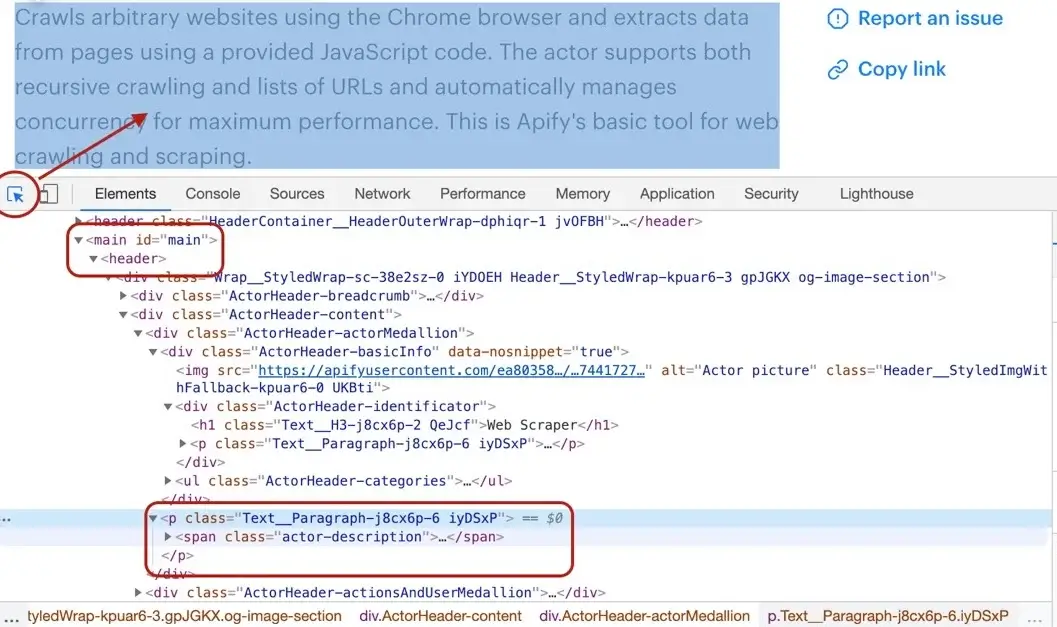

- Scraping with Cheerio Scraper: [//]: # (TODO: Should be updated) # This scraping tutorial will go into the nitty gritty details of extracting data from https://apify.com/store using Cheerio Scraper (apify/cheerio-scraper).

- Getting started with Apify scrapers: [//]: # (TODO: Should be updated) # Welcome to the getting started tutorial!

- Scraping with Puppeteer Scraper: [//]: # (TODO: Should be updated) # This scraping tutorial will go into the nitty gritty details of extracting data from https://apify.com/store using Puppeteer Scraper (apify/puppeteer-scraper).

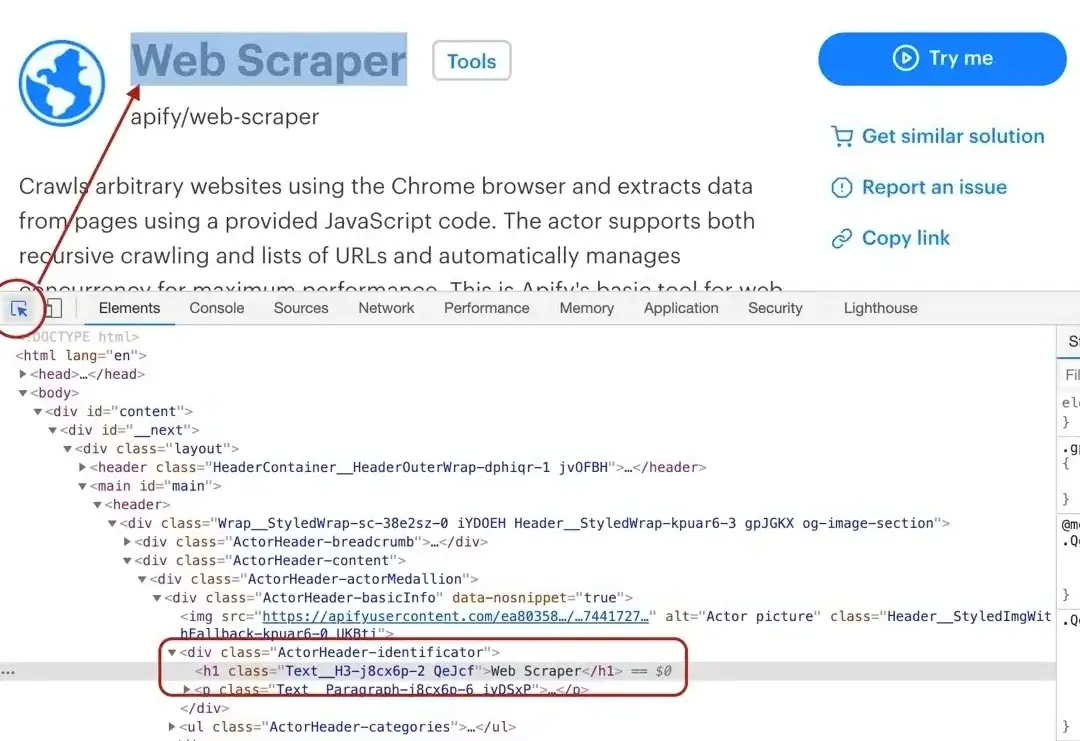

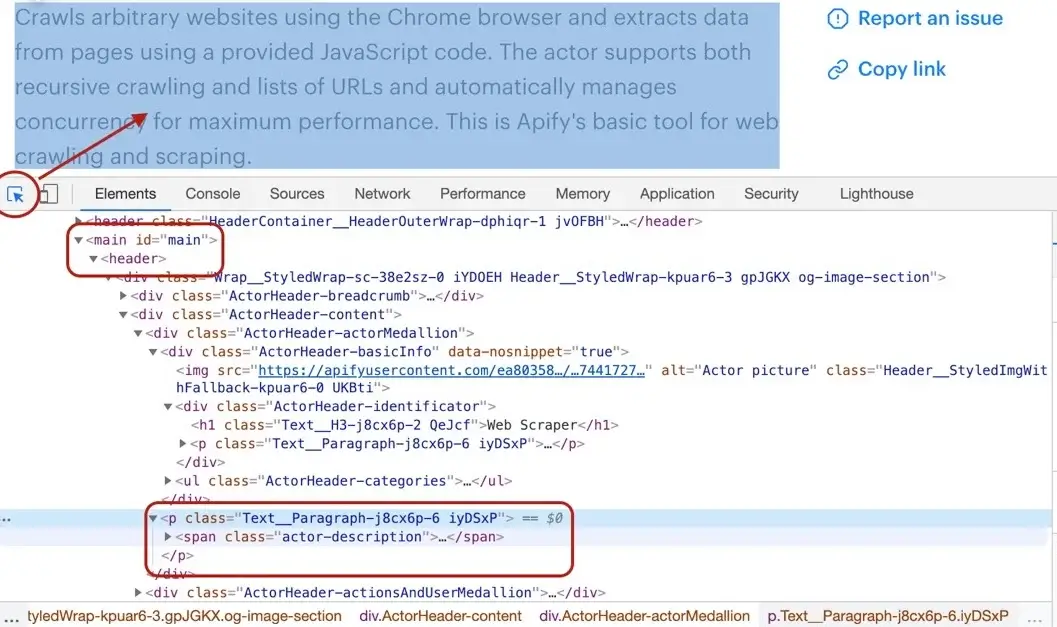

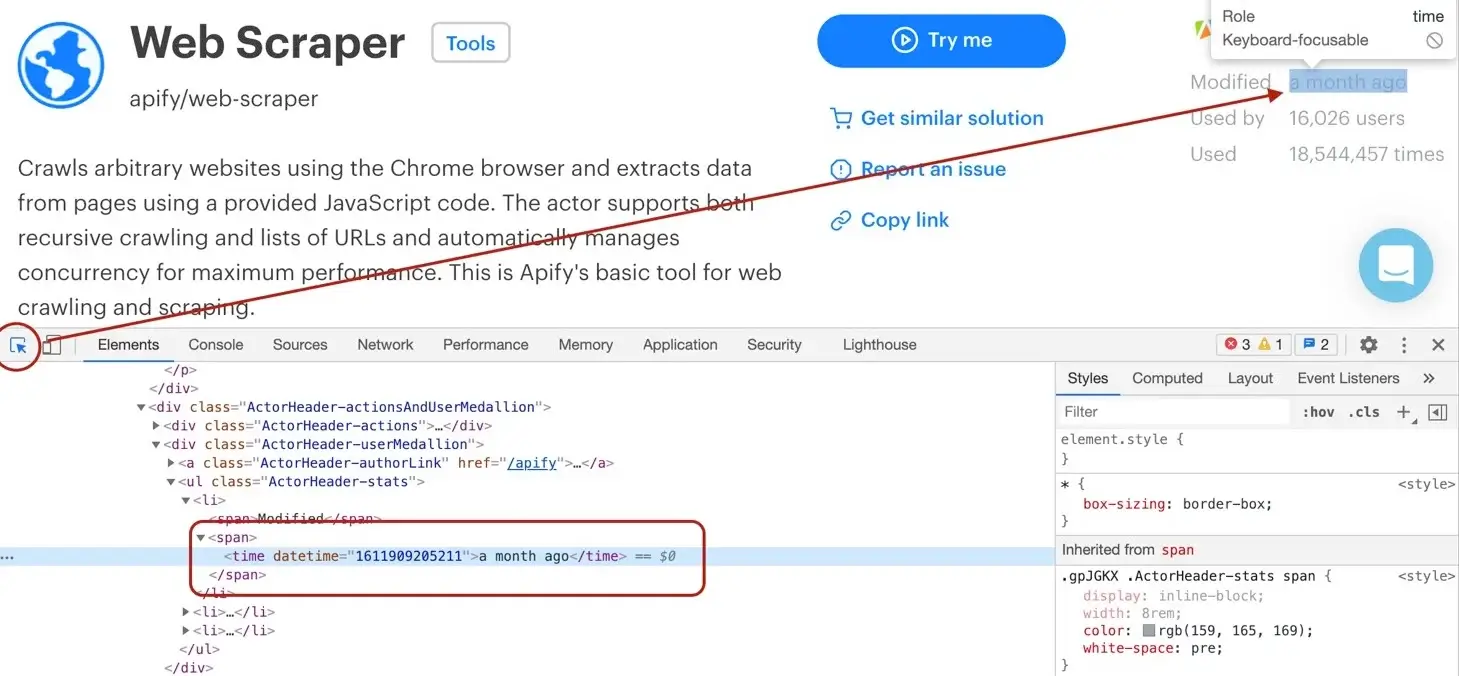

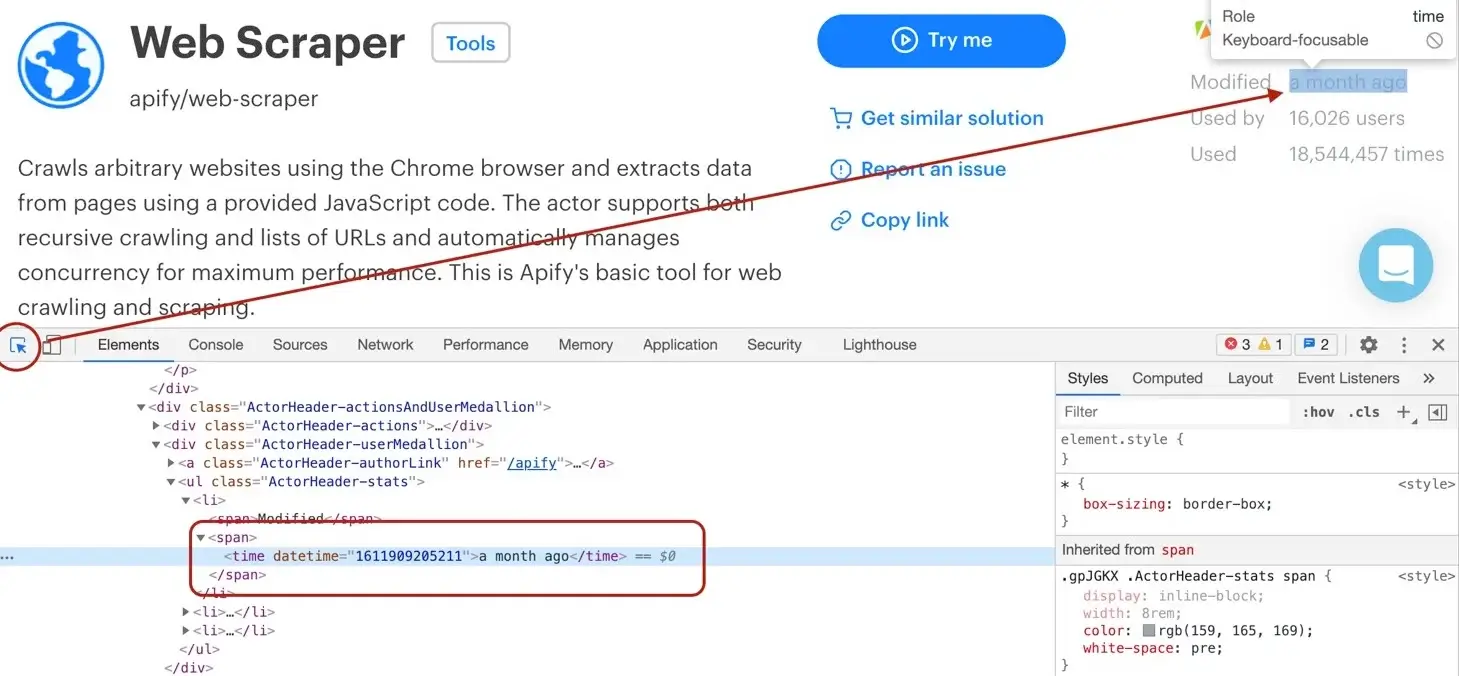

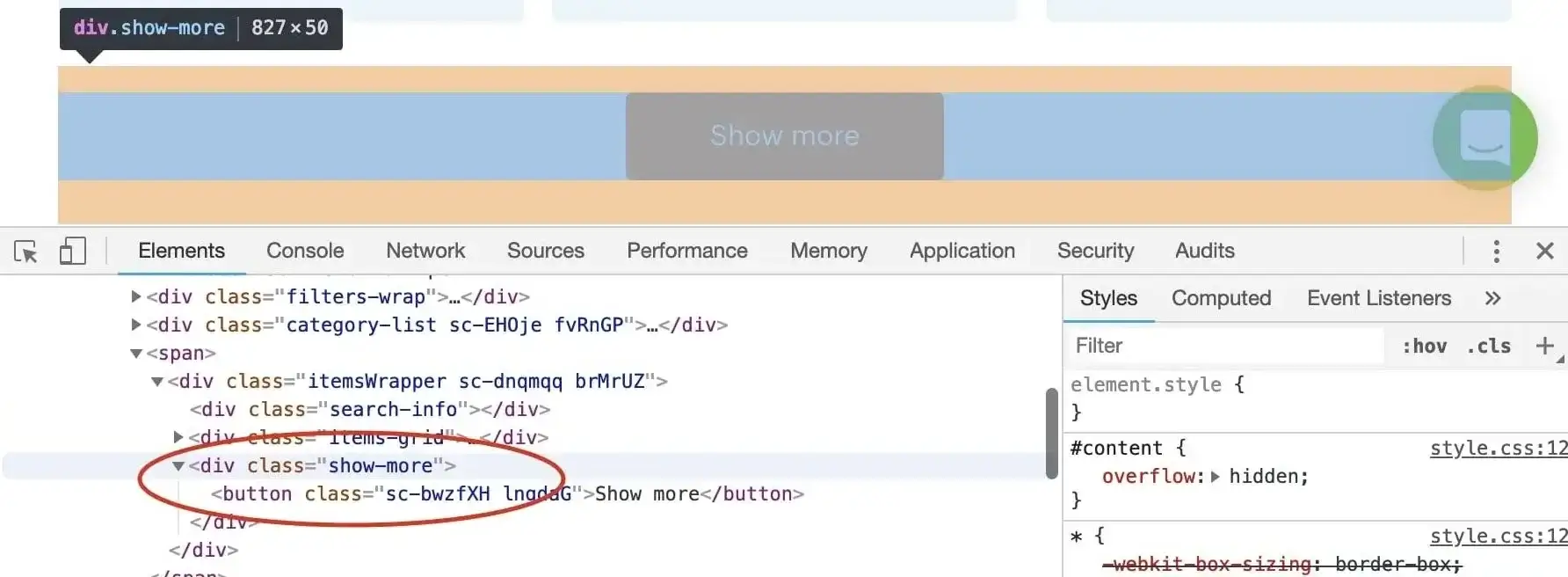

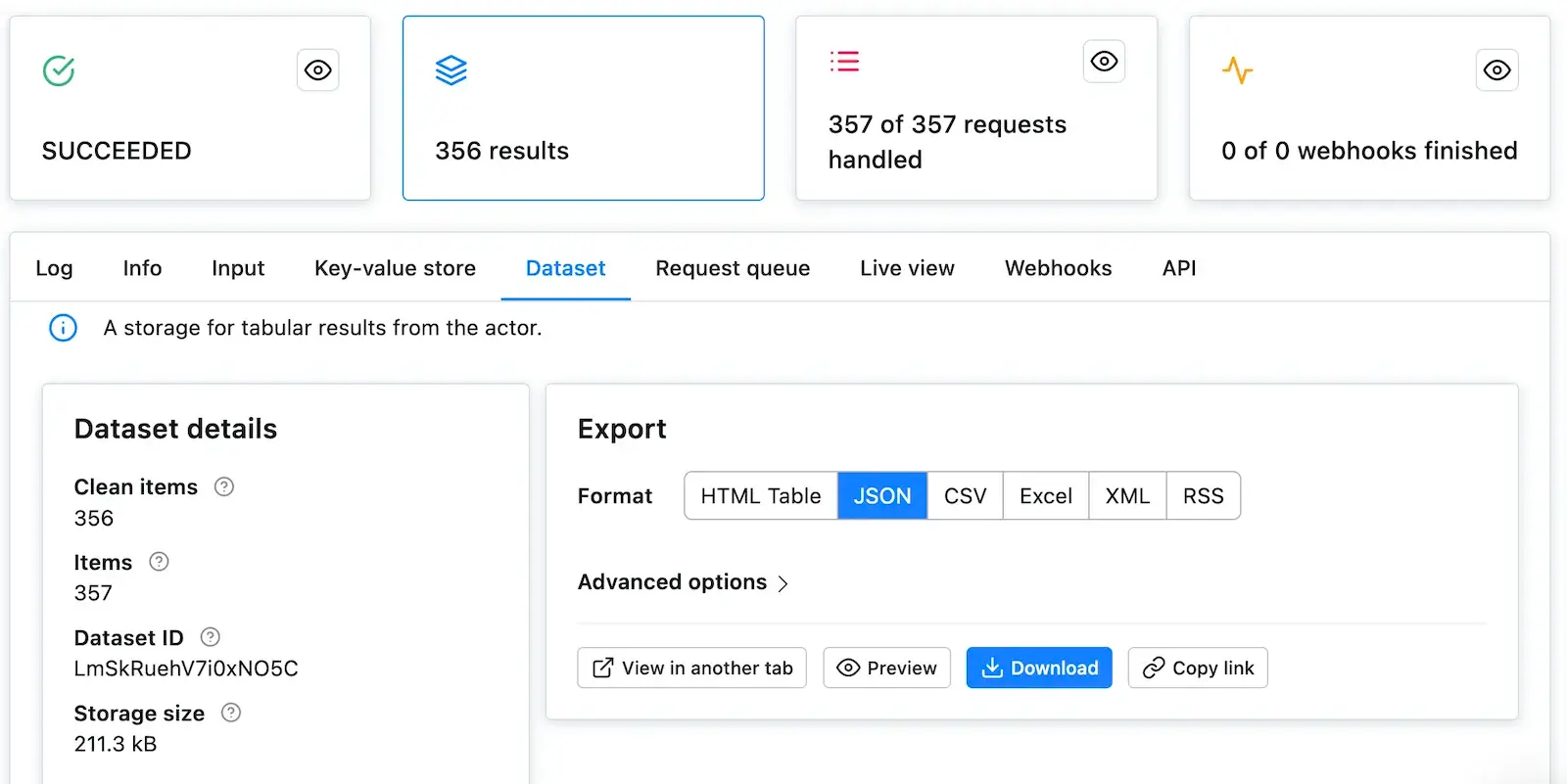

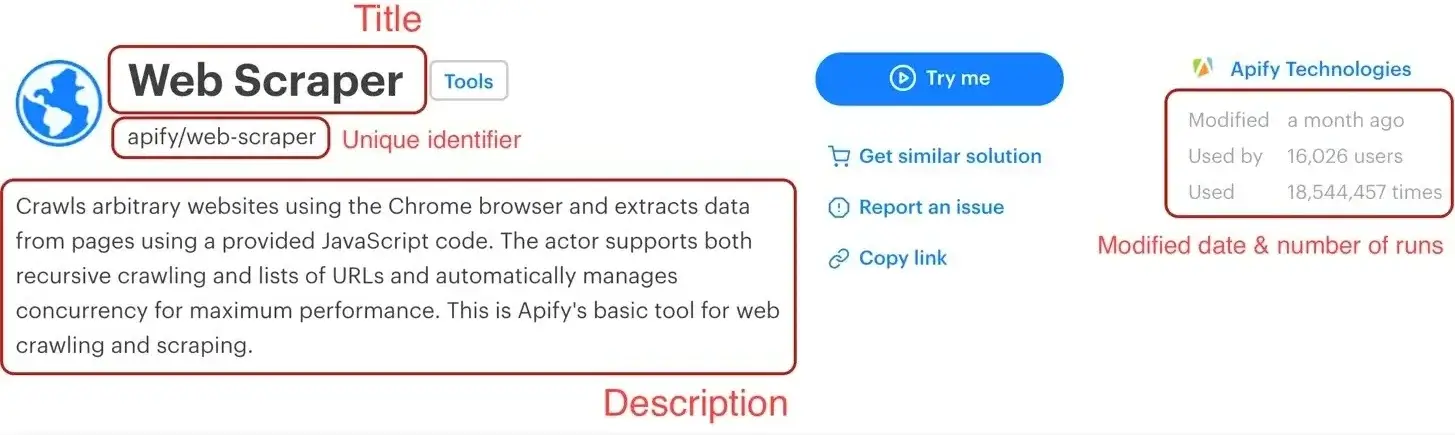

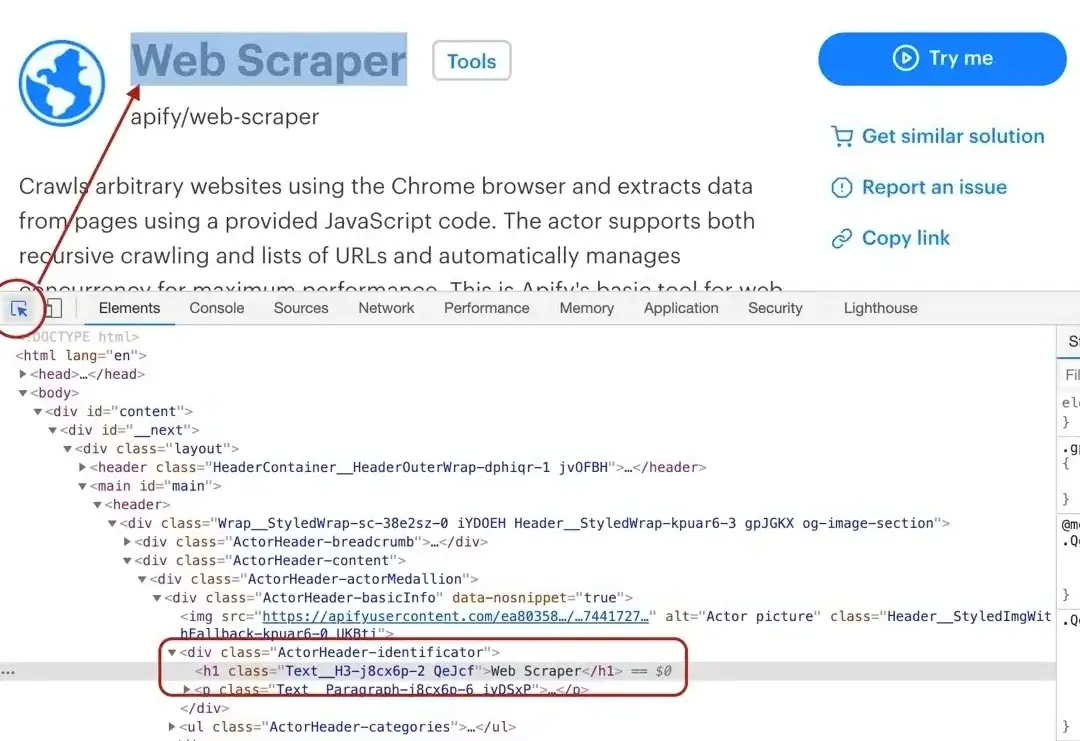

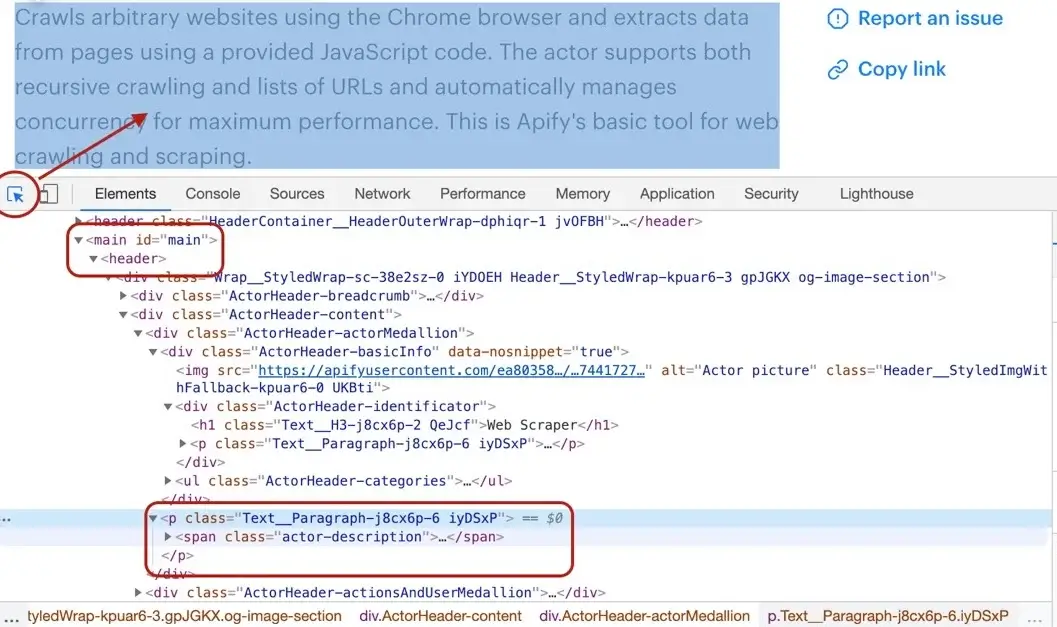

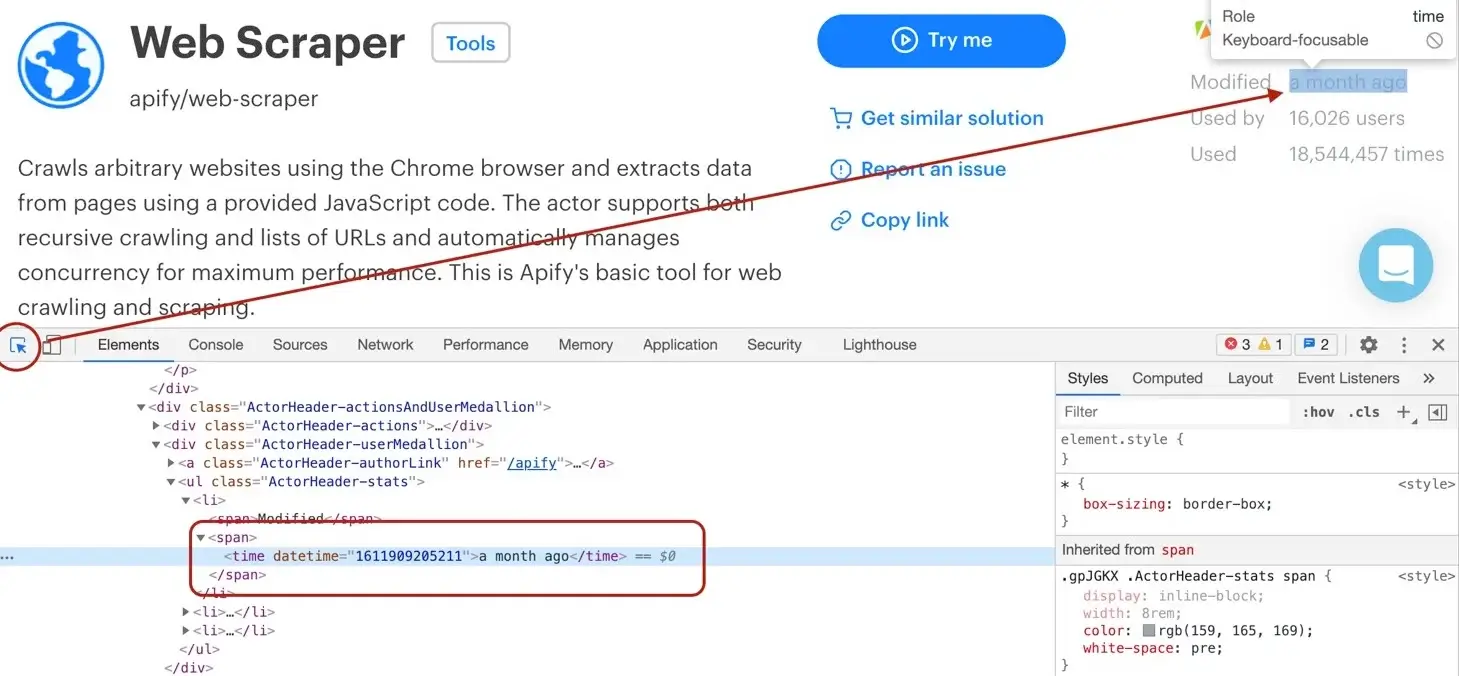

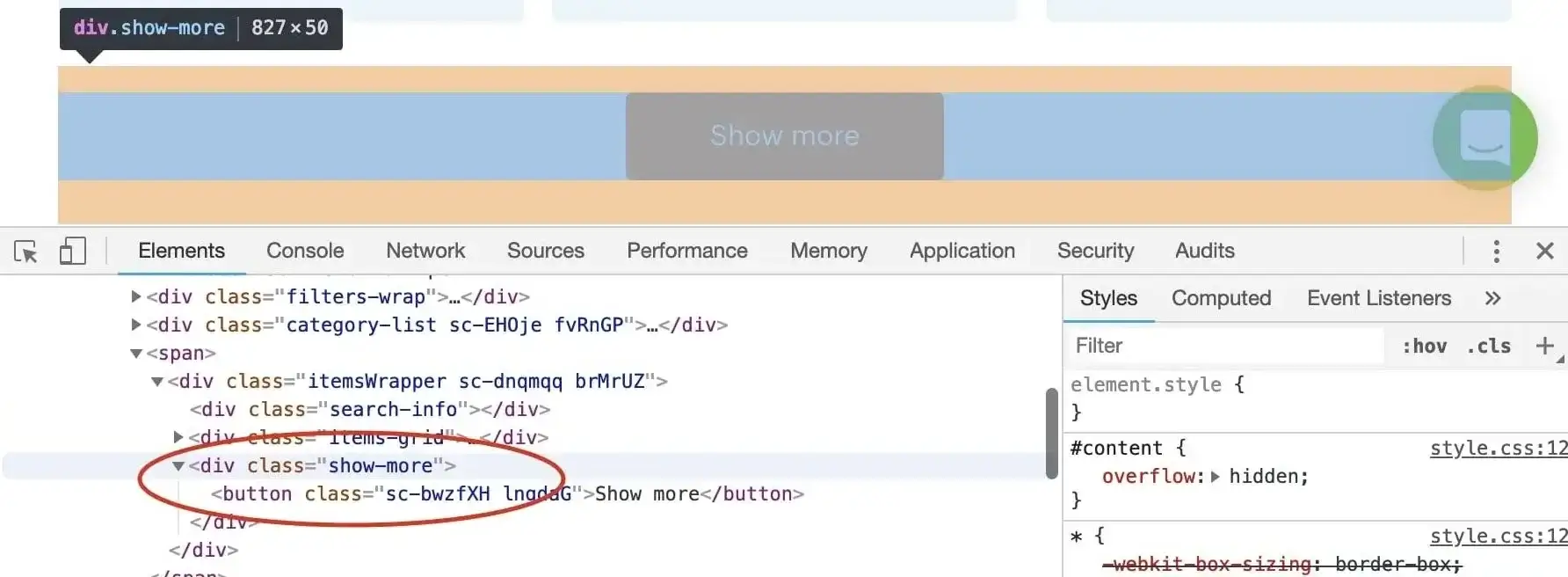

- Scraping with Web Scraper: [//]: # (TODO: Should be updated) # This scraping tutorial will go into the nitty gritty details of extracting data from https://apify.com/store using Web Scraper (apify/web-scraper).

- Validate your Actor idea: Before investing time into building an Actor, validate that people actually need it.

- Find ideas for new Actors: Learn what kind of software tools are suitable to be packaged and published as Actors on Apify, and where you can find inspiration what to build.

- Why publish Actors on Apify: Publishing Actors on Apify Store transforms your web scraping and automation code into revenue-generating products without the overhead of traditional SaaS development.

- Concepts 🤔: # Concepts 🤔 {#concepts} Learn about some common yet tricky concepts and terms that are used frequently within the academy, as well as in the world of scraper development. --- You'll see some terms and concepts frequently repeated throughout various courses in the academy.

- CSS selectors: CSS selectors are patterns used to select HTML elements on a web page.

- Dynamic pages and single-page applications (SPAs): # Dynamic pages and single-page applications (SPAs) {#dynamic-pages} Understand what makes a page dynamic, and how a page being dynamic might change your approach when writing a scraper for it. --- Oftentimes, web pages load additional information dynamically, long after their main body is loaded in the browser.

- HTML elements: An HTML element is a building block of an HTML document.

- HTTP cookies: # HTTP cookies {#cookies} Learn a bit about what cookies are, and how they are utilized in scrapers to appear logged-in, view specific data, or even avoid blocking. --- HTTP cookies are small pieces of data sent by the server to the user's web browser, which are typically stored by the browser and used to send later requests to the same server.

- HTTP headers: # HTTP headers {#headers} Understand what HTTP headers are, what they're used for, and three of the biggest differences between HTTP/1.1 and HTTP/2 headers. --- HTTP headers let the client and the server pass additional information with an HTTP request or response.

- Querying elements:

document.querySelector()anddocument.querySelectorAll()are JavaScript functions that allow you to select elements on a web page using CSS selectors. - What is robotic process automation (RPA)?: # What is robotic process automation (RPA)?

- Deploying your code to Apify: # Deploying your code to Apify {#deploying} In this course learn how to take an existing project of yours and deploy it to the Apify platform as an Actor. --- This section will discuss how to use your newfound knowledge of the Apify platform and Actors from the Getting started section to deploy your existing project's code to the Apify platform as an Actor.

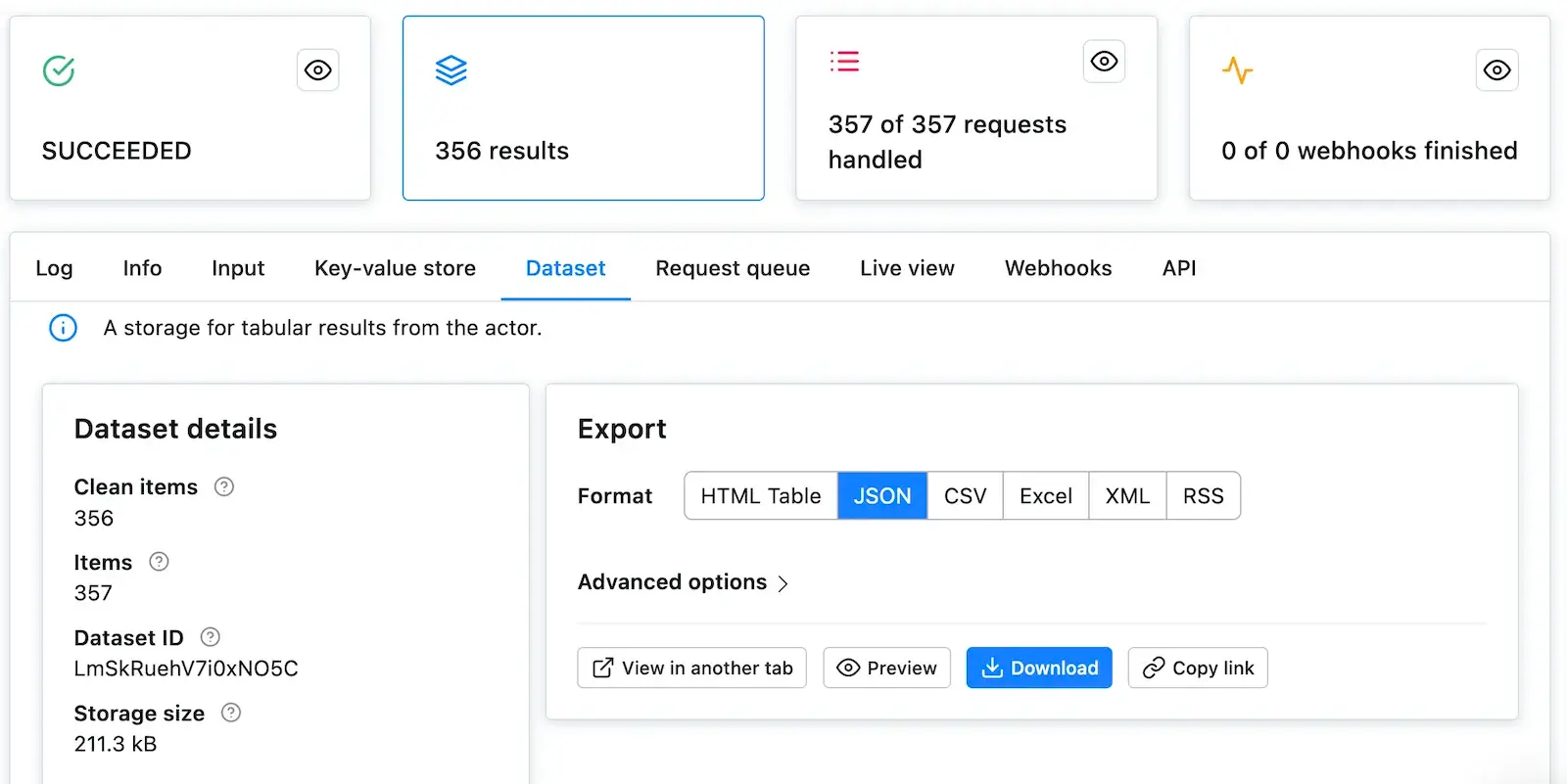

- Creating dataset schema: Learn how to generate an appealing Overview table interface to preview your Actor results in real time on the Apify platform. --- The dataset schema generates an interface that enables users to instantly preview their Actor results in real time.

- Publishing your Actor: Push local code to the platform, or create a new Actor on the console and integrate it with a Git repository to optionally automatically rebuild any new changes. --- Once you've actorified your code, there are two ways to deploy it to the Apify platform.

- Creating Actor Dockerfile: Understand how to write a Dockerfile (Docker image blueprint) for your project so that it can be run within a Docker container on the Apify platform. --- The Dockerfile is a file which gives the Apify platform (or Docker, more specifically) instructions on how to create an environment for your code to run in.

- How to write Actor input schema: Learn how to generate a user interface on the platform for your Actor's input with a single file - the INPUT_SCHEMA.json file. --- Though writing an input schema for an Actor is not a required step, it is most definitely an ideal one.

- Managing Actor inputs and outputs: **Learn to accept input into your Actor, do something with it, and then return output.

- Expert scraping with Apify: # Expert scraping with Apify {#expert-scraping} After learning the basics of Actors and Apify, learn to develop pro-level scrapers on the Apify platform with this advanced course. --- This course will teach you the nitty gritty of what it takes to build pro-level scrapers with Apify.

- Webhooks & advanced Actor overview: # Webhooks & advanced Actor overview {#webhooks-and-advanced-actors} **Learn more advanced details about Actors, how they work, and the default configurations they can take.

- Apify API & client: # Apify API & client {#api-and-client} Gain an in-depth understanding of the two main ways of programmatically interacting with the Apify platform - through the API, and through a client. --- You can use one of the two main ways to programmatically interact with the Apify platform: by directly using Apify's RESTful API, or by using the JavaScript and Python API clients.

- Bypassing anti-scraping methods: # Bypassing anti-scraping methods {#bypassing-anti-scraping-methods} Learn about bypassing anti-scraping methods using proxies and proxy/session rotation together with Crawlee and the Apify SDK. --- Effectively bypassing anti-scraping software is one of the most crucial, but also one of the most difficult skills to master.

- Managing source code: # Managing source code {#managing-source-code} **Learn how to manage your Actor's source code more efficiently by integrating it with a GitHub repository.

- Migrations & maintaining state: # Migrations & maintaining state {#migrations-maintaining-state} Learn about what Actor migrations are and how to handle them properly so that the state is not lost and runs can safely be resurrected. --- We already know that Actors are Docker containers that can be run on any server.

- Saving useful run statistics: # Saving useful run statistics {#savings-useful-run-statistics} Understand how to save statistics about an Actor's run, what types of statistics you can save, and why you might want to save them for a large-scale scraper. --- Using Crawlee and the Apify SDK, we are now able to collect and format data coming directly from websites and save it into a Key-Value store or Dataset.

- Solutions: # Solutions **View all of the solutions for all of the activities and tasks of this course.

- Handling migrations: # Handling migrations {#handling-migrations} Get real-world experience of maintaining a stateful object stored in memory, which will be persisted through migrations and even graceful aborts. --- Let's first head into our demo-actor and create a new file named asinTracker.js in the src folder.

- Integrating webhooks: # Integrating webhooks {#integrating-webhooks} **Learn how to integrate webhooks into your Actors.

- Managing source: # Managing source View in-depth answers for all three of the quiz questions that were provided in the corresponding lesson about managing source code. --- In the lesson corresponding to this solution, we discussed an extremely important topic: source code management.

- Rotating proxies/sessions: # Rotating proxies/sessions {#rotating-proxy-sessions} Learn firsthand how to rotate proxies and sessions in order to avoid the majority of the most common anti-scraping protections. --- If you take a look at our current code for the Amazon scraping Actor, you might notice this snippet:

js const proxyConfiguration = await Actor.createProxyConfiguration({ groups: ['RESIDENTIAL'], });We didn't provide much explanation for this initially, as it was not directly relevant to the lesson at hand. - Saving run stats: # Saving run stats {#saving-stats} Implement the saving of general statistics about an Actor's run, as well as adding request-specific statistics to dataset items. --- The code in this solution will be similar to what we already did in the Handling migrations solution; however, we'll be storing and logging different data.

- Using the Apify API & JavaScript client: # Using the Apify API & JavaScript client {#using-api-and-client} Learn how to interact with the Apify API directly through the well-documented RESTful routes, or by using the proprietary Apify JavaScript client. --- Since we need to create another Actor, we'll once again use the

apify createcommand and start from an empty template. - Using storage & creating tasks: # Using storage & creating tasks {#using-storage-creating-tasks} ## Quiz answers 📝 {#quiz-answers} Q: What is the relationship between Actors and tasks? A: Tasks are pre-configured runs of Actors.

- Tasks & storage: # Tasks & storage {#tasks-and-storage} **Understand how to save the configurations for Actors with Actor tasks.

- Monetizing your Actor: Learn how you can monetize your web scraping and automation projects by publishing Actors to users in Apify Store. --- When you publish your Actor on the Apify platform, you have the option to make it a Paid Actor and earn revenue from users who benefit from your tool.

- Getting started: # Getting started {#getting-started} Get started with the Apify platform by creating an account and learning about the Apify Console, which is where all Apify Actors are born! --- Your gateway to the Apify platform is your Apify account.

- Actors: **What is an Actor?

- The Apify API: # The Apify API {#the-apify-api} Learn how to use the Apify API to programmatically call your Actors, retrieve data stored on the platform, view Actor logs, and more! --- Apify's API is your ticket to the Apify platform without even needing to access the Apify Console web-interface.

- Apify client: # Apify client {#apify-client} Interact with the Apify API in your code by using the apify-client package, which is available for both JavaScript and Python. --- Now that you've gotten your toes wet with interacting with the Apify API through raw HTTP requests, you're ready to become familiar with the Apify client, which is a package available for both JavaScript and Python that allows you to interact with the API in your code without explicitly needing to make any GET or POST requests.

- Creating Actors: **This lesson offers hands-on experience in building and running Actors in Apify Console using a template.

- Inputs & outputs: Create an Actor from scratch which takes an input, processes that input, and then outputs a result that can be used elsewhere. --- Actors, as any other programs, take inputs and generate outputs.

- Why a glossary?: # Why a glossary?

- Scraping with Node.js: # Scraping with Node.js A collection of various Node.js tutorials on scraping sitemaps, optimizing your scrapers, using popular Node.js web scraping libraries, and more. --- This section contains various web-scraping or web-scraping related tutorials for Node.js.

- How to add external libraries to Web Scraper: Sometimes you need to use some extra JavaScript in your Web Scraper page functions.

- How to analyze and fix errors when scraping a website: # How to analyze and fix errors when scraping a website {#scraping-with-sitemaps} **Learn how to deal with random crashes in your web-scraping and automation jobs.

- Apify's free Google SERP API: You need to regularly grab SERP data about your target keywords?

- Avoid EACCES error in Actor builds with a custom Dockerfile: Sometimes when building an Actor using a custom Dockerfile, you might receive errors like: ```shell Missing write access to ...

- Block requests in Puppeteer: :::caution Improve Performance: Use

blockRequestsUnfortunately, in the recent version of Puppeteer, request interception disables the native cache and slows down the Actor significantly. - How to optimize Puppeteer by caching responses: # How to optimize Puppeteer by caching responses {#caching-responses-in-puppeteer} Learn why it is important for performance to cache responses in memory when intercepting requests in Puppeteer and how to implement it in your code. --- > In the latest version of Puppeteer, the request-interception function inconveniently disables the native cache and significantly slows down the crawler.

- How to choose the right scraper for the job: # How to choose the right scraper for the job {#choosing-the-right-scraper} Learn basic web scraping concepts to help you analyze a website and choose the best scraper for your particular use case. --- You can use one of the two main ways to proceed with building your crawler: 1.

- How to scrape from dynamic pages: # How to scrape from dynamic pages {#dealing-with-dynamic-pages} **Learn about dynamic pages and dynamic content.

- Running code in a browser console: A lot of beginners struggle through trial and error while scraping a simple site.

- Filter out blocked proxies using sessions: _This article explains how the problem was solved before the SessionPool class was added into Apify SDK.

- BasicCrawler: One of the main defense mechanisms websites use to ensure they are not scraped by bots is allowing only a limited number of requests from a specific IP address.

- How to fix 'Target closed' error in Puppeteer and Playwright: # How to fix 'Target closed' error in Puppeteer and Playwright Learn about common causes for the 'Target closed' error in browser automation and what you can do to fix it. --- The

Target closederror happens when you try to access thepageobject (or some of its parent objects like thebrowser), but the underlying browser tab has already been closed. - How to save screenshots from puppeteer: A good way to debug your puppeteer crawler in Apify Actors is to save a screenshot of a browser window to the Apify key-value store.

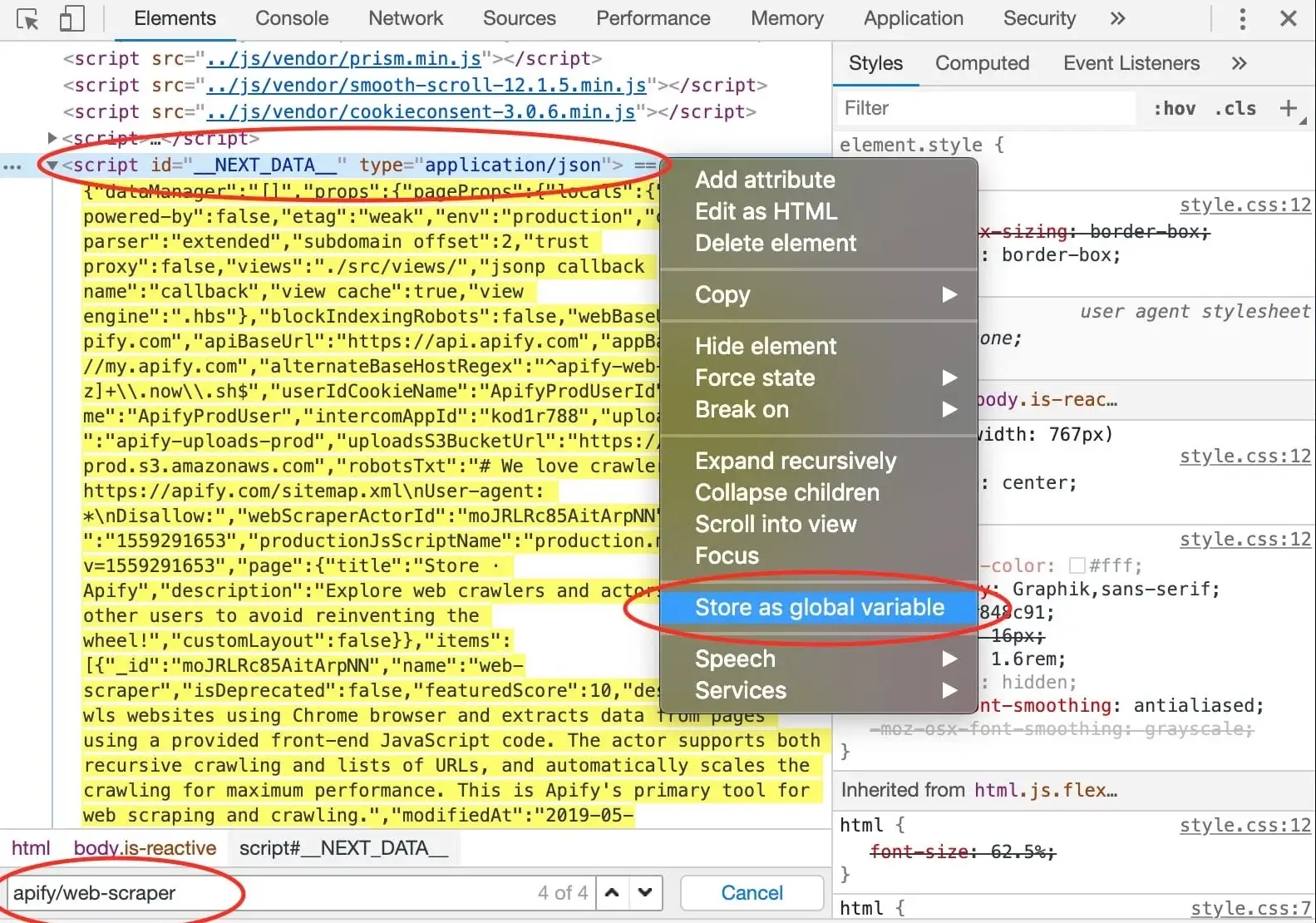

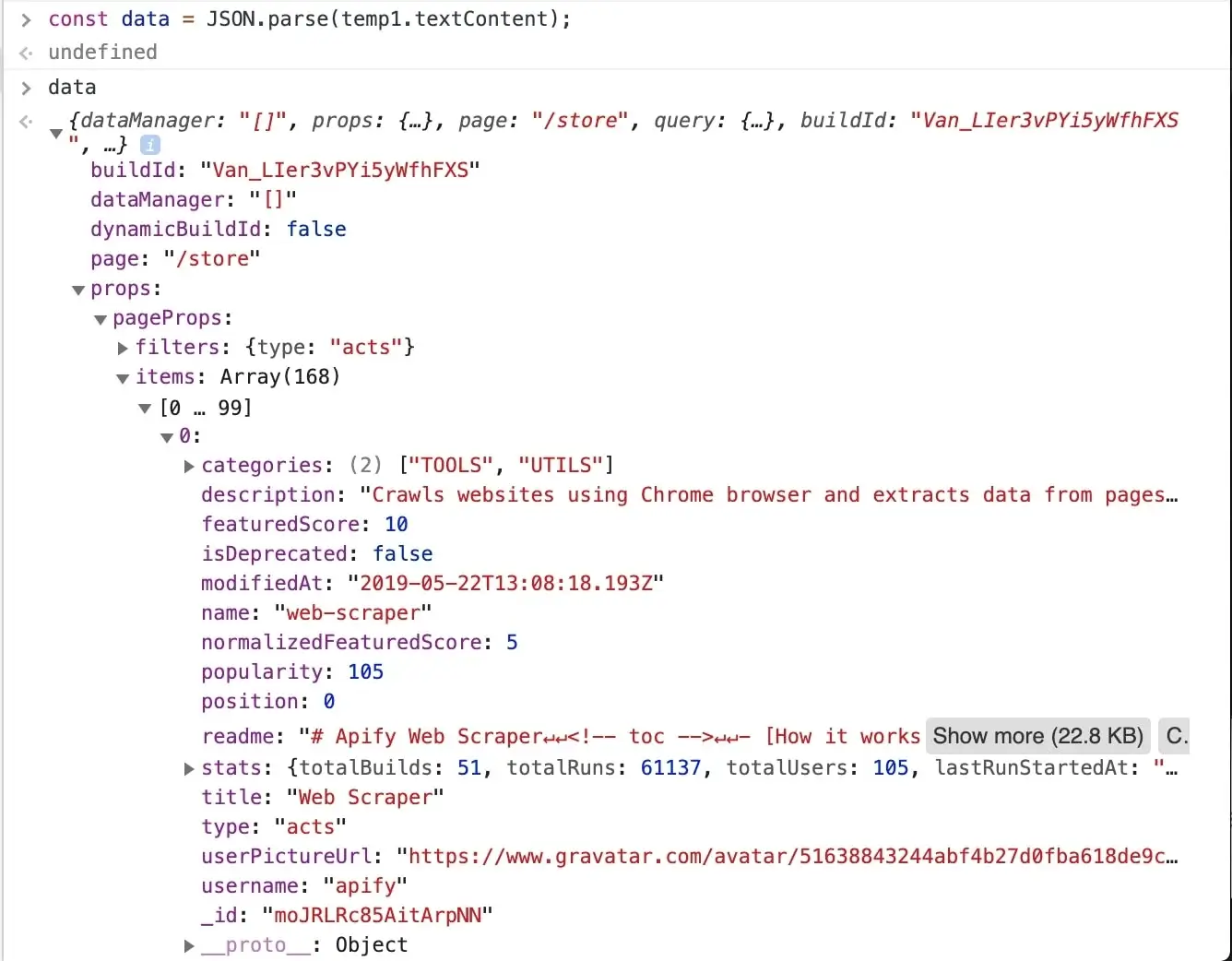

- How to scrape hidden JavaScript objects in HTML: # How to scrape hidden JavaScript objects in HTML {#what-is-js-in-html} Learn about "hidden" data found within the JavaScript of certain pages, which can increase the scraper reliability and improve your development experience. --- Depending on the technology the target website is using, the data to be collected not only can be found within HTML elements, but also in a JSON format within `` tags in the DOM.

- Scrape website in parallel with multiple Actor runs: # Scrape website in parallel with multiple Actor runs **Learn how to run multiple instances of an Actor to scrape a website faster.

- How to optimize and speed up your web scraper: # How to optimize and speed up your web scraper {#optimizing-scrapers} **We all want our scrapers to run as cost-effective as possible.

- Enqueuing start pages for all keywords: Sometimes you need to process the same URL several times, but each time with a different setup.

- Request labels and how to pass data to other requests: Are you trying to use Actors for the first time and don't know how to deal with the request label or how to pass data to the request?

- How to scrape from sitemaps: # How to scrape from sitemaps {#scraping-with-sitemaps} :::tip Processing sitemaps automatically with Crawlee Crawlee allows you to scrape sitemaps with ease.

- How to scrape sites with a shadow DOM: # How to scrape sites with a shadow DOM {#scraping-shadow-doms} **The shadow DOM enables isolation of web components, but causes problems for those building web scrapers.

- Scraping a list of URLs from a Google Sheets document: You can export URLs from Google Sheets such as this one directly into an Actor's Start URLs field.

- Downloading the file to memory: When doing web automation with Apify, it can sometimes be necessary to submit an HTML form with a file attachment.

- Submitting forms on .ASPX pages: Apify users sometimes need to submit a form on pages created with ASP.NET (URL typically ends with .aspx).

- Using man-in-the-middle proxy to intercept requests in Puppeteer: Sometimes you may need to intercept (or maybe block) requests in headless Chrome / Puppeteer, but

page.setRequestInterception()is not 100% reliable when the request is started in a new window. - Waiting for dynamic content: Use these helper functions to wait for data: -

page.waitForin Puppeteer (or Puppeteer Scraper (apify/puppeteer-scraper)). - When to use Puppeteer Scraper: You may have read in the Web Scraper readme or somewhere else at Apify that Puppeteer Scraper is more powerful and gives you more control over the browser, enabling you to do almost anything.

- How to use Apify from PHP: # How to use Apify from PHP Apify's RESTful API allows you to use the platform from basically anywhere.

- Puppeteer & Playwright course: # Puppeteer & Playwright course {#puppeteer-playwright-course} Learn in-depth how to use two of the most popular Node.js libraries for controlling a headless browser - Puppeteer and Playwright. --- Puppeteer and Playwright are libraries that allow you to automate browsing.

- Browser: # Browser {#browser} Understand what the Browser object is in Puppeteer/Playwright, how to create one, and a bit about how to interact with one. --- In order to automate a browser in Playwright or Puppeteer, we need to open one up programmatically.

- Creating multiple browser contexts: # Creating multiple browser contexts {#creating-browser-contexts} Learn what a browser context is, how to create one, how to emulate devices, and how to use browser contexts to automate multiple sessions at one time. --- A BrowserContext is an isolated incognito session within a Browser instance.

- Common use cases: # Common use cases {#common-use-cases} Learn about some of the most common use cases of Playwright and Puppeteer, and how to handle these use cases when you run into them. --- You can do about anything with a headless browser, but, there are some extremely common use cases that are important to understand and be prepared for when you might run into them.

- Downloading files: # Downloading files Learn how to automatically download and save files to the disk using two of the most popular web automation libraries, Puppeteer and Playwright. --- Downloading a file using Puppeteer can be tricky.

- Logging into a website: # Logging into a website {#logging-into-a-website} Understand the "login flow" - logging into a website, then maintaining a logged in status within different browser contexts for an efficient automation process. --- Whether it's auto-renewing a service, automatically sending a message on an interval, or automatically cancelling a Netflix subscription, one of the most popular things headless browsers are used for is automating things within a user's account on a certain website.

- Paginating through results: # Paginating through results {#paginating-through-results} Learn how to paginate through results on websites that use either pagination based on page numbers or dynamic lazy loading. --- If you're trying to collect data on a website that has millions, thousands, or even hundreds of results, it is very likely that they are paginating their results to reduce strain on their back-end as well as on the users loading and rendering the content.

- Scraping iFrames: # Scraping iFrames **Extracting data from iFrames can be frustrating.

- Submitting a form with a file attachment: # Submitting a form with a file attachment Understand how to download a file, attach it to a form using a headless browser in Playwright or Puppeteer, then submit the form. --- We can use Puppeteer or Playwright to simulate submitting the same way a human-operated browser would.

- Executing scripts: # Executing scripts {#executing-scripts} Understand the two different contexts which your code can be run in, and how to run custom scripts in the context of the browser. --- An important concept to understand when dealing with headless browsers is the context in which your code is being run.

- Extracting data: # Extracting data {#extracting-data} Learn how to extract data from a page with evaluate functions, then how to parse it by using a second library called Cheerio. --- Now that we know how to execute scripts on a page, we're ready to learn a bit about data extraction.

- Injecting code: # Injecting code {#injecting-code} Learn how to inject scripts prior to a page's load (pre-injecting), as well as how to expose functions to be run at a later time on the page. --- In the previous lesson, we learned how to execute code on the page using

page.evaluate(), and though this fits the majority of use cases, there are still some more unusual cases. - Opening a page: # Opening a page {#opening-a-page} Learn how to create and open a Page with a Browser, and how to use it to visit and programmatically interact with a website. --- When you open up your regular browser and visit a website, you open up a new page (or tab) before entering the URL in the search bar and hitting the Enter key.

- Interacting with a page: # Interacting with a page {#interacting-with-a-page} **Learn how to programmatically do actions on a page such as clicking, typing, and pressing keys.

- Page methods: # Page methods {#page-methods} Understand that the Page object has many different methods to offer, and learn how to use two of them to capture a page's title and take a screenshot. --- Other than having methods for interacting with a page and waiting for events and elements, the Page object also supports various methods for doing other things, such as reloading, screenshotting, changing headers, and extracting the page's content.

- Waiting for elements and events: # Waiting for elements and events {#waiting-for-elements-and-events} Learn the importance of waiting for content and events before running interaction or extraction code, as well as the best practices for doing so. --- In a perfect world, every piece of content served on a website would be loaded instantaneously.

- Using proxies: # Using proxies {#using-proxies} Understand how to use proxies in your Puppeteer and Playwright requests, as well as a couple of the most common use cases for proxies. --- Proxies are a great way of appearing as if you are making requests from a different location.

- Reading & intercepting requests: # Reading & intercepting requests {#reading-intercepting-requests} **You can use DevTools, but did you know that you can do all the same stuff (plus more) programmatically?

- Scraping with Python: # Scraping with Python A collection of various Python tutorials to aid you in your journey to becoming a master web scraping and automation developer. --- This section contains various web-scraping or web-scraping related tutorials for Python.

- How to process data in Python using Pandas: # How to process data in Python using Pandas Learn how to process the resulting data of a web scraper in Python using the Pandas library, and how to visualize the processed data using Matplotlib. --- In the previous tutorial, we learned how to scrape data from the web in Python using the Beautiful Soup library.

- How to scrape data in Python using Beautiful Soup: # How to scrape data in Python using Beautiful Soup Learn how to create a Python Actor and use Python libraries to scrape, process and visualize data extracted from the web. --- Web scraping is not limited to the JavaScript world.

- Run a web server on the Apify platform: **A web server running in an Actor can act as a communication channel with the outside world.

- Web scraping basics for JavaScript devs: Learn how to use JavaScript to extract information from websites in this practical course, starting from the absolute basics. --- In this course we'll use JavaScript to create an application for watching prices.

- Crawling websites with Node.js: **In this lesson, we'll follow links to individual product pages.

- Extracting data from a web page with browser DevTools: In this lesson we'll use the browser tools for developers to manually extract product data from an e-commerce website. --- In our pursuit to scrape products from the Sales page, we've been able to locate parent elements containing relevant data.

- Inspecting web pages with browser DevTools: In this lesson we'll use the browser tools for developers to inspect and manipulate the structure of a website. --- A browser is the most complete tool for navigating websites.

- Locating HTML elements on a web page with browser DevTools: In this lesson we'll use the browser tools for developers to manually find products on an e-commerce website. --- Inspecting Wikipedia and tweaking its subtitle is fun, but let's shift gears and focus on building an app to track prices on an e-commerce site.

- Downloading HTML with Node.js: **In this lesson we'll start building a Node.js application for watching prices.

- Extracting data from HTML with Node.js: **In this lesson we'll finish extracting product data from the downloaded HTML.

- Using a scraping framework with Node.js: **In this lesson, we'll rework our application for watching prices so that it builds on top of a scraping framework.

- Getting links from HTML with Node.js: **In this lesson, we'll locate and extract links to individual product pages.

- Locating HTML elements with Node.js: **In this lesson we'll locate product data in the downloaded HTML.

- Parsing HTML with Node.js: **In this lesson we'll look for products in the downloaded HTML.

- Using a scraping platform with Node.js: **In this lesson, we'll deploy our application to a scraping platform that automatically runs it daily.

- Saving data with Node.js: **In this lesson, we'll save the data we scraped in the popular formats, such as CSV or JSON.

- Scraping product variants with Node.js: In this lesson, we'll scrape the product detail pages to represent each product variant as a separate item in our dataset. --- We'll need to figure out how to extract variants from the product detail page, and then change how we add items to the data list so we can add multiple items after scraping one product URL.

- Web scraping basics for Python devs: Learn how to use Python to extract information from websites in this practical course, starting from the absolute basics. --- In this course we'll use Python to create an application for watching prices.

- Crawling websites with Python: **In this lesson, we'll follow links to individual product pages.

- Extracting data from a web page with browser DevTools: In this lesson we'll use the browser tools for developers to manually extract product data from an e-commerce website. --- In our pursuit to scrape products from the Sales page, we've been able to locate parent elements containing relevant data.

- Inspecting web pages with browser DevTools: In this lesson we'll use the browser tools for developers to inspect and manipulate the structure of a website. --- A browser is the most complete tool for navigating websites.

- Locating HTML elements on a web page with browser DevTools: In this lesson we'll use the browser tools for developers to manually find products on an e-commerce website. --- Inspecting Wikipedia and tweaking its subtitle is fun, but let's shift gears and focus on building an app to track prices on an e-commerce site.

- Downloading HTML with Python: **In this lesson we'll start building a Python application for watching prices.

- Extracting data from HTML with Python: **In this lesson we'll finish extracting product data from the downloaded HTML.

- Using a scraping framework with Python: **In this lesson, we'll rework our application for watching prices so that it builds on top of a scraping framework.

- Getting links from HTML with Python: **In this lesson, we'll locate and extract links to individual product pages.

- Locating HTML elements with Python: **In this lesson we'll locate product data in the downloaded HTML.

- Parsing HTML with Python: **In this lesson we'll look for products in the downloaded HTML.

- Using a scraping platform with Python: **In this lesson, we'll deploy our application to a scraping platform that automatically runs it daily.

- Saving data with Python: **In this lesson, we'll save the data we scraped in the popular formats, such as CSV or JSON.

- Scraping product variants with Python: In this lesson, we'll scrape the product detail pages to represent each product variant as a separate item in our dataset. --- We'll need to figure out how to extract variants from the product detail page, and then change how we add items to the data list so we can add multiple items after scraping one product URL.

- Tools 🔧: # Tools 🔧 {#tools} Discover a variety of tools that can be used to enhance the scraper development process, or even unlock doors to new scraping possibilities. --- Here at Apify, we've found many tools, some quite popular and well-known and some niche, which can aid any developer in their scraper development process.

- The Apify CLI: # The Apify CLI {#the-apify-cli} Learn about, install, and log into the Apify CLI - your best friend for interacting with the Apify platform via your terminal. --- The Apify CLI helps you create, develop, build and run Apify Actors, and manage the Apify cloud platform from any computer.

- What's EditThisCookie?: # What's EditThisCookie?

- What is Insomnia: # What is Insomnia {#what-is-insomnia} Learn about Insomnia, a valuable tool for testing requests and proxies when building scalable web scrapers. --- Despite its name, the Insomnia desktop application has absolutely nothing to do with having a lack of sleep.

- What is ModHeader?: # What is ModHeader?

- What is Postman?: # What is Postman?

- What's Proxyman?: # What's Proxyman?

- Quick JavaScript Switcher: # Quick JavaScript Switcher **Discover a handy tool for disabling JavaScript on a certain page to determine how it should be scraped.

- What is SwitchyOmega?: # What is SwitchyOmega?

- User-Agent Switcher: # User-Agent Switcher Learn how to switch your User-Agent header to different values in order to monitor how a certain site responds to the changes. --- User-Agent Switcher is a Chrome extension that allows you to quickly change your User-Agent and see how a certain website would behave with different user agents.

- Tutorials 📚: # Tutorials 📚 Learn about various different specific topics related to web-scraping and web-automation with the Apify Academy tutorial lessons! --- In web scraping, there are a whole lot of niche cases that you will run into.

- Web scraping basics for JavaScript devs: # Web scraping basics for JavaScript devs {#welcome} **Learn how to develop web scrapers with this comprehensive and practical course.

- Best practices when writing scrapers: # Best practices when writing scrapers {#best-practices} Understand the standards and best practices that we here at Apify abide by to write readable, scalable, and maintainable code. --- Every developer has their own style, which evolves as they grow and learn.

- Challenge: # Challenge Test your knowledge acquired in the previous sections of this course by building an Amazon scraper using Crawlee's CheerioCrawler! --- Before moving onto the other courses in the academy, we recommend following along with this section, as it combines everything you've learned in the previous lessons into one cohesive project that helps you prove to yourself that you've thoroughly understood the material.

- Initialization & setting up: # Initialization & setting up **When you extract links from a web page, you often end up with a lot of irrelevant URLs.

- Modularity: # Modularity Before you build your first web scraper with Crawlee, it is important to understand the concept of modularity in programming. --- Now that we've gotten our first request going, the first challenge is going to be selecting all of the resulting products on the page.

- Scraping Amazon: # Scraping Amazon **Build your first web scraper with Crawlee.

- Basics of crawling: **Learn how to crawl the web with your scraper.

- Exporting data: # Exporting data {#exporting-data} Learn how to export the data you scraped using Crawlee to CSV or JSON. --- In the previous lessons, you learned that: 1.

- Filtering links: # Filtering links {#filtering-links} **When you extract links from a web page, you often end up with a lot of irrelevant URLs.

- Finding links: Learn what a link looks like in HTML and how to find and extract their URLs when web scraping using both DevTools and Node.js. --- Many kinds of links exist on the internet, and we'll cover all the types in the advanced Academy courses.

- Your first crawl: # Your first crawl {#your-first-crawl} **Learn how to crawl the web using Node.js, Cheerio and an HTTP client.

- Headless browsers: # Headless browsers {#headless-browser} **Learn how to scrape the web with a headless browser using only a few lines of code.

- Professional scraping 👷: # Professional scraping 👷 {#pro-scraping} Learn how to build scrapers quicker and get better and more robust results by using Crawlee, an open-source library for scraping in Node.js. --- While it's definitely an interesting exercise to do all the programming manually, and we hope you enjoyed it, it's neither the most effective, nor the most efficient way of scraping websites.

- Recap of data extraction basics: # Recap of data extraction basics {#quick-recap} Review our e-commerce website scraper and refresh our memory about its code and the programming techniques we used to extract and save the data. --- We finished off the first section of the Web scraping basics for JavaScript devs course by creating a web scraper in Node.js.

- Relative URLs: # Relative URLs {#filtering-links} Learn about absolute and relative URLs used on web pages and how to work with them when parsing HTML with Cheerio in your scraper. --- You might have noticed in the previous lesson that while printing URLs to the DevTools console, they would always show in full length, like this:

text https://warehouse-theme-metal.myshopify.com/products/denon-ah-c720-in-ear-headphonesBut in the Elements tab, when checking the `` attributes, the URLs would look like this:text /products/denon-ah-c720-in-ear-headphonesWhat's up with that? - Scraping data: # Scraping data {#scraping-data} Learn how to add data extraction logic to your crawler, which will allow you to extract data from all the websites you crawled. --- At the very beginning of this course, we learned that the term web scraping usually means a combined process of data extraction and crawling.

- Basics of data extraction: # Basics of data extraction {#basics} Learn about HTML, CSS, and JavaScript, the basic building blocks of a website, and how to use them in web scraping and data extraction. --- Every web scraping project starts with some detective work.

- Starting with browser DevTools: Learn about browser DevTools, a valuable tool in the world of web scraping, and how you can use them to extract data from a website. --- Even though DevTools stands for developer tools, everyone can use them to inspect a website.

- Prepare your computer for programming: # Prepare your computer for programming {#prepare-computer} **Set up your computer to be able to code scrapers with Node.js and JavaScript.

- Extracting data with DevTools: Continue learning how to extract data from a website using browser DevTools, CSS selectors, and JavaScript via the DevTools console. --- In the previous parts of the DevTools tutorial, we were able to extract information about a single product from the Sales collection of the Warehouse store.

- Extracting data with Node.js: **Continue learning how to create a web scraper with Node.js and Cheerio.

- Scraping with Node.js: Learn how to use JavaScript and Node.js to create a web scraper, plus take advantage of the Cheerio and Got-scraping libraries to make your job easier. --- Finally, we have everything ready to start scraping!

- Setting up your project: # Setting up your project {#setting-up} **Create a new project with npm and Node.js.

- Saving results to CSV: # Saving results to CSV {#saving-to-csv} Learn how to save the results of your scraper's collected data to a CSV file that can be opened in Excel, Google Sheets, or any other spreadsheets program. --- In the last lesson, we were able to extract data about all the on-sale products from Warehouse Store.

- Finding elements with DevTools: Learn how to use browser DevTools, CSS selectors, and JavaScript via the DevTools console to extract data from a website. --- With the knowledge of the basics of DevTools we can finally try doing something more practical - extracting data from a website.

- Introduction: # Introduction {#introduction} Start learning about web scraping, web crawling, data extraction, and popular tools to start developing your own scraper. --- Web scraping or crawling?

Legal documents

- Apify Legal: ## Company details (Impressum) Apify Technologies s.r.o. Registered seat: Vodickova 704/36, 110 00 Prague 1, Czech Republic VAT ID: CZ04788290 (EU), GB373153700 (UK) Company ID: 04788290 Czech limited liability company registered in the Commercial Register kept by the Municipal Court of Prague, File No.: C 253224 Represented by managing director Jan Čurn IBAN: CZ0355000000000027434378 SWIFT / BIC: RZBCCZPP ### Contacts General: hello@apify.com Legal team contact: legal@apify.com Privacy team contact: privacy@apify.com Apify Trust Center: https://trust.apify.com/ ### Trademarks "APIFY" is a word trademark registered with USPTO (4517178), EUIPO (011628377), UKIPO (UK00911628377), and DPMA (3020120477984).

- Apify Acceptable Use Policy: # Apify Acceptable Use Policy Apify Technologies s.r.o., with its registered seat at Vodičkova 704/36, Nové Město, 110 00 Prague 1, Czech Republic, Company ID No.: 04788290, registered in the Commercial Register kept by the Municipal Court of Prague, File No.: C 253224 (hereinafter referred to as “we” or “Apify”), is committed to making sure that the Platform and the Website are being used only for legitimate and legal purposes.

- Apify Affiliate Program Terms and Conditions: # Apify Affiliate Program Terms and Conditions Effective date: May 14, 2024 Latest version effective from: July 5, 2025 --- Apify Technologies s.r.o., with its registered seat at Vodičkova 704/36, 110 00 Prague 1, Czech Republic, Company reg.

- Apify Candidate Referral Program: # Apify Candidate Referral Program Last Updated: April 14, 2025 --- Apify Technologies s.r.o., as the announcer (“Apify”), is constantly looking for new employees and prefers to recruit people based on credible references.Therefore, Apify is announcing this public candidate referral program.

- Apify $1M Challenge Terms and Conditions: # Apify $1M Challenge Terms and Conditions Effective date: November 3, 2025 Apify Technologies s.r.o., a company registered in the Czech Republic, with its registered office at Vodičkova 704/36, 110 00 Prague 1, Czech Republic, Company ID No.: 04788290 ("Apify", "we", "us") offers you (also referred to as "participant") the opportunity to enroll in the "Apify $1M Challenge" ("Challenge"), which is subject to the following "Apify 1M Challenge Terms and Conditions" ("Challenge Terms").

- Apify Community Code of Conduct: # Apify Community Code of Conduct Effective Date: August 18, 2025 --- ## Overview and Purpose Apify community is intended to be a place for further collaboration, support, and brainstorming.

- Apify Cookie Policy: # Apify Cookie Policy Apify Technologies s.r.o., with its registered seat at Vodičkova 704/36, 110 00 Prague 1, Czech Republic, Company reg.

- Apify Data Processing Addendum: # Apify Data Processing Addendum Last Updated: January 13, 2025 --- If you wish to execute this DPA, continue here and follow instructions in the PandaDoc form.

- Apify Event Terms and Conditions: # Apify Event Terms and Conditions Effective date: November 3, 2025 These Event Terms and Conditions ("Terms") apply to all Events organized or co-organized by Apify Technologies s.r.o., a company registered in the Czech Republic, with its registered office at Vodičkova 704/36, 110 00 Prague 1, Czech Republic, Company ID No.: 04788290 ("Apify", "we", "us"), whether in-person, hybrid, or online ("Events").

- Apify Open Source Fair Share Program Terms and Conditions: # Apify Open Source Fair Share Program Terms and Conditions You are reading terms and conditions that are no longer effective.

- Apify GDPR Information: # Apify GDPR Information The European Union (“EU”) General Data Protection Regulation (“GDPR”) replaces the 1995 EU Data Protection Directive.

- Apify General Terms and Conditions: # Apify General Terms and Conditions Effective date: May 14, 2024 --- Apify Technologies s.r.o., with its registered seat at Vodičkova 704/36, 110 00 Prague 1, Czech Republic, Company reg.

- Apify General Terms and Conditions October 2022: ## Version History You are reading terms and conditions that are no longer effective.

- Apify Store Publishing Terms and Conditions December 2022: ## Version History You are reading terms and conditions that are no longer effective.

- Apify Privacy Policy: # Apify Privacy Policy Last Updated: February 10, 2025 Welcome to the Apify Privacy Policy!

- Apify Store Publishing Terms and Conditions: # Apify Store Publishing Terms and Conditions Last updated: February 26, 2025 --- Apify Technologies s.r.o., with its registered seat at Vodičkova 704/36, 110 00 Prague 1, Czech Republic, Company reg.

- Apify Whistleblowing Policy: # Apify Whistleblowing Policy [verze v českém jazyce níže] Last updated: April 14, 2025 At Apify, we are committed to upholding the highest standards of integrity, ethics, and accountability.

Platform documentation

- Apify platform: > Apify is a cloud platform that helps you build reliable web scrapers, fast, and automate anything you can do manually in a web browser.

- Actors: **Learn how to develop, run and share serverless cloud programs.

- Actor development: **Read about the technical part of building Apify Actors.

- Actor definition: Learn how to turn your arbitrary code into an Actor simply by adding an Actor definition directory. --- A single isolated Actor consists of source code and various settings.

- actor.json: Learn how to write the main Actor configuration in the

.actor/actor.jsonfile. --- Your main Actor configuration is in the.actor/actor.jsonfile at the root of your Actor's directory. - Dataset schema specification: Learn how to define and present your dataset schema in an user-friendly output UI. --- The dataset schema defines the structure and representation of data produced by an Actor, both in the API and the visual user interface.

- Dataset validation: Specify the dataset schema within the Actors so you can add monitoring and validation at the field level. --- To define a schema for a default dataset of an Actor run, you need to set

fieldsproperty in the dataset schema. - Dockerfile: **Learn about the available Docker images you can use as a base for your Apify Actors.

- Actor input schema: **Learn how to define and validate a schema for your Actor's input with code examples.

- Secret input: **Learn about making some Actor input fields secret and encrypted.

- Actor input schema specification: **Learn how to define and validate a schema for your Actor's input with code examples.

- Key-value store schema specification: Learn how to define and present your key-value store schema to organize records into collections. --- The key‑value store schema organizes keys into logical groups called collections, which can be used to filter and categorize data both in the API and the visual user interface.

- Actor output schema: Learn how to define and present the output of your Actor. --- The Actor output schema builds upon the schemas for the dataset and key-value store.

- Source code: Learn about the Actor's source code placement and its structure. --- The Apify Actor's source code placement is defined by its Dockerfile.

- Automated tests for Actors: **Learn how to automate ongoing testing and make sure your Actors perform over time.

- Builds and runs: Learn about Actor builds and runs, their lifecycle, versioning, and other properties. --- Actor builds and runs are fundamental concepts within the Apify platform.

- Builds: **Learn about Actor build numbers, versioning, and how to use specific Actor version in runs.

- Runs: Learn about Actor runs, how to start them, and how to manage them. --- When you start an Actor, you create a run.

- State persistence: **Learn how to maintain an Actor's state to prevent data loss during unexpected restarts.

- Deployment: Learn how to deploy your Actors to the Apify platform and build them. --- Deploying an Actor involves uploading your source code and building it on the Apify platform.

- Continuous integration for Actors: Learn how to set up automated builds, deploys, and testing for your Actors. --- Automating your Actor development process can save time and reduce errors, especially for projects with multiple Actors or frequent updates.

- Source types: Learn about Apify Actor source types and how to deploy an Actor from GitHub using CLI or Gist. --- This section explains the various sources types available for Apify Actors and how to deploy an Actor from GitHub using CLI or Gist.

- Performance: Learn how to get the maximum value out of your Actors, minimize costs, and maximize results. --- ## Optimization Tips This guide provides tips to help you maximize the performance of your Actors, minimize costs, and achieve optimal results.

- Programming interface: Learn about the programming interface of Apify Actors, important commands and features provided by the Apify SDK, and how to use them in your Actors. --- This chapter will guide you through all the commands you need to build your first Actor.

- Basic commands: Learn how to use basic commands of the Apify SDK for both JavaScript and Python. --- This page covers essential commands for the Apify SDK in JavaScript & Python.

- Container web server: Learn about how to run a web server inside your Actor to enable communication with the outside world through both UI and API. --- Each Actor run is assigned a unique URL (e.g.

- Actor environment variables: Learn how to provide your Actor with context that determines its behavior through a plethora of pre-defined environment variables set by the Apify platform. --- ## How to use environment variables in an Actor You can set up environment variables for your Actor in two ways: - Set up environment variables in

actor.json- Set up environment variables in Apify Console :::info Environment variable precedence Your local.actor/actor.jsonfile overrides variables set in Apify Console. - Metamorph: The metamorph operation transforms an Actor run into the run of another Actor with a new input. --- ## Transform Actor runs Metamorph is a powerful operation that transforms an Actor run into the run of another Actor with a new input.

- Standby mode: Use Actors as an API server for fast response times. --- Traditional Actors are designed to run a single task and then stop.

- Status messages: Learn how to use custom status messages to inform users about an Actor's progress. --- Each Actor run has a status, represented by the

statusfield. - System events in Apify Actors: Learn about system events sent to your Actor and how to benefit from them. --- ## Understand system events Apify's system notifies Actors about various events, such as: - Migration to another server - Abort operations triggered by another Actor - CPU overload These events help you manage your Actor's behavior and resources effectively.

- Quick start: Create your first Actor using the Apify Web IDE or locally in your IDE. --- :::info Before you build Before you start building your own Actor, try out a couple of existing Actors from Apify Store.

- Build with AI: Use pre-built prompts, reference Apify docs via llms.txt, and follow best practices to build Actors efficiently with AI coding assistants. --- You will learn several approaches to building Apify Actors with the help of AI coding assistants.

- Local development: Create your first Actor locally on your machine, deploy it to the Apify platform, and run it in the cloud. --- ## What you'll learn This guide walks you through the full lifecycle of an Actor.

- Web IDE: Create your first Actor using the web IDE in Apify Console. --- ## What you'll learn This guide walks you through the full lifecycle of an Actor.

- Publishing and monetization: **Apify provides a platform for developing, publishing, and monetizing web automation solutions called Actors.

- Monetize your Actor: Learn how you can monetize your web scraping and automation projects by publishing Actors to users in Apify Store. --- Apify Store allows you to monetize your web scraping, automation and AI Agent projects by publishing them as paid Actors.

- Pay per event: Learn how to monetize your Actor with pay-per-event (PPE) pricing, charging users for specific actions like Actor starts, dataset items, or API calls, and understand how to set profitable, transparent event-based pricing. --- The PPE pricing model offers a flexible monetization option for Actors on Apify Store.

- Pay per result: Learn how to monetize your Actor with pay-per-result (PPR) pricing, charging users based on the number of results produced and stored in the dataset, and understand how to set profitable, transparent result-based pricing. --- In this model, you set a price per 1,000 results.